Introduction

Modern-day competition for resources has led countries to invest in extensive research and modern technology to conserve natural resources, which are always scarce. The use of hydroelectric power has been limited by climatic changes that have largely been driven by human activity. Because hydroelectric power has become an unreliable source of energy, countries have invested heavily in other sources of power such as solar and nuclear energy.

Nuclear energy has been in use for a long time. The first nuclear power plant to supply electricity to a power grid began operating in 1954. It was built by the Union of Soviet Socialist Republics (USSR) and generated five megawatts of power. When governments decide to use nuclear energy as a source of power, the safety of employees must be taken into consideration. All factors that compromise human safety should be addressed, and preventive action taken to stop accidents from occurring.

Unintended human error, ignorance or negligence may have devastating effects, including loss of life, and the aftermath may be felt for many years after an accident. Countries invest in nuclear power generation plants mainly because producing this energy contributes very little to global warming. Global warming is the main driver of modern-day climatic change and is associated with more severe natural disasters such as hurricanes, which cause heavy loss of life. The fission process itself does not emit the greenhouse gases carbon dioxide and methane.

Advantages and disadvantages of nuclear energy

Nuclear energy is cheaper to produce in the long run than many other sources of energy. Oil, which many countries rely on, is expensive to extract and non-renewable, and this is pushing countries to diversify into other forms of energy. The main disadvantage of nuclear power, however, is that radiation is produced as a by-product of generation. When living human cells are exposed to this radiation, harmful effects such as birth defects in newborns can result.

A well-known example of the harm caused by nuclear weapons came when the USA dropped atomic bombs on the Japanese cities of Hiroshima and Nagasaki during the Second World War. Nuclear weapons are weapons of mass destruction, which is why their production and spread are subject to international control (Hala et al, 2003).

Three Mile Island nuclear plant accident

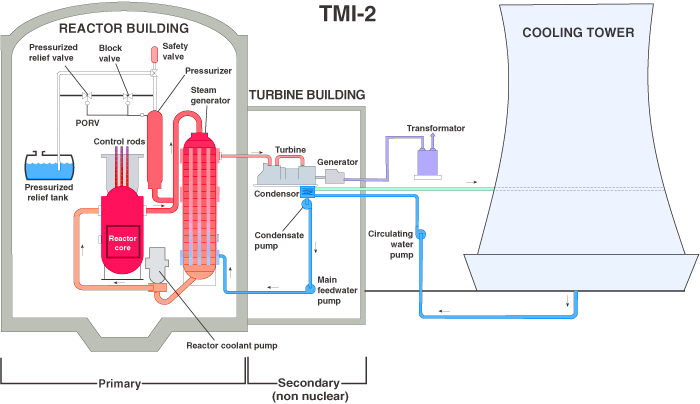

Most serious accidents at nuclear power plants involve a meltdown of the reactor core, which can release radiation that is harmful to human beings. The Three Mile Island nuclear power plant is located in Londonderry Township, Pennsylvania, in the United States. It is a civilian, commercially operated power plant, and its first unit began commercial operation in 1974. The plant has two units, Three Mile Island Unit 1 (TMI-1) and Unit 2 (TMI-2), and each is a separate pressurized water reactor.

The plant was originally owned by General Public Utilities and operated by its subsidiary Metropolitan Edison. TMI-1 later came under the ownership of Exelon Nuclear. Any operator must keep the environment free of radioactive leakage, which can have devastating effects on the human population (Samuel and Walker, 1980). Nuclear radiation is produced during nuclear energy generation and, if released, can cause uncontrolled and irreversible harm to both the environment and living beings. At full capacity the plant can generate 804 megawatts of power, though actual output depends on its current state of maintenance.

Both reactors were designed and supplied by Babcock & Wilcox. TMI-1 was licensed to operate for forty years, with the option of renewing the license once that period expired (Mark and Hertsgaard, 1983). This unit has never experienced a major accident. However, during the last decade a radiation leak inside the containment building was detected by a computerized monitoring system that identifies radiation exposure and reports the exact location of the leak.

The leak was brought under control through the ventilation systems, and no human casualties resulted. TMI-1 was built by United Engineers and Constructors, with Gilbert Associates as the architect-engineer. The two units stand on the same site, but each is a separate reactor with its own systems, so a disaster in one unit need not affect the other. The reactor for the second unit (TMI-2) was also supplied by Babcock & Wilcox, but the unit was designed by a different architect-engineer, Burns and Roe.

TMI-2 was built to perform the same function as TMI-1 but had a greater generating capacity. Its rated output was more than 100 MW above that of TMI-1 (Porter and Elias, 1964).

On March 28, 1979, while TMI-2 was in normal operation, a major accident occurred that forced the reactor to shut down. The partial meltdown of the TMI-2 core was caused by overheating after the core lost cooling water. Under emergency control procedures, when the reactor exceeds its expected temperature, the cooling system should correct the malfunction immediately and prevent further damage to other systems.

The reactor core is the heart of the unit, and all the major processes take place there. The accident began in the plant's non-nuclear secondary system, when the main feedwater pumps stopped. A relief valve on the pressurizer then opened to relieve the rising pressure, but it stuck open instead of closing again. Investigations suggest that maintenance was supposed to be carried out during refueling, in accordance with nuclear reactor regulations. According to Bonnie et al (2004), emergency alarms were sounding in the control room as the accident unfolded, and these eventually enabled the operators to identify its root cause.

According to this author, after the reactor shut down automatically, water from the emergency cooling system began to flow to lower the reactor's temperature. Trouble deepened because the stuck valve allowed radioactively contaminated coolant to escape and overflow into the containment building, while the control-room indicator suggested that the valve had closed. Research further shows that the plant had been serviced and tested shortly before the accident. The maintenance engineers failed to reopen valves on the auxiliary feedwater system afterwards, and this worsened the problem because the response team had trouble identifying the closed valves (Goldstein, 2001).

Human factors

Human factors such as poor coordination and misunderstanding between the designers, the maintenance staff and the operators were evident during the reactor accident at the Three Mile Island nuclear plant. After servicing the plant, the maintenance personnel left valves on the auxiliary feedwater system closed, and the operators did not realize this at first. The operators also misread the state of the stuck-open relief valve, which worsened the loss of coolant in the TMI-2 unit. The US government also failed to ensure that all nuclear plants in its territory were properly maintained by professional nuclear engineers, and this was another human factor that contributed to the accident.

Had the government provided such oversight, the accident might not have occurred. According to Neville (2004), human factors are concerned with the balance between humans and the machines they use in production. The field seeks to improve the performance of machines while protecting the people who operate them from harm. Human factors can be analyzed well by using a set of guidelines that are followed and consistently implemented.

Such guidelines may cover the expected level of organization and the precautions to take when handling machines, always with the aim of increasing their efficiency. It should be noted, however, that individual human judgment should always take precedence over any set of guidelines. This is because people react differently depending on the situation, and it is argued that no two situations are exactly alike (Hampton and Wilborn 2001).

Mark and Hertsgaard (1983) argue that human factors cannot be designed by a single individual, because people differ greatly in their reasoning capacity and in their individual tastes and preferences. It is further argued that the sole designer of a piece of equipment may understand its operation with ease, while other users find it complex and difficult to master. Human factors must therefore be designed and integrated into a system only after extensive research. Analyzing individual differences is complex, and every individual limitation or deficiency must be clearly and precisely considered when determining the human factors.

Human error that led to the accident

The first human error that led to the Three Mile Island accident was that the control-room operators were inexperienced. When the TMI-2 core began to overheat, the operators tried to bring the accident under control. However, they did not recognize that the pressurizer relief valve was stuck open, because the control panel indicated that it had closed. The second human error was the ignorance and incompetence of the maintenance team. An important cause of the accident was that, shortly before it happened, maintenance personnel had left valves on the auxiliary feedwater system closed.

The third human error was that the suppliers and manufacturers of the unit lacked the skill needed to build a safe nuclear power reactor. Another accident, at the Davis-Besse nuclear power plant, was also caused by mechanical problems. Notably, the Davis-Besse reactor was supplied by Babcock & Wilcox, the same company that supplied the TMI-2 reactor.

Jimmy Carter, then President of the United States, visited the plant after the accident. Carter had trained in nuclear engineering while serving in the Navy's nuclear submarine program. Even so, it is argued that he should not have attended the scene in person, because a hydrogen bubble had formed in the reactor pressure vessel. The worst-case fear was that the bubble could explode and release radioactive material into the environment, making the aftermath far worse.

This fear was resolved later, when it was confirmed that the bubble could not explode because there was not enough oxygen in the vessel (Cole and Michael, 2002). Although no immediate casualties occurred, the most vulnerable people were advised to move away from the area. These were pregnant women and pre-school children living within five miles of the plant.

The fourth human error was that the emergency response team was slow to respond to the distress call. The continuous sounding of the alarm in the control room did not produce the expected rapid response. This was mainly because staff assumed that the reactor was undergoing repair and maintenance when the accident happened.

The Best Human Reliability Analysis Tool to be used

Technique for human error rate prediction

For the Three Mile Island nuclear accident, the Technique for Human Error Rate Prediction (THERP) is the most suitable human reliability analysis tool. According to Kirwan (1994), THERP was originally developed in the 1950s to predict the impact and level of human error in the production and use of nuclear weapons. According to this author, the frequency with which people are expected to deviate from established procedures can be estimated quantitatively.

When this technique is used correctly, the human errors likely at this nuclear plant can be identified precisely and control measures put in place in advance. For instance, if it had been anticipated that operators might fail to identify the state of the reactor's valves, an emergency alternative could have been established.
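THERP's arithmetic can be illustrated with a small sketch. In the THERP handbook, each human action in a task is given a nominal human error probability (HEP). The probability that a later action also fails is then adjusted according to how strongly it depends on the earlier one, using five dependence levels (zero, low, moderate, high, complete). The Python sketch below applies those dependence equations to a hypothetical case: a maintenance error followed by two checks that might miss it. It is an illustration, not a validated tool. The function names and every probability are assumptions chosen for the example, not values from the Three Mile Island investigation.

```python
from enum import Enum


class Dependence(Enum):
    """THERP dependence levels between two successive human actions."""
    ZERO = "zero"
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"
    COMPLETE = "complete"


def conditional_hep(nominal_hep: float, level: Dependence) -> float:
    """Probability that an action fails, given that the preceding action failed.

    Applies the THERP dependence equations to the action's nominal HEP.
    """
    if not 0.0 <= nominal_hep <= 1.0:
        raise ValueError("a human error probability must lie in [0, 1]")
    n = nominal_hep
    if level is Dependence.ZERO:
        return n
    if level is Dependence.LOW:
        return (1 + 19 * n) / 20
    if level is Dependence.MODERATE:
        return (1 + 6 * n) / 7
    if level is Dependence.HIGH:
        return (1 + n) / 2
    return 1.0  # complete dependence: the later action is certain to fail too


def unrecovered_error_probability(
    initial_hep: float, checks: list[tuple[float, Dependence]]
) -> float:
    """Probability that an initial error occurs and every later check misses it.

    Each check is (nominal HEP of missing the error, dependence on the previous action).
    """
    p = initial_hep
    for check_hep, level in checks:
        p *= conditional_hep(check_hep, level)
    return p


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    error = 0.003  # maintenance crew leaves a valve in the wrong position
    independent = unrecovered_error_probability(
        error, [(0.1, Dependence.ZERO), (0.1, Dependence.ZERO)]
    )
    dependent = unrecovered_error_probability(
        error, [(0.1, Dependence.ZERO), (0.1, Dependence.HIGH)]
    )
    print(f"independent checks: {independent:.2e}")  # roughly 3e-5
    print(f"dependent checks:   {dependent:.2e}")    # roughly 1.65e-4
```

In this hypothetical example, high dependence between the two checks makes it about 5.5 times more likely that the error goes unnoticed. Treating the checks as independent would hide exactly this effect.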

Accident sequence evaluation program (ASEP)

This human reliability analysis technique was developed in the late 1980s as a simplified version of THERP. Its aim is to quantify human errors and estimate the response times expected of operators. When human errors are identified in advance and their likely consequences determined, the operators can evaluate each human error against its expected effects.

Justification of the use of ASEP

The Three Mile Island nuclear plant would benefit from this tool because it is cheap to implement and easy for new employees to master. With it, measures can be put in place to limit the aftermath of an accident. Response teams can also be equipped with the techniques needed to deal with anticipated human errors.

How to manage the Human Factor

The human factor can be managed through periodic analysis of the human factors currently affecting the organization. New employees should be trained thoroughly so that they learn the organizational protocols. These include the safety precautions and the level of efficiency the organization requires.

Human Factor Integration plan

Because modern machines and equipment are complex, design engineers must devise methods to involve the end-users of these machines. The following elements must be considered when designing a human factor integration plan. They will also help the organization running the Three Mile Island nuclear power plant to prevent future accidents (Henderson, 1998).

Goals of the plan

The plan should precisely identify the objectives of the human integration policies that the organization must strictly follow. This human factor integration plan aims to reduce, or completely prevent, future radiation accidents at the Three Mile Island nuclear power plant. A further objective is to ensure that the organization's employees work to the highest possible safety standards. In addition, employees should adhere to an ethic of personal accountability, whereby each employee is answerable for his or her own actions (DeAngelis and Therese, 2002).

Scope of the plan

Nuclear power plants require a high level of human factor integration, and this plan will focus on the major activities that require it. Following the 1979 accident, all employees and operators of the Three Mile Island nuclear power plant must be involved in the installation process. This will ensure that they fully understand the importance and operation of the system. Employees will also be involved in documenting the system.

Documentation includes end-user support documents, which employees should help to produce. The plan will also cover the major issues involving the designer, the supplier and the contractor of the nuclear reactor. Qualified personnel will verify the raw materials used to construct the reactor, to rule out the use of substandard materials.

Background of the activity

The 1979 accident left the US government's nuclear experts and the American public in great shock, because the mishap had not been anticipated. Nuclear accidents have severe effects on humankind and must be prevented regardless of the cost. The management of the Three Mile Island nuclear power plant therefore has a responsibility to incorporate all human factors into the design and implementation of the installed reactors (TMI-1 and TMI-2).

Safety measures should be applied from the initial stages of implementation through to the final stages. Teamwork should also be encouraged, since it is through teamwork that employees and operators show solidarity in an emergency. Problems are solved better when every individual is committed to common objectives. The US government should also establish strict rules and procedures to regulate the licensing of nuclear maintenance companies (Sanders et al, 2005).

Criteria for determining areas of consideration

Areas of consideration will be determined by the level of safety risk, the general performance of the reactor and the priority attached to the nuclear power system. In nuclear power generation, the safety of operators, including low-level employees, must be the first priority. As a rule of thumb, the US government seeks to ensure that employees in high-risk jobs are protected and insured. In practice, the higher the risk of a job, the higher its remuneration.

Once the risks have been considered, the general performance of the reactors is taken into account. The human factors must ensure that the nuclear reactor operates at its optimal level. Underperformance wastes natural resources, lowers productivity and leads to a failure to meet targets. When the reactor performs at its highest level, its energy output rises. This eases the pressure on hydroelectric power and helps to conserve scarce natural resources (Cravens and Gwyneth, 2007).

Rationale

Countries place great emphasis on their nuclear-generating plants, and global demand for nuclear energy is high. As a result, human factors are often not considered during the architectural design of reactors. Access to the architectural designs is restricted to senior government-appointed operators, who are usually sworn to secrecy. Many countries have also used nuclear technology to produce weapons of mass destruction, endangering the existence of humankind (Milne et al, 1989).

Human factors input

These are the elements put in place to ensure an efficient human integration plan. They include factors such as the roles and responsibilities of the people involved in developing the plan.

Roles and responsibilities

The senior plant supervisor is responsible for integrating human factors into the reactor's safety procedures. The human resource manager will be responsible for motivating employees and designing stress-relief protocols for the plant. In this way, employees will be able to concentrate fully on their duties, which reduces the probability of an accident.

Training requirement

New employees will be trained, and safety procedures will be communicated to them. When all operators are trained and their job descriptions are clearly explained, major accidents will be minimized. When every employee does his or her job as required, the rate of human error falls sharply. For instance, had the maintenance personnel done their work as expected and followed all the laid-down protocols, the TMI-2 accident might have been prevented.

Related groups

The operations and maintenance teams will be considered together because their jobs overlap. The operations department supervises the daily operations of the nuclear plant. The maintenance team tests the reactor and related processes and ensures that they function properly. Both teams will be interviewed at the same time, and their contributions will be taken seriously (Ferguson and Charles, 2007).

The technical basis of the plan

The US government requires every nuclear energy-producing plant to conform to set rules and procedures on the safety and maintenance of nuclear plants. Reactor maintenance must follow the government's guidelines. Following these guidelines helps prevent human error of the kind seen in 1979. In that year, valves left closed after maintenance, together with the operators' failure to recognize a stuck-open relief valve, led to the most serious accident in the history of US commercial nuclear power (Stephanie, 2009).

Technical elements for review

According to Brennan (2002), employees' performance must be reliable. Throughout all energy-generation processes, operators must work efficiently and attentively to prevent any recurrence of an accident. Common sense should be used at all times, and preventive measures observed.

Methods for addressing the technical elements

Technical elements will be analyzed using human error analysis. Employees' performance will be assessed on the basis of the mistakes they commit during normal operation of the nuclear plant. The results feed into improvement programs, in which underperforming employees receive further training and motivation (Allen and Howieson, 1990).
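The performance analysis described above can be sketched in a few lines of Python. The sketch assumes that each employee's record holds two counts: the mistakes logged during normal operations, and the tasks in which a mistake was possible. The observed error rate is their ratio, and employees whose rate exceeds a chosen threshold are flagged for further training. The record format, the threshold and the minimum-sample rule are all hypothetical choices, not part of the plan as stated.

```python
from dataclasses import dataclass


@dataclass
class PerformanceRecord:
    employee_id: str    # hypothetical identifier
    errors: int         # mistakes logged during normal operations
    opportunities: int  # tasks performed in which a mistake was possible

    @property
    def error_rate(self) -> float:
        if self.opportunities <= 0:
            raise ValueError(f"{self.employee_id}: no opportunities recorded")
        return self.errors / self.opportunities


def select_for_retraining(
    records: list[PerformanceRecord], threshold: float, min_opportunities: int = 20
) -> list[str]:
    """Return, in sorted order, the IDs whose observed error rate exceeds the threshold.

    Records with fewer than min_opportunities tasks are skipped, because a rate
    estimated from only a handful of tasks is unreliable.
    """
    flagged = [
        r.employee_id
        for r in records
        # the opportunities test runs first, so error_rate is never computed for empty records
        if r.opportunities >= min_opportunities and r.error_rate > threshold
    ]
    return sorted(flagged)


if __name__ == "__main__":
    sample = [
        PerformanceRecord("op-01", errors=2, opportunities=400),  # rate 0.005
        PerformanceRecord("op-02", errors=9, opportunities=300),  # rate 0.03
        PerformanceRecord("op-03", errors=1, opportunities=5),    # too few tasks to judge
    ]
    print(select_for_retraining(sample, threshold=0.01))  # ['op-02']
```

Skipping sparse records is a deliberate design choice. Without it, one mistake in five tasks would look like a 20% error rate and trigger retraining on very little evidence.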

Intended tools

During the human factor integration process, virtual simulators will be used to measure employees' responses to emergencies. These responses will then be analyzed to improve safety measures.

Technical guides

Directions and signs will be clearly labeled, and confusing messages will be removed. Emergency equipment such as fire extinguishers will be placed at convenient, strategic locations. During training, abbreviations will be spelled out in full for easy understanding. Critical points will also be marked in a large, colored font for easy reference.

Processes and procedures

General

This human integration plan will follow a systematic implementation procedure. Non-technical employees will be required to attend the re-installation of the TMI-2 reactor so that they understand its basic functionality. General maintenance and emergency precautions will be observed throughout the reactor's operation. The human integration plan will also be applied in the training of new employees.

Timelines

During the implementation of the human integration plan, the following activities will be taken into consideration during different stages of reactor development.

Documentation

The new human integration plan will be incorporated by updating the electronic (softcopy) version of the existing documentation. After the update, printed copies will be distributed to all employees for review. The Three Mile Island nuclear plant will also make it standard practice to give a copy of the documentation to every new employee.

Disposition of human factors issues

Existing human factors, and anticipated changes to them, will be reviewed weekly, and appropriate changes will be adopted. A database management system will be used to record past incidents. It will also help in devising ways to prevent future incidents that could have devastating effects like those at the Three Mile Island nuclear plant.
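A minimal sketch of such an incident database, using Python's built-in sqlite3 module. The table layout, column names, unit labels and category labels are assumptions for illustration, since the plan does not specify a schema. The factor_counts query shows one way the weekly review could rank human-factor categories by how often they have recently appeared.

```python
import sqlite3


def open_incident_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the incident database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS incident (
            id INTEGER PRIMARY KEY,
            occurred_on TEXT NOT NULL,   -- ISO-8601 date string (YYYY-MM-DD)
            unit TEXT NOT NULL,          -- reactor unit label
            human_factor TEXT NOT NULL,  -- hypothetical category label
            description TEXT NOT NULL,
            corrective_action TEXT
        )
        """
    )
    return conn


def log_incident(conn, occurred_on, unit, human_factor, description, corrective_action=None):
    """Insert one incident; the connection context manager commits on success."""
    with conn:
        conn.execute(
            "INSERT INTO incident (occurred_on, unit, human_factor, description, corrective_action) "
            "VALUES (?, ?, ?, ?, ?)",
            (occurred_on, unit, human_factor, description, corrective_action),
        )


def factor_counts(conn, since: str) -> list[tuple[str, int]]:
    """Count incidents per category on or after an ISO date, most frequent first.

    ISO dates compare correctly as plain strings, so no date parsing is needed.
    """
    return conn.execute(
        "SELECT human_factor, COUNT(*) AS n FROM incident "
        "WHERE occurred_on >= ? "
        "GROUP BY human_factor ORDER BY n DESC, human_factor",
        (since,),
    ).fetchall()


if __name__ == "__main__":
    db = open_incident_db()
    log_incident(db, "2024-01-02", "Unit A", "procedure not followed", "Valve left in wrong position")
    log_incident(db, "2024-01-05", "Unit A", "procedure not followed", "Checklist step skipped")
    log_incident(db, "2024-01-06", "Unit B", "indicator misread", "Valve state misjudged")
    print(factor_counts(db, "2024-01-01"))
    # [('procedure not followed', 2), ('indicator misread', 1)]
```

Storing dates as ISO strings keeps the sketch dependency-free. A production system would more likely use a server database with access control and audit logging.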

Regulatory contact

After the human factor integration plan has been implemented, a meeting date will be set so that the Three Mile Island management can meet government representatives to review the viability of the entire plan. Following the procedures set out above during implementation is essential to the plan's success. Doing so should greatly reduce the likelihood of future nuclear accidents.

Conclusion

As countries seek to reduce the cost of producing energy and to meet rapidly rising demand for it, it is imperative that nuclear power plants are regulated to avoid accidents. As the above investigation shows, companies must devise ways to reduce the rate of human error, which is a major cause of accidents. Nuclear energy is often described as environmentally friendly, but radiation released during its production can damage human body cells and lead to the growth of cancerous cells.

Reference list

Allen and Howieson (1990) Probabilistic Safety Assessment Study Results. London: Sage.

Bonnie, Anthony J. Baratta, Thomas (2004) The Three Mile Island Nuclear Power Plant Accident and Its Impact. New York: McGraw-Hill.

Brennan (2002) The Chernobyl Nuclear Disaster. London: Chelsea House.

Cole, Michael D. (2002) Three Mile Island Disaster. New Jersey: Enslow Publishers.

Cravens, Gwyneth (2007) Power to Save the World: The Truth about Nuclear Energy. New York: Knopf.

DeAngelis, Therese (2002) Three Mile Island: Great Disasters: Reforms and Ramification. London: Chelsea House.

Ferguson, Charles D. (2007) Nuclear Energy: Balancing Benefits and Risks. New York: McGraw-Hill.

Goldstein (2001) The Nature of the Atom. New York: The Rosen Publishing Group.

Hala, James, Navratil (2003) Radioactivity, Ionizing Radiation and Nuclear Energy. Brno: Konvoj.

Hampton, Wilborn (2001) A Race against Nuclear Disaster at Three Mile Island. Cambridge: Candlewick Press.

Henderson (1998) Nuclear Physics. New York: Facts.

Kirwan, B. (1994) A Guide to Practical Human Reliability Assessment. Florence: Taylor & Francis.

Mark, Hertsgaard (1983) The Men and Money behind Nuclear Energy. New York: Pantheon Books.

Milne, Lorus, Margery (1989) Understanding Radioactivity. North Carolina: Atheneum.

Neville, Stanton (2004) Human Factors Methods: A Practical Guide for Engineering and Design. Uxbridge: Brunel University.

Porter, Elias H. (1964) Manpower Development: The System Training Concept. Chicago: Harper.

Samuel and Walker (1980) Three Mile Island Nuclear Crisis. New York: US Nuclear Regulatory Commission.

Sanders, Ernest J. McCormick (2005) Human Factors in Engineering and Design. Uxbridge: Brunel University Press.

Stephanie (2009) In Mortal Hands: A Cautionary History of the Nuclear Age. Black.