When the Academic Nurse Educator (ANE) builds a course, they should design it with the end in mind. Doing so makes it easier to measure how well students retain knowledge and whether they can meet the desired outcomes. Once a set of measurable learning outcomes has been established, the ANE should develop assessments that align with the course objectives and student learning outcomes. The ANE should think of the learning objectives as a set of skills, knowledge, or abilities that the students will be able to demonstrate at the end of the module. These can be measured with a final summative objective assessment, which gives students a way to show they have mastered the module content by transferring their knowledge to choose the correct answers on the exam.

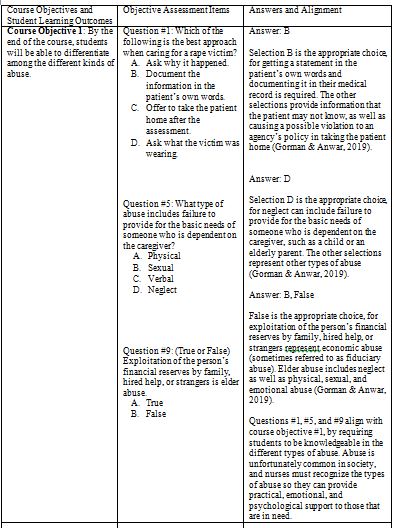

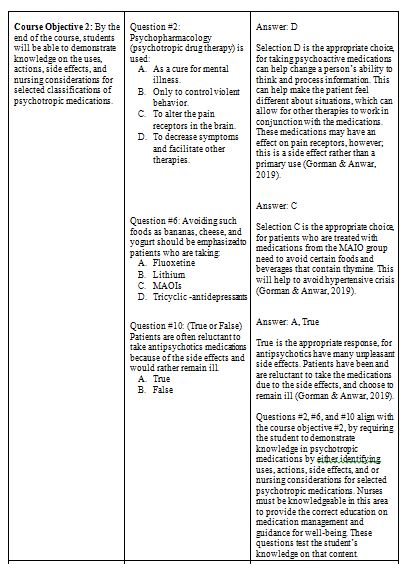

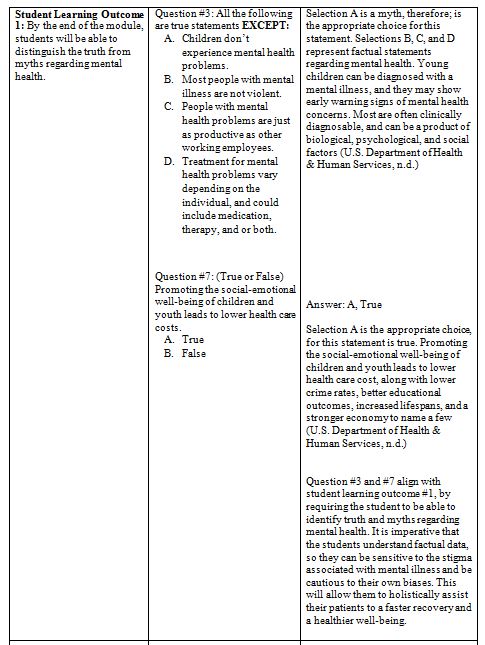

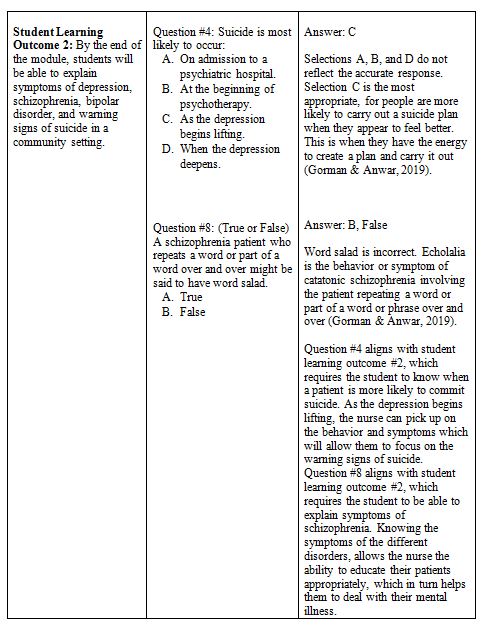

Assessment Activity Alignment

This module contains an online summative objective assessment that is designed to test the students’ knowledge and comprehension of mental health in a community setting. The objective assessment, also referred to as the final exam, is based on the course objectives and student learning outcomes. It is vital that the exam items align with the course objectives and student learning outcomes so that the exam can provide feedback to both instructors and students. This alignment makes it possible to measure the extent to which students are meeting and mastering the module objectives. The learning objectives and student outcomes for the module were presented in many forms throughout the class material, including PowerPoint lectures, class discussions, and homework assignments. In the chart below, one can see the alignment of the exam items to the learning objectives and student learning outcomes.

Assessment Results and Pass or Fail Criteria

The online final exam will be graded promptly, and grades will be posted to the online learning platform, Schoology, within 24 hours of exam submission. Students will be able to view their grade online; however, they will not be able to review the exam items until they schedule an appointment with the instructor for a review. Because the assessment is a final exam and another module will be beginning, there will not be class time to discuss what was missed. During the scheduled review, each student will receive an exam report that includes each exam item, the student’s answer, the correct answer, and the rationale for the correct answer. The appointment will provide one-on-one instructor time with each student and give them the opportunity to discuss difficulties and misunderstandings of the exam and module content. All constructive feedback will be specific, genuine, and supportive to the learner. If the student still needs remedial assistance, they will be directed to the appropriate resources for additional review and asked to schedule a follow-up appointment to ensure the material was understood correctly.

Before taking the final exam, students will know the pass or fail criteria, because the exam uses the institution’s standardized grading scale. This grading scale is used by all educators for tests and final examinations. Each student will need to obtain a 70% or higher to pass the final exam. The grading scale is as follows: 90-100 is an A, 80-89 is a B, 70-79 is a C, 60-69 is a D, and 59 and below is an F. This grading scale is listed on all course rubrics and in the nursing handbooks for students to refer to throughout the module.
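As an illustration only, the grading scale and the 70% passing criterion could be expressed as a short Python sketch. The function names (`letter_grade`, `exam_result`) are hypothetical and are not part of Schoology or any institutional system. The sketch also assumes that all 75 exam items carry equal weight and that the cutoffs are applied to the unrounded percentage; neither assumption is stated in the institutional policy.

```python
PASSING_PERCENT = 70  # passing criterion stated for the final exam


def letter_grade(percent: float) -> str:
    """Map a percentage score to a letter grade using the institutional scale."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    if percent >= 60:
        return "D"
    return "F"


def exam_result(correct: int, total_items: int = 75):
    """Return (percentage rounded to one decimal, letter grade, passed?).

    Assumes every item is worth the same and that the grade is decided
    on the unrounded percentage (an assumption, not stated policy).
    """
    percent = 100 * correct / total_items
    return round(percent, 1), letter_grade(percent), percent >= PASSING_PERCENT


print(exam_result(53))  # 53 of 75 items correct
print(exam_result(52))  # 52 of 75 items correct
```

Under these assumptions, a student would need at least 53 correct answers out of 75 to pass, since 52 correct answers fall just below 70%.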

Assessment Theory, Concept, or Principle

Constructivism is a learning theory holding that learners gather new knowledge and information to build on what they learned in the past (Billings & Halstead, 2020). This learning theory guided my choice of the online summative objective assessment for the module, Mental Health in a Community Setting. The assessment allowed students to connect what they had learned from in-class instructional lectures, group assignments, and role-play activities within the previous module.

The online summative objective assessment had the students complete a 75-question exam within a ninety-minute timeframe. The exam consisted of a series of multiple-choice and true/false questions. These items required the students to connect prior knowledge to the content of the exam, which consisted of new questions that had not been visible during the module. This challenged them to apply their knowledge and use principles of constructivism. It also allowed me, as the instructor, to evaluate their understanding of the content and learning objectives that were previously presented. I believe the constructivist model applies to online learning and testing because it motivates students to think critically, digest, and deploy their acquired nursing knowledge in the form of assessments.

Test Security Procedures

Students can take advantage of online assessments and violate academic integrity standards when the assessments are not administered in the academic setting. This alone can increase the temptation to cheat, and growing access to technology and the threat of hackers add to the risk. It will be necessary for the ANE to prevent, detect, and respond to breaches in the security of this online summative objective assessment. Because prevention can only go so far, educators will need to continue reinforcing the importance of academic integrity, with a focus on the consequences of dishonesty.

The prevention measures I have established for this online exam will help to minimize test security and dishonesty concerns. Since the test is online, there will be only a limited number of hard copies, which will be accessible only to the ANE. They will be kept in a locked filing cabinet within the nursing department, which is always locked when vacant. There will also be three different versions of the exam (A, B, and C), so that not all students receive the same version (Oermann & Gaberson, 2016). Students are also required to use their assigned username and password to access the online learning platform, Schoology, and they will need to enter their student ID number when prompted to begin the exam. These passwords are not to be shared with other students, as students have been directed since the beginning of the program. During the exam, students are not allowed to use any resources, smart watches, or phones, or to have any distractions from their home environments. In addition, one of the major requirements before attempting the exam is to have the lockdown browser in place, which will prevent the student from viewing anything but the exam during the testing period.

The detection strategy for any breaches in test security is the proctored environment. Each student will have signed a consent form agreeing to be recorded and proctored during online exams that are taken off-site. Students will turn on their cameras before the start of the exam, and they will be monitored by at least two instructors for the entire testing period. The instructors will perform security checks to ensure that no resources are visible and to look for other security issues.

If a student were found to have created a breach by cheating, the proctors would end the exam immediately, and the student would receive a call from the ANE to schedule an in-person conference. The incident would be discussed and reviewed with the technical department to ensure that the issue was cheating and not another problem. If the situation were confirmed to be cheating, the student would be disciplined according to the program policy, which is automatic dismissal for violating academic integrity.

Potential Barriers

Many educators and institutions have been forced to use online technology, such as learning management systems (LMS), to support homework activities, lectures, and assessments. Online learning can be a positive tool; however, it can also create barriers for both educators and students. In this module, one barrier to the online summative objective exam was whether students had access to a computer or laptop outside of the academic setting. A second barrier was whether everyone would understand how to open the online exam: the class consists of varied age groups and cultures, and some older students may have had technology deficits.

To overcome the barriers, I ensured all students had access to a functional computer or laptop at home or had reserved time in the school computer lab for testing. Each student signed a voucher stating that they had an operational computer at home or listing the time scheduled in the school computer lab. This list served as assurance that all students had access to a functional computer. To overcome issues retrieving the exam, students were given step-by-step directions in class, along with the student-facing directions on the online learning platform, Schoology. The directions guided the students to the exact folder and through every step needed to access the exam. Students were also informed in class that they could notify the proctors at any time during the exam if technical issues occurred. This assessment design will help to minimize barriers and will aid in the implementation of the online objective assessment.

Analytical Methods

An objective summative assessment of the Mental Health in a Community Setting module requires analysis at both the test level and the individual item level. Statistical software such as IBM SPSS, R, or MS Excel is a valuable tool for monitoring assessment results for the whole class and for each student individually. This section describes the analytical methods used to examine Item Difficulty, Item Discrimination, and Test-Level Reliability.

- Item Difficulty

Item Difficulty describes the perceived difficulty of an item, that is, the level of skill required to answer it correctly. High difficulty corresponds to a situation in which a large number of students could not answer the question correctly, while low difficulty corresponds to the opposite outcome. To calculate this parameter, it is necessary to determine the percentage of students who answered the question correctly. Because the index is the percentage correct, a lower value indicates a more difficult item. For multiple-choice questions, the ideal difficulty (that is, the percentage of students who answered the question correctly) ranges from 70% to 77%, depending on the number of answer options offered (McDonald, 2017; OEA, n.d.). For true/false questions, the ideal difficulty is 85%. Values significantly below these signal that students have not adequately mastered the skills necessary to answer a test question.

- Item Discrimination

Item Discrimination is an analytic metric that characterizes the correspondence between success on a particular item and success on the entire test; put another way, it shows how well an item differentiates between groups of students with different performance levels. This is useful because it helps determine the effectiveness and quality of a test question and how well the question reflects students’ overall knowledge. The Item Discrimination measurement tool is the Discrimination Index (DI), which ranges from -1 to +1; a value of +0.40 or higher indicates a good assessment question and is what the ANE should aim for (McDonald, 2017). The index can be calculated automatically, as the Pearson product-moment correlation between item scores and total test scores, or manually, with lower accuracy. For manual calculation, a fraction of the students (from 25% to 50%) is selected from each end of the score distribution sorted in descending order. The number of correct answers to the item is counted in each sample, and the difference between the two counts is divided by the number of students in each sample.

- Test-Level Reliability

Test-Level Reliability demonstrates the degree of internal consistency, that is, the extent to which different questions fit together. A good assessment should have a high degree of internal consistency so that its results are reliable and its questions relate to one another. A common measure of reliability is Cronbach’s alpha, a statistic that quantifies internal consistency (McDonald, 2017). Specifically, an alpha value above 0.70 demonstrates good internal consistency, and this value (or higher) should be sought when creating a test.
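The three measures described in this section can be sketched in a few lines of Python, as a minimal illustration rather than a substitute for SPSS, R, or Excel. The response matrix is hypothetical (eight students, five items) and is not data from the actual exam; the function names are likewise my own. The discrimination index uses the manual upper/lower-group method with a 25% fraction, and Cronbach’s alpha uses the standard formula based on item variances and the variance of total scores.

```python
from statistics import variance

# Hypothetical scored responses (NOT data from the actual exam):
# one row per student, one column per item, 1 = correct, 0 = incorrect.
responses = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
]


def item_difficulty(responses, item):
    """Percentage of students who answered the item correctly."""
    return 100 * sum(row[item] for row in responses) / len(responses)


def discrimination_index(responses, item, fraction=0.25):
    """Manual method: (upper-group correct - lower-group correct) / group size.

    Students are ranked by total score; ties at a group boundary are
    broken by their original order, which is an arbitrary choice.
    """
    ranked = sorted(responses, key=sum, reverse=True)  # highest total first
    n = max(1, round(fraction * len(ranked)))          # students per group
    upper_correct = sum(row[item] for row in ranked[:n])
    lower_correct = sum(row[item] for row in ranked[-n:])
    return (upper_correct - lower_correct) / n


def cronbach_alpha(responses):
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / total-score variance)."""
    k = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


for item in range(len(responses[0])):
    print(
        f"Item {item + 1}: difficulty {item_difficulty(responses, item):.1f}%, "
        f"DI {discrimination_index(responses, item):+.2f}"
    )
print(f"Cronbach's alpha: {cronbach_alpha(responses):.3f}")
```

With this made-up data, alpha comes out at roughly 0.61, which is below the 0.70 threshold and would prompt a closer look at individual items. A real 75-item exam with a full class would give far more stable estimates than this toy example.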

Potential Low Factors

Despite the desire to improve the objective assessment of the module, one cannot rule out factors that can lead to poor performance and low reliability. An in-depth understanding of these factors is essential to improving and refining the assessment, so they must be taken into account. This section discusses the predictors of poor performance and low test quality, that is, of a test that does not reflect students’ real-world knowledge.

Low Pass Rates

Low Pass Rates correspond to a situation in which most students fail the test. Potential factors that could lead to this outcome include overly complicated questions, low student preparation, and insufficient competence resulting from high absenteeism rates. Organizational predictors should also be considered, including inadequate time limits, high levels of stress caused by the demands and rigor of the assessment, and technical difficulties arising from a lack of Internet access or an inability to find and begin the test in a timely manner.

Low Item Discrimination

Low Item Discrimination characterizes poor item performance, in which students who perform poorly on the test as a whole can score well on individual questions, and vice versa; in other words, the questions do not track the overall proficiency that the assessment is meant to measure. Predictors of Low Item Discrimination may include poor question design, a lack of consistency checks, and ambiguous wording (OEA, n.d.). An error in the design of the question, such as a miskeyed item in which the genuinely correct answer was not marked as the key, may also cause this problem.

Low Test-Level Reliability

Low Test-Level Reliability, or low internal consistency, can lead to inconsistent results, reduced confidence in the reliability of the assessment, and errors in performance results. Potential contributing factors are a lack of prior consistency checking with Cronbach’s alpha, a failure to make adjustments after a low alpha, and items with Low Item Discrimination. Organizational reasons include unequal assessment conditions and a poorly balanced test structure.

Improvement Plan

If a pretest or posttest has shown poor reliability and performance for that assessment, this is a signal to implement improvements. Such improvements can be either short-term or long-term and ensure that the assessment created and improved will accurately and reliably reflect student knowledge. Notably, for any of the criteria below, a common recommendation is to use focus groups to obtain preliminary results from which changes can be made for improvement.

- Item Discrimination

Short-term actions to improve Item Discrimination scores should include carefully reading the test several times and assessing potential barriers that will affect performance, then removing those questions that meet the low-discrimination criterion. In addition, the test should be checked for a variety of question types so that the number of question types is not too high. Long-term measures could include working with experts and methodologists to carefully audit the test and identify weak questions so that further changes can be made.

- Test Reliability

Short-term actions to improve objective assessment reliability include calculating Cronbach’s alpha with a “Scale if item deleted” table to determine which questions need to be deleted to improve internal consistency. In addition, it is necessary to ensure that students take the test on a level playing field. Long-term actions include professional development programs that build the ANE’s test-development skills, as well as regular monitoring.

- Item Difficulty

To improve the consistency of question difficulty in the assessment design, a careful reading should be done to determine whether questions differ significantly in difficulty and to remove those that are redundant or whose difficulty falls outside the intended range. To improve in the long run, continuous monitoring is necessary, along with improving the competencies of the test developer and using technology to develop and automatically examine tests, that is, resorting to data-driven solutions.
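The “Scale if item deleted” check mentioned under Test Reliability can also be sketched in Python. This is an illustrative approximation of what statistical packages report, not their actual implementation; the data and the function names are hypothetical. For each item, alpha is recomputed with that item removed, and items whose removal raises alpha above the full-test value are flagged as candidates for review.

```python
from statistics import variance

# Hypothetical scored responses (NOT data from the actual exam):
# one row per student, one column per item, 1 = correct, 0 = incorrect.
responses = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 0],
    [1, 1, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [1, 0, 0, 0, 0],
]


def cronbach_alpha(responses):
    """Cronbach's alpha from item variances and total-score variance."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("all students have the same total score")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_if_item_deleted(responses):
    """Recompute alpha once per item, each time leaving that item out."""
    k = len(responses[0])
    results = []
    for drop in range(k):
        reduced = [[x for i, x in enumerate(row) if i != drop] for row in responses]
        results.append(cronbach_alpha(reduced))
    return results


overall = cronbach_alpha(responses)
print(f"Alpha with all items: {overall:.3f}")
for item, alpha in enumerate(alpha_if_item_deleted(responses), start=1):
    flag = "  <- review: removal raises alpha" if alpha > overall else ""
    print(f"Alpha without item {item}: {alpha:.3f}{flag}")
```

A flagged item is only a prompt for review. Whether to delete or rewrite it remains a judgment for the ANE, since removing an item also affects how well the exam covers the learning objectives.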

Using Assessment Results to Improve Teaching

The purpose of objective assessment, in addition to formally identifying student and class learning progress, is to identify strategies that can improve teaching, engagement, and learning experiences. By using the analytical methods presented above, the ANE can understand where a group of students stands and how they perceive an objective assessment, and can formulate insights for further development based on the results and feedback. For example, if the assessment showed Low Pass Rates, this could signal a low quality of learning, prompting the ANE to reconsider instructional practices. The advantage of this analytical approach is that it reveals not only overall performance on the assessment but also results on individual questions within the test. It provides insights into which concepts were learned better than others. All of this provides a foundation for improved pedagogical practice and for an environment in which resources and tools are used in the best way to improve the student experience.

References

Billings, D. M., & Halstead, J. A. (2020). Teaching in nursing: A guide for faculty (6th ed.). Elsevier.

Gorman, L. M., & Anwar, R. F. (2019). Neeb’s mental health nursing (5th ed.). F.A. Davis Company.

McDonald, M. (2017). The nurse educator’s guide to assessing learning outcomes (4th ed.). Jones & Bartlett Learning.

OEA. (n.d.). Understanding item analyses. University of Washington. Web.

Oermann, M. H., & Gaberson, K. B. (2016). Evaluation and testing in nursing education (5th ed.). Springer Publishing Company.

U.S. Department of Health & Human Services. (n.d.). Mental health myths and facts. MentalHealth.gov. Web.