Introduction

Organisations make vital decisions based on information about their customers' consumption and preference patterns. Scientific studies likewise depend on collecting data and analysing it to produce information that can be interpreted to draw the required inferences.

Nations make vital decisions concerning requisite policies that address social problems such as poverty. Hence, they have to collect and subsequently analyse a large amount of data.

This situation highlights the need for a mechanism for managing big data. One might raise questions concerning the role that information management systems play in this task.

Using four scholarly articles, this paper defines and discusses management information systems. It also discusses the importance of such systems in the context of the need to handle big data.

Definition and Discussion of Information Management Systems

Data refers to raw facts that relate to a given phenomenon or issue. Information refers to organised facts about a given phenomenon or issue. Such facts are presented in a way that adds value to the original data.

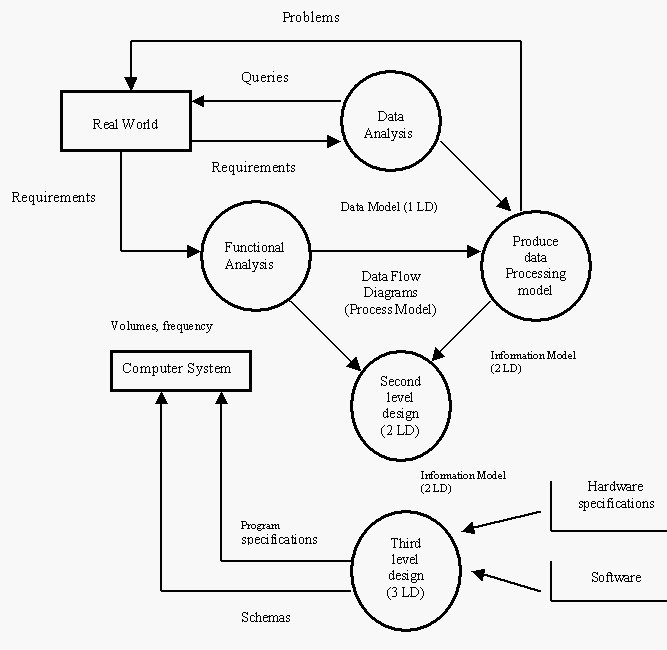

An information management system comprises computers and other associated tools that help to collect and process data to produce information. An example of an information management system is shown in figure 1.

Data is important in making various strategic decisions for an organisation. Its collection, storage, and subsequent analysis require information management tools. Indeed, every organisation seeks strategic plans for growth in terms of size and productivity levels.

Growth increases difficulties in handling customer and supply chain complaints due to the large amount of data that requires analysis and synthesis. Different organisations deploy different types of information management systems to handle big data.

At medium-sized organisations, common information management systems include decision support systems, transaction processing systems, and integrated management information systems.

Figure 1: An example of a management information system

Source: (Turban, 2008, p.300).

Decision support systems facilitate decision making based on the analysis of data and statistical projections. Transaction processing systems provide a means of collecting, storing, and modifying data, and of cancelling transactions.

This type of system is important where big data is used to manage the operational systems that support an organisation's business.

Decision support systems create an opportunity to improve the quality of the decisions that an organisation's managers make, rather than replacing those managers. Through transaction processing systems, an organisation acquires the capacity to execute simultaneous transactions.

Data that is collected by the system can be held in databases. However, such data stores may not have the capacity to handle big data that relates to the entire customer population.

The data can later be deployed in report production, including billing, reports for scheduling manufacturing, wage reports, production and sales summaries, inventory reports, and check registers.
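The collection, modification, cancellation, and reporting functions described above can be illustrated with a minimal sketch. It is a toy in-memory example rather than a description of any real product: the `Transaction` and `TransactionStore` names, the fields, and the sample records are all hypothetical, and a production system would rely on a database with concurrency control.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Transaction:
    """One recorded sale (all fields are illustrative assumptions)."""
    tx_id: int
    customer: str
    product: str
    amount: float
    cancelled: bool = False


class TransactionStore:
    """Toy in-memory stand-in for a transaction processing system."""

    def __init__(self):
        self._transactions = {}
        self._next_id = 1

    def record(self, customer, product, amount):
        """Collect and store a new transaction; return its identifier."""
        tx = Transaction(self._next_id, customer, product, amount)
        self._transactions[tx.tx_id] = tx
        self._next_id += 1
        return tx.tx_id

    def modify(self, tx_id, amount):
        """Change the amount of an existing transaction."""
        self._transactions[tx_id].amount = amount

    def cancel(self, tx_id):
        """Mark a transaction as cancelled without deleting its record."""
        self._transactions[tx_id].cancelled = True

    def sales_summary(self):
        """Turn stored data into information: total sales per product."""
        totals = defaultdict(float)
        for tx in self._transactions.values():
            if not tx.cancelled:
                totals[tx.product] += tx.amount
        return dict(totals)


store = TransactionStore()
first = store.record("alice", "widget", 20.0)
second = store.record("bob", "widget", 30.0)
third = store.record("carol", "gadget", 15.0)
store.modify(first, 25.0)   # correct the first sale
store.cancel(third)         # the gadget order is cancelled
summary = store.sales_summary()
print(summary)
```

The summary step is where raw transaction data becomes information in the sense defined earlier: individual records are organised into totals that a manager can act on.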

Both decision support systems and transaction processing systems share common challenges that make them unsuitable for meeting the needs of organisations that deal with big data.

Their security constitutes a big issue. For transaction processing systems, the validity of the transactions depends heavily on the accuracy of the information that is stored in the databases. The decision support system is even slower in helping to arrive at concrete decisions, despite its limited data processing capacity.

Decision support systems interact with human decision makers. This dependence makes them poorly suited to an organisation that generates several terabytes of data within a short period, such as Argonne National Laboratory (Wright, 2014, p.13).

An example of an information system that manages big data successfully at an organisational level is the integrated information management system. One such system is the Enterprise Resource Planning (ERP) system.

However, more sophisticated systems are used for managing big data in scientific research institutions and internet-based organisations such as Amazon and Google.

The Roles of Information Management Systems in Handling Big Data

Information management systems are important to all organisations. Speiss, T’Joens, Dragnea, Spencer, and Philippart (2014) assert that some modern organisations’ information management systems depend on “traditional database, data warehouse, and business intelligence tool sets” (p. 4).

Such systems are configured to serve only one organisation, using data resources that are accessible only to that organisation. This observation suggests that such tools do not possess attributes such as scalability and cost effectiveness, which are necessary when analysing large volumes of customer-related data.

Yet similar data from other organisations may be important in managing customer experiences better. With such tools, this data, together with other facts from the organisation in question, may be left unanalysed even though analysing it could lead to more effective decisions on how to serve customers.

To mitigate the above challenges, alternative information systems that are capable of effectively analysing and interrelating massive scales of data from different organisations have become important. Such massive data is referred to as ‘big data’ (Speiss et al., 2014).

The systems for analysing big data are different, depending on the needs of a given organisation, group of organisations, or even a given nation.

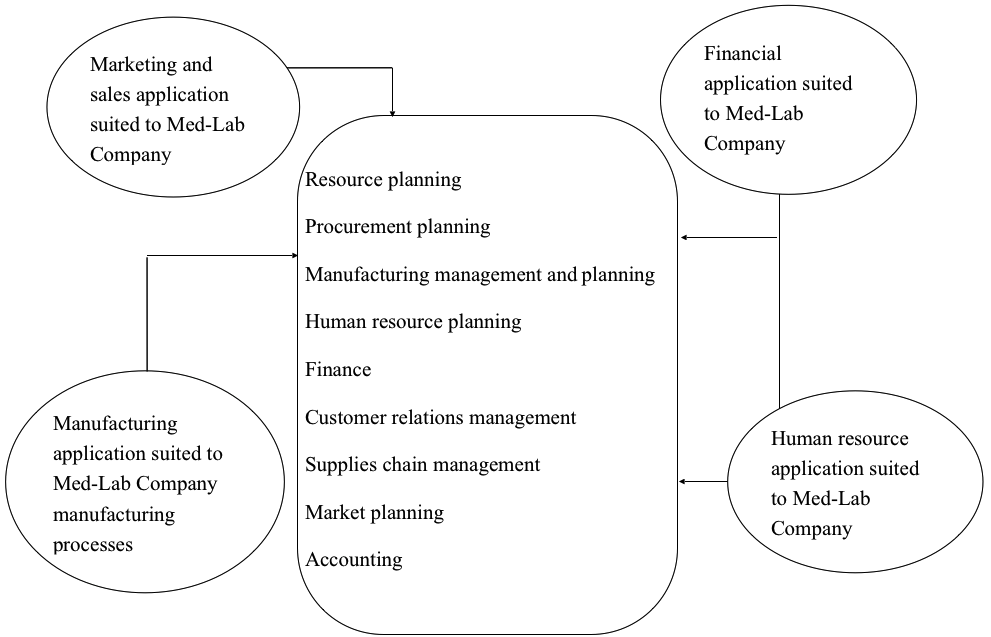

Integrated information management systems are important in managing large amounts of information. Once they are customised, they facilitate the generation of information that is critical for making decisions in different departments within an organisation.

For example, an organisation that deals with the manufacturing of products for shipping to customers in different geographical regions may customise integrated information management systems as shown in figure 2.

Figure 2: Example of a customisation approach for an information management system

Xiong and Geng (2014) assert that information management systems can be deployed in analysing big data to yield information that is necessary for making important policy decisions in a given state. For instance, the pro-poor policies that were implemented in China led to a reduction of poverty levels in 2010.

These policies were based on the analysis of big data. Currently, income distribution differences between poor and rich people are rising in China and Cambodia. To reach this conclusion, the authors analysed big data using the Gini coefficient and the Theil index (Xiong & Geng, 2014).
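For readers unfamiliar with these two inequality measures, the sketch below computes both from a list of incomes using their standard textbook definitions. It is not taken from Xiong and Geng's study: the function names and the sample incomes are hypothetical, and the Theil calculation shown is the Theil T variant, which assumes strictly positive incomes.

```python
import math


def gini(incomes):
    """Gini coefficient: mean absolute difference over twice the mean.

    Returns 0 for perfect equality and approaches 1 as income
    concentrates in a single person.
    """
    n = len(incomes)
    mean = sum(incomes) / n
    total_diff = sum(abs(a - b) for a in incomes for b in incomes)
    return total_diff / (2 * n * n * mean)


def theil_t(incomes):
    """Theil T index: average of (x / mean) * ln(x / mean).

    Returns 0 for perfect equality; requires every income to be > 0.
    """
    n = len(incomes)
    mean = sum(incomes) / n
    return sum((x / mean) * math.log(x / mean) for x in incomes) / n


# Hypothetical incomes, for illustration only.
equal_incomes = [10, 10, 10, 10]
unequal_incomes = [1, 3]

print(gini(equal_incomes), theil_t(equal_incomes))
print(gini(unequal_incomes), theil_t(unequal_incomes))
```

The pairwise loop in `gini` grows quadratically with the number of incomes, so at genuinely large scale a sorted-rank formulation would be the more practical choice.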

Although big data is important in making vital decisions upon its analysis, it brings with it an immense responsibility.

In an interview, Alex “Sandy” Pentland of the MIT Media Lab asserts that organisations do not own data and that “without rules that define who does, consumers will revolt, regulators will swoop down, and the Internet of Things will not reach its full potential” (Smith, 2014, p.101).

This claim means that information should be made available to all people instead of being kept within an organisation’s premises, where other organisations and individuals cannot share it or use it, once it has been analysed, to make important decisions that influence their lives and businesses.

From this assertion, an emerging question is whether people should be given the freedom to determine whether their data should be collected and stored in super memory computers so that all people who are interested in it can access it and deploy their big data information systems to yield their required information.

Can people permit others to spy on them under the guise of collecting data?

Pentland responds to the above question by claiming that transparency helps in creating trust, which allows people to share their information freely. He notes that people are currently not notified that other people are spying on them to collect big data.

This practice violates their right to control their data, a right comparable to the control that they have over their bodies (Smith, 2014). Transparency in data collection is important in ensuring that the information derived from the data is helpful to an organisation.

Pentland insists that data that is collected and analysed through big data information management systems should complete the whole picture about an individual.

The data is important when it is managed from a central place so that information from it can permit people to personalise their lives in terms of medicines, access to financial services, and insurance among other issues that are important for an individual’s living.

Lack of transparency in data collection and storage only introduces challenges to its security. Indeed, many information management systems suffer from unauthorised access. Lack of trust in how an organisation handles information compels people to hack into its systems.

Pentland notes that such intrusions negatively affect all critical systems since they pave the way for disasters, including those that lead to the death of innocent people (Smith, 2014).

Considering the merits of maintaining an open information management system, questions arise on how transparency can be achieved. Pentland provides an example of a way forward.

The Open PDS software, which was developed by MIT experts, permits people to access and view data held in companies’ databases and ensures its safe sharing (Smith, 2014, p.103).

This provides reliability and dependability of the data held by the companies so that people are not tempted to access it in insecure ways that lead to crimes such as espionage. In fact, some of the important qualities of a big data information management system are accuracy, flexibility, reliability, verifiability, and dependability.

The need to handle and manage big data safely is important in all walks of life. Reliability in modern scientific research greatly depends on the capacity to present research findings reflecting a large sample size. Indeed, findings are more accurate when the entire population is studied.

In the past, studying an entire population was almost impossible because of the limited capability to collect and analyse big data. Modern technological approaches permit such an endeavour.

In a world of ever-changing operational dynamics, more accurate forecasting of consumer and general industry trends is important in developing policies that increase an organisation’s competitive advantage. Therefore, generation of big data is almost inevitable.

The case of the SLAC laboratory demonstrates the inevitability of generating big data in modern scientific research.

With its launch anticipated in 2020, the “Large Synoptic Survey Telescope (LSST) will feature a 3.2-gigapixel camera capturing ultra-high-resolution images of the sky every 15 seconds, every night, for at least 10 years” (Wright, 2014, p.13). During this period, big data will be generated. However, it requires real-time analysis.

Therefore, information management systems for big data are inevitable since this data exceeds the human ability to analyse and interpret. Nevertheless, the existing information management systems still do not have the ability to store all data that can be collected in scientific research.

For instance, Wright (2014) asserts that although more than 40 billion astronomical objects can be potentially viewed using an ultra-high resolution camera, only data that relates to specific objects of study can be stored. Thus, the data that is available is more than what several parallel super processing and storage computers can handle.
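A back-of-envelope calculation gives a rough sense of the raw volume implied by the camera figures quoted above. Only the 3.2-gigapixel resolution and the 15-second interval come from Wright (2014); the 2 bytes per pixel and the 10 hours of observing per night are assumptions made purely for illustration, so the result should be read as an order-of-magnitude estimate.

```python
# Figures quoted from Wright (2014).
PIXELS_PER_IMAGE = 3_200_000_000   # 3.2 gigapixels
SECONDS_PER_IMAGE = 15

# Assumptions for illustration only (not from the source).
ASSUMED_BYTES_PER_PIXEL = 2        # e.g. 16-bit raw pixel values
ASSUMED_HOURS_PER_NIGHT = 10       # observing time per night

bytes_per_image = PIXELS_PER_IMAGE * ASSUMED_BYTES_PER_PIXEL
images_per_night = ASSUMED_HOURS_PER_NIGHT * 3600 // SECONDS_PER_IMAGE
bytes_per_night = bytes_per_image * images_per_night

# Decimal units: 1 TB = 10**12 bytes.
tb_per_night = bytes_per_night / 10**12

print(f"{images_per_night} images per night")
print(f"about {tb_per_night:.2f} TB of raw pixels per night")
```

Under these assumptions, the camera alone would produce on the order of ten or more terabytes of raw pixel data every night, which illustrates why selective storage becomes necessary.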

Although the amount of data that can be generated during research may be above the capacity of the current information management systems to handle, the systems continue to develop to meet the increasing capability to collect data.

For example, Wright (2014) reports that scientists are now exploring and benchmarking against private-sector information management approaches such as cloud computing and quantum computing. The situation at SLAC is replicated at Argonne National Laboratory.

Argonne gathers more than 11 gigabytes of data every minute (Wright, 2014). Its head researcher, Jacobsen, claims that his organisation has been struggling with the problem of sharing data amongst its research staff (Wright, 2014).

A traditional approach has been to bring hard drives to the workplace to collect the data, which can then be analysed at home. However, improvements in data collection technology mean that an increasing amount of data can be collected per minute.

Consequently, to foster better data sharing, Argonne has now resorted to information management systems that use the concept of cloud computing (Wright, 2014).
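The arithmetic behind Argonne's data-sharing problem can be sketched as follows. The rate of 11 gigabytes per minute is the figure cited from Wright (2014) and is a lower bound, since the source says "more than"; the 4-terabyte drive capacity is a hypothetical value chosen only to show why carrying hard drives does not scale.

```python
import math

# Figure cited from Wright (2014); the source says "more than" 11 GB.
GB_PER_MINUTE = 11
MINUTES_PER_DAY = 60 * 24

# Assumption for illustration only: capacity of one portable drive.
ASSUMED_DRIVE_TB = 4

gb_per_day = GB_PER_MINUTE * MINUTES_PER_DAY
tb_per_day = gb_per_day / 1000     # decimal units: 1 TB = 1000 GB
drives_per_day = math.ceil(tb_per_day / ASSUMED_DRIVE_TB)

print(f"at least {gb_per_day} GB ({tb_per_day:.2f} TB) per day")
print(f"at least {drives_per_day} drives of {ASSUMED_DRIVE_TB} TB per day")
```

If collection ran continuously at that rate, the laboratory would fill several such drives every day, which is consistent with the move towards cloud-based sharing described above.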

Although quantum computing may be considered the way to go, it may not meet future expectations.

Wright (2014) supports this line of thought by claiming, “for more traditional computing tasks such as combinatorial optimisation, airline scheduling, or adiabatic algorithms, it is not at all clear that quantum computers will offer any meaningful performance gains” (p.15).

Although the problem may have begun in the domain of scientific research, organisations that operate in social media and other business lines that require internet connectivity also have to cope with big data challenges.

For example, Google, eBay, and Amazon must gather and process huge amounts of data on a daily basis. Consequently, these companies cannot refrain from continuous investment in research for better big data integrated information management systems.

Conclusion

Organisations that operate in the global business environment generate a huge amount of data that relates to their customers. Scientific researchers now use improved data collection tools such as cameras that have immense pixel capabilities. The generated data exceeds the capacity of human decision makers to analyse and interpret it.

Computers that rely on a set of CPUs to optimise processing capabilities are also becoming less important to scientific research communities that are in need of processing interrelated data from super data storage and processing computers.

Apart from scientific researchers, organisations that operate in the internet sector such as Google, Yahoo, and Amazon are also seeking better ways of increasing data collection, storage, and processing to yield information.

While cloud computing and quantum computing are potential solutions, they have limits in terms of their application. Therefore, investment in research for better big data integrated information management systems is inevitable not only for these organisations, but also others that deal with big data as a source of information.

Reference List

Smith, D. (2014). With Big Data Comes Big Responsibility: An Interview with MIT Media Lab’s Alex “Sandy” Pentland. Harvard Business Review, 1(1), 101-104.

Speiss, J., T’Joens, Y., Dragnea, R., Spencer, P., & Philippart, L. (2014). Using Big Data to Improve Customer Experience and Business Performance. Bell Labs Technical Journal, 18(4), 3-17.

Turban, N. (2008). Information Technology for Management, Transforming Organisations in the Digital Economy. Massachusetts, MA: John Wiley & Sons, Inc.

Wright, A. (2014). Big Data Meets Big Science. Communications of the ACM, 57(7), 13-15.

Xiong, B., & Geng, Y. (2014). Practices and Experiences of GMS Countries Based on Big Data Analysis. Applied Mechanics and Materials, 687(691), 4870-4873.