Abstract

Evidence shows that military systems are often procured with hidden defects that reveal themselves only during system use. This calls into question the reliability of the procurement process in determining whether military systems fulfill their objectives, as well as the veracity of their technical specifications and performance claims. The proposed framework is designed to address scenarios in which military equipment is procured with inherent defects that go undetected during the acquisition cycle, leading to mishaps, expensive mistakes, and operational inefficiencies. The framework takes a preventive approach aimed at earlier detection of errors and earlier corrective action. Such action is possible because of the decision points in the system acquisition process, including the phases of requirement analysis, systems architecture, system/subsystem design, systems integration and interfaces, verification and validation, and life cycle support, among others. An analysis of case studies of system failures, framed by system acquisition concepts, generates questions that must be answered to avoid untested progression to the next phase, which is seen as an appropriate approach to preventing system failures. A mixed research method that draws on both the qualitative and quantitative paradigms will be used. The qualitative component will analyze existing literature, while quantitative data will be collected using questionnaires and analyzed in SPSS to answer the research questions.

How Topic Falls Into the Scope of the Problems

This study falls within the scope of the topic by focusing on how notable system engineering failures among different defense products can be prevented at different stages of procurement. Such failures lead to serious mishaps and associated risks. Examples include the failure of the Patriot Missile Defense System (1991), the Aegis Combat System and Iran Air Flight 655 (1988), the Delta 180 powered space interceptor, the X-33 reusable launch vehicle, and the Hubble Space Telescope, among others. According to Wasson (2015), addressing the problem of system engineering failures is critical to ensuring an acceptable mishap risk that lies within the constraints of operational efficiency in terms of system performance and effectiveness.

Background and Rationale

Although the varied causes of system engineering failures have been addressed from different perspectives, the proposed solution, in accordance with Meier (2008), is to develop a framework that addresses failures at each of the acquisition stages, so that a program does not progress to the next phase until the questions that arise in the current phase have been appropriately answered. Such a framework is expected to support program efficacy, cost effectiveness, and, according to Piaszczyk (2011), the fielding of the system within the required time frame. A case study approach will provide practical guidance on identifying the right questions to ask at each stage of the product acquisition life cycle.

Focus of the Study

The proposed framework considers the case of a contractor vs. the government. It will combine different case studies of the system engineering acquisition process to analyse each acquisition phase, the resulting solutions to problems that could be associated with each stage, and guidelines on the key areas to address in order to prevent system failure (Board & National Research Council, 2008). The framework will be constructed using the answers provided by the stakeholders in the acquisition of defence products. The uniqueness of the proposed approach, according to Bahill and Chapman (1994), lies in the use of case studies as a way of addressing failures that occur in the lifecycle phases.

Motivation of the Study

Engineering system failures, especially in the department of defense, where systems are supposed to perform according to their requirements and technical specifications, are costly. Once a failure or mishap has happened, it is usually difficult to trace and pinpoint the exact cause of the problem. Besides, corrective actions on other systems that might have similar engineering problems are sometimes elusive. To address the problem, there is a need for a decision support framework that can be used early in the procurement process to identify such errors and take corrective action. The motivation is to provide a solution that can be used as an industry standard framework to solve the problem once and for all.

Rationale and Justification of the Study

Military engineering system failures are a serious problem that leads to unexpected mishaps and risks that need to be addressed. The study will focus on identifying the exact sources of problems and taking corrective action in the procurement lifecycle to prevent such failures from happening.

Key Problem

The key problem is that military engineering systems often fail without a clear understanding of how and where the failure can be traced.

Literature Review

Analysis of Framework Elements

Requirement Analysis

Wasson (2015) shows that the ability to create a product with accurate technical specifications is a system engineering process requirement that can be fulfilled by engaging both the contractor and the government to identify problems and take corrective measures at an earlier stage. The goal is to generate solutions that are consistent with the necessary product requirements, so that the acquisition process is effective and avoids conflicting issues as well as defects and system failures. To achieve this, Friedman and Sage (2004) argue that analyzing government requirements through a communication paradigm that involves department of defense representatives in each phase of the process lifecycle provides specific answers to the question. Wasson (2015) argues that the requirements are analysed to verify the veracity and accuracy of the decision phases as a requisite step for a smooth process flow before the next step commences. Wasson (2015) used a typical case study approach to show how requirements analysis can establish the technical specifications of a good system by clarifying how the requirements are collected from the customer.

Information Sharing

Wasson (2015) proposed an approach in which, during the requirements analysis function, the government and the manufacturer agree on the appropriate elements to be integrated into the system being acquired (Li, Conradi, & Kampenes, 2006). Wasson (2015) summarises a case in which the government and the manufacturer should agree with each other on the system to be acquired. However, the results of a typical case between two contractors and the government show that the contractors engaged the supplier of the stellar inertial system. In both cases, the system was poorly implemented due to problems that arose at the procurement stages (Mitchell, 2007). It is important to note that the customer’s reputation was used to award them the contract.

Systems Architecture

Friedman and Sage (2004) presented a case in which the system baseline architecture was a critical component in producing a system consistent with user technical requirements, satisfactory operational efficiency, and the avoidance of critical system failures. They note that by factoring each technical dimension into the framework at an early stage, the engineering process could be tailored to meet the operational requirements of the system. Schwartz (2010) investigated a case in which system failures attributable to the system architecture could be revealed, as a way of preventing unanticipated problems. Friedman and Sage (2004) and Schwartz (2010) argue that a typical case demonstrating poor knowledge of human/machine interactions among machine technicians, as well as limits in their machine handling capabilities for a combat aircraft operating under strenuous conditions, could enable the system procurement process to identify critical points in the decision framework. The task of controlling the aircraft is in the hands of the pilot, who supervises the automated functions to ensure the system performs effectively (Meier, 2008).

System/Subsystem Design

One of the sources of system failure identified in department of defense projects is system and subsystem design problems. The proposed framework includes concept definition, which requires that the system design be consistent, systematic, and in accordance with the system operational requirements (Bahill & Chapman, 1994). Processes should be defined by functional decomposition and design traceability logic. Bakshi and Singh (2002) argue that the aim is for appropriate framework elements to ensure system robustness.

Bakshi and Singh (2002) conducted an assessment of system/subsystem design related problems that revealed a failure on the government side to communicate appropriately with the contractor. The resulting problems were related to cost, risk, speed, reliability, and performance specifications.

Systems Integration and Interfaces

An investigation by Bakshi and Singh (2002) shows that integration is best used to assure complete support for system functionality in each case of the system development lifecycle. A case study by Li et al. (2006) of a contract between the government and the contractor shows that rules of engagement, such as the assurance that the system components can work to fulfill the intended functionality, are crucial. The case study revealed component failure: because the testing phase did not reveal inherent weaknesses, there were subsequent system failures.

Verification and Validation

Validation and verification have been proposed and conceptualized as the basis for establishing the successful performance of each component in accordance with the system technical requirements and specifications (Morse, Babcock, & Murthy, 2014). This is based on validation and verification measures or metrics that show the degree of reliability, safety, and liability risk. The framework will be used to verify high volume production rates by ensuring a low probability of failure among manufactured components.

Life Cycle Support

A research study by Rezaei, Chiew, Lee, and Aliee (2014) established that life cycle support is an appropriate framework concept that entails testing a product from the perspective of the entire lifecycle and not from the prototype viewpoint. The study showed that failing to factor the key performance parameters into the system design process leads to poor producibility and a lack of well understood and properly written user manuals. However, Pohl (2010) notes that the concept requires a balanced blend of different methods and processes to avoid conflicting results. In addition, Rezaei et al. (2014) suggested that program personnel should be trained to work within the product development lifecycle to ensure complete compliance with the product performance parameters.

Risk Management

According to Kruchten, Nord, and Ozkaya (2012), the concept requirements include risk prioritization in the product acquisition cycle. Kruchten et al. (2012) argue that this should be done in the context of cost, technical, and lifecycle schedule considerations. The case study shows that most programmes fail to factor in formal risk management elements, and risks are often handled by inexperienced managers without a risk matrix or solutions (Board & National Research Council, 2008). Kruchten et al. (2012) proposed an approach in which both the government and the contractor analyse the risk management process to avoid failures. In this case, the responsibility of the government and the contractor is to ensure that risk management is factored into the acquisition lifecycle.

System and Program Management

Sauser, Ramirez-Marquez, Henry, and DiMarzio (2008) proposed a model consisting of a plan that enables the department of defense and the contractor to work together effectively to achieve the overall objective of technical control and project planning. The rationale was to ensure that each component and project was designed with unique technical specifications that were orders of magnitude different from each other. The results showed that the entire process could be managed if configuration control, scope, reviews, constraints, measurements, validations, verifications, and project schedules were handled appropriately (Sauser et al., 2008). Effective use of case study outcomes on the subject will provide the key decision questions to ask to prevent failures from occurring in the acquisition process. A typical approach is exemplified by a case in which a System Engineering Management Plan (SEMP) report by an engineer captured the customer requirements presented as a request for proposal document.

Sauser et al. (2008) studied a framework consisting of a working environment and factors related to system engineering processes that adversely affected contributions by the engineers to the System Engineering Management Plan (SEMP). By relying on a related case study involving the government and the contractor, the study revealed serious problems such as abrupt changes to the product requirements that caused drastic design and budget changes. Sauser et al. (2008), along with Pohl (2010), suggested that the underlying SEMP concept could be used to design the questions to ask at the system and program management phase. This could “address the question on what efforts the contractor has initiated to plan and control a fully integrated engineering effort” (Pohl, 2010, p. 10). The following questions will need to be asked to prevent failure.

The goal was to establish whether the SEMP guidelines could be followed at each point of the acquisition process. In practice, Pohl (2010) noted that consistency checks were essential for determining the milestones in terms of resource allocation, project costs, product assurance model, work flow control process, and self-assessments.

Deployment and Post-Deployment

In theory, Pohl (2010) argued that this concept could define the operational effectiveness of the system after tests have been conducted and the results analysed using test bed data.

Product Design and Development

A detailed study by Stouffer, Falco, and Scarfone (2011) of a decision framework used to prevent system engineering failures in the defense industry showed that it treated product design and development elements as critical factors in the process. In theory, product design and development is a system engineering process that requires effective loop linking with design outcomes that are effectively implemented. Francis and Bekera (2014) identified that the level of complexity of components should be integral to system deployment. This is possible by identifying system design specifications in accordance with the specifications agreed upon between the contractor and the government. Francis and Bekera (2014) noted that this phase was characterised by resource requirements and different levels of difficulty in product development and testing. In practice, the number and knowledge requirements as well as the design complexity, quantity, and quality required can be used to estimate the sources of error. Besides, Francis and Bekera (2014) argue that testing for product failure modes is a key element in decision making to reduce the probability of system failures. The study concluded that by appraising the potential failure of the product through effective analysis, the key areas emerge using the right first design approach.

A typical case study involves the contractor and the government. In this case, the functions identified within the product were not appropriately analysed and tested, nor was the severity of failure defined in the test report document. Besides, the degree and effects of each failure were not ranked, and the levels of severity were not determined. According to Katina, Despotou, Calida, Kholodkov, and Keating (2014), the test cases can be done in the context of risk factors without clearly defining the risks. Risk is identified and prioritised along with the failure modes and failure functions based on a formal review. To avoid errors before moving to the next phase, it is imperative to ask the following questions.

This could answer the question on whether product function analysis was done to determine the type of functions that failed to perform what they were intended to perform. The method used for analysis also matters to a great extent because it could provide the design changes that need to be introduced for corrective design and implementation. It will also provide the scores for occurrence, severity, and direction to follow based on the risk factor to make recommendations that will be appropriate to avoid failure.
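The scoring of failure modes described above can be illustrated with a minimal sketch. It assumes a conventional failure mode and effects analysis style of ranking, in which each failure mode receives a severity score and an occurrence score on a 1 to 10 scale and the product of the two serves as a risk score. The scale, the class and function names, and the example failure modes are hypothetical and are not taken from the case study.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    function: str    # product function that failed to perform as intended
    severity: int    # assumed scale: 1 (negligible) to 10 (catastrophic)
    occurrence: int  # assumed scale: 1 (rare) to 10 (frequent)

    @property
    def risk_score(self) -> int:
        # Assumed scoring rule: severity multiplied by occurrence.
        return self.severity * self.occurrence


def rank_failure_modes(modes):
    """Return failure modes ordered from highest to lowest risk score."""
    return sorted(modes, key=lambda m: m.risk_score, reverse=True)


# Hypothetical failure modes for illustration only.
modes = [
    FailureMode("power supply", severity=7, occurrence=2),
    FailureMode("target tracking", severity=9, occurrence=3),
    FailureMode("operator display", severity=4, occurrence=6),
]
ranked = rank_failure_modes(modes)
for mode in ranked:
    print(mode.function, mode.risk_score)
```

A ranking of this kind gives reviewers an ordered list of functions to examine before the program moves to the next phase; the thresholds that would block progression remain a matter for agreement between the contractor and the government.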

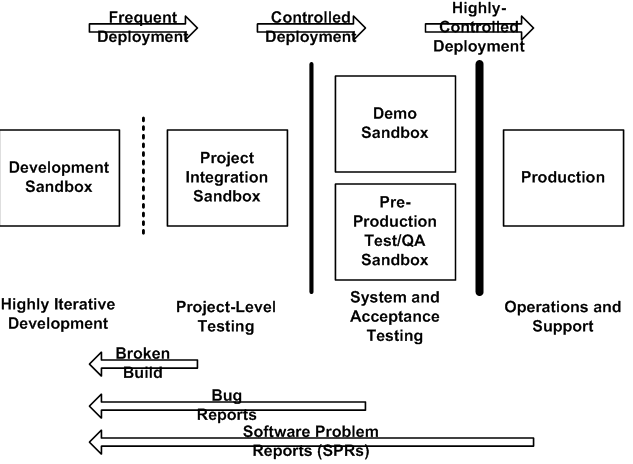

The sources of errors in this case include the use of inappropriate test methods that have not been agreed upon by the government and the contractor. There is a need to ensure effective coordination between the interested parties (Stouffer et al., 2011). In theory and practice, the deployment and post-deployment of engineering systems is a complex exercise that requires due diligence. Based on the concept, system deployment must follow equipment validation and testing procedures that accord with military deployment standards and the seamless integration of technology. According to Wasson (2015), various case studies have shown different sources of errors, which will be investigated in the research. The proposed area of research includes identifying the frequency of research, controlled deployment, and highly controlled deployment environments, and the metrics applied in each case in a software system deployment environment.

Qualification Phase

Empirical evidence provided by Kapletia and Probert (2010) showed that the qualification phase consists of a detailed discourse of the level of compliance of each technical specification that guarantees absolutely reliable and error free system performance. According to Francis and Bekera (2014), the rationale was to test and verify product performance metrics that show the level of reliability of the system and the source of errors that could be identified and corrected earlier in the system development and overall acquisition cycle. According to Kapletia and Probert (2010), the entire process includes audits that cover system level operational functionalities to determine the level of compliance to the system requirements that meet the system specifications issued by the customer, who is the government in this case.

Process Qualification

The production process provides information on the adequacy of a given system to perform in compliance with specified standards agreed upon between the contractor and the government (Kennedy, Sobek, & Kennedy, 2014). The importance of the paradigm lies in determining the probability of reproducibility of a system that can perform in the military context. The facility, utilities, and equipment, as well as the process performance capabilities, must qualify in order to pass the process qualification test (Kalpakjian & Schmid, 2014). In the production context, the process must be qualified based on the proper design of the manufacturing utilities and equipment. Qualification in this context implies that the equipment and utilities must perform the intended production activities appropriately. Based on this, the following questions need to be answered to avoid failure or problems being transferred to the next phase.

Process Performance Qualification

This framework element was shown to be a sub-process that combines the equipment and utilities that have been tested and qualified for use with highly qualified personnel who operate the machines. This is a critical step in the product lifecycle because it provides assurance that the product will perform as per the agreement between the contractor and the government.

It is important to verify whether the product is a new weapons system or an old weapons system. There is a void that needs to be filled to ensure that errors are not carried forward to new phases during system development and testing of complex military systems (Leveson, 2004). Compliance with system requirements under the stakeholder requirements definition process, the system architecture, integration, and transition processes, as well as the technical processes and technical management processes, needs to be assessed (Kerzner, 2013). Errors arise because of the failure to address process qualification requirements.

There is a need to ensure that process qualification is done as a continuous assurance measure so that the process phases remain within the specified parameters. The approach will validate each phase against agreed upon parameters to ensure that there are no planned or unplanned departures from the performance of the process. This is done continuously to identify problems that might happen during each process and take corrective actions to remedy them.

Production

This encompasses the production management of the system components through the effective utilization of resources to ensure on-schedule production of engineering systems that meet the performance, quality, and cost requirements. The entire process includes producibility assessment, resource analysis, production engineering, post planning engineering, productivity enhancement, and industrial preparedness planning (Katina et al., 2014). Production management techniques are essential for product development in determining the progress of the production program, which is an indicator of the resources used in the production process and the underlying constraints. The key production elements include the production plan, based on the approved or rejected contractor plan, and the production plan review, which details the broad range of acquisition activities.

Sources of Problems

Friedman and Sage (2004) presented a case study in which the key framework elements include the requirement analysis concept, the responses between the contractor and the government, and the key questions that could be answered to address the possibility of system defects. In this case study, the contractor stated that the requirements could flow in a top-down system. Besides, Friedman and Sage (2004) noted that a coherent approach could require traceability through each acquisition phase. According to Stouffer et al. (2011), the case showed that the government requirements did not flow smoothly due to a lack of lifecycle system modeling capabilities within the entire process flow. The focus on the requirements analysis phase was not fulfilled, nor was the quantitative aspect of the requirements. In the above case, the deliverables or outputs include an accurate requirements traceability document as a milestone that could help the manufacturer and the government to follow each phase of the process effectively.
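The idea of tracing each requirement through every acquisition phase can be sketched as a simple coverage check. The sketch below is illustrative only: the requirement identifiers, the dictionary layout, and the function name are hypothetical, and the phase list is a subset of the phases named earlier in this proposal.

```python
# Illustrative subset of acquisition phases named in this proposal.
PHASES = [
    "requirement analysis",
    "system/subsystem design",
    "systems integration and interfaces",
    "verification and validation",
]


def untraced_requirements(trace_matrix, phases=PHASES):
    """Map each requirement to the phases in which it has no trace record.

    trace_matrix maps a requirement id to the set of phases where the
    requirement has been traced. Fully traced requirements are omitted.
    """
    gaps = {}
    for requirement, covered in trace_matrix.items():
        missing = [phase for phase in phases if phase not in covered]
        if missing:
            gaps[requirement] = missing
    return gaps


# Hypothetical traceability records.
trace_matrix = {
    "REQ-1": set(PHASES),
    "REQ-2": {"requirement analysis", "system/subsystem design"},
}
gaps = untraced_requirements(trace_matrix)
print(gaps)
```

A non-empty result would indicate requirements that did not flow through the whole process, which is the condition the traceability document is meant to expose.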

Justification and Conclusions

Case studies of systems that have been developed and taken through the production process will provide adequate lessons for determining whether the processes meet the underlying product performance requirements. The production process data must be collected to determine the level of capability and stability and identify the degree of variability from the desired operational efficiency (Li, Rosenwald, Jung, & Liu, 2005). Data can be generated from the quality unit staff as well as line operators on system performance capabilities. The data could be sufficient in determining the problems or errors that arise in the production steps and provide decision points on how to take corrective actions and the nature of the actions to take. Examples include altering the operating conditions, specific process controls, material requirements and characteristics, and in-processes. There is a need to ensure proper documentation of the changes.
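One way to quantify the capability and variability of a production process is with process capability indices. The sketch below assumes the commonly used Cp and Cpk indices; the proposal does not name a specific index, and the measurements and specification limits shown are hypothetical.

```python
import statistics


def process_capability(samples, lower_spec, upper_spec):
    """Return (Cp, Cpk) for measurements against two-sided specification limits.

    Cp compares the specification width with the process spread (six sample
    standard deviations). Cpk also penalises a mean that is off centre.
    """
    mean = statistics.mean(samples)
    sd = statistics.stdev(samples)  # sample standard deviation
    cp = (upper_spec - lower_spec) / (6 * sd)
    cpk = min(upper_spec - mean, mean - lower_spec) / (3 * sd)
    return cp, cpk


# Hypothetical measurements of one process parameter.
samples = [9.8, 10.0, 10.2, 10.1, 9.9]
cp, cpk = process_capability(samples, lower_spec=9.4, upper_spec=10.6)
print(round(cp, 2), round(cpk, 2))
```

Values well above 1 suggest that the process spread fits within the agreed limits, while a Cpk noticeably lower than Cp points to a process that has drifted from its target.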

A typical example from other researchers shows that product documentation problems arising from the removal of TBD lines in the program code led to a loss of $100M in profit through the cancellation of the program (Sage & Cuppan, 2001). A study by Blanchard (2004) noted that failure to conduct tests in time, by postponing the test process for a number of years or until after a component or product has been manufactured or has failed, is a critical source of system failures (Piaszczyk, 2011). Blanchard (2004) noted that tests conducted by the contractor receive a better grade than the grade given by the government. The results indicate that the contractor should agree with the government on the test criteria, associated metrics, and validation and verification methods to use.

Purpose and Problem Statement

Research shows that engineering system mishaps, especially in the military, present serious problems that need to be addressed. While many suggested solutions focus on the problem itself, no interventional approaches have been suggested and implemented for identifying, mitigating, and preventing the transmission of errors to successive production stages. As a result, hidden defects in procured military systems are difficult and expensive to identify, especially when the discovery is made too late. The inability to identify the exact problems and sources of failure makes it impossible to identify the exact corrective actions needed to prevent failure at all stages of procurement. The proposed framework addresses the problem of failure based on the major decision points in the requirements analysis, concept exploration and definition, full scale engineering development, qualification phase, process qualification, and production phases. In creating the framework, the guiding objectives are detailed below.

Objectives

- Analysis of the effectiveness of the failure prevention framework elements

- Identification and analysis of sources of error in each framework element

Possible Theories and Practical Problems

In theory, accurate and precise evaluation of processes as well as validated system acquisition methods can be used as the baseline assessment approaches to determine the reliability, efficiency, and effectiveness of the acquisition of defense equipment. The system approach justifies the use of a framework with key defining elements that work together to achieve the desired objective. Underlying the theoretical framework is a collection of interrelated components based on the system thinking approach. The system could be interpreted as a whole body of elements that show or provide a detailed account of each system component. This approach uses the open system thinking approach where inputs are taken from the environment and outputs given back into the environment. In this thinking, each component must perform to the required level to qualify as a complete system. According to Markus, Majchrzak, and Gasser (2002), the proposed approach is to use a non-linear iterative system acquisition process, which is the system engineering acquisition model to identify system failures and associated problems. According to Kapletia and Probert (2010), different models provide varying milestone phases such as concept refinement, technology development, system development and demonstration, product deployment, and operations and support. According to Friedman and Sage (2004) and Liu et al. (2000), additional candidate methodologies include the acquisition phase, concept exploration, concept definition and demonstration, development, industrialization, and production, which is the system lifecycle framework. The proposed framework will constitute the requirement analysis, concept exploration, concept definition, Full Scale Engineering Development, Qualification Phase, Process Qualification, and Production phases. Goerger, Madni, and Eslinger (2014) note that the approach is a unique case study approach that answers the questions that allow for the process to go to the next phase. 
The fundamental concepts play the central role in problem identification.
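The gating idea behind the proposed framework, in which a program moves to the next phase only once the questions for the current phase have been answered, can be sketched as follows. This is a minimal illustration under stated assumptions: the phase names come from the list above, while the function name, the question texts, and the representation of answers as true or false values are hypothetical.

```python
PHASES = [
    "Requirement Analysis",
    "Concept Exploration",
    "Concept Definition",
    "Full Scale Engineering Development",
    "Qualification Phase",
    "Process Qualification",
    "Production",
]


def next_phase(current, answers):
    """Decide whether the program may leave the current phase.

    answers maps each gate question to True (answered satisfactorily) or
    False. Returns (phase, open_questions): the program stays in the current
    phase while any question is open, otherwise it advances by one phase.
    """
    open_questions = [q for q, resolved in answers.items() if not resolved]
    if open_questions:
        return current, open_questions
    index = PHASES.index(current)
    if index + 1 < len(PHASES):
        return PHASES[index + 1], []
    return current, []  # Production is the final phase


# Hypothetical gate questions for illustration only.
blocked = next_phase(
    "Requirement Analysis",
    {"Are requirements traceable?": True, "Are test criteria agreed?": False},
)
advanced = next_phase("Requirement Analysis", {"Are requirements traceable?": True})
print(blocked)
print(advanced)
```

The actual questions for each phase are to be derived from the case studies; the sketch only shows how unanswered questions would hold a program at its current phase.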

Research Methodology

A mixed research method will be applied in the investigation to establish the decision framework designed to prevent system engineering failures that happen in the acquisition process. According to Trochim (2006), the mixed research method combines the quantitative (deductive) and qualitative (inductive) methods to draw on the benefits of both paradigms in answering the research questions. The goal is to analyse the appropriateness of the proposed framework elements, such as requirements analysis, concept exploration, and full scale engineering, and to identify the sources of errors so that mitigation approaches can be suggested to prevent failures from being transferred from one stage to the next without detection and corrective action at an earlier stage.

Data Collection Techniques and Instrument

Different instruments have been suggested and used in various data collection activities. The proposed data collection instrument is the questionnaire, whose responses will be subjected to statistical correlational analysis to answer the research questions on the key features of decision frameworks that prevent system engineering failures in the defense industry. The key benefits of the questionnaire are that it provides respondents with anonymity and enough time to answer the questions.

Measurement Techniques

A Likert scale will be used to measure the respondents’ perceptions from the target population of engineers and military personnel. Central tendency bias, pattern answering, and order effects will be addressed to ensure that the results have the required validity.

- Strongly disagree

- Disagree

- Neither agree nor disagree

- Agree

- Strongly agree
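Responses on the five-point scale above can be coded numerically before analysis. The sketch below assumes the conventional coding of 1 for "Strongly disagree" through 5 for "Strongly agree" and summarises a single questionnaire item with the median and mode, which suit ordinal data. The coding, the function name, and the sample responses are assumptions for illustration.

```python
import statistics

# Assumed numeric coding of the five response options.
LIKERT_CODES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def summarise_item(responses):
    """Code the responses to one questionnaire item and summarise them."""
    codes = [LIKERT_CODES[response] for response in responses]
    return {
        "n": len(codes),
        "median": statistics.median(codes),
        "mode": statistics.mode(codes),
    }


# Hypothetical responses to one item.
responses = [
    "Agree",
    "Agree",
    "Strongly agree",
    "Disagree",
    "Neither agree nor disagree",
]
summary = summarise_item(responses)
print(summary)
```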

Cultural Competency of the Questionnaire

It has been established that the questionnaire is a widely used instrument for accurate data collection, which makes it appropriate for this study.

Internal Validity

To ensure that the questionnaire measures what it is intended to measure, an internal validity inspection will be done (Trochim, 2006). In theory, this is achieved by establishing the causal relationship between the dependent and independent variables. The method is appropriate for descriptive statistics, which will be used in this investigation.

External Validity

The degree or extent to which the results can be generalized across the target population defines the external validity. External validity is critical in this study because it signifies the validity of the framework in preventing military systems from failure (Trochim, 2006). It implies that the extent to which the framework can be adapted or integrated into each phase of the acquisition process is critical. External validity will be assured by verifying the accuracy and applicability of the framework elements in preventing faults in each step of the system procurement process.

Construct Validity

Statistical measures such as p-values, skewness, and the chi-square goodness-of-fit test will be used to determine how the observed values vary from the case study values. In addition, an independent two-sample t-test will be used to compare the two mean values generated from the analysis. According to Trochim (2006), construct validity is important because it provides accurate measures that are verified by theoretical concepts on the subject being investigated.
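
To make two of these statistics concrete, the following Python sketch computes a chi-square goodness-of-fit statistic and a pooled-variance independent two-sample t statistic. It is an illustration only: the study specifies SPSS, and the function names, counts, and scores below are hypothetical. Converting either statistic to a p-value requires the chi-square or t distribution and is left to SPSS or a statistics library.

```python
from math import sqrt
from statistics import mean, variance


def chi_square_statistic(observed, expected):
    """Goodness-of-fit statistic: sum of (O - E)^2 / E over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic (equal variances assumed) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pool the two sample variances, weighting each by its own degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical counts per Likert option versus a uniform expectation.
observed = [18, 22, 20, 25, 15]
expected = [20, 20, 20, 20, 20]
chi2 = chi_square_statistic(observed, expected)

# Hypothetical coded scores from two independent respondent groups.
group_a = [4, 5, 4, 3, 4]
group_b = [2, 3, 3, 2, 3]
t_stat, dof = pooled_t_statistic(group_a, group_b)
```

The pooled form assumes the two groups have similar variances; if that assumption does not hold, Welch's unequal-variance version would be the usual alternative.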

Research Design

A true experimental research design is the most appropriate design for this study. The rationale is that such a design allows for the accurate use of statistical data based on randomization of the subjects under investigation into control and treatment groups. Trochim (2006) notes that, for the data to be represented accurately, the control groups must show a high degree of homogeneity. The benefits of this method include the ability to reduce noise and focus on the relevant variables and data. An additional benefit is the possibility of overcoming multiple inferences.

The method is also appropriate because it enables researchers to achieve high levels of reliability. In theory, threats to internal validity will be overcome because the design allows the researcher to manipulate one variable while the remaining research variables are held constant. The threats to internal validity that can be overcome include ambiguities, issues with the data collection instrument, and the type of assumptions made. With a pretest-posttest group design, the accuracy of the results can be checked using a statistical program, preferably SPSS.

The proposed research design has several benefits. It is easy to implement, understand, and read; it supports collaboration and cross-validation; and it makes it easier to remove internal validity threats such as history, maturation, and selection bias.
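
The random assignment into control and treatment groups described in this section could be carried out along the following lines. This is a sketch under stated assumptions: the participant identifiers, the even split, the fixed seed, and the function names are all hypothetical, and Python stands in for whatever tool the study ultimately uses.

```python
import random


def assign_groups(participant_ids, seed=None):
    """Shuffle the participants and split them into control and treatment halves."""
    rng = random.Random(seed)  # a seeded generator makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}


def mean_gain(pretest, posttest):
    """Average posttest-minus-pretest change across paired scores."""
    gains = [post - pre for pre, post in zip(pretest, posttest)]
    return sum(gains) / len(gains)


# Hypothetical participant identifiers 1..10.
groups = assign_groups(range(1, 11), seed=42)

# Hypothetical paired pretest and posttest scores for three participants.
gain = mean_gain([2, 3, 3], [4, 4, 5])
```

Comparing the mean gain of the treatment group with that of the control group is one simple way to read a pretest-posttest design; the seed is recorded only so that the assignment can be audited later.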

The Target Population and Sampling Approach

A probability-based, stratified sampling approach will be used. Stratified sampling is appropriate because it allows the researcher to collect data from diverse subgroups, namely engineers, procurement professionals, and military experts.
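
A minimal Python sketch of proportional stratified sampling over these three subgroups is shown below. The stratum sizes, the 20% sampling fraction, the member labels, and the function name are assumptions chosen for illustration and do not come from the study.

```python
import random


def stratified_sample(strata, fraction, seed=None):
    """Draw the same fraction from every stratum, with at least one member from each non-empty stratum."""
    rng = random.Random(seed)
    sample = {}
    for name, members in strata.items():
        members = list(members)
        # Proportional allocation, bounded between 1 and the stratum size.
        k = min(len(members), max(1, round(len(members) * fraction)))
        sample[name] = rng.sample(members, k)  # sampling without replacement
    return sample


# Hypothetical sampling frame: member labels are placeholders, not real respondents.
frame = {
    "engineers": [f"eng-{i}" for i in range(40)],
    "procurement": [f"proc-{i}" for i in range(20)],
    "military": [f"mil-{i}" for i in range(30)],
}
selected = stratified_sample(frame, fraction=0.2, seed=7)
sizes = {name: len(members) for name, members in selected.items()}
```

Drawing the same fraction from each stratum keeps the sample's subgroup proportions equal to those of the sampling frame.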

Data Analysis Strategies

Once the data has been collected and checked for reliability and accuracy, it will be coded and entered into SPSS for correlational analysis and t-tests.
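
For readers who want to see what the correlational step computes, the sketch below calculates a Pearson correlation coefficient in plain Python. It is not the study's procedure, which relies on SPSS; the variable names and scores are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation: covariance of x and y divided by the product of their spreads."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mx) ** 2 for a in x))
    spread_y = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Hypothetical coded scores on two questionnaire items from five respondents.
item_a = [1, 2, 3, 4, 5]
item_b = [2, 4, 5, 4, 5]
r = pearson_r(item_a, item_b)
```

Because Likert data are ordinal, a rank-based coefficient such as Spearman's rho may be preferred in practice; Pearson's r is shown here only because it is the simplest form of correlational analysis.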

References

Bahill, A. T., & Chapman, W. L. (1994). Understanding systems engineering through case studies. Systems Engineering: The Journal of the National Council on Systems Engineering, 1(1), 145-154.

Bakshi, M., & Singh, S. (2002). Development of validated stability-indicating assay methods: Critical review. Journal of Pharmaceutical and Biomedical Analysis, 28(6), 1011-1040.

Blanchard, B. S. (2004). System engineering management. New York, NY: John Wiley & Sons.

Board, A. F. S., & National Research Council. (2008). Pre-milestone A and early-phase systems engineering: A retrospective review and benefits for future Air Force acquisition. Washington, DC: National Academies Press.

Francis, R., & Bekera, B. (2014). A metric and frameworks for resilience analysis of engineered and infrastructure systems. Reliability Engineering & System Safety, 121(1), 90-103.

Friedman, G., & Sage, A. P. (2004). Case studies of systems engineering and management in systems acquisition. Systems Engineering, 7(1), 84-97.

Goerger, S. R., Madni, A. M., & Eslinger, O. J. (2014). Engineered resilient systems: A DoD perspective. Procedia Computer Science, 28(1), 865-872.

Kalpakjian, S., & Schmid, S. R. (2014). Manufacturing engineering and technology. Upper Saddle River, NJ: Pearson.

Kapletia, D., & Probert, D. (2010). Migrating from products to solutions: An exploration of system support in the UK defense industry. Industrial Marketing Management, 39(4), 582-592.

Katina, P. F., Despotou, G., Calida, B. Y., Kholodkov, T., & Keating, C. B. (2014). Sustainability of systems of systems. International Journal of System of Systems Engineering, 5(2), 93-113.

Kennedy, B. M., Sobek, D. K., & Kennedy, M. N. (2014). Reducing rework by applying set‐based practices early in the systems engineering process. Systems Engineering, 17(3), 278-296.

Kerzner, H. (2013). Project management: A systems approach to planning, scheduling, and controlling. New York, NY: John Wiley & Sons.

Kruchten, P., Nord, R. L., & Ozkaya, I. (2012). Technical debt: From metaphor to theory and practice. IEEE Software, 29(6), 18-21.

Leveson, N. (2004). A new accident model for engineering safer systems. Safety Science, 42(4), 237-270.

Li, H., Rosenwald, G. W., Jung, J., & Liu, C. C. (2005). Strategic power infrastructure defense. Proceedings of the IEEE, 93(5), 918-933.

Li, J., Bjørnson, F. O., Conradi, R., & Kampenes, V. B. (2006). An empirical study of variations in COTS-based software development processes in the Norwegian IT industry. Empirical Software Engineering, 11(3), 433-461.

Liu, C. C., Jung, J., Heydt, G. T., Vittal, V., & Phadke, A. G. (2000). The strategic power infrastructure defense (SPID) system: A conceptual design. IEEE Control Systems, 20(4), 40-52.

Markus, M. L., Majchrzak, A., & Gasser, L. (2002). A design theory for systems that support emergent knowledge processes. MIS Quarterly, 26(3), 179-212.

Meier, S. R. (2008). Best project management and systems engineering practices in the preacquisition phase for federal intelligence and defense agencies. Project Management Journal, 39(1), 59-71.

Mitchell, J. A. (2007). Measuring the maturity of a technology: Guidance on assigning a TRL (Report SAND2007-6733). Albuquerque, NM: Sandia National Laboratories.

Morse, L. C., Babcock, D. L., & Murthy, M. (2014). Managing engineering and technology. Upper Saddle River, NJ: Pearson.

Piaszczyk, C. (2011). Model based systems engineering with Department of Defense Architectural Framework. Systems Engineering, 14(3), 305-326.

Pohl, K. (2010). Requirements engineering: Fundamentals, principles, and techniques. New York, NY: Springer.

Rezaei, R., Chiew, T. K., Lee, S. P., & Aliee, Z. S. (2014). Interoperability evaluation models: A systematic review. Computers in Industry, 65(1), 1-23.

Sage, A. P., & Cuppan, C. D. (2001). On the systems engineering and management of systems of systems and federations of systems. Information Knowledge Systems Management, 2(4), 325-345.

Sauser, B. J., Ramirez-Marquez, J. E., Henry, D., & DiMarzio, D. (2008). A system maturity index for the systems engineering life cycle. International Journal of Industrial and Systems Engineering, 3(6), 673-691.

Schwartz, M. (2010). Defense acquisitions: How DoD acquires weapon systems and recent efforts to reform the process. Washington, DC: Congressional Research Service.

Stouffer, K., Falco, J., & Scarfone, K. (2011). Guide to industrial control systems (ICS) security (NIST Special Publication 800-82). Gaithersburg, MD: National Institute of Standards and Technology.

Trochim, W. (2006). Research methods knowledge base. designs. Web.

Wasson, C. S. (2015). System engineering analysis, design, and development: Concepts, principles, and practices. New York, NY: John Wiley & Sons.