Introduction

The use of decision trees for classifying or predicting outcomes from statistical data and for mining databases has lately gained considerable acceptance as a tool. This is probably due to the main advantage a decision tree offers over alternatives such as neural networks: a decision tree is a representation of rules, which are usually easier for humans to understand.

Some applications rest entirely on the accuracy of classification or prediction, so the user does not necessarily need to understand how the model works (Kass, 1980, p. 120). In other cases, the ability to explain the reason for a decision is vital, for example in marketing, where one has to present a clear description of a customer segment in order to launch more efficient marketing campaigns.

By definition, a decision tree (DT) is a classification tool that represents data as a tree structure made up of decision nodes, each of which tests an attribute, and leaf nodes, each of which assigns a class.
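To make the two kinds of node concrete, here is a minimal Python sketch of such a tree, assuming each decision node tests a single categorical attribute and each leaf stores a predicted class. The attribute EDUCATION and every prediction in the example tree are hypothetical; only CUST_MARITAL_STATUS comes from the model discussed later in this paper.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    """Leaf node: stores the class predicted for records that reach it."""
    prediction: int


@dataclass
class DecisionNode:
    """Decision node: tests one attribute and routes each record to a child."""
    attribute: str
    children: Dict[str, "Node"]  # attribute value -> child node


Node = Union[Leaf, DecisionNode]


def classify(node: Node, record: Dict[str, str]) -> int:
    # Start at the root and follow the branch matching the record's value
    # for each tested attribute until a leaf is reached.
    while isinstance(node, DecisionNode):
        node = node.children[record[node.attribute]]
    return node.prediction


# Hypothetical example tree (illustrative values only).
tree = DecisionNode("CUST_MARITAL_STATUS", {
    "Married": DecisionNode("EDUCATION", {"Bachelor": Leaf(1), "HS": Leaf(0)}),
    "Single": Leaf(0),
})

print(classify(tree, {"CUST_MARITAL_STATUS": "Married", "EDUCATION": "Bachelor"}))  # 1
```

Each path from the root to a leaf corresponds to one rule, which is why a tree can be read back as a set of human-readable rules.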

This paper identifies a real-world situation and applies a decision tree to it. The task involves developing an original piece of work and reflecting on a project or issue for which a decision tree is an effective analytical tool. It also requires two sensitivity analyses in which the probability values are varied significantly.

Task Considered: Customer Relativity

The main requirements a problem must meet for a decision tree to be applied are:

- Attribute-value description: the problem must be expressible as a collection of attributes (properties);

- Predefined classes: the classes to which the analyzed data are assigned must be established beforehand;

- Discrete classes: each case must belong to one recognized class, and there must be fewer classes than cases;

- Sufficient data: enough data must be available to build the tree (Rokach & Maimon, 2005, p. 479).

Generation of the Decision Tree

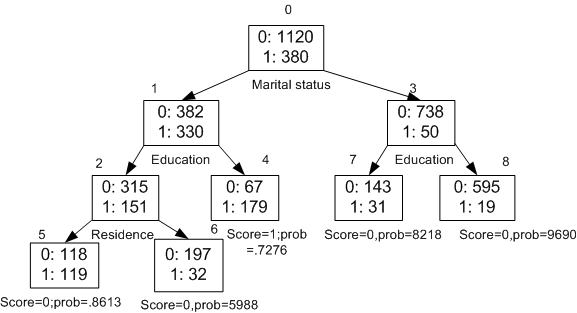

The figure above shows the developed decision tree, which contains 9 nodes (reflecting the 9 rules that apply). The target is binary: 1 indicates that the customer increases spending, and 0 indicates that the customer's spending does not increase. The first split of the DT is based on the CUST_MARITAL_STATUS attribute: the root of the tree (node 0) is split into nodes 1 and 3, with married customers in node 1 and single customers in node 3. Node 1 is defined by the following rule:

Node 1: record count = 712, 0 count = 382, 1 count = 330

CUST_MARITAL_STATUS is “Married”, surrogate: HOUSEHOLD_SIZE in “3”, “4-5”

Node 1 contains 712 records, and in every one of them the CUST_MARITAL_STATUS attribute indicates a married customer. Of these, 382 customers have a target value of 0 (no increase in spending), while 330 have a target value of 1 (increased spending).
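The node 1 rule can be written as a small Python predicate. This sketch assumes, as in CART-style trees, that the surrogate split on HOUSEHOLD_SIZE is consulted only when CUST_MARITAL_STATUS is missing; the function name in_node_1 is hypothetical. The class counts are the ones reported for node 1.

```python
from typing import Dict, Optional


def in_node_1(record: Dict[str, Optional[str]]) -> bool:
    """Rule for node 1: CUST_MARITAL_STATUS is "Married".
    Assumed surrogate: if marital status is missing, use HOUSEHOLD_SIZE in {"3", "4-5"}."""
    status = record.get("CUST_MARITAL_STATUS")
    if status is not None:
        return status == "Married"                       # primary split
    return record.get("HOUSEHOLD_SIZE") in {"3", "4-5"}  # surrogate split


# Class counts reported for node 1.
counts = {0: 382, 1: 330}
total = sum(counts.values())            # 712 records
majority = max(counts, key=counts.get)  # class 0
confidence = counts[majority] / total   # 382 / 712, about 0.537

print(in_node_1({"HOUSEHOLD_SIZE": "3"}), total, majority, round(confidence, 3))
```

Node 1's majority class is therefore 0, but only narrowly, with roughly 54% of its records.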

Splitting of the Tree

To create the sub-nodes, the DT algorithm must repeatedly find the most effective way to split a set of cases into child nodes. In Oracle Data Mining, this is achieved with two homogeneity metrics, known as entropy and gini, of which gini is the default. A homogeneity metric is used to assess the “quality of alternative split conditions and for selection of the one which results in the most homogeneous child nodes” (Hastie et al., 2001, p. 49). Homogeneity is also called ‘purity’, since it expresses the degree to which each resulting child node is made up of cases with the same target value (Breiman et al., 1984, p. 76).
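The two homogeneity metrics can be written down directly. For a node whose records fall into classes with proportions p_k, gini is 1 - Σ p_k² and entropy is -Σ p_k log2 p_k; both are 0 for a pure node. The Python sketch below computes both for the class counts of node 1 and then picks, from a few hypothetical toy records, the binary split whose children have the lowest size-weighted impurity. It is a generic illustration of the idea, not Oracle Data Mining's implementation.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Metric = Callable[[Sequence[int]], float]


def gini(counts: Sequence[int]) -> float:
    """Gini impurity: 1 - sum(p_k^2)."""
    n = sum(counts)
    return 1.0 - sum((c / n) ** 2 for c in counts)


def entropy(counts: Sequence[int]) -> float:
    """Entropy in bits: -sum(p_k * log2(p_k)), skipping empty classes."""
    n = sum(counts)
    return -sum((c / n) * math.log2(c / n) for c in counts if c > 0)


def weighted_impurity(groups: List[List[int]], metric: Metric) -> float:
    """Size-weighted impurity of child nodes; lower means more homogeneous."""
    total = sum(len(g) for g in groups)
    return sum(len(g) / total * metric(list(Counter(g).values())) for g in groups if g)


def best_split(records: List[Dict[str, str]], labels: List[int],
               metric: Metric = gini) -> Optional[Tuple[float, str, str]]:
    """Try every 'attribute == value' binary split; return (score, attribute, value)."""
    best = None
    for attr in records[0]:
        for value in sorted({r[attr] for r in records}):
            left = [y for r, y in zip(records, labels) if r[attr] == value]
            right = [y for r, y in zip(records, labels) if r[attr] != value]
            if not left or not right:
                continue  # not a real split
            score = weighted_impurity([left, right], metric)
            if best is None or score < best[0]:
                best = (score, attr, value)
    return best


# Node 1 class counts from the model above.
print(round(gini([382, 330]), 3), round(entropy([382, 330]), 3))

# Hypothetical toy records: marital status separates the classes perfectly.
records = [
    {"STATUS": "Married", "SIZE": "3"},
    {"STATUS": "Married", "SIZE": "4-5"},
    {"STATUS": "Married", "SIZE": "1"},
    {"STATUS": "Single", "SIZE": "1"},
    {"STATUS": "Single", "SIZE": "2"},
]
labels = [1, 1, 1, 0, 0]
print(best_split(records, labels))
```

Node 1 is close to maximally impure under both metrics (gini near 0.5, entropy near 1 bit), which is consistent with it being split further.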

Growing the decision tree in the figure above confirms that a DT is a classification tool which represents data in a tree structure made up of decision nodes and leaf nodes. This justifies the choice of a decision tree for analyzing a real-world situation.

Cost Matrix

Classification algorithms, including the DT, support a cost-benefit matrix when a model is applied. The same cost matrix was therefore used for both building and scoring the DT model.
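When a cost matrix is applied, the predicted class is the one with the lowest expected cost rather than simply the most probable class. The minimal Python sketch below assumes a hypothetical matrix (the paper does not give the one that was used) in which missing a customer who would increase spending costs five times as much as wrongly targeting one who would not; the class probabilities are the node 1 proportions.

```python
from typing import Dict, List


def min_cost_prediction(probs: Dict[int, float], cost: List[List[float]]) -> int:
    """Return the class with the lowest expected cost.

    cost[actual][predicted] is the cost of predicting `predicted` when the
    true class is `actual`; probs[actual] is the model's class probability.
    """
    classes = range(len(cost))

    def expected_cost(predicted: int) -> float:
        return sum(probs[actual] * cost[actual][predicted] for actual in classes)

    return min(classes, key=expected_cost)


# Class probabilities at node 1 (382 and 330 out of 712 records).
probs = {0: 382 / 712, 1: 330 / 712}

# Hypothetical cost matrix: rows = actual class, columns = predicted class.
cost = [
    [0.0, 1.0],  # actual 0: a wrongly targeted customer costs 1
    [5.0, 0.0],  # actual 1: a missed spender costs 5
]

print(min_cost_prediction(probs, cost))  # 1, although class 0 is more probable
```

With a symmetric matrix (every misclassification costing 1), the same function returns the majority class, 0.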

Prevention of Over-Fitting

In this case, folding back the DT is important both for indicating the preferred path and for identifying the expected values more clearly. The importance of growing each branch of the DT to just the appropriate depth cannot be overemphasized, since this is what allows the results to be as accurate as possible. Although this may seem an ideal approach, it is demanding and can pose a number of challenges (Breiman et al., 1984, p. 32), especially where the data are extremely discrete.
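Folding back can be sketched in Python under the decision-analysis reading of the term: each chance node is replaced by the probability-weighted average of its branches, each decision node by its best option, and the option chosen at the root is the preferred path. Re-folding the tree with different probability values is one way to carry out the sensitivity analyses the task calls for. The campaign/no-campaign decision and its payoffs are hypothetical; 330/712 is borrowed from node 1 only as one sample probability.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Payoff:
    value: float


@dataclass
class Chance:
    branches: List[Tuple[float, "Tree"]]  # (probability, subtree)


@dataclass
class Decision:
    options: List[Tuple[str, "Tree"]]  # (option label, subtree)


Tree = Union[Payoff, Chance, Decision]


def fold_back(node: Tree) -> float:
    """Expected value of a (sub)tree, computed from the leaves back to the root."""
    if isinstance(node, Payoff):
        return node.value
    if isinstance(node, Chance):
        return sum(p * fold_back(sub) for p, sub in node.branches)
    return max(fold_back(sub) for _, sub in node.options)  # best option


def preferred_option(node: Decision) -> str:
    """Label of the option with the highest folded-back expected value."""
    return max(node.options, key=lambda opt: fold_back(opt[1]))[0]


def campaign_tree(p_increase: float) -> Decision:
    # Hypothetical payoffs: +100 if the targeted customer increases spending,
    # -20 (campaign cost) if not, 0 if no campaign is launched.
    return Decision([
        ("campaign", Chance([(p_increase, Payoff(100.0)),
                             (1 - p_increase, Payoff(-20.0))])),
        ("no campaign", Payoff(0.0)),
    ])


# Sensitivity analysis: re-fold the tree for significantly different probabilities.
for p in (0.10, 330 / 712, 0.80):
    tree = campaign_tree(p)
    print(f"p={p:.2f}  EV={fold_back(tree):.1f}  preferred={preferred_option(tree)}")
```

Under these payoffs the expected value of the campaign is 120p - 20, so the preferred option switches from "no campaign" to "campaign" once p exceeds 1/6 (about 0.17).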

Consequently, a simple algorithm can grow a tree that over-fits. Over-fitting is a situation in which a model accurately predicts the data that were used to create the tree but performs poorly when new data are fed into it.

To prevent over-fitting, Oracle Data Mining was used to prune and configure the tree shown in the figure above automatically. Limit conditions on tree growth stopped further splitting once the tree had grown to an appropriate size, and branches with no significant predictive power were pruned.
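Oracle Data Mining's own pruning criterion is not described here, so the Python sketch below substitutes a well-known alternative, reduced-error pruning, to show the idea: working bottom-up, a subtree is collapsed into a leaf whenever that does not increase the number of errors on held-out validation records. The tree, the attribute EDUCATION and the validation records are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Record = Dict[str, str]


@dataclass
class Node:
    majority: int                    # majority training class at this node
    attribute: Optional[str] = None  # attribute tested here; None for a leaf
    children: Dict[str, "Node"] = field(default_factory=dict)


def predict(node: Node, record: Record) -> int:
    # Descend while a matching branch exists; otherwise use the node's majority class.
    while node.attribute is not None and record.get(node.attribute) in node.children:
        node = node.children[record[node.attribute]]
    return node.majority


def prune(node: Node, data: List[Tuple[Record, int]]) -> Node:
    """Reduced-error pruning against validation data (record, true class)."""
    if node.attribute is None:
        return node
    # Prune the children first, each with the validation records routed to it.
    for value, child in list(node.children.items()):
        subset = [(r, y) for r, y in data if r.get(node.attribute) == value]
        node.children[value] = prune(child, subset)
    subtree_errors = sum(predict(node, r) != y for r, y in data)
    leaf_errors = sum(node.majority != y for _, y in data)
    # Collapse to a leaf if that is no worse; subtrees that no validation
    # record reaches are therefore collapsed too.
    if leaf_errors <= subtree_errors:
        return Node(majority=node.majority)
    return node


tree = Node(0, "STATUS", {
    "Married": Node(0, "EDUCATION", {"Bachelor": Node(1), "HS": Node(0)}),
    "Single": Node(1),
})
validation = [
    ({"STATUS": "Married", "EDUCATION": "Bachelor"}, 0),
    ({"STATUS": "Married", "EDUCATION": "HS"}, 0),
    ({"STATUS": "Single", "EDUCATION": "HS"}, 1),
]
pruned = prune(tree, validation)
print(pruned.attribute, pruned.children["Married"].attribute)  # STATUS None
```

Here the EDUCATION split under "Married" only adds an error on the validation records, so it is pruned, while the root split on STATUS is kept because it reduces errors.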

The advantages of applying a decision tree are thus numerous, ranging from easier analysis and categorization of data to tracing back the point of error in a decision that has already been made.

Conclusion

This paper has considered growing a decision tree to classify or predict outcomes from statistical data and to mine databases with a significant degree of accuracy. The fundamental lesson learnt from this task is that a decision tree can handle both numerical and categorical data effectively.

The paper has also noted that some applications of DTs rest entirely on classification or prediction, in which case the user need not understand how the model works, whereas in others, such as marketing, explaining the reason for a decision is vital because a clear description of the customer segment is needed to launch more efficient marketing campaigns.

In growing the decision tree, the DT was described as a classification tool that represents data in a tree structure made up of decision nodes and leaf nodes. The work is therefore justified by its identification of a real-world situation and its application of a decision tree to that situation. The task involved developing an original piece of work and reflecting on a project or issue for which a decision tree is an effective analytical tool, and it addressed the requirement that two sensitivity analyses be conducted with significantly varied probability values.

Reference List

Breiman, L., Friedman, J. H., Olshen, R. A., & Stone, C. J. (1984). Classification and regression trees. Monterey, CA: Wadsworth & Brooks/Cole Advanced Books & Software.

Hastie, T., Tibshirani, R., & Friedman, J. H. (2001). The elements of statistical learning: Data mining, inference, and prediction. New York: Springer Verlag.

Kass, G. V. (1980). An exploratory technique for investigating large quantities of categorical data. Applied Statistics, 29, 119–127.

Rokach, L., & Maimon, O. (2005). Top-down induction of decision trees classifiers-a survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C, 35, 476–487.