Introduction

Background and Objective of Study

Data mining is an umbrella term for the methods, techniques, and statistical analysis tools used to explore and analyze data in order to establish trends and patterns that support informed decisions. It entails the automated examination of data with the aim of producing predictive information and projections. Various sophisticated statistical and numerical models are used to assemble conceptual frameworks based on the analysis of actual data.

Data mining processes are carried out on historical data. The discipline, enriched with a variety of analysis designs and models, has become a valuable area of research, since assembling composite analysis systems enables researchers to develop new insights and to anticipate what is likely to happen in the short, medium, and long term.

Deep exploration and analysis of historical data enable researchers to make better-informed decisions. The designs and analysis models help to interpret patterns and relationships among the components of historical data. Data mining is becoming popular with organizations that find it difficult to arrive at proactive, knowledge-driven decisions and that want to improve organizational efficiency.

Various data mining algorithms are in use in contemporary statistical practice. They apply to a broad range of problems, and specialists can choose the combination of algorithms best suited to producing the desired predictive model. Common algorithms include Rough Set, Neural Network, Regression, Decision Tree, Clustering/Segmentation, Association Rules, Sequence Association, and Nearest Neighbor.

Various data mining applications are also in use, including SAS, SPSS, and MicroStrategy. These applications are used for stock market analysis, fraud detection through purchase sequences, campaign management, locating new restaurants, predicting television audience share, and improving online sales, among other functions. In stock market evaluation, analysis applications help brokers and investment managers to predict market trends. Stock market prediction tools have become handy and accessible, and they are used not only by securities companies and investors but also by the general public. Why would someone need a tool to predict stock market movement? The stock market is an extremely complex system. It can be affected by many factors: economic, political, and psychological. These factors interact in a very complex manner, which makes the stock market highly uncertain. Tools have been developed with equally complex algorithms that take these factors into consideration. They have made it possible to predict stock market trends and have, to a great extent, helped brokers and the companies they represent to make wise investment decisions.

Besides the algorithms mentioned above, a stock market prediction model can be built using a technical analysis approach based on indicators such as the Moving Average, Relative Strength Index, Moving Average Convergence Divergence, Rate of Change, and Stochastic Oscillator. Technical analysts look for a quick and easy summary by studying the stock market chart through these indicators. Chart analyses are often not very precise because they use only past data and do not take into consideration the external factors listed above.
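Two of the indicators named above can be illustrated with a minimal sketch. The function names, the window lengths, and the closing prices are assumptions made for illustration only; they are not taken from any particular charting tool.

```python
def simple_moving_average(prices, window):
    """Mean of the last `window` prices at each position (None until enough data)."""
    averages = []
    for i in range(len(prices)):
        if i + 1 < window:
            averages.append(None)
        else:
            averages.append(sum(prices[i + 1 - window:i + 1]) / window)
    return averages


def rate_of_change(prices, period):
    """Percentage change relative to the price `period` steps earlier."""
    changes = []
    for i in range(len(prices)):
        if i < period:
            changes.append(None)
        else:
            earlier = prices[i - period]
            changes.append(100.0 * (prices[i] - earlier) / earlier)
    return changes


# Made-up closing prices, for illustration only.
closes = [10.0, 11.0, 12.0, 11.0, 13.0]
sma3 = simple_moving_average(closes, 3)
roc1 = rate_of_change(closes, 1)
```

Both indicators depend only on past prices, which is the limitation of chart analysis noted above.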

Researchers have developed other approaches to predicting stock market movement. Some use a Genetic Algorithm to choose an optimal portfolio and Neural Networks to select real-world stocks. Others hybridize several Artificial Intelligence techniques. These approaches are designed to improve the performance of stock market prediction. Although many studies have been carried out, the results have not been gratifying because financial time series are too complex and noisy to forecast accurately.

Securities issued by companies in the new issue (primary) market subsequently become available for purchase in the secondary market. The secondary market, or stock market, deals with the sale and purchase of already issued equity and debt securities of corporations and other issuers. The primary and secondary markets are interconnected, as securities issued in the primary market pass to the secondary market after a specific period. Securities are traded in the secondary market once they are listed on a stock exchange, and stock exchanges, through their listing requirements, exercise control over the new issue market. The primary market operates in an atmosphere of uncertainty over the market price of securities. It is the secondary market that enables investors in the primary market to identify the demand and market price for listed securities. The important features of a stock exchange are as follows:

A stock exchange is a well-established organization. It provides a ready market for the purchase and sale of existing securities. The securities dealt in on a stock exchange have a long and continuous existence. Companies are not directly involved in stock market trading. The stock exchange provides an opportunity to buy and sell any listed share at market rates on all trading days. It is a market for the purchase and sale of listed securities and an essential component of a developed capital market. It is the market where the securities of joint stock companies and of government or semi-government bodies are dealt in.

Role of stock exchange

“The Stock Exchange, therefore, acts as a market that puts those wanting to sell shares in touch with those seeking to buy – effectively the Stock Exchange is a market for second hand shares! To facilitate this process the market has two main ‘players’ – stock brokers and market makers.” (The Stock Exchange- the Role of the Stock Exchange).

Stock exchanges occupy an important role in the financial system. Their role is significant in the capital formation of the country. The savings of the people are channelised through stock exchanges for the economic development of the country. As the investor population has grown, stock exchanges have come to provide a variety of services to investors. They provide a ready market for the purchase and sale of securities. They enable investors to assess the value of their investments from time to time, provide corporate information useful for a scientific assessment of company fundamentals before investing, organize investor education and awareness programs, and attend to investor complaints against registered brokers and listed companies. Stock exchanges also help companies to enhance their credibility through the listing of their shares.

Functions of stock exchange

An important function of the stock exchange is “to provide liquidity to the investors. The investor can recuperate the money invested when needed. For it, he has to go to the stock exchange market to sell the securities previously acquired.” (Functions of the Stock Exchange Market). The main functions are as follows:

- Liquidity and marketability: Stock exchanges provide liquidity to investments in listed companies. Savings of investors flow into the capital market because organized stock exchanges make securities readily marketable and ownership easy to transfer.

- Helps to procure capital: Stock exchanges help companies to procure the surplus funds in the economy. They help savers to make more profitable investments in corporate business.

- Fixation of prices: As a competitive market involving a large number of buyers and sellers, the stock exchange plays an important role in fixing prices. Prices are fixed through the demand and supply factors in the transactions.

- Safety of funds: The rules, regulations, and bye-laws of the stock exchange impose several measures to control the operations of listed companies and members of the stock exchange. As dealings on the stock exchange are transparent, stock exchanges ensure the safety of investors’ money.

- Supply of long term funds: Once securities are listed on the stock exchange, the funds raised by companies through public subscription can be treated as long-term funds of the company. Even when an investor holds the securities for a short period and sells them, the company need not repay the amount because the securities are purchased by another investor.

- Motivation for improved performance: Generally, the performance of a company is reflected in the market price of its shares. As stock market quotations are analyzed by academic journals and the mass media, companies become more concerned with their public image and try to improve their performance.

- Motivation for investment: Stock exchanges are marketplaces where investors can purchase any listed security at the market price. Even a shareholder with a single share is treated as an owner of the company and is entitled to voting rights, dividends, etc. As the investor’s status is elevated to that of an owner of a reputed company, people are motivated to save and invest in the capital market.

- Reflect the common position of economy: Stock exchanges reflect the general condition of the economy through a range of indices. Stock market indices reflect the movement of stocks in the economy. They give a broad outline of market movement and represent the market trend. An index may be used as a benchmark for evaluating a portfolio. A stock market index may reflect either price movement or wealth movement. On the basis of changes in the stock market index, the government can formulate suitable monetary and fiscal policies.

Investors in stock market

The investors in the stock market can be classified as genuine investors and speculative investors. The differences between investors and speculators are as follows.

- Risk: A genuine investor generally commits funds to low-risk investments, whereas a speculator commits funds to high-risk investments.

- Time period: A genuine investor plans investments over a longer time horizon, whereas a speculator commits funds for a very short period.

- Return: Genuine investors expect a moderate return for their low-risk investments. Speculators aim to get a higher return for assuming greater risks.

- Nature of income: Genuine investors invest in expectation of a regular return in the form of dividends. The objective of speculators is to earn a quick capital profit from fluctuations in the price of securities.

Trading procedures and settlement investment system

There are two main methods of transacting on a stock exchange: manual trading and online trading. A prospective investor who wants to buy or sell securities cannot enter the floor of the stock exchange directly to transact business; business can be transacted only through a stock broker. The broker will insist on a letter of introduction for the client or a reference from a banker about the client’s financial soundness and integrity. Under the manual trading system, the investor places an order to purchase or sell securities in one of the following forms:

- at best or market order

- limit order

- immediate or cancel order

- discretionary order

- stop loss order

- day order

- open order.

Orders are executed through the trading ring of the stock exchange. The trading ring, or floor, of the stock exchange is divided into a number of markets, also called trading pits or trading posts, on the basis of the securities dealt in by each market. All bargains under the manual system were made by word of mouth.

Online trading involves buying or selling securities electronically through the internet. It has eliminated the huge volume of paperwork related to processing share certificates. The main advantages of online trading are greater transparency of transactions, quick trading, elimination of bad delivery of share certificates, proper matching of buy and sell orders, and easy, paperless trading through depository accounts.

Stock market prediction: Very large amounts of capital are now traded through stock markets all around the world. National economies are strongly related to, and influenced by, the development of the stock market. At the same time, the stock market has become an easily accessible investment tool for ordinary people and is associated with everyday life. The stock market therefore has significant and direct social impacts. Its most important feature is uncertainty, which arises from short-term and long-term future transactions. This characteristic is unwelcome to the investor but unavoidable whenever the stock market is chosen as the investment tool. Reducing uncertainty is essential for accurate stock market prediction.

Opinion on stock market prediction is divided into two camps. One holds that a sufficiently complex procedure or tool can be devised to predict the market. The other holds that the market is efficient: whenever new information comes up, the market absorbs it by correcting itself, so there is no room for prediction. In addition, the stock market is often described by a random walk model, under which the best forecast of tomorrow’s value is today’s value.

Many methods have been applied to stock market prediction. The four most important are technical analysis, fundamental analysis, traditional time series forecasting, and machine learning. Technical analysts, also called chartists, forecast the market through charts of market data. The technical data used include prices (such as the highest and lowest price during the trading period), volume, etc. Fundamental analysis studies the intrinsic value of a stock and compares it with the current value. When the current value is lower than the intrinsic value, analysts will select the stock. Traditional time series forecasting predicts the stock market through linear prediction models based on careful investigation of historical data. Linear prediction models are of two types, univariate and multivariate regression models, depending on whether they use one or more variables to estimate the stock market time series. Machine learning methods start from a set of samples and draw linear or non-linear patterns from them in order to estimate the function that generates the data.
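The random walk view and a univariate linear prediction model can be contrasted in a few lines. This is only a sketch under stated assumptions: the function names are hypothetical, the regression uses yesterday's value as its single explanatory variable, and the series is made up.

```python
def random_walk_forecast(series):
    """Random walk view: the forecast for the next value is the last observed value."""
    return series[-1]


def fit_univariate(series):
    """Ordinary least squares fit of x[t] on x[t-1]; returns (intercept, slope)."""
    xs, ys = series[:-1], series[1:]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        # A constant series carries no slope information.
        return mean_y, 0.0
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def linear_forecast(series):
    """One-step-ahead forecast from the fitted univariate model."""
    intercept, slope = fit_univariate(series)
    return intercept + slope * series[-1]


# Made-up series with a steady upward trend.
series = [1, 2, 3, 4, 5]
naive = random_walk_forecast(series)
linear = linear_forecast(series)
```

On a trending series the two forecasts differ; on a noisy real price series the linear model often does little better than the random walk forecast, which is the point made by the second camp above.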

Stock market prediction is subject to various complexities and challenges, and stock market investment is a highly risky venture. The risk of market fluctuations has to be considered when taking investment decisions. The return on investment cannot be predicted accurately, as returns are always variable. In fundamental analysis, the available market data related to a particular company are analyzed in order to draw conclusions about the value and price of the stock it has issued. In this analysis, the intrinsic value of the company is the basis for calculating the value of its stock. In technical analysis, forecasting is based on statistical methods: the historical movement of the company’s stock price is analyzed in order to predict its future movement.

Stock market prediction using Neural Networks

Neural network means “computer architecture in which processors are connected in a manner suggestive of connections between neurons; can learn by trial and error.” (Meaning and Definition of Neutral Network).

Many neural network architectures have been developed for the stock market. Neural network systems have been developed to test the strength of the efficient market hypothesis, which holds that at any time the share price reflects all known information about the share. Research on these networks examines their inputs, outputs, and network organization. Using neural networks to predict stock market prices will remain an ongoing area of research as researchers and investors attempt to outperform the market, with the objective of attaining maximum return. Although encouraging results and confirmation of theories have been reported, neural networks are also useful for more difficult problems. For example, research on implementing financial neural networks points to two important topics, network pruning and training optimization. “Neural network appear to be the best modeling method currently available as the capture nonlinearities in the system without human intervention. Continued work on improving neural network performance may lead to more insight in the chaotic nature of the system they model.” (Zhang, 117).

Even where a neural network is used as a prediction device for stock market movement, it reflects the factors influencing a complex system, such as the stock market, that requires long-term analysis.

Stock market prediction using genetic algorithms

“A genetic algorithm (GA) is a search technique used in computing to find exact or approximate solutions to optimization and search problems. Genetic algorithms are categorized as global search heuristics. Genetic algorithms are a particular class of evolutionary algorithms (also known as evolutionary computation) that use techniques inspired by evolutionary biology such as inheritance, mutation, selection, and crossover (also called recombination).” (Genetic Algorithm).

Rough set theory

Rough set theory is a well-accepted theory in data mining. It was formulated by Zdzislaw Pawlak in the early 1980s and can be applied to the automated transformation of data into knowledge. The method is relatively straightforward and is appropriate in different data mining areas, including information retrieval, decision support, machine learning, and knowledge-based systems. The databases from which decision attributes are derived are subject to imperfection, and the rough set model is effective in dealing with situations such as unknown values or errors. It does not require assumptions about the independence of the attributes or any external knowledge about the data (Rough Sets).

It is a powerful mathematical tool for making decisions under uncertain conditions. The basis of the theory is that each object in the universe is associated with some information. Rough set theory solves some of the problems by “finding description of sets of objects in terms of attribute values, checking dependencies (full or partial) between attributes, reducing attributes, and generating decision rules.” (Tay, and Shen, 1).

Some of the important concepts of the rough set model are as follows.

- Information systems: An information system represents the knowledge. For example, an information system is $S = (U, X, V_q, f_q)$, where $U$ is the universe set and $X$ represents the attributes.

$X = C \cup D$, where $C$ is the finite set of condition attributes and $D$ is the set of decision attributes. $V_q$ is the domain of attribute $q$ and $f_q$ is the information function.

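- Lower and upper approximations: Objects may conflict because of imprecision in the data. Conflicting objects, which have the same condition attribute values but belong to different decision classes, are called inconsistent, and a table containing them is called an inconsistent decision table. Approximation is used in the rough set model to deal with this inconsistency. For a subset of attributes $B$ and a set of objects $X \subseteq U$ (in this item $X$ denotes a set of objects, not the attribute set), the lower and upper approximations of $X$ are as follows:

$$\underline{B}X = \bigcup \{\, Y \in U/\mathrm{IND}(B) : Y \subseteq X \,\}, \qquad \overline{B}X = \bigcup \{\, Y \in U/\mathrm{IND}(B) : Y \cap X \neq \emptyset \,\}$$

Here $U/\mathrm{IND}(B)$ is the family of all equivalence classes of $B$ and $\mathrm{IND}(B)$ is the $B$-indiscernibility relation. It is defined as:

$$\mathrm{IND}(B) = \{\, (x, y) \in U \times U : f_q(x) = f_q(y) \ \text{for all } q \in B \,\}$$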

- Quality of approximation: It refers to the percentage of objects that can be correctly classified into decision classes using the given attributes.

- Reducts and core: Rough set theory reduces the attributes to a subset that provides the same quality of approximation as the original set of attributes. Such a minimal subset is called a reduct, and the core is the intersection of all reducts.

- Decision rules: Rough set theory is also used in the different stages of rule induction and data processing, because the inconsistency of the input data can be described by the lower and upper approximations. Decision rules are logical statements of the form IF ... THEN.
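The concepts listed above can be made concrete on a toy decision table. The sketch below is illustrative only: the table, the attribute names `ma` and `rsi`, and the function names are assumptions, not part of any rough set library.

```python
from collections import defaultdict


def equivalence_classes(table, attrs):
    """Group object ids that share the same values on `attrs` (the classes of IND(B))."""
    groups = defaultdict(set)
    for obj, row in table.items():
        groups[tuple(row[a] for a in attrs)].add(obj)
    return list(groups.values())


def lower_approximation(table, attrs, target):
    """Union of the equivalence classes entirely contained in `target`."""
    return set().union(*[c for c in equivalence_classes(table, attrs) if c <= target])


def upper_approximation(table, attrs, target):
    """Union of the equivalence classes sharing at least one object with `target`."""
    return set().union(*[c for c in equivalence_classes(table, attrs) if c & target])


def quality_of_approximation(table, cond_attrs, decision_attr):
    """Fraction of objects lying in the lower approximation of some decision class."""
    positive = set()
    for decision_class in equivalence_classes(table, [decision_attr]):
        positive |= lower_approximation(table, cond_attrs, decision_class)
    return len(positive) / len(table)


# Hypothetical decision table: d1 and d2 are inconsistent (same conditions, different decision).
table = {
    "d1": {"ma": "up", "rsi": "low", "decision": 1},
    "d2": {"ma": "up", "rsi": "low", "decision": 0},
    "d3": {"ma": "down", "rsi": "low", "decision": 0},
    "d4": {"ma": "down", "rsi": "high", "decision": 0},
    "d5": {"ma": "up", "rsi": "high", "decision": 1},
}
rising = {obj for obj, row in table.items() if row["decision"] == 1}
lower = lower_approximation(table, ["ma", "rsi"], rising)
upper = upper_approximation(table, ["ma", "rsi"], rising)
quality = quality_of_approximation(table, ["ma", "rsi"], "decision")
```

Here the inconsistent pair `d1`, `d2` falls in the upper but not the lower approximation of the rising days, so the set is rough and the quality of approximation is below 1.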

Rough set theory is based on the description of knowledge and the approximation of sets. It was developed from concepts relating to approximation spaces and models of sets. The data are collected in a decision table, whose rows hold the data objects and whose columns hold their features. Each example in the data set carries a class label, which serves as the class indicator. The class label is treated as the decision feature, while the remaining features are treated as conditional features.

“Let $O$, $F$ denote a set of sample objects and a set of functions representing object features, respectively. Assume that $B \subseteq F$, $x \in O$. Further, let $[x]_B$ denote $[x]_B = \{y : x \sim_B y\}$. Rough sets theory defines three regions based on the equivalent classes induced by the feature values: lower approximation $\underline{B}X$, upper approximation $\overline{B}X$ and boundary $BND_B(X)$. A lower approximation of a set $X$ contains all equivalence classes $[x]_B$ that are subsets of $X$, and upper approximation $\overline{B}X$ contains all equivalence classes $[x]_B$ that have objects in common with $X$, while the boundary $BND_B(X)$ is the set $\overline{B}X \setminus \underline{B}X$, i.e., the set of all objects in $\overline{B}X$ that are not contained in $\underline{B}X$. So, we can define a Rough set as any set with a non-empty boundary.” (Al-Qaheri, et al).

Rough set theory is based on the concept of the indiscernibility relation $\sim_B$, which groups together objects having matching descriptions.

There are many methods for analyzing the financial markets. Rough set theory holds a dominant place among them due to the following advantages:

- The analysis is based on the original data without accessing any external information.

- Both quantitative and qualitative attributes can be analyzed in this model.

- Hidden facts of data could be identified and could be expressed in the decision rules.

- The theory eliminates redundancy of original data through the decision rules.

- The rough set model is constructed from real facts supported by real examples, so the decision rules generated from it also reflect real data.

- The results are easily understandable.

Applications of rough set model in different areas

Economic and financial prediction is the main area of application of the rough set model, where it is used for predicting business failure, database marketing, and financial investment. Financial investment prediction falls within the scope of this study. Much research has been conducted on building a good trading system with the help of the rough set model. Golan and Edwards established strong trading rules with the rough set model, using past data from the Toronto stock exchange and analyzing five major companies listed there. Generalized rules were extracted, which made the rough set model a good candidate for knowledge discovery and stock market analysis. Bazen studied the same problem using 15 market indicators together with the price changes of a stock over a month, aiming to deduce rules from the financial indicators. Another important application of rough set theory is building S&P index trading systems, where it helped to create generalized rules for timing purchases and sales in the S&P index, thus helping the user to identify market risks. A further application is portfolio management. Many researchers have studied the rough set model and modified it for advanced applications in economic and financial prediction. Important derivatives resulting from these modifications include the Variable Precision Rough Set Model, LERS, and TRANCE.

The rough set model is a suitable tool for predicting future movements in stock prices efficiently on behalf of investors. The reducts in the data can be found by applying the rough set reduction technique. The extracted reducts are minimal subsets of attributes that remain closely associated with the class label, which increases the accuracy of prediction. The rough set dependency rules are derived directly from the generated reducts. The rough confusion matrix is valuable for evaluating the performance of the predicted reducts and classes. Compared with other forecasting tools, the rough set model is reported to provide more accurate predictions for decision making, and it creates more compact and fewer rules than other forecasting models such as neural networks.
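Finding reducts can be sketched by brute force on a small table. This is an assumption-laden illustration rather than the reduction technique of any particular toolkit: it enumerates attribute subsets, which is practical only for a handful of attributes, and the table and attribute names are made up.

```python
from itertools import combinations


def dependency(rows, attrs, decision):
    """Fraction of rows whose values on `attrs` determine the decision uniquely."""
    decisions_by_key = {}
    for row in rows:
        key = tuple(row[a] for a in attrs)
        decisions_by_key.setdefault(key, set()).add(row[decision])
    consistent = sum(
        1 for row in rows
        if len(decisions_by_key[tuple(row[a] for a in attrs)]) == 1
    )
    return consistent / len(rows)


def find_reducts(rows, cond_attrs, decision):
    """Minimal attribute subsets that keep the dependency of the full attribute set."""
    full = dependency(rows, cond_attrs, decision)
    reducts = []
    for size in range(1, len(cond_attrs) + 1):
        for subset in combinations(cond_attrs, size):
            if any(set(r) <= set(subset) for r in reducts):
                continue  # a smaller reduct is already contained in this subset
            if dependency(rows, subset, decision) == full:
                reducts.append(subset)
    return reducts


# Hypothetical table in which "roc" carries the same information as "ma".
rows = [
    {"ma": 1, "roc": "low", "rsi": "low", "decision": 1},
    {"ma": 1, "roc": "low", "rsi": "high", "decision": 0},
    {"ma": 0, "roc": "high", "rsi": "low", "decision": 0},
    {"ma": 0, "roc": "high", "rsi": "high", "decision": 0},
]
reducts = find_reducts(rows, ["ma", "roc", "rsi"], "decision")
core = set.intersection(*(set(r) for r in reducts))
```

In this toy table either `ma` or `roc` can be dropped without losing any classification ability, while `rsi` appears in every reduct and therefore forms the core.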

Rough sets are increasingly used for predicting fluctuations in stock market prices. A rough set scheme produces prediction rules from information extracted by analyzing daily stock movements, and investors can use these rules when making investment decisions. The Boolean reasoning discretization algorithm can be used in conjunction with rough sets to enhance the prediction process. The rough set dependency rules are created from the reducts generated by the data analysis. The rough confusion matrix is a technique in rough set theory for appraising the performance of the predicted reducts and classes. The rough set approach is reported to be a highly reliable prediction technique, with prediction accuracy of over 97% and more compact rules.

Rough sets and data mining: Rough set theory helps in identifying hidden patterns in data. It also helps in identifying cause-effect relationships and thus in eliminating redundant data. The analysis of approximations yields concepts such as relevant primitive concepts, similarity measures between concepts, and operations for construction. Using the rough set approach, the following problems associated with these steps can be eliminated.

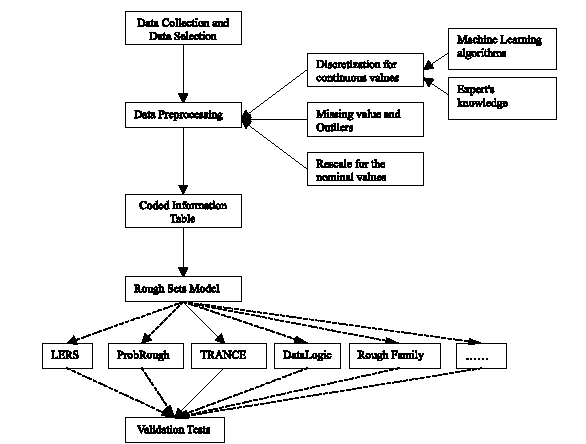

For example, with rough set theory, stock forecasting is carried out using historical time series data. The historical data are collected and analyzed, and relationships are built to produce a system that helps to predict the future. The time series may contain conflicting and redundant data, and the rough set model can be used to deal with such contradictory and superfluous records. Here, data collected from the Toronto stock exchange are analyzed with the rough set model because the data are uncertain and redundant. The knowledge discovery in databases process is used for analyzing the missing data. The Rough Set Data Analysis Model has three main steps. Data preparation is the first stage and includes data cleaning, completeness and correctness checks, attribute creation, attribute selection, and discretization. Rough set analysis is the second stage, which generates decision rules. Validation is the third stage, which confirms and filters the knowledge in the data set. The data used for the study are ten years of stock market time series from the Toronto stock exchange.

Data Preparation: A decision table has to be created for successful analysis of the data, and this is done during data preparation. The task includes data conversion, data cleansing, data completion checks, conditional attribute creation, decision attribute generation, discretization of attributes, and data splitting. Technical analysis techniques such as moving average convergence/divergence, price rate of change, and relative strength index are used to derive the conditional attributes. The decision attribute is derived from the closing price of the stock and takes the value -1, 0, or 1. An equal frequency algorithm is used for attribute discretization. The data for the study consist of 2210 objects with twelve attributes.
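Two of the preparation steps, equal frequency discretization and decision attribute generation, can be sketched as follows. The exact rule for deriving -1, 0, or 1 from the closing price is not given in the text; the version here (sign of the next day's change, with an optional threshold) is an assumption, as are the function names and sample values.

```python
def equal_frequency_cuts(values, bins):
    """Cut points that place roughly the same number of values in each bin."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[(k * n) // bins] for k in range(1, bins)]


def discretize(value, cuts):
    """Index of the interval that `value` falls into (0 is the lowest interval)."""
    return sum(1 for cut in cuts if value >= cut)


def decision_attribute(closes, threshold=0.0):
    """Assumed labelling: 1 if the next close rises, -1 if it falls, 0 otherwise."""
    labels = []
    for today, tomorrow in zip(closes, closes[1:]):
        change = tomorrow - today
        if change > threshold:
            labels.append(1)
        elif change < -threshold:
            labels.append(-1)
        else:
            labels.append(0)
    return labels


# Made-up indicator values and closing prices.
cuts = equal_frequency_cuts([5, 1, 9, 3, 7, 2], bins=3)
binned = [discretize(v, cuts) for v in [5, 1, 9, 3, 7, 2]]
labels = decision_attribute([10, 11, 11, 9])
```

With six values and three bins, each interval receives two values, which is what distinguishes equal frequency from equal width discretization.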

Rough set analysis: Preliminary information is obtained through approximation, reduct and core generation, and classification. Decision rules are then generated from the data. In this step, reducts are obtained from a subset of the original data and rules are generated from them. The tool used for obtaining reducts is the Rosetta Rough Set Toolkit. A filter is applied to keep only the rules that meet a minimum support threshold, which produces a subset of decision rules.

From the 2210 original decision rules, ten decision rules are generated by keeping the average distribution of grouping from ten objects. An example of a rule obtained from the reduct is the following.

- Rule 1: IF MA5 = 1 and Price ROC = [*, -1.82045) and RSI = [*, 37.17850) THEN Decision3 = 1 or 0
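Rule 1 can be read as a test on a single object of the decision table. The sketch below assumes that `[*, a)` denotes an interval with no lower bound that excludes `a`, and that "1 or 0" means the rule allows either decision; the dictionary layout and the function name are hypothetical.

```python
import math

# Rule 1 encoded as one predicate per condition attribute.
# Assumption: "[*, a)" means every value strictly below a.
RULE_1 = {
    "conditions": {
        "MA5": lambda v: v == 1,
        "Price ROC": lambda v: -math.inf <= v < -1.82045,
        "RSI": lambda v: -math.inf <= v < 37.17850,
    },
    "decisions": {1, 0},  # "Decision3 = 1 or 0"
}


def rule_matches(rule, obj):
    """True if the object satisfies every condition of the rule."""
    return all(test(obj[name]) for name, test in rule["conditions"].items())


# Made-up objects for illustration.
falling_fast = {"MA5": 1, "Price ROC": -2.5, "RSI": 30.0}
falling_slow = {"MA5": 1, "Price ROC": -1.0, "RSI": 30.0}
matches = [rule_matches(RULE_1, falling_fast), rule_matches(RULE_1, falling_slow)]
```

The second object fails only the Price ROC condition, which shows that all conditions of the rule must hold together.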

Validation: In this stage, the preliminary results are verified for accuracy by comparing the decision rules against the validation data set. Rules that show similar measures in the analysis and validation sets, and are therefore stable and accurate, are selected. In this way a subset of knowledge is obtained.

Result analysis: The results can be analyzed by dividing the 2210 objects into an analysis set of 1200 objects and a validation set of 1010 objects. There are two decision classes: a combination of decrease and neutral, and a combination of increase and neutral. The combination of increase and decrease is empty. Rules combining neutral with either increase or decrease help to decide whether a large gain or loss is likely. Ten rules could be formulated, and some are as follows:

- Rule 1: rule with measurement of analysis and validation sets.

- Rule 2: analysis set higher than the validation set.

- Rule 3: analysis set lower than validation set.

After the rules are formed, the strongest rules are found by comparing how the rules respond to the data. A ranking system is used to analyze the rules: individual rules are ranked according to their strength and stability. A strong rule is one with low rank values for support, accuracy, change in support, and change in accuracy. The worst rule is the one with high rank values. Lastly, the selected rules can be verified and applied for predicting the stock market (Herbert and Yao).
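The ranking idea can be sketched as a sum of per-measure ranks. How the individual ranks are combined is not specified in the text, so summing them is an assumption, as are the direction of each measure (higher support and accuracy are better, smaller changes between the analysis and validation sets are better) and all the numbers below.

```python
def rank_rules(rules):
    """Order rule names from strongest to weakest by their summed ranks."""
    criteria = [
        ("support", True),           # higher is better
        ("accuracy", True),          # higher is better
        ("support_change", False),   # smaller change between sets is better
        ("accuracy_change", False),  # smaller change between sets is better
    ]
    totals = {name: 0 for name in rules}
    for key, higher_is_better in criteria:
        ordered = sorted(rules, key=lambda n: rules[n][key], reverse=higher_is_better)
        for position, name in enumerate(ordered, start=1):
            totals[name] += position
    return sorted(totals, key=totals.get), totals


# Made-up measures for three hypothetical rules.
rules = {
    "rule1": {"support": 120, "accuracy": 0.80, "support_change": 5, "accuracy_change": 0.02},
    "rule2": {"support": 90, "accuracy": 0.85, "support_change": 30, "accuracy_change": 0.10},
    "rule3": {"support": 60, "accuracy": 0.70, "support_change": 40, "accuracy_change": 0.15},
}
order, totals = rank_rules(rules)
```

A rule that is slightly less accurate but far more stable across the two sets, such as `rule1` here, ends up with the lowest total and is therefore treated as the strongest.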

Rough set prediction models: RSPM

Rough set prediction model involves three distinct phases. They are as follows:

- Pre processing phase: This phase involves activities such as adding and computing extra variables, assigning decision classes, collecting, classifying, sorting, and analyzing the data, completing and correcting the data variables, creating attributes, and selecting and discretizing attributes. The decision table is created by performing data conversion, data cleansing, data completion checks, etc. The whole set is divided into two subsets: a data set containing 75% of the objects and a validation set containing 25% of the objects. The rough set tools are then applied to the converted data.

- Analysis and rule generating phase: This is the second phase of the rough set prediction model. It involves developing the preliminary knowledge: computing reducts from the data, deriving rules from the reducts, assessing the rules, and finally the prediction process. It operates on the decision table produced by the data conversion, data cleansing, and data completion checks of the pre-processing phase.

From the data set, the important attributes are extracted by eliminating redundant attributes, that is, attributes whose removal does not affect the degree of dependency of the remaining data. Redundant conditional attributes should be removed from the decision table in order to reduce its complexity. After the redundant attributes are removed, the rule set is generalized through the rough set value reduction model. Finally, concise rules are generated by removing the redundant values.

- Classification and prediction phase: This is the final stage of the RSPM, and it uses the rules developed in the second phase to predict stock exchange movements. A reduct is transformed into a rule by binding the condition feature values. The training set is used to generate the rules, which then act as the actual classifiers for predicting new objects. Finally, a voting mechanism is used to choose the best-matching decision value.
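The voting step of the classification phase can be sketched as follows. The sketch assumes that every rule whose conditions match a new object casts a vote for its decision value, and that each vote is weighted by the rule's support in the training set; the weighting scheme, the attribute names, and the rules themselves are hypothetical illustrations rather than the scheme used in the cited model.

```python
from collections import Counter


def matches(conditions, obj):
    """A rule matches when every condition attribute has the required value."""
    return all(obj.get(attr) == value for attr, value in conditions.items())


def predict_by_voting(rules, obj, default=None):
    """Each matching rule votes for its decision, weighted by its support."""
    votes = Counter()
    for conditions, decision, support in rules:
        if matches(conditions, obj):
            votes[decision] += support
    if not votes:
        return default  # no rule fires for this object
    return votes.most_common(1)[0][0]


# Hypothetical rules: (conditions, decision value, support in the training set).
example_rules = [
    ({"ma_trend": "up", "volume": "high"}, "increase", 12),
    ({"ma_trend": "up"}, "neutral", 5),
    ({"volume": "high"}, "increase", 4),
    ({"ma_trend": "down"}, "decrease", 9),
]

new_object = {"ma_trend": "up", "volume": "high"}
print(predict_by_voting(example_rules, new_object))
```

Three rules fire for the example object; "increase" collects a weight of 16 against 5 for "neutral", so it is the decision value chosen.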

In the pre-processing phase, data splitting is carried out to form two subsets of objects. The specific rough set tools are then applied to the converted data.

Data completion and discretization of attributes with continuous values

Missing values are very common in data sets. In the rough set model, missing values can affect the data mining, and the rules it produces can be unduly distorted by them. The data completion procedure removes such defects from the mined rules: under this procedure, objects with one or more missing values are discarded entirely. Incomplete data and information systems can also be filled in through data completion, for which various completion methods are applied in the pre-processing stage. Filling in values, however, may distort the original data and knowledge and so negatively affect the mining of the original data; decomposition tools are effective in overcoming this problem. The importance of different attributes varies. Prior experience and knowledge about the significance of the attributes can be used to assign adequate weights to each attribute. In the rough set approach, however, information external to the information table is not used in classification: only the available data are used for decision making, and their degree of significance is not considered.

Discretization is applied to ensure reliable data classification. In the discretization process, cuts are applied to divide the data into intervals, and all values that fall within the same interval are mapped to the same value. This reduces the size of the attribute value set and allows a more general, approximate treatment of the data.
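A minimal sketch of these two pre-processing steps is given below. It assumes data completion by removal (objects with a missing value are dropped) and discretization by fixed cuts. The cut points of -1.0 and 1.0 on the daily percentage change, the labels, and the sample rows are hypothetical choices made only for illustration.

```python
from bisect import bisect_right


def drop_incomplete(rows):
    """Data completion by removal: keep only objects with no missing value."""
    return [row for row in rows if all(v is not None for v in row.values())]


def discretize(value, cuts, labels):
    """Map a continuous value to the label of the interval it falls in.

    `cuts` must be sorted; a value equal to a cut goes to the upper interval.
    """
    if len(labels) != len(cuts) + 1:
        raise ValueError("need exactly one more label than cuts")
    return labels[bisect_right(cuts, value)]


# Hypothetical cuts on the daily percentage change of a stock price.
CUTS = [-1.0, 1.0]
LABELS = ["decrease", "neutral", "increase"]

rows = [
    {"day": 1, "change": -2.5},
    {"day": 2, "change": None},   # missing value: this object is discarded
    {"day": 3, "change": 0.3},
    {"day": 4, "change": 4.2},
]
complete = drop_incomplete(rows)
coded = [discretize(r["change"], CUTS, LABELS) for r in complete]
print(coded)
```

After this step the continuous attribute takes only three values, which is what keeps the later decision table small.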

Reducts

A reduct is a minimal subset of attributes that preserves the degree of dependency. In a decision table, the core and the reducts are computed in order to choose the relevant attributes. Conditional attributes that carry no additional information about the objects should be eliminated to reduce the complexity and cost of the decision making process. A decision table may have more than one reduct. The best reduct is selected on the basis of a cost function or the minimum number of attributes, and it then replaces the original attribute set. In the analysis and rule generating phase, the relevant attributes are extracted and reduced: redundant variables are removed from the attributes without affecting the degree of dependency. (Al-Qaheri, Hassanien, and Abraham).
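The idea of a reduct can be made concrete with a small brute-force sketch. It assumes the classical degree of dependency, that is, the share of objects whose condition values determine the decision unambiguously, and it enumerates attribute subsets exhaustively, which is feasible only for very small tables. The decision table and attribute names are hypothetical.

```python
from itertools import combinations


def partition(rows, attrs):
    """Group object indices that are indiscernible on the given attributes."""
    blocks = {}
    for i, row in enumerate(rows):
        blocks.setdefault(tuple(row[a] for a in attrs), []).append(i)
    return list(blocks.values())


def dependency(rows, cond_attrs, decision):
    """Share of objects lying in blocks with a single decision value."""
    consistent = 0
    for block in partition(rows, cond_attrs):
        if len({rows[i][decision] for i in block}) == 1:
            consistent += len(block)
    return consistent / len(rows)


def reducts(rows, cond_attrs, decision):
    """Minimal attribute subsets that keep the full degree of dependency."""
    full = dependency(rows, cond_attrs, decision)
    found = []
    for size in range(1, len(cond_attrs) + 1):
        for subset in combinations(cond_attrs, size):
            if any(set(r) <= set(subset) for r in found):
                continue  # contains a smaller reduct, so it is not minimal
            if dependency(rows, subset, decision) == full:
                found.append(subset)
    return found


# Hypothetical decision table: "momentum" duplicates the information in "trend".
table = [
    {"trend": "up", "volume": "high", "momentum": "pos", "move": "increase"},
    {"trend": "up", "volume": "low", "momentum": "pos", "move": "neutral"},
    {"trend": "down", "volume": "high", "momentum": "neg", "move": "decrease"},
    {"trend": "down", "volume": "low", "momentum": "neg", "move": "neutral"},
]
CONDITIONS = ["trend", "volume", "momentum"]
print(reducts(table, CONDITIONS, "move"))
```

The table has two reducts, (trend, volume) and (volume, momentum). Their intersection, volume, is the core: the one attribute that no reduct can do without.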

The rough set approach can be used to identify and analyze trading rules that discriminate between bullish and bearish conditions in the stock market. Rough set theory is significant for extracting trading rules in stock exchanges because it helps to determine dependencies among the attributes while reducing the effect of superfluous content in noisy data.

The above figure shows the procedure for predicting the economic and financial condition using rough set model.

Variable Precision Rough Set Model

Rough set theory is based on the derivation, optimization and analysis of decision tables developed from the data. The variable precision rough set model (VPRSM) is a direct generalization of the Pawlak rough set model. The main objective of rough set theory is to develop and analyze approximate definitions of sets that cannot be defined exactly. These approximate definitions, in the form of a lower approximation and a boundary area of the set, allow objects to be classified with varying degrees of certainty. The lower approximation allows membership to be determined with certainty, while the boundary area contains the objects whose membership in the decision attribute set is uncertain. The VPRSM extends these ideas parametrically: the positive region is the part of the data set where the degree of certainty of an object’s membership in a set is relatively high, and the negative region is the part where that degree of certainty is relatively low.

Conditional probabilities are used to develop the decision criteria in the VPRSM. The model takes the prior probability of the decision set over all collected data, denoted P(X), as the reference value for decision making. P(X) is the probability of occurrence of X in the absence of any attribute-based information. In terms of the attribute values of sets of the universe U, the sets of interest are assumed to be the decision categories X ∈ U/D. The model uses two precision control parameters, the lower limit and the upper limit. The lower limit l, with 0 ≤ l < P(X) < 1, is the highest acceptable degree of the conditional probability P(X|E) for including the elementary set E in the negative region of the set X. The upper limit u, with 0 < P(X) < u ≤ 1, is the lowest acceptable degree of the conditional probability P(X|E) for including the elementary set E in the positive region of the set X.

“The l-negative region of the set X, denoted NEG_l(X), is defined by:

$$\mathrm{NEG}_l(X) = \bigcup \{E : P(X \mid E) \le l\} \tag{1}$$

The l-negative region of the set X is a collection of objects for which the probability of membership in the set X is significantly lower than the prior probability P(X). The u-positive region of the set X, POS_u(X), is defined as

$$\mathrm{POS}_u(X) = \bigcup \{E : P(X \mid E) \ge u\} \tag{2}$$

The u-positive region of the set X is a collection of objects for which the probability of membership in the set X is significantly higher than the prior probability P(X). The objects which are not classified as being in the u-positive region nor in the l-negative region belong to the (l, u)-boundary region of the decision category X, denoted as

$$\mathrm{BNR}_{l,u}(X) = \bigcup \{E : l < P(X \mid E) < u\} \tag{3}$$

” (Peters, Skowron, and Rybiński, 447).

The boundary region contains the objects whose probability of belonging to the decision category X is neither high enough for inclusion nor low enough for exclusion. The VPRSM reduces to the standard rough set model when l = 0 and u = 1.
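The three regions can be computed directly once the conditional probability P(X|E) of each elementary set is known. The sketch below assumes the thresholds are inclusive (P(X|E) ≤ l for the negative region and P(X|E) ≥ u for the positive region), so that the three regions partition the universe and the choice l = 0, u = 1 recovers the standard rough set regions. The elementary set names and probabilities are hypothetical.

```python
def vprsm_regions(cond_prob, l, u):
    """Split elementary sets into positive, negative and boundary regions.

    cond_prob maps the name of each elementary set E to P(X|E).
    """
    if not 0 <= l < u <= 1:
        raise ValueError("thresholds must satisfy 0 <= l < u <= 1")
    positive = {e for e, p in cond_prob.items() if p >= u}
    negative = {e for e, p in cond_prob.items() if p <= l}
    boundary = {e for e, p in cond_prob.items() if l < p < u}
    return positive, negative, boundary


# Hypothetical conditional probabilities P(X|E) for five elementary sets.
probs = {"E1": 0.10, "E2": 0.50, "E3": 0.90, "E4": 1.0, "E5": 0.0}

print(vprsm_regions(probs, l=0.3, u=0.7))   # variable precision regions
print(vprsm_regions(probs, l=0.0, u=1.0))   # standard rough set regions
```

With l = 0.3 and u = 0.7 only E2 remains in the boundary region; with l = 0 and u = 1 the boundary grows to E1, E2, and E3, because only fully certain elementary sets are then accepted into the positive or negative region.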

Variable Precision Rough Set Model

The Variable Precision Rough Set Model is used in intelligent information retrieval. In typical intelligent information retrieval methodologies, the VPRSM is presented through a combination of rough sets and fuzzy sets. In this methodology, the documents are represented as fuzzy index terms while the queries are defined through a rough set. Each document or data set must have an approximation in the defined set. The experimental outcomes are presented in comparison with the vector space model.

The Variable Precision Rough Set Model has grown popular in contemporary data retrieval, a domain shaped by the overwhelming spread of the Internet and the World Wide Web. The three classic models for retrieving information are the Boolean, the vector, and the probabilistic models. The Variable Precision Rough Set Model is a generalized version of Pawlak's rough sets that retains all the basic mathematical properties of the original rough set model. Rough set theory presupposes that the universe under consideration is known and that all conclusions derived from the model apply exclusively to that universe. In practice, however, data retrieval requires conclusions obtained from a smaller group of representative data to be generalized to a much larger population. The VPRSM allows a controlled proportion of misclassification. The point to emphasize is that a partly incorrect classification rule still provides valuable trend or pattern information about future test cases, provided that the majority of the available data to which the rule applies can be classified correctly.

Classification analysis has been the weak point of the original rough set theory. Some degree of incorrect classification has to be tolerated because real-world data are always polluted with noise and other defects.

This model was developed by W. Ziarko as a generalization aimed particularly at dealing with uncertain data.

The model is a development of the original rough set model that requires no additional hypotheses. It builds upon the original rough set design by using the statistical information contained in partial and vague data. The VPRSM is defined within a universe of objects of interest U. The underlying assumption of the VPRSM is that objects are represented in terms of some observable properties linked to predetermined domains. These properties are known as attributes.

The Variable Precision Rough Set Model has also been tailored for inductive logic programming (ILP), a research area that has developed at the confluence of logic programming and machine learning. ILP uses background knowledge together with positive and negative examples to induce a logic program that describes the examples. Rough set theory defines an indiscernibility relation for cases where particular subsets of examples cannot be distinguished. In this paradigm, a concept is rough if it contains at least one such indistinguishable subset that holds both positive and negative examples. The Variable Precision Rough Set (VPRS) model is a generalized framework of rough sets that retains all the basic mathematical properties of the original rough set framework. Rough set theory supposes that the universe under consideration is known and that all conclusions obtained from the model apply only to that universe. In practice, however, there is an apparent need to generalize conclusions obtained from a limited set of examples to a much larger population, and the VPRSM meets this need by allowing a regulated and moderate degree of misclassification.

In the model, the properties (called attributes) are finite-valued functions that assign distinct property values to the individual objects. The attributes are classified into the following groups:

The condition attributes a ∈ C, which stand for the “input” information about objects, that is, information that can be obtained by the available means. This information is used to develop models for predicting the values of the decision attributes.

The decision attributes d ∈ D, which stand for the prediction target. It can be assumed that there is only one binary-valued decision attribute with two values vd1 and vd0, which partition the universe into two categories: the set X, which stands for the prediction target (vd1), and its complement U − X (vd0). The VPRSM presupposes knowledge of an equivalence relation known as the indiscernibility relation IND(C), where C is the collection of condition attributes used to represent objects of the domain of interest U. The indiscernibility relation represents the pre-existing knowledge about the universe of interest U, expressed by the identity of the values of the condition attributes C of the objects. In the variable precision model, each equivalence class E of the relation IND(C) (called an atom or C-elementary set) is associated with two measures:

The first is the probability P(E) of the elementary set, normally estimated from the available data by P(E) = card(E′)/card(U′), where card denotes set cardinality and U′ ⊆ U, E′ ⊆ E are the finite subsets of the domain corresponding to the available collection of sample data. The second is the conditional probability P(X|E), the probability that an object of the C-elementary set E also belongs to the set X. It is normally estimated by the relative degree of overlap between the sets X and E in the available data, i.e. P(X|E) = card(X′ ∩ E′)/card(E′), where X′ ⊆ X, E′ ⊆ E are the finite subsets of the domain corresponding to the available collection of sample data.
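Both estimates are simple counts over the sample, as the following sketch shows. It groups the sample objects into C-elementary sets by their condition attribute values and returns the estimated pair (P(E), P(X|E)) for each. The function name, attribute names, and the eight sample rows are hypothetical.

```python
from collections import defaultdict


def estimate_probabilities(rows, cond_attrs, decision, target):
    """Estimate (P(E), P(X|E)) for every C-elementary set found in the sample.

    P(E)   = card(E') / card(U')
    P(X|E) = card(X' ∩ E') / card(E')
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [card(E'), card(X' ∩ E')]
    for row in rows:
        key = tuple(row[a] for a in cond_attrs)
        counts[key][0] += 1
        if row[decision] == target:
            counts[key][1] += 1
    total = len(rows)
    return {key: (size / total, hits / size) for key, (size, hits) in counts.items()}


# Hypothetical sample of eight objects with one condition attribute.
sample = (
    [{"trend": "up", "move": "increase"}] * 3
    + [{"trend": "up", "move": "neutral"}]
    + [{"trend": "down", "move": "increase"}]
    + [{"trend": "down", "move": "neutral"}] * 3
)
print(estimate_probabilities(sample, ["trend"], "move", "increase"))
```

Here each of the two elementary sets covers half of the sample, and the target category "increase" has an estimated conditional probability of 0.75 when the trend is up and 0.25 when it is down.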

The asymmetric VPRSM generalization of the original rough set model is obtained by using the values of the probability function P together with lower and upper certainty threshold parameters l and u such that 0 ≤ l < P(X) < u ≤ 1. The VPRSM is symmetric if l = 1 − u. The parameter u defines the u-positive region, or u-lower approximation, of the set X in the VPRSM. The value of u is the lowest acceptable degree of the conditional probability P(X|Ei) for including the elementary set Ei in the positive region, or u-lower approximation, of the set X. It can be regarded as a quality threshold on the probabilistic information associated with the elementary sets Ei: only those elementary sets with sufficiently high information quality are included in the positive region.

Probabilistic Dependencies in Hierarchical Decision Table

There are numerous ways of defining the dependencies that hold between attributes. Pawlak’s works explored functional and partial functional dependencies [30], referred to as γ-dependencies. The probabilistic generalization of these dependencies was defined and examined in the framework of the VPRSM [53].

The probabilistic generalization, called λ-dependency, concerns the average degree of change in the certainty of occurrence of the decision category X relative to its prior probability P(X). It is expressed in terms of the certainty gain measure.

Linear hierarchical decision table

Decision tables are widely applied in fields such as business forecasting, circuit design, and stock market forecasting. Classical decision tables are static in nature because they lack the ability to learn automatically and adapt their structure on the basis of new information.

Decision tables for the classification of collected data were developed by Pawlak. In Pawlak's view, such decision tables have a dynamic structure and can therefore adjust to new information. They can thus be used extensively for reasoning from data in data mining, machine learning, and complex pattern recognition.

They can be used effectively to predict future values of the target decision attribute. Along with these advantages, they have certain drawbacks. First, the computation in a decision table is based on a subset of all possible objects; the table may have an excessive decision boundary, which can lead to erroneous predictions, and poor-quality subset variables may produce poor-quality decision attributes. Second, a decision table is incomplete in nature. Hierarchical decision tables were developed to overcome these fundamental difficulties. In hierarchical decision tables, forecasting is based on the hierarchical structure instead of individual tables, subject to learning complexity constraints.

The linear hierarchy of decision tables is built by taking into account the condition attributes and their values. Reducing the condition attributes on each layer makes a significant reduction of the total decision boundary possible.

The quality of the hierarchy of decision tables as a potential classifier of newly observed data is comparatively higher. In this model, the functional dependency between the attributes and its probabilistic observations are measured. (Peters, Skowron, and Rybiński, 445).

Pawlak developed rough decision tables on the basis of rough set theory. A rough decision table captures the broad, non-functional relationship between two groups of object attributes: the condition attributes and the decision attributes. Rough decision tables are learned from data and applied for decision making. To attain better coverage of the collected data, the tables may be structured into hierarchies. To attain better convergence of the learned hierarchy towards a stable structure for decision making, complete regeneration of the learned structure is avoided when new objects from outside the hierarchy are added. Instead, the structure of the rough decision tables is updated through an incremental learning process after new objects are added. (Ziarko).

In the rough set approach, the available set of attributes is used to determine the decision attributes. “The basic concept of RST is the notion of approximate space, which is an ordered pair A = (U, R) Where:

- U: nonempty set of objects, called universe;

- R: equivalence relation on U, called indiscernibility relation. If x, y ∈ U and xRy then x and y are indistinguishable in A.” (Rough Set Theory – Fundamentals and an Overview of its Main Applications).

In the rough set model, the equivalence classes induced by R form the quotient set R~ = U/R, and each element of this set is called an elementary set in A.

Stock market movements can be predicted by analyzing past movements. Rough set theory is a reliable and accurate prediction model applicable in the financial and investment fields. In past years, research has been carried out on the utility of rough sets for the development of trading systems. Ziarko et al. and Golan and Edwards adopted the rough set model to identify and analyze strong trading rules consisting of highly repetitive data patterns. The basic concept under this model is that knowledge can be extracted from daily stock movements for decision making.

The rough set model consists of approximate spaces and models of sets and concepts. The rough set decision table is prepared by presenting the data sets in rows and columns. The rows represent the objects and the columns represent the features of the data set.

Probabilistic decision tables

The probabilistic decision table represents the probabilistic relation between the condition and decision attributes through a fixed-size set of probabilistic rules corresponding to the rows of the table. Probabilistic decision tables are widely applied for prediction or decision making in fields where there is a largely non-deterministic relation between the condition and decision attributes. The degree of probabilistic dependency between any elementary set and the decision can be evaluated on the basis of the probabilistic information. This degree of dependency measure is called the absolute certainty gain, and it represents the degree of influence that the occurrence of an elementary set E has on the likelihood of the decision category X. It provides the basis for computing the conditional λ-dependency in a hierarchical arrangement of probabilistic decision tables.
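A small sketch can show how such a dependency measure might be computed from a probabilistic decision table. It assumes that the absolute certainty gain of an elementary set is |P(X|E) − P(X)|, that the expected gain is its P(E)-weighted average, and that the λ-dependency normalizes the expected gain by 2·P(X)·(1 − P(X)), which is the value the expected gain reaches when every elementary set determines X with certainty. This normalization is an assumption of the sketch and should be checked against the cited VPRSM sources; the table rows are hypothetical.

```python
def prior(table):
    """P(X) recovered from rows of (P(E), P(X|E))."""
    return sum(p_e * p_x_e for p_e, p_x_e in table)


def expected_certainty_gain(table):
    """Average of |P(X|E) - P(X)| weighted by P(E)."""
    p_x = prior(table)
    return sum(p_e * abs(p_x_e - p_x) for p_e, p_x_e in table)


def lambda_dependency(table):
    """Expected gain normalized by its assumed maximum 2 P(X) (1 - P(X))."""
    p_x = prior(table)
    if not 0 < p_x < 1:
        raise ValueError("P(X) must lie strictly between 0 and 1")
    return expected_certainty_gain(table) / (2 * p_x * (1 - p_x))


# Hypothetical probabilistic decision tables: rows of (P(E), P(X|E)).
partial = [(0.5, 0.75), (0.5, 0.25)]        # conditions shift the odds
deterministic = [(0.4, 1.0), (0.6, 0.0)]    # conditions decide X exactly
independent = [(0.5, 0.3), (0.5, 0.3)]      # conditions carry no information

for name, tbl in [("partial", partial), ("deterministic", deterministic),
                  ("independent", independent)]:
    print(name, round(lambda_dependency(tbl), 4))
```

Under these assumptions the measure ranges from 0, when the condition attributes carry no information about X, to 1, when they determine X completely; the partially informative table falls in between.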

Result analysis

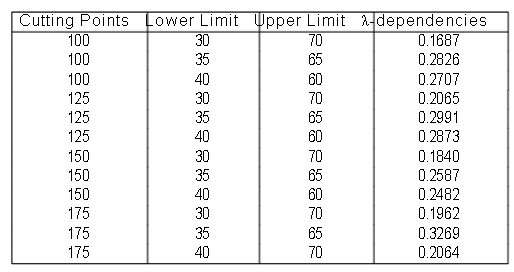

Using a normalized factor, the dependencies for all objects are calculated. The cutting points used for the calculation were 100, 125, 150, and 175. Each cutting point has three pairs of control limits, 30 and 70, 35 and 65, and 40 and 60, where 30, 35, and 40 are the lower control limits and 70, 65, and 60 are the upper control limits. From the results obtained from the hierarchical decision table, the relative degree of improvement for the different cutting points and parameter values can be summarized as follows:

The above table shows which control limits have the highest dependencies for each cutting point. We can draw the following conclusions:

- For the cutting point 100, the lower and upper control limits 35 and 65 give the highest peak outcome, 0.2826.

- For the cutting point 125, the lower and upper control limits 35 and 65 give the highest peak outcome, 0.2991.

- For the cutting point 150, the lower and upper control limits 35 and 65 give the highest peak outcome, 0.2587.

- For the cutting point 175, the lower and upper control limits 35 and 65 also give the highest peak outcome, 0.3269.

- For the lower and upper control limits 30 and 70, the cutting point 125 gives the highest peak outcome, 0.2065.

- For the lower and upper control limits 35 and 65, the cutting point 175 gives the highest peak outcome, 0.3269.

- For the lower and upper control limits 40 and 60, the cutting point 125 gives the highest peak outcome, 0.2873.

Stock market investment is subject to fluctuations as a result of changes in market conditions. These fluctuations cannot be predicted accurately, because they depend on unexpected movements in the economic and general environment. To make secure and profitable investment decisions, long-term analysis of real market fluctuations is therefore essential. Technical analysis of stock market movements is useful for making decisions on the basis of analyzed results: it helps potential investors to forecast future trends in the stock market and thus to take better investment decisions.

Technical analysis rests on the assumption that history repeats itself and that future market direction can be determined by examining past price levels. Hundreds of indicators are in use today, and new indicators are continually being devised. Technical analysis software programs come with dozens of indicators built in and allow users to create their own. The concept of technical analysis is that share prices move in trends dictated by market and economic conditions, which constantly change the actions of investors. Using inputs such as price, volume, and open interest statistics, the technical analyst uses charts and graphs to predict future movements of the market and of particular stocks. Statistics, technical analysis, fundamental analysis, and linear regression are all tools an investor can use to predict the market in general and stocks in particular, in order to benefit by buying and selling at the right times. These techniques have not proved consistent at all times and have provided mixed results as far as prediction of the market is concerned, and many analysts warn against relying on many of the approaches. However, they are commonly used in practice and provide a base-level standard upon which an investor can decide on a course of action. Despite its widespread use, technical analysis is criticized for its highly subjective nature: different individuals can interpret the same charts in different ways. A variety of technical indicators are derived from chart analysis, and these can be formalized into trading rules or used as inputs to guide investors. They include filter indicators, momentum indicators, and volume indicators, as well as analyses such as trend line analysis, wave analysis, and pattern analysis. All of these provide short- or long-term information, help identify trends or cycles in the market, or indicate the strength of the stock price using support and resistance levels.

For example, the moving average is a technical indicator. It averages stock prices over a period of time, which makes the underlying trends more visible. Several trading rules based on the moving average have been developed. Investors usually treat a closing price that moves above a moving average as a buy signal. The most important criticism of such indicators is that they often give false signals and lag the market. That is, since a moving average is an estimate based on past prices, a trader misses much of the opportunity before the appropriate trading signal is generated. Thus, although technical analysis gives valuable insights into the market, it is highly subjective in nature and has inherent time delays. (Lawrence).
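The buy rule and its lag can be seen in a short sketch. It assumes a simple (unweighted) moving average and a buy signal on the first day the closing price moves from at or below the average to above it. The window length of three days and the price series are hypothetical.

```python
def simple_moving_average(prices, window):
    """Average of the last `window` prices; None until enough data exists."""
    if window <= 0:
        raise ValueError("window must be positive")
    averages = [None] * len(prices)
    running = 0.0
    for i, price in enumerate(prices):
        running += price
        if i >= window:
            running -= prices[i - window]  # drop the price leaving the window
        if i >= window - 1:
            averages[i] = running / window
    return averages


def buy_signals(prices, window):
    """Indices where the close crosses from at/below the average to above it."""
    sma = simple_moving_average(prices, window)
    signals = []
    for i in range(1, len(prices)):
        if sma[i - 1] is None:
            continue
        if prices[i - 1] <= sma[i - 1] and prices[i] > sma[i]:
            signals.append(i)
    return signals


# Hypothetical closing prices: a decline that bottoms out on day 3.
closes = [10, 9, 8, 7, 8, 10, 11]
print(simple_moving_average(closes, window=3))
print(buy_signals(closes, window=3))
```

The price reaches its low of 7 on day 3, but the signal only appears on day 4, after the price has already started to recover. This is the time delay the criticism refers to, and a longer window would delay the signal further.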

An indicator for analysis has to be selected carefully and used in moderation. Popular market indices that help to forecast market direction include the Dow Jones Industrial Average and the S&P 500. These market indices are among the most commonly used in the financial world and cover a large sector of the market.

S&P 500: The S&P 500 covers a large sector of the stock market and represents the most extensively traded stocks. This market index reflects the stock price movements of larger companies. It is a more accurate indicator of stock price movements.

Dow Jones Industrial Average: It represents the stocks of larger companies only, so changes in the market prices of small and mid-sized companies are completely ignored by this average. It is a price-weighted market index. Changes in the market are reflected in the Dow Jones Industrial Average. Structural changes in stock market indices influence the market movements they reflect. A single market index is not enough to predict stock market movements in future years; a combination of different market indices has to be considered. This helps the investor to make a more accurate prediction of market movements and to gain better awareness of the influencing factors. (Bigalow).

In analyzing the security and profitability of a stock market investment, both the investment return and the market return have to be calculated. The investment return is the sum of the EPS growth and the dividend yield that can be expected from investment in a certain stock. The market return includes, along with the EPS growth and dividend yield, the speculative return from the investment. Thus the speculative return is the difference between the market return and the investment return from investment in a security. When taking investment decisions, the investor has to consider the speculative return. Identifying the difference between the investment return and the stock market return makes it possible to analyze the long-term movements in the stock market and to anticipate the otherwise unpredictable short-term movements within it. For long-term investment, the long-term movements in the stock market have to be analyzed. Unpredictable boom and bust conditions in the stock market do not affect long-term investments in the same way. Taking the long-term view helps the investor to avoid the critical conditions that arise from market manipulation and to profit over the long term. The shares of a well functioning company tend to show a rising trend, even in times of market recession. The changes in the stock price should be better controlled.
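The decomposition of returns can be written out as a short worked example. It assumes the additive relation described here, in which the market return is the investment return (EPS growth plus dividend yield) plus the speculative return. The function names and the percentages are hypothetical.

```python
def investment_return(eps_growth, dividend_yield):
    """Return attributable to the business itself."""
    return eps_growth + dividend_yield


def speculative_return(market_return, eps_growth, dividend_yield):
    """Part of the market return not explained by the investment return."""
    return market_return - investment_return(eps_growth, dividend_yield)


# Hypothetical annual figures for one stock, expressed as fractions.
eps_growth = 0.06
dividend_yield = 0.02
market_return = 0.11

print(round(investment_return(eps_growth, dividend_yield), 4))
print(round(speculative_return(market_return, eps_growth, dividend_yield), 4))
```

In this illustration 8 percentage points of the 11% market return come from the business and the remaining 3 points are speculative. A speculative component that is large relative to the investment return is the kind of gap the text suggests watching when screening for manipulation.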

Market manipulation

An investor needs to understand market movements under different business conditions, how they are reflected in the market indices, and how to interpret them. This is a part of the technical analysis of the stock market, and it helps to identify the potential trend in the stock market.

The following are the general conditions leading to market manipulation:

- “Rosy analyst recommendations

- One time profitable events

- Overanxious speculators.” (Pierce).

The policies and actions of the government have a direct influence on stock price movements in the market. Over the long run, the real business gains of a company are reflected in its shareholders’ gains. The relationship between interest rates and stock market movements is indirect. A reduction of interest rates in the economy can influence the stock market positively. An interest rate cut supports people who wish to borrow money, but it negatively affects lenders and bond investors by reducing the return from lending. For rational investors, decreasing interest rates are an incentive to move from the bond market to the equity market. This benefits businesses that issue stock, because more funds are available from investors. Their future earning potential improves, and a rising trend in stock prices can follow. Thus lower interest rates improve the financial position of business firms through the availability of relatively cheap financial resources. “Overall, the unifying effect of an interest rate cut is the psychological effect it has on investors and consumers; they see it as a benefit to personal and corporate borrowing, which in turn leads to greater profits and an expanding economy.” (Investment Question).

The market reflects all information that is available and prevalent at any point of time through its price. The market price is the sum total of the emotions of all participants; interest rates, inflation, international happenings, reported earnings, projected revenues, general elections, new product launches, and anything else one can think of are already priced into the market. It is said that even the unexpected is included in the pricing. Over any period of time, all events of the past, present and future are priced into the market. This does not mean that future events or prices can be predicted by mere analysis of market movements. Predicting the future of the market accurately is an impossible task, no matter how deep the analysis is. As conditions change or events occur, the market adjusts through its prices to reflect the new information.

This idea is useful for the technical analysis of individual stocks, because studying the price movements of a particular stock provides more important insights than other factors such as the profile of the top management or the balance sheet of the company. Dow Theory, however, is more applicable to analyzing the trends of broad markets than those of individual stocks. In this way, a prospective investor could make an investment decision by looking at the movement of the Dow market indices. If the investor sees a primary upward trend, the investor could decide to buy individual stocks at fair values for long-term appreciation.

The technical analysis of securities and individual stocks uses Dow Theory to find the long-term direction of the markets. According to Dow Theory, this direction, called the primary trend, is unaffected by the prices of individual stocks, manipulation, and unexpected happenings. Even if short-term reverse trends occur every now and then, the upward or downward trend of the market will continue until it reaches a certain level.

Technical Indicators & forecasting the market direction

Identification of stock market manipulation versus normal stock market movement.

To distinguish market manipulation from normal stock market movement, investors have to identify the part of the return on an investment that is speculative, termed the speculative return. A stock market investment is subject to unpredictable short-term movements. Predicting the price of an individual stock would require detailed information about the movements of that particular stock. The prediction can be made on a probability basis.

Understanding stock market movement

Investing in the stock market requires research and analysis of stock price movements. There are three main decision-making scenarios in long-term stock market investment. In buy decisions, the investor needs to allocate funds among the investment options currently available, such as equity and income securities, depending on the investment objectives. Within each category of security, adequate quality measures should be selected. In sell decisions, rational profit targets have to be fixed for each security in the portfolio. In times of severe market downturns, risky investment options should be avoided. Identifying the market forces that shrink the market value of securities is important for better control of investment decisions. In hold decisions, strong equities and income securities are to be selected in order to exploit the normal cash flow from the securities. When securities are in a weak market position, it is better to hold on to them until market conditions change and offer better positions (Selengut).

Three theories are considered here: genetic algorithm theory, rough set theory and neural network theory. Genetic algorithm theory is a part of evolutionary computing, which is a rapidly growing area of artificial intelligence. Computer programs create an environment in which a population of candidate solutions can compete. A genetic algorithm is a search method that can be used both for solving problems and for modeling evolutionary systems.

In the context of genetic algorithms, optimization is the art of selecting the best alternative from a given set of options. Every optimization problem has an objective function that depends on a set of variables. Genetic algorithms are well suited to optimization tasks and are highly effective in situations where many variables interact to produce a large number of possible outputs.

Genetic algorithms have both advantages and disadvantages. A genetic algorithm can quickly scan a large solution set. Bad proposals do not affect the end solution negatively, as they are simply discarded. Genetic algorithms do not have to know any rules of the problem; they work by their own internal rules. This is very useful for complex or loosely defined problems. Genetic algorithms have drawbacks too. The great advantage of a genetic algorithm is that it finds a solution through evolution. This is also its biggest disadvantage. Evolution is inductive. In nature, life does not evolve towards a good solution; it evolves away from bad circumstances. This can cause a species to evolve into an evolutionary dead end. Likewise, genetic algorithms risk finding a suboptimal solution. Consider an example in which a genetic algorithm must find the highest point in a landscape. The algorithm will favor solutions that lie on a peak (a hill or the like). As the individuals gradually begin to propose solutions in the same vicinity (somewhere on the peak), they begin to look alike. In the end, the individuals may be almost identical. The best of these suggests the top of the peak as the solution. But what if there is another, higher peak at the other end of the map? It is too hard for the individuals to venture away from their current peak. The ones that do will be eliminated because their solutions are worse than the ones already found. An individual might get “lucky”, but that would mean its “genes” are very different from those of the rest of the population, so this is unlikely. In other words, the algorithm produces a suboptimal solution and we do not even know it.
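The landscape example can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than a method taken from the sources: the two-peak fitness function, the population size, the averaging crossover and the mutation width are all hypothetical choices. The population is deliberately started near the lower peak so that the dead-end behavior becomes visible.

```python
import math
import random

def fitness(x):
    # Hypothetical landscape: a lower peak near x = 2 and a higher peak near x = 8.
    return math.exp(-(x - 2.0) ** 2) + 2.0 * math.exp(-(x - 8.0) ** 2)

def run_ga(seed=0, pop_size=30, generations=60, sigma=0.1):
    rng = random.Random(seed)
    # Assumption: the initial population happens to lie around the lower peak.
    population = [rng.uniform(0.0, 4.0) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the fitter half, discard the bad proposals.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            # Crossover (average of two parents) followed by a small mutation.
            child = (a + b) / 2.0 + rng.gauss(0.0, sigma)
            children.append(min(10.0, max(0.0, child)))
        population = survivors + children
    return max(population, key=fitness)

best = run_ga()
print("best x:", round(best, 2))
print("fitness found:", round(fitness(best), 2))
print("fitness of the higher peak:", round(fitness(8.0), 2))
```

Under these assumptions the population settles on the lower peak, because any individual that wanders into the valley between the peaks is discarded at the next selection step. Larger mutations or a more widely spread initial population are the usual ways to reduce this risk.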

Genetic algorithms were invented by John Holland and developed by him together with his students and colleagues.

Rough set theory has become a popular theory in the field of data mining. Its advantages are as follows. Rough set theory is mathematically relatively simple. Despite this, it has proven fruitful in a variety of data mining areas, among them information retrieval, decision support, machine learning and knowledge-based systems. A wide range of applications use the ideas of this theory: medical data analysis, aircraft pilot performance evaluation, image processing and voice recognition are a few examples. Another advantage of rough set theory is that it requires neither assumptions about the independence of the attributes nor any background knowledge about the data.

The data themselves also have drawbacks that must be considered alongside rough set theory. Data used for mining sometimes contain imperfections, such as noise, unknown values or errors due to inaccurate measuring equipment. Rough set theory comes in handy for dealing with these types of problems, as it is a tool for handling the vagueness and uncertainty inherent in decision situations.
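How rough sets handle such uncertainty can be sketched with the lower and upper approximations of a target set. The decision table below is invented purely for illustration (the attribute names `trend`, `volume` and `rise` are hypothetical). Objects with identical attribute values are indiscernible, and two of them carry conflicting outcomes, which is the kind of imperfection described above.

```python
from collections import defaultdict

def indiscernibility_classes(table, attributes):
    # Group objects that have identical values on the chosen attributes.
    classes = defaultdict(set)
    for obj, row in table.items():
        classes[tuple(row[a] for a in attributes)].add(obj)
    return list(classes.values())

def approximations(table, attributes, target):
    lower, upper = set(), set()
    for block in indiscernibility_classes(table, attributes):
        if block <= target:   # block lies entirely inside the target set
            lower |= block
        if block & target:    # block overlaps the target set
            upper |= block
    return lower, upper

# Hypothetical toy data: d1 and d2 look the same but have different outcomes.
table = {
    "d1": {"trend": "up", "volume": "high", "rise": True},
    "d2": {"trend": "up", "volume": "high", "rise": False},
    "d3": {"trend": "up", "volume": "low", "rise": True},
    "d4": {"trend": "down", "volume": "low", "rise": False},
}
target = {day for day, row in table.items() if row["rise"]}
lower, upper = approximations(table, ["trend", "volume"], target)
print("certainly rising:", sorted(lower))
print("possibly rising:", sorted(upper))
```

The lower approximation holds the objects that certainly belong to the target set, the upper approximation those that possibly belong to it. The difference between the two is the boundary region, where the available attributes cannot decide.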

A comparison between rough set theory and neural network theory gives a clear idea of how the two differ. A classifier based on rough set theory operates in a highly discretized feature space. This is an important consequence of the nature of knowledge representation in the theory of rough sets. A neural network, by contrast, processes information taken from a continuous feature space. The paper deals with the issues that arise when these two types of feature space coexist in one pattern recognition problem. In particular, these issues are illustrated in the system used for recognition. Feature extraction is performed with a holographic ring wedge detector, which generates the continuous features.

Data Mining Business Solutions

There is a wide range of data mining business solutions, and each of them can be used in a wide range of applications. Many eminent researchers have worked to modify these solutions to improve their results and predictions, sifting through large masses of information that may shed light on the activities of the stock exchange. The very unpredictability and volatility of the movements of shares and instruments on the stock exchange ensures that data mining must continue and that the results and predictions related to stock transactions must keep improving.

It is essential that the right attributes are selected in order to achieve good results. Financial time series prediction is one possible area of application for the Rough Set Method (RSM). Using the techniques of this model, one can still improve the prediction result by setting the required parameters: the cutting point, the upper limit and the lower limit. Once these parameters are set, it remains to be seen whether RSM can make the right inroads into forecasting stock exchange price movements.

With the rough set model, the operational parameters can be stretched to their limits, which is undesirable, so a safer and less risky option is needed. Two options come to mind to fill this gap: the Variable Precision Rough Set (VPRS) and the Linear Hierarchical Decision Table (LHDT). The VPRS model could be used at all levels of the LHDT to optimize the performance and results of implementing the theory. Optimizing the design and the forecasting process involves several critical components, including the choice of a suitable mathematical solver and the correct determination of the upper and lower limits of the stock analysis design.
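The idea behind VPRS can be sketched as a relaxation of the classical approximations: an indiscernibility class is accepted into the lower approximation when a sufficient share of it, rather than all of it, falls inside the target set. The sketch below uses a simplified formulation with a single precision threshold `beta`, assumed to lie in the range 0.5 < beta <= 1. The function name, the threshold value and the data are illustrative assumptions, not the parameters of the model discussed here.

```python
def vprs_approximations(blocks, target, beta=0.8):
    # Assumption: 0.5 < beta <= 1; beta = 1 reduces to the classical rough set case.
    lower, upper = set(), set()
    for block in blocks:
        share = len(block & target) / len(block)  # fraction of the block in the target
        if share >= beta:
            lower |= block   # "almost certainly" in the target set
        if share > 1 - beta:
            upper |= block   # cannot be ruled out at this precision
    return lower, upper

# Hypothetical indiscernibility classes and target set.
blocks = [{1, 2, 3, 4, 5}, {6, 7}, {8}]
target = {1, 2, 3, 4, 6}

print(vprs_approximations(blocks, target, beta=0.8))
print(vprs_approximations(blocks, target, beta=1.0))
```

With the threshold at 1.0 the first class is excluded from the lower approximation because one of its five objects lies outside the target set. Lowering the threshold to 0.8 tolerates that single exception, which is how VPRS copes with a small amount of noise.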

The analysis design developed here, which uses RST and its extensions, aims to establish a comparative improvement in stock market projections. The required parameters can be set in such a way that the model techniques improve the projection results. The present RST models have to be refined further to address emerging challenges in statistics and related bodies of knowledge in which forecasting and data mining methods are decisive and in serious use.

Future work may focus on ways in which RST models can be used to deal with doubt, indecision and ambiguity. Complementary research should focus on the contributions and validity of RSM in decision support and pattern recognition. Upcoming work would also need to examine the relationship between RST and other theoretical designs such as fuzzy set theory and Dempster-Shafer (DS) evidence theory. This would help to overcome the multiple challenges that impede the use of the model despite its solid mathematical premise. It is widely believed that such theories are bound to end up in propositions.

However, stock exchanges today are subject to sudden and unexpected developments that may not be amenable to prediction, so that corrective steps may be needed after the fact. If stock exchange movements could be forecast with even a reasonable degree of accuracy, the world economy might not have taken the battering that has brought it almost to its lowest ebb, in an economic meltdown among the worst in recorded history.

If credible theories could predict the future of financial and stock market operations, or even provide early warning signals of falling stock prices or other malfunctions, the economy might not have been hit as badly as it has been, and the economic order would have been more controlled.

Again, the emergence of data mining and computational techniques such as neural networks, fuzzy sets, evolutionary algorithms and rough set theory has paved the way for their wider use in financial and economic research (Al-Qaheri, Hassanien, and Abraham).

The main focus of this paper has been to present a stock price prediction model that could give investors knowledge and useful information on a daily basis and guide them on whether to buy, sell or hold stocks.

In this case, a rough set with Boolean reasoning discretization algorithm is used to discretize the data and make it suitable for achieving the objectives. Rough set reduction is then carried out to arrive at the final reducts of the data, that is, the minimal subsets of attributes that are associated with a class label used for prediction.
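The notion of a reduct can be illustrated with a brute-force sketch. It assumes a small, already discretized and consistent decision table, and it searches all attribute subsets by size, which is only feasible for a handful of attributes. The attribute names and rows are hypothetical, and the exhaustive search stands in for, rather than reproduces, the Boolean reasoning approach mentioned above.

```python
from itertools import combinations

def is_consistent(rows, attributes, decision):
    # True if rows that agree on `attributes` never disagree on the decision.
    seen = {}
    for row in rows:
        key = tuple(row[a] for a in attributes)
        if seen.setdefault(key, row[decision]) != row[decision]:
            return False
    return True

def reducts(rows, conditions, decision):
    found = []
    for size in range(1, len(conditions) + 1):
        for subset in combinations(conditions, size):
            # Skip supersets of a reduct already found: they are not minimal.
            if any(set(r) <= set(subset) for r in found):
                continue
            if is_consistent(rows, subset, decision):
                found.append(subset)
    return found

# Hypothetical discretized daily records.
rows = [
    {"momentum": "high", "volume": "high", "gap": "up", "action": "buy"},
    {"momentum": "high", "volume": "low", "gap": "up", "action": "buy"},
    {"momentum": "low", "volume": "high", "gap": "down", "action": "sell"},
    {"momentum": "low", "volume": "low", "gap": "up", "action": "hold"},
]
print(reducts(rows, ["momentum", "volume", "gap"], "action"))
```

In this toy table no single attribute determines the action, but two different pairs of attributes do, so the table has two reducts. Decision rules can then be read off the rows restricted to either pair.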

Rough set dependency rules are derived directly from all generated reducts. A rough confusion matrix is used to evaluate the performance of the predicted reducts and classes. The results of rough sets are compared with those of neural networks. RST is found to be an acceptable method for forecasting stock exchange price movements.
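A confusion matrix for the three decisions can be sketched as follows. This is an ordinary confusion matrix, used here as a simplified stand-in for the rough confusion matrix mentioned above, and the actual and predicted labels are invented for illustration only.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    # Rows are actual classes, columns are predicted classes.
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def accuracy(matrix):
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0

# Hypothetical decisions for six trading days.
labels = ["buy", "hold", "sell"]
actual = ["buy", "buy", "hold", "sell", "sell", "hold"]
predicted = ["buy", "hold", "hold", "sell", "buy", "hold"]

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(label, row)
print("accuracy:", round(accuracy(matrix), 3))
```

The diagonal counts the correct predictions and the off-diagonal cells show which decisions are confused with which. Computing the same matrix for a neural network on the same days would give a like-for-like basis for the comparison.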

Rough set theory has gained momentum and has been widely recognized as a viable and intelligible tool for data mining and knowledge discovery (Abraham, 13).

Its application has been sought in economic, financial and investment areas and its usefulness has been validated in the following areas:

- Database mining

- Rough set theory and business failures caused by external or internal sources

- Financial and investment decisions.

Database mining

The main idea behind the use of RST in data mining is to look for common matching patterns among existing profiles and to target the behavioral aspects of customers. It also rests on the assumption that organizational issues of costs and revenues can be addressed through the proper use of data mining, with the empirical use of RST significantly reducing costs and enhancing revenues.

Rough set theory and business failures

Practical applications of RST indicate that business failures have certain traits or patterns. By identifying and discerning such patterns, it is possible to avoid future business losses and failures (Abraham, 13).

Again, business failures can result from a plethora of causes: longstanding financial problems, heavy debts, top management incompetence, stakeholders’ actions, creditors’ actions, governmental regulations that create permanent issues for business operations, and so on. The list may be almost endless, but RST may be useful in detecting the pattern and frequency of issues that impinge upon business prospects and, where possible, in initiating action that addresses those issues efficiently and effectively. RST could also be used in combination with other theories to determine ways in which the adverse aspects of a business could be controlled, if not cured, giving it another lease of life during which losses could be recouped and its fortunes turned around. Thus, RST in combination with other useful theories may be instrumental in ensuring that stock valuation can be used to anticipate stock transactions and that glitches are identified before they cause major damage.

Financial and investment decisions

RST can be seen in the context of making correct, evidence-based assessments of critical financial and investment decisions. The main task lies in forecasting qualitative and quantifiable data with respect to future impacts. For example, it may be necessary to predict whether a company’s shares will move upwards or downwards over a period of 1 month, 3 months, 6 months or even a year. Short-, medium- and long-term stock movement assessments need to be made, especially given volatile and seemingly unpredictable movements over time. While no rational basis for such movements may be apparent, it is often felt that statistical determination, especially with models like RST, could provide a fundamental basis for resolving such difficulties.
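Before any model can forecast upward or downward movement over a given horizon, historical prices have to be turned into labeled examples. The sketch below shows one simple way of doing so. The function name, the tolerance band and the price series are hypothetical, and the horizon is counted in observations rather than calendar months.

```python
def label_movements(prices, horizon, tolerance=0.0):
    # Label each observation by the relative price change `horizon` steps ahead.
    labels = []
    for i in range(len(prices) - horizon):
        change = (prices[i + horizon] - prices[i]) / prices[i]
        if change > tolerance:
            labels.append("up")
        elif change < -tolerance:
            labels.append("down")
        else:
            labels.append("flat")  # movement too small to count either way
    return labels

# Hypothetical closing prices.
prices = [100, 102, 101, 105, 104, 99]
print(label_movements(prices, horizon=2, tolerance=0.02))
```

Running the same function with several horizons yields separate short-, medium- and long-term decision attributes, which could then serve as the class column of a decision table.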