A basic understanding of how social media platforms work and how people use social media sites reveals the need for more computer hardware to satisfy a growing demand for computing power. A computer system must meet at least two major requirements to function properly and support a cloud platform. First, the hardware must be capable of running a particular operating system (OS). Second, the system must interpret the commands and requests that users make through the OS. Consequently, a greater demand for functional computer systems requires the purchase of more hardware. Virtualization was developed to meet these requirements and the increasing demand for computing power without the cost of buying ever more hardware.

The Essence of Virtualization

In a nutshell, virtualization comes from the root word “virtual,” meaning the simulated appearance of an object or idea. For example, when people talk about “virtual reality,” they are discussing the simulation of a real-world setting, such as a person’s bedroom or living room. When images of these areas are displayed, one can see objects and shapes that resemble the actual rooms. However, these are virtual, or simulated, images of the rooms. In the book Virtualization Essentials, Portnoy describes virtualization as a technology that enables the user to create simulated environments or dedicated resources from a single computer system (Portnoy, 2015). Red Hat, a company known for its enterprise software, describes virtualization as the use of software that connects directly to the hardware and splits the system into separate units known as virtual machines, or VMs (Red Hat, Inc., 2017, par. 1). The VMs are not only separate but also distinct from one another, so that each VM is a self-contained system.

The separation and distinctness of each VM explain the cost-efficiency of virtualization. Each VM functions as if it had a dedicated computer system, yet several VMs operate side by side on a single physical machine. According to Matthew Portnoy, businesses favor this configuration, especially when they are not yet sure how many servers a project will need (2015). For example, if a company takes on new projects, it can meet its additional server needs with virtual servers rather than by purchasing new physical servers. Used appropriately, virtualization therefore enables a business or corporation to make the most of its resources.

The Virtualization of Computer Hardware

The need for virtualization arose from the original design of the computer system, or personal computer. As a simplified illustration, consider the form and function of a typical personal computer. A basic system requires a combination of hardware and software. The software handles commands from the user and then uses the hardware to perform the necessary calculations. In this configuration, one person typing on a keyboard elicits a response from the hardware. Because the computer is dedicated to that one user, no one else can access it at the same time.

Virtualizing computer hardware requires software known as a hypervisor. The hypervisor gives the user or system administrator a mechanism for sharing the resources of a single hardware device. As a result, several students, engineers, designers, and other professionals can use the same server or computer system. This setup makes sense, because one person rarely uses the full computing power of a single machine. An ordinary user without a network administrator’s expertise can work on the shared machine as though it were a dedicated computer with its own processor. Sharing the computing capability of a single machine in this way lets the system reach its full potential.
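One way to picture this sharing is as bookkeeping over a single machine’s resources. The sketch below is a simplified, hypothetical model: the `Host` and `VM` classes and all of the numbers are illustrative assumptions, not any hypervisor’s API. Each VM is granted a slice of CPU cores and memory, and the host refuses any request that would exceed what the physical machine has.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A VM sees only the resources granted to it, as if they were dedicated."""
    name: str
    vcpus: int
    ram_gb: int


@dataclass
class Host:
    """Hypothetical model of one physical machine shared by several VMs."""
    cores: int
    ram_gb: int
    vms: list = field(default_factory=list)

    def free_cores(self) -> int:
        return self.cores - sum(vm.vcpus for vm in self.vms)

    def free_ram_gb(self) -> int:
        return self.ram_gb - sum(vm.ram_gb for vm in self.vms)

    def create_vm(self, name: str, vcpus: int, ram_gb: int) -> VM:
        # Refuse any request the physical hardware cannot cover
        # (this toy model never overcommits CPU or memory).
        if vcpus > self.free_cores() or ram_gb > self.free_ram_gb():
            raise RuntimeError(f"not enough capacity for {name}")
        vm = VM(name, vcpus, ram_gb)
        self.vms.append(vm)
        return vm


# One 8-core, 32 GB machine shared by three users (illustrative numbers).
host = Host(cores=8, ram_gb=32)
for user in ["student", "engineer", "designer"]:
    host.create_vm(user, vcpus=2, ram_gb=8)
print(host.free_cores(), host.free_ram_gb())  # 2 8
```

Real hypervisors are more flexible than this. Many allow CPU or memory to be overcommitted, which the sketch deliberately leaves out.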

It is important to point out that virtualization is not used only to reduce operating costs. According to Red Hat, virtualization creates separate, distinct, and secure virtual environments (Red Hat, Inc., 2017, par. 1). In other words, administrators and ordinary users who adopt this technology gain two additional advantages. First, distinct VMs make it easier to isolate and study errors or problems in the system. Second, separate VMs make it easier to identify the system’s vulnerabilities and the source of external attacks (Portnoy, 2015). Therefore, adopting virtualization is a practical choice in terms of safety, ease of management, and cost-efficiency.

The Virtualization of the Central Processing Unit or CPU

Special software known as a hypervisor makes it possible to virtualize a computer hardware system. The hypervisor’s specific target is the computer’s central processing unit, or CPU. Once in place, the hypervisor decouples the OS from the specific CPU it was previously tied to. This allows multiple guest operating systems to run on the same machine (Cvetanov, 2015). Without this step, the CPU serves a single OS, because a traditional setup has a one-to-one relationship between the OS and the CPU. For example, a rack server requires an OS to function as a web server, so building twenty web servers requires purchasing twenty machines. By placing a hypervisor between the hardware and the operating systems, one can obtain the benefits of twenty web servers using the resources of only one rack server. However, the success of this layout depends on the capacity of the RAM and the processors. The performance of the VMs is therefore limited by the host’s CPU and memory.
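The dependence on RAM and processors can be made concrete with a little arithmetic. The sketch below assumes that every web server VM needs the same fixed amount of CPU and memory, and that some resources are set aside for the hypervisor and host. All sizes are hypothetical, and no overcommitment is allowed. The number of VMs that fit is limited by whichever resource runs out first.

```python
def max_vms(host_cores, host_ram_gb, vm_vcpus, vm_ram_gb,
            reserved_cores=0, reserved_ram_gb=0):
    """Return how many identical VMs fit on one host without overcommitting.

    The binding constraint is whichever resource (CPU or RAM) runs out first.
    """
    by_cpu = (host_cores - reserved_cores) // vm_vcpus
    by_ram = (host_ram_gb - reserved_ram_gb) // vm_ram_gb
    return min(by_cpu, by_ram)


# Hypothetical rack server and web-server VM sizes (illustrative numbers).
fits = max_vms(host_cores=32, host_ram_gb=96, vm_vcpus=1, vm_ram_gb=4,
               reserved_cores=2, reserved_ram_gb=8)
print(fits)        # 22: memory, not CPU, is the limit here
print(fits >= 20)  # True: twenty web servers fit on this one machine
```

In this example the CPU alone would allow 30 VMs, but memory allows only 22. This is why the quality of the VMs depends on the host’s hardware.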

Specialized software such as the hypervisor primarily functions as a tool that manipulates the computer’s CPU so that it can share its processing power among multiple VMs. CPUs were not originally designed to share their resources among multiple virtual machines. However, in the article “How Server Virtualization Works,” Strickland points out that CPU manufacturers are now designing CPUs that are ready to work with virtual servers (Strickland, 2017). One can argue that these cutting-edge features magnify the advantages of virtualized environments. On the other hand, poorly planned strategies for virtualizing CPUs can lead to severe performance problems.
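A common way to let one CPU serve several VMs is time slicing: each VM runs for a short interval, then yields the processor to the next. The following is a generic round-robin sketch with hypothetical VM names and workloads. It is not the scheduling algorithm of any particular hypervisor, and real schedulers also account for priorities, multiple cores, and I/O waits.

```python
from collections import deque


def round_robin(work_ms: dict, slice_ms: int) -> list:
    """Simulate one physical CPU time-sliced among several VMs.

    work_ms maps each VM name to the CPU time it still needs.
    Returns the order in which VMs ran, as (vm, ms_used) tuples.
    """
    queue = deque(work_ms.items())
    timeline = []
    while queue:
        vm, remaining = queue.popleft()
        used = min(slice_ms, remaining)
        timeline.append((vm, used))
        if remaining > used:
            # Not finished: go to the back of the queue for another turn.
            queue.append((vm, remaining - used))
    return timeline


schedule = round_robin({"vm1": 25, "vm2": 10, "vm3": 15}, slice_ms=10)
print(schedule)
```

Each VM receives the CPU in turn. Because the slices are short, each guest appears to have a processor of its own.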

It is imperative to ensure that VMs operate flawlessly. One can argue that a major criterion for successful CPU virtualization is a system that functions the same way it did before its processor was shared by multiple VMs. In other words, users should be unable to tell the difference between an ordinary computer and one whose resources are shared through virtualization. Portnoy stresses the importance of choosing the virtualization technology that best fits the organization’s needs. Three of the most popular virtualization technologies on the market are Xen, VMware, and QEMU (Portnoy, 2015). The following sections describe how network administrators use Xen’s hypervisor.

Organizing a Xen Virtual Machine

Xen’s virtualization software package directs the hypervisor to interact with the hardware’s CPU. In Getting Started with Citrix XenApp, Cvetanov notes that the hypervisor manipulates the CPU’s scheduling and memory partitioning to manage the multiple VMs that use the hardware device (Cvetanov, 2015). To organize a Xen virtual machine, the network administrator must have extensive knowledge of at least three major components: the Xen hypervisor, Domain 0, and Domain U. Although the importance of the hypervisor has been outlined earlier, it is useless without Domain 0, also known as Domain zero (Dom0) or simply the host domain (Cvetanov, 2015). Without Domain 0, Xen’s virtual machines will not function correctly. As the host domain, Domain 0 initiates the process and paves the way for managing Domain U, known as DomU or the unprivileged domains (Cvetanov, 2015). Once Domain 0 is running, users of the VMs can access the resources and capabilities of the hardware device.
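The scheduling role described by Cvetanov can be illustrated with a proportional-share calculation. In this scheme each domain is assigned a weight, and CPU time is divided in proportion to those weights. The sketch is loosely inspired by the per-domain weights that Xen’s credit scheduler uses, but it is a simplification and not Xen’s actual algorithm. The domain names, weights, and time window are hypothetical.

```python
def cpu_shares(weights: dict, total_ms: int) -> dict:
    """Split total_ms of CPU time among domains in proportion to their weights."""
    total_weight = sum(weights.values())
    shares = {d: total_ms * w // total_weight for d, w in weights.items()}
    remainders = {d: total_ms * w % total_weight for d, w in weights.items()}
    leftover = total_ms - sum(shares.values())
    # Largest-remainder rounding: hand the leftover milliseconds to the
    # domains whose exact share was rounded down the most.
    for d in sorted(weights, key=remainders.get, reverse=True)[:leftover]:
        shares[d] += 1
    return shares


# Hypothetical weights: Domain 0 gets twice the weight of each guest.
print(cpu_shares({"Domain-0": 512, "guest1": 256, "guest2": 256}, total_ms=40))
# {'Domain-0': 20, 'guest1': 10, 'guest2': 10}
```

Using weights rather than fixed slices lets an administrator favor the host domain, or an important guest, while still guaranteeing every domain some CPU time.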

Xen’s Domain 0 is a unique virtual machine with two primary functions. First, it has special privileges to access the CPU’s resources and other parts of the computer hardware. Second, it interacts with the other VMs, especially those classified as PV and HVM Guests (Cvetanov, 2015). Once the host domain is up and running, the unprivileged VMs can make requests to it, such as requests for network support and local disk access. Domain 0 handles these requests through its Network Backend Driver and Block Backend Driver.

No Domain U VM has direct access to the physical hardware. There are two types of VMs under the Domain U label: Domain U PV Guests and Domain U HVM Guests. A Domain U PV Guest is designed to be aware of its limited access to the physical hardware. It also knows that other VMs share the same hardware device. This awareness is built into two critical drivers that PV Guests use for network and disk access: the PV Network Driver and the PV Block Driver. Domain U HVM Guests, on the other hand, cannot detect that they are running on a setup that shares hardware resources.
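The difference between the two guest types can be pictured as two paths to the same backend. In this toy model, every class is hypothetical and none corresponds to real Xen code. The paravirtualized (PV) guest knowingly calls the backend through its own driver. The HVM (hardware virtual machine) guest issues what it believes is an ordinary hardware disk write, which is intercepted and translated by a device emulator. In real Xen systems this emulation is typically performed by a QEMU-based device model, but the sketch models none of that machinery.

```python
class Dom0Backend:
    """Stands in for Domain 0's Block Backend Driver (toy model)."""

    def __init__(self):
        self.disk = {}

    def write_block(self, block: int, data: bytes, source: str) -> str:
        self.disk[block] = data
        return f"block {block} written for {source}"


class PVGuest:
    """Paravirtualized guest: aware it is virtualized, so its PV Block
    Driver sends requests straight to the backend."""

    def __init__(self, name, backend):
        self.name, self.backend = name, backend

    def write(self, block, data):
        return self.backend.write_block(block, data, f"{self.name} (PV driver)")


class DeviceEmulator:
    """Hypothetical emulated disk controller that turns an HVM guest's
    'hardware' writes into backend calls."""

    def __init__(self, backend):
        self.backend = backend

    def trap(self, guest, block, data):
        return self.backend.write_block(block, data, f"{guest} (emulated disk)")


class HVMGuest:
    """Unmodified guest: it believes it is writing to real hardware."""

    def __init__(self, name, emulator):
        self.name, self.emulator = name, emulator

    def write(self, block, data):
        # The guest issues a normal disk write; the emulator intercepts it.
        return self.emulator.trap(self.name, block, data)


backend = Dom0Backend()
pv = PVGuest("pv1", backend)
hvm = HVMGuest("hvm1", DeviceEmulator(backend))
print(pv.write(0, b"a"))   # block 0 written for pv1 (PV driver)
print(hvm.write(1, b"b"))  # block 1 written for hvm1 (emulated disk)
```

Both guests end up at the same backend. Only the PV guest knows it is sharing hardware, which is why it can take the shorter path.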

In simplified form, a Domain U PV Guest communicates with the Xen hypervisor through Domain 0. Suppose the guest makes a disk request to write a specific set of data to the local disk. The guest’s PV Block Driver receives the request, and the hypervisor directs the data to a specific area of local memory that is shared with the host domain. In Xen’s conventional design, requests then go back and forth between the PV Block Driver and the Block Backend Driver in Domain 0 through an “event channel,” which is a less than ideal process. However, recent changes enable a Domain U Guest to access local hardware without going through the host domain.
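The combination of shared memory and an event channel can be sketched as a ring buffer plus a notification flag. The guest writes requests into a fixed-size ring and signals once. Domain 0 then drains the ring after receiving the signal. This single-threaded toy loosely follows the producer/consumer-index idea behind Xen’s I/O rings. All class names are hypothetical, and real Xen rings also return responses, share pages through grant tables, and require memory barriers, none of which is modeled here.

```python
class SharedRing:
    """Toy fixed-size ring in memory shared by a guest and Domain 0.

    The producer (guest) advances `prod`; the consumer (Dom0) advances
    `cons`. Indices only grow; the slot used is index % size.
    """

    def __init__(self, size: int):
        self.slots = [None] * size
        self.size = size
        self.prod = 0
        self.cons = 0

    def full(self) -> bool:
        return self.prod - self.cons == self.size

    def put(self, request) -> None:
        if self.full():
            raise BufferError("ring full")
        self.slots[self.prod % self.size] = request
        self.prod += 1

    def take(self):
        if self.cons == self.prod:
            return None  # nothing pending
        request = self.slots[self.cons % self.size]
        self.cons += 1
        return request


class EventChannel:
    """Toy notification: the sender marks an event pending, the receiver clears it."""

    def __init__(self):
        self.pending = False

    def notify(self) -> None:
        self.pending = True

    def consume(self) -> bool:
        was_pending, self.pending = self.pending, False
        return was_pending


ring, channel, disk = SharedRing(size=4), EventChannel(), {}

# Guest side (PV Block Driver): queue two writes, then signal once.
ring.put(("write", 7, b"hello"))
ring.put(("write", 8, b"world"))
channel.notify()

# Domain 0 side (Block Backend Driver): on notification, drain the ring.
if channel.consume():
    while (req := ring.take()) is not None:
        op, block, data = req
        disk[block] = data

print(disk)  # {7: b'hello', 8: b'world'}
```

The guest can batch several requests behind a single notification. This batching reduces how often control must pass between domains.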

An Example of a Virtual Environment

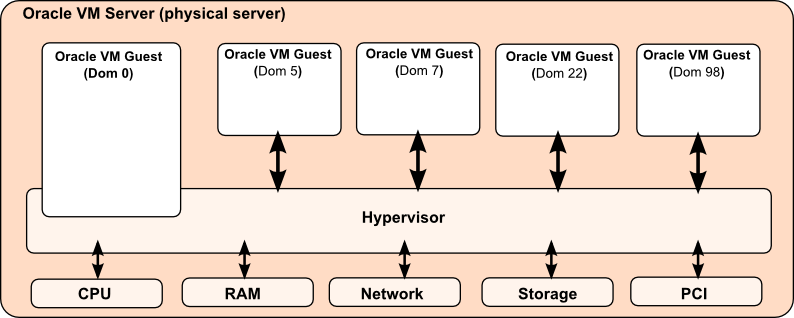

Oracle’s use of Xen technology to run a number of x86 servers provides an example of a virtual environment (Oracle Corporation, 2017). In this example, the hypervisor and the Domain 0 virtual machine work together like a lock and key. The Oracle VM labeled as Domain 0 initiates the process. The hypervisor then acts as the mediator that processes the other VMs’ requests to use the single computer’s CPU, RAM, network, storage, and PCI resources. The illustration shows how a single server becomes host to multiple VMs.

This configuration also reveals three additional benefits of virtual environments. First, the user benefits from consolidation. A network administrator can run several VMs on only one server, which reduces the need for floor space and the excess heat emitted by large numbers of servers crammed into one area (Strickland, 2017). Second, it gives the administrator the advantages of redundancy without spending money on several systems, because VMs make it possible to run the same application in different virtual environments (Strickland, 2017). As a result, when one virtual server fails to perform as expected, other systems prepared beforehand can run the same set of applications. Finally, network administrators can test an application or an operating system without buying another physical machine (Strickland, 2017). This is possible because each virtual server is independent of the other servers. If an unexpected error or bug in the OS leads to irreversible consequences, the problem stays isolated in that virtual server and does not affect the other VMs.
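The redundancy benefit can be sketched as simple failover. Several VMs run the same application, and each request goes to the first replica that is currently healthy. The VM names, the request string, and the idea of a health check that produces the `healthy` set are all hypothetical. A real deployment would use a load balancer or cluster manager for this.

```python
def route(request: str, replicas: list, healthy: set) -> str:
    """Send a request to the first healthy replica of an application.

    replicas: VM names running the same application, in preference order.
    healthy:  names of VMs currently passing a (hypothetical) health check.
    """
    for vm in replicas:
        if vm in healthy:
            return f"{request} -> {vm}"
    raise RuntimeError("no healthy replica available")


replicas = ["web-vm-a", "web-vm-b", "web-vm-c"]
print(route("GET /", replicas, healthy={"web-vm-a", "web-vm-b", "web-vm-c"}))
# web-vm-a fails; the same application keeps serving from web-vm-b.
print(route("GET /", replicas, healthy={"web-vm-b", "web-vm-c"}))
```

Because the replicas are separate VMs, the failure of one does not take the others down with it.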

Virtualization in Cloud Platforms

The advantages of VMs in terms of cost-efficiency, redundancy, consolidation, and security are magnified in the context of cloud platforms. Cloud platforms are physical computing resources provided by third parties, namely companies in the business of cloud computing. In simpler terms, an ordinary user does not need to buy his or her own storage facility to manage and secure important data. In the past, businesses and ordinary individuals had to acquire physical machines to store data. This old approach was expensive and risky, because users had to spend a great deal of money to acquire the physical devices and even more to maintain them. In addition, users had to invest in an appropriate facility to sustain the business operations that depended on those computer systems. Moreover, in the event of man-made disasters and other unforeseen events, the data stored on those devices could become inaccessible or useless. It is much better to be able to transmit and store critical data through a third-party service provider. However, without virtualization technologies, the provider operating the storage facility would face the same set of problems described earlier.

Cloud platforms rely on the same principles highlighted in the previous discussion. They were created to handle the tremendous demand for storage space and additional resources. The only difference is that the servers are not located in the company’s own building or in the building that houses its management information system. Instead, the servers that host the VMs are located in different parts of the country or the world. In this configuration the user does not have full control of the host computer or the VMs. Even so, the same principles that make virtualization cost-efficient, reliable, and secure still apply. Consider, for example, the requirements of companies such as Google and Facebook. Without virtualization, their demand for storage space and additional computing power would become unmanageable. With appropriate use of virtualization technology, however, multiple users can share resources when sending emails and accessing images stored on cloud platforms. Interestingly, users who access Gmail or Facebook are generally unaware that they are using a system of shared resources made possible by virtualization technology.

Conclusion

Virtualization technologies emerged from the recognition of the limitations of conventional computer design. In the old setup, a computer’s resources were dedicated to a single user. Yet typical usage does not require the full capacity of the system’s CPU, RAM, storage, and networking capability. Virtualization technologies allow these resources to be shared, maximizing the potential of a single computer system. This type of technology gives users the benefits of consolidation, redundancy, safety, and cost-efficiency. Its ability to create distinct and separate VMs has made it an indispensable component of cloud computing. As a result, network administrators, programmers, and ordinary users can build systems that run the same set of applications on multiple machines. It is now possible not only to multiply the capability of a single hardware configuration but also to test applications without fear of affecting other VMs that are performing critical operations.

References

Cvetanov, K. (2015). Getting started with Citrix XenApp. Birmingham, UK: Packt Publishing.

Oracle Corporation. (2017). 2.2.1.2 Xen. Web.

Portnoy, M. (2015). Virtualization essentials. Indianapolis, IN: John Wiley & Sons.

Red Hat, Inc. (2017). Understanding virtualization. Web.

Strickland, J. (2017). How server virtualization works. Web.