Abstract

The storage, access, manipulation, backup, and controlled access of data and information form the backbone of any information system. A full understanding of a system's architecture requires solid knowledge of information architecture, storage and access mechanisms and technologies, internet mechanisms, and systems administration. This knowledge is vital for procuring hardware and software for a large organization and for administering those systems effectively. This discussion first examines data storage, data access, and internet applications in detail. It then turns to systems administration for large organizations, and it ends by discussing how these concepts apply in large organizations.

File and Secondary Storage Management

Introduction

A file management system (FMS) is the collection of software, data access controls, and data manipulation functions that manage files stored in secondary storage. The operating system and database management systems sometimes share file management functions (Burd, 2008).

Components of a File System

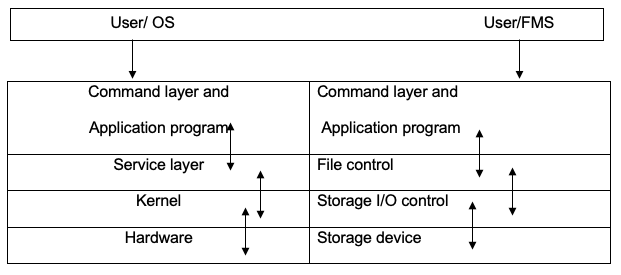

The figure above illustrates the functions of the operating system and the file management system as a set of layers, reflecting the layered structure that defines both systems.

At the physical level, the file structure represents how data is stored as bits, bytes, and contiguous blocks of storage. The operating system interacts with device controllers through device driver software. Data storage, access, and control are handled by the kernel, which manages transfers between memory and other storage locations (Thisted, 1997). The kernel is modular: it includes buffer and cache managers, device drivers that manage the input and output devices attached to the system, and modules that handle interrupts across the whole system architecture (Burd, 2008). Above this level, the FMS provides logical access mechanisms whose organization is independent of a file's physical structure. File contents are organized into different data structures and data types, which programs manipulate through the file manipulation functions built into the FMS at the design stage.
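The separation between logical access and physical storage can be illustrated with a minimal sketch. A program asks for a byte offset within a file, and the FMS translates that offset into one of the file's logical blocks and then into a physical block on the device. The sketch assumes a hypothetical 4,096-byte block size and a hypothetical per-file list of physical block numbers. Real file systems use more elaborate structures for this mapping.

```python
BLOCK_SIZE = 4096  # hypothetical allocation unit size in bytes


def logical_to_physical(offset: int, block_map: list[int]) -> tuple[int, int]:
    """Translate a byte offset within a file into (physical block, offset in block).

    block_map[i] holds the physical block number that stores the file's
    i-th logical block (a hypothetical per-file table kept by the FMS).
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    logical_block, within_block = divmod(offset, BLOCK_SIZE)
    if logical_block >= len(block_map):
        raise ValueError("offset lies beyond the file's last block")
    return block_map[logical_block], within_block


# A file whose three logical blocks are scattered across the disk.
file_blocks = [907, 12, 4410]
print(logical_to_physical(5000, file_blocks))  # (12, 904)
```

The program only sees offsets 0, 1, 2, and so on within the file. Where those bytes physically live is left entirely to the FMS.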

Directory Content and Structure

A directory is a table that stores information about files and other directories. In the UNIX file system, users can view and work with directory contents directly through command-line tools. Other systems keep these details hidden from users because the FMS manages all access to the directory (Burd, 2008).

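In a hierarchical structure of files and directories, each file and directory is identified by a unique name, and each directory's data structures point to the entries beneath it (Thisted, 1997). A file's access path is therefore the sequence of directory names from the root down to the file. UNIX and Windows write this path differently: UNIX separates names with forward slashes starting from a single root (/), whereas Windows uses backslashes and begins with a drive letter (such as C:\). Either form leads the user to one specific file (Burd, 2008).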
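The sketch below models a directory hierarchy as nested tables (Python dictionaries) and resolves an access path by walking from the root one name at a time. It uses the standard `pathlib` module to split a UNIX-style path and a Windows-style path into their components. The directory contents and file names are hypothetical, and the sketch is not how any particular file system stores its directories.

```python
from pathlib import PurePosixPath, PureWindowsPath

# Hypothetical directory tree: each directory is a table mapping names to
# either another directory (dict) or a file's contents (str).
root = {
    "home": {"user": {"report.txt": "quarterly figures"}},
    "etc": {"hosts": "127.0.0.1 localhost"},
}


def resolve(tree: dict, components: list[str]):
    """Follow path components from the root directory to the target entry."""
    node = tree
    for name in components:
        if not isinstance(node, dict) or name not in node:
            raise FileNotFoundError("/".join(components))
        node = node[name]
    return node


unix_path = PurePosixPath("/home/user/report.txt")
windows_path = PureWindowsPath(r"C:\home\user\report.txt")

# parts[0] is the root ("/" or "C:\\"); the remaining parts are the directory
# and file names, which are identical in both notations.
print(unix_path.parts)     # ('/', 'home', 'user', 'report.txt')
print(windows_path.parts)  # ('C:\\', 'home', 'user', 'report.txt')
print(resolve(root, list(unix_path.parts[1:])))     # quarterly figures
print(resolve(root, list(windows_path.parts[1:])))  # quarterly figures
```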

Storage Allocation

The FMS controls how data is stored in secondary storage by handling all input and output to files and directories. Storage is divided into allocation units, and the FMS uses data structures to track which units belong to which file. Smaller allocation units waste less space at the end of each file, whereas larger units reduce the number of units the FMS must track. Allocation unit size therefore varies between file systems and storage devices (Burd, 2008).
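The trade-off in allocation unit size can be worked through with a short calculation. A file always occupies a whole number of allocation units, so any space left over in its last unit is wasted. The sketch assumes a hypothetical 10,000-byte file and three example unit sizes.

```python
def allocation(file_size: int, unit_size: int) -> tuple[int, int]:
    """Return (units needed, bytes wasted in the last unit) for one file."""
    units = (file_size + unit_size - 1) // unit_size  # round up to whole units
    wasted = units * unit_size - file_size
    return units, wasted


for unit in (512, 4096, 65536):
    units, wasted = allocation(10_000, unit)
    print(f"unit={unit:>6} B  units={units:>3}  wasted={wasted:>6} B")
```

For this file, 512-byte units require 20 units and waste 240 bytes. 4,096-byte units require 3 units and waste 2,288 bytes. A single 65,536-byte unit wastes 55,536 bytes, but the FMS has only one unit to track.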

A storage allocation table is a data structure that records which allocation units are assigned to each file, together with entries describing free space and allocation unit size. The FMS uses this table to support both sequential and random (direct) access. Additional structures such as indexes can make locating a particular data item more efficient.
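One concrete form of storage allocation table is the linked table used by FAT file systems. Each entry records the next allocation unit in a file's chain, and a special marker ends the chain. The sketch below is a simplified model of that idea, not the on-disk FAT format. The table contents and the END, FREE, and RESERVED markers are hypothetical. FAT similarly reserves its first table entries. Following the chain gives sequential access, and once the chain has been read into a list, any unit of the file can be reached directly.

```python
RESERVED = -2  # hypothetical marker: unit not available for files
END = -1       # hypothetical end-of-chain marker
FREE = 0       # hypothetical free-unit marker (safe because unit 0 is reserved)

# Index = allocation unit number; value = next unit of the same file, or a marker.
table = [RESERVED, 4, FREE, END, 7, FREE, FREE, 3]


def chain(start: int) -> list[int]:
    """Sequential access: follow a file's chain from its first unit."""
    units = []
    unit = start
    while unit != END:
        units.append(unit)
        unit = table[unit]
    return units


def free_units() -> list[int]:
    """Units the FMS can allocate to a new or growing file."""
    return [i for i, nxt in enumerate(table) if nxt == FREE]


file_units = chain(1)  # a directory entry would record that the file starts at unit 1
print(file_units)      # [1, 4, 7, 3]
print(file_units[2])   # direct access to the file's third unit: 7
print(free_units())    # [2, 5, 6]
```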

Blocking and buffering govern how data moves between physical storage and programs. The blocking factor is the number of logical records stored in one physical block, and it determines how large a buffer must be to hold a block. Additional buffer copying is sometimes needed when a physical block cannot be copied in its entirety into a buffer location.
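The blocking factor and the position of a record within a buffer can be computed directly. The sketch assumes hypothetical 4,096-byte physical blocks and 300-byte fixed-length records that are not allowed to span blocks. It shows how many records fit in one block, how much of each block is unused, and which block, and which position within it, holds a given record.

```python
BLOCK_SIZE = 4096   # hypothetical physical block size in bytes
RECORD_SIZE = 300   # hypothetical fixed logical record size in bytes

blocking_factor = BLOCK_SIZE // RECORD_SIZE         # records per block
slack = BLOCK_SIZE - blocking_factor * RECORD_SIZE  # unused bytes per block


def locate(record_number: int) -> tuple[int, int]:
    """Return (block number, byte offset inside the block buffer) for a record."""
    block, index = divmod(record_number, blocking_factor)
    return block, index * RECORD_SIZE


print(blocking_factor, slack)  # 13 196
print(locate(30))              # (2, 1200)
```

Under these assumptions, reading record 30 means loading block 2 into a 4,096-byte buffer and copying the 300 bytes that start at offset 1,200 to the program.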

File Manipulation

An open service call prepares a file for reading and writing. The FMS locates the file, searches its internal tables, verifies that the caller has the required access privileges, allocates buffer areas, and updates the file's status information. While the file is open, the FMS controls access to it and prevents other programs from making conflicting changes to its data. When the program is finished, it issues a close call. In response, the FMS flushes buffered data to secondary storage, deallocates the buffer memory, and updates both its table data structures and the file's timestamp (Burd, 2008).
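From a program's point of view, the open and close calls look like the following in Python. The `with` block guarantees that the file is closed. `flush()` moves Python's own buffer to the operating system, and `os.fsync()` asks the operating system to write its buffers to secondary storage. The file name is hypothetical, and the sketch works in a temporary directory so that it leaves nothing behind.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "ledger.txt")  # hypothetical file name

    # open(): the OS locates or creates the file, checks that write access
    # is permitted, and sets up buffers for the program.
    with open(path, "w", encoding="utf-8") as f:
        f.write("account,balance\n")
        f.write("1001,250.00\n")
        f.flush()             # move Python's buffered data to the OS
        os.fsync(f.fileno())  # ask the OS to commit its buffers to disk
    # Leaving the with-block closes the file and releases its buffers.

    # The file's metadata (size, modification time) now reflects the writes.
    size = os.stat(path).st_size
    with open(path, encoding="utf-8") as f:
        contents = f.read()

print(size)
print(contents)
```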

Records are updated and deleted through the file management system, which includes mechanisms to enforce data integrity and access privileges. Microsoft's early file systems used the file allocation table (FAT). Microsoft later developed NTFS, which offered faster operations, stronger security, greater fault tolerance, and the ability to handle much larger file systems (Burd, 2008).

File Migration, Backup, and Recovery

File management systems protect files through utilities and built-in features. One such feature is file migration, which preserves older versions of files so that changes can be undone. Another is configurable automatic backups. Backups are made periodically using one of three approaches. A full backup copies every file. An incremental backup copies only the files changed since the last backup of any kind. A differential backup copies all files changed since the last full backup. Recovery utilities built into the FMS use consistency checking and related mechanisms to repair damaged file system structures.
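The three backup approaches differ in which files each run copies. The sketch below makes that decision from modification times. The file names, the timestamps (simple day numbers), and the backup history are all hypothetical.

```python
# Hypothetical modification times (day numbers) for files on a volume.
modified = {"payroll.db": 9, "notes.txt": 3, "report.doc": 6, "logo.png": 1}

last_full = 2  # day of the last full backup
last_any = 5   # day of the most recent backup of any kind


def full_backup() -> list[str]:
    return sorted(modified)  # copy everything


def incremental_backup() -> list[str]:
    # Files changed since the last backup of any kind.
    return sorted(f for f, t in modified.items() if t > last_any)


def differential_backup() -> list[str]:
    # Files changed since the last full backup.
    return sorted(f for f, t in modified.items() if t > last_full)


print(full_backup())          # ['logo.png', 'notes.txt', 'payroll.db', 'report.doc']
print(incremental_backup())   # ['payroll.db', 'report.doc']
print(differential_backup())  # ['notes.txt', 'payroll.db', 'report.doc']
```

The choice affects recovery. Restoring from incremental backups requires the last full backup plus every incremental backup made since then. Restoring from differential backups requires only the last full backup and the most recent differential backup.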

Fault Tolerance

Hardware failure can cause the loss of critical data, with serious consequences, particularly for large organizations. However, reliable mechanisms exist to reduce this risk. Data on magnetic, optical, and other storage devices such as hard disks can be protected through disk mirroring and RAID (redundant array of independent disks) technologies (Burd, 2008).

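Disk mirroring writes identical copies of data to two or more disks, so that any copy can serve a read request and the failure of a single disk loses no data. RAID also uses data striping, which spreads consecutive blocks across several disks in round-robin fashion so that smaller read operations can proceed in parallel. Redundant RAID configurations store mirrored copies or parity information, which allows the contents of a failed disk to be regenerated onto a replacement disk.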
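Striping and parity-based regeneration can both be demonstrated with small in-memory "disks". The sketch below is a simplified model, not a real RAID implementation. It distributes hypothetical data blocks round-robin across three data disks, as striping does. It also keeps an XOR parity block for each stripe, which is the idea behind parity RAID levels, simplified here to parity held separately from the data disks. When one data disk is lost, XOR-ing the surviving blocks with the parity regenerates the missing contents for a replacement disk.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def stripe(blocks: list[bytes], n_disks: int) -> list[list[bytes]]:
    """Round-robin striping: block i is written to disk i % n_disks."""
    disks = [[] for _ in range(n_disks)]
    for i, block in enumerate(blocks):
        disks[i % n_disks].append(block)
    return disks


# Six blocks over three disks gives two complete stripes.
data = [b"AAAA", b"BBBB", b"CCCC", b"DDDD", b"EEEE", b"FFFF"]
disks = stripe(data, 3)
# One parity block per stripe (one row across the data disks).
parity = [xor_blocks(list(row)) for row in zip(*disks)]

# Simulate losing disk 1 and regenerating its contents on a new disk.
lost = disks[1]
survivors = [disks[0], disks[2]]
rebuilt = [xor_blocks([a, c, p]) for a, c, p in zip(*survivors, parity)]
print(lost)             # [b'BBBB', b'EEEE']
print(rebuilt == lost)  # True
```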

Storage Consolidations

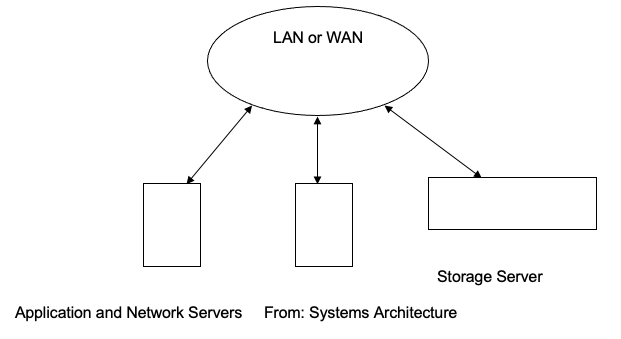

Large organizations find direct-attached storage (DAS) inefficient and expensive when storage must be shared. Many therefore consolidate their storage using network-attached storage (NAS), whose architecture is based on network access and connectivity. The figure above illustrates this network storage server concept.

Access Controls

The FMS controls read, write, and other file manipulation operations. Together, the FMS and the operating system enforce security at several levels of data access. Before the FMS grants a service request, the user must be authenticated and authorized to perform the requested operation. Whether data can be accessed directly or only sequentially depends on how it is physically organized. Directories themselves may be organized as hierarchies or as more general graph structures, and the FMS can present logical directory views that differ from the physical organization, along with other file control mechanisms (Burd, 2008).
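An authorization check of the kind described above can be sketched with UNIX-style permission bits. The function below decides whether a user may read or write a file, based on the file's owner, group, and mode. It uses the constants from Python's standard `stat` module, but the user and file records are hypothetical. Real operating systems perform this check in the kernel and add features such as superuser rights and access control lists.

```python
import stat
from dataclasses import dataclass


@dataclass
class User:  # hypothetical authenticated user
    uid: int
    groups: set[int]


@dataclass
class FileInfo:  # hypothetical subset of a file's metadata
    owner_uid: int
    group_gid: int
    mode: int


def allowed(user: User, f: FileInfo, want: str) -> bool:
    """Return True if `user` may perform `want` ("read" or "write") on `f`."""
    if user.uid == f.owner_uid:
        bits = {"read": stat.S_IRUSR, "write": stat.S_IWUSR}
    elif f.group_gid in user.groups:
        bits = {"read": stat.S_IRGRP, "write": stat.S_IWGRP}
    else:
        bits = {"read": stat.S_IROTH, "write": stat.S_IWOTH}
    return bool(f.mode & bits[want])


payroll = FileInfo(owner_uid=1000, group_gid=50, mode=0o640)
print(stat.filemode(stat.S_IFREG | payroll.mode))   # -rw-r-----
print(allowed(User(1000, {50}), payroll, "write"))  # True  (owner)
print(allowed(User(1001, {50}), payroll, "write"))  # False (group may only read)
print(allowed(User(1002, set()), payroll, "read"))  # False (others have no access)
```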

Internet and Distributed Application Services

Introduction

Data and information transfer across the internet follows specific network protocols and depends on operating systems and their network protocol stacks. These enable access to and interaction with resources through hardware and software interfaces. The chapter provides a detailed view of network architectures, approaches to network resource access, the internet, emerging distribution models, directory services, and the software architecture of distributed systems.

Architectures

The client-server architecture has layered variations, the most common being the three-layer architecture, usually called the three-tier architecture. It divides application software into three layers: a data layer that manages the database, a business logic layer that implements the policies and procedures for business processing, and a presentation layer that formats data for users (Burd, 2008). In the peer-to-peer (P2P) architecture, every computer can act as both client and server. This improves scalability because resources are not concentrated on a few servers.
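The division of labor in a three-tier application can be sketched with three small layers, each of which calls only the layer directly beneath it. The inventory data, the reorder rule, and the function names are hypothetical. In a real deployment, each tier would typically run as a separate process or on a separate server.

```python
# Data tier: owns storage (a dict standing in for a database).
_inventory = {"widget": 12, "gadget": 3}


def get_stock(item: str) -> int:
    return _inventory.get(item, 0)


# Business logic tier: applies a hypothetical company policy.
REORDER_LEVEL = 5


def stock_status(item: str) -> tuple[int, bool]:
    """Return (quantity on hand, whether the item must be reordered)."""
    quantity = get_stock(item)
    return quantity, quantity < REORDER_LEVEL


# Presentation tier: formats results for the user.
def stock_report(items: list[str]) -> str:
    lines = []
    for item in items:
        quantity, reorder = stock_status(item)
        flag = "REORDER" if reorder else "ok"
        lines.append(f"{item:<8}{quantity:>4}  {flag}")
    return "\n".join(lines)


print(stock_report(["widget", "gadget"]))
```

Because the presentation tier never touches `_inventory` directly, the database or the reorder policy can be changed without rewriting the user-facing code.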

Network Resource Access

The operating system provides access to network resources through its service layer, which receives user requests and determines whether each request refers to a local resource or to a resource on a remote computer. The local operating system therefore distinguishes explicitly between local resources and those managed by remote operating systems. Communication with remote systems is governed by established protocols.

The Protocol Stack

The operating system configures and manages network communication through a protocol stack, a set of complex software modules arranged in layers. It can establish static connections to remote resources that are configured in advance (Burd, 2008). Resource management, however, rests on the premise that resources change dynamically, that they can be shared across the network, and that this sharing should add as little complexity as possible to local applications and the local operating system. Dynamic resource sharing is therefore achieved through resource registries, particularly in P2P architectures.
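Each layer of a protocol stack wraps the data passed down from the layer above in its own header, and the receiving stack removes those headers in reverse order. The sketch below uses made-up, human-readable headers for the application, transport, internet, and network access layers. Real TCP/IP headers are binary structures with many more fields. The addresses (from the 192.0.2.0/24 documentation range) and port numbers are hypothetical.

```python
# Layers from the top of the stack down (simplified TCP/IP model).
LAYERS = ["APP", "TCP", "IP", "ETH"]


def send(payload: str, headers: dict[str, str]) -> str:
    """Wrap the payload in one header per layer, starting at the top."""
    message = payload
    for layer in LAYERS:
        message = f"[{layer} {headers[layer]}]{message}"
    return message


def receive(frame: str) -> str:
    """Remove headers in reverse order, outermost (lowest layer) first.

    Assumes header values never contain "]".
    """
    message = frame
    for layer in reversed(LAYERS):
        if not message.startswith(f"[{layer} "):
            raise ValueError(f"expected a {layer} header")
        message = message[message.index("]") + 1:]
    return message


headers = {  # hypothetical header contents
    "APP": "GET /index.html",
    "TCP": "src_port=49152 dst_port=80",
    "IP": "src=192.0.2.5 dst=192.0.2.80",
    "ETH": "dst_mac=02:00:00:00:00:01",
}
frame = send("hello", headers)
print(frame)
print(receive(frame))  # hello
```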

Dynamic resource connections are achieved through various mechanisms, one of which is the Domain Name System (DNS). Every packet carries its destination IP address in its header, but a resource's IP address can change as network configurations change. DNS addresses this problem by mapping stable domain names to current IP addresses. Every network connected to the internet relies on domain name servers, which hold registries of names mapped to IP addresses and answer lookup queries from local computers. Directory services based on the Lightweight Directory Access Protocol (LDAP) define standard container objects for categories such as countries. Each object is uniquely identified by its distinguished name (DN). These directories can be used for administrative purposes across large organizations.
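The way a DNS lookup walks down the name hierarchy can be sketched with in-memory tables. Each hypothetical server knows only its own zone and refers the resolver to the server responsible for the next label, starting from the root and working from the rightmost label leftward. The names and addresses below are invented (the addresses come from the 192.0.2.0/24 block reserved for documentation). Real DNS adds caching, multiple record types, and network messages.

```python
# Hypothetical name servers: each maps a child label either to another
# server (a referral) or, for the final label, to an IP address.
SERVERS = {
    "root": {"com": "com-server"},
    "com-server": {"example": "example-server"},
    "example-server": {"www": "192.0.2.10", "mail": "192.0.2.25"},
}


def resolve(name: str) -> str:
    # "www.example.com" -> ["com", "example", "www"]
    labels = list(reversed(name.rstrip(".").split(".")))
    server = "root"
    for depth, label in enumerate(labels):
        answer = SERVERS[server].get(label)
        if answer is None:
            raise LookupError(f"{name}: no such domain")
        if depth == len(labels) - 1:
            if answer in SERVERS:
                raise LookupError(f"{name}: no address record")
            return answer  # final label: an address record
        if answer not in SERVERS:
            raise LookupError(f"{name}: no such domain")
        server = answer    # referral to the next server down
    raise LookupError(f"{name}: empty name")


print(resolve("www.example.com"))  # 192.0.2.10
```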

Interprocess Communication

Processes need to communicate both when they are part of the same application on one computer and when they are split across different computer platforms. They coordinate their activities using standards and protocols. Low-level P2P communication across a network involves the application, transport, and internet layers. Each end of a connection is a socket, uniquely identified by an IP address and a port number (Burd, 2008). Data can flow in either direction, and these layers package data into packets for sending and unpack it on receipt.

Remote Procedure Call

With a remote procedure call (RPC), a process on one machine can invoke a procedure on another machine. The caller passes parameter values to the called procedure, waits until the called procedure completes its task, accepts the values returned by the called procedure, and then resumes execution after the call. Industrial-strength implementations add tickets and other mechanisms to control access and to pass data from one procedure to another.
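Python's standard library includes an RPC mechanism, XML-RPC, that follows the steps above. The client passes parameters, waits until the remote procedure finishes, and then receives the result. The sketch runs the server in a background thread on the local machine (127.0.0.1), using a port number chosen by the operating system, so the server socket is identified by the IP address and port pair described earlier. The procedure `add_interest` and its parameters are hypothetical.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add_interest(balance: float, rate: float) -> float:
    """Hypothetical remote procedure executed on the server."""
    return round(balance * (1 + rate), 2)


# Port 0 asks the OS for any free port; logRequests=False keeps output quiet.
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
server.register_function(add_interest)
host, port = server.server_address
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: the call looks local, but the parameters are marshalled into an
# HTTP request, and the caller waits for the reply before continuing.
with ServerProxy(f"http://{host}:{port}/") as proxy:
    result = proxy.add_interest(1000.0, 0.05)
print(result)  # 1050.0

server.shutdown()
server.server_close()
```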

The Internet

The internet is a technology framework for delivering content and applications to their intended destinations. It consists of interconnected computers that communicate using established protocols, including HTTP for the web, Telnet for remote login, and SMTP for mail transfer. It also serves as the infrastructure for many other services, such as teleconferencing (Burd, 2008).

Component-Based Software

In the component-based approach to software design and development, applications are assembled from reusable components. For example, a single grammar checker component can serve several larger applications. A distinguishing attribute of this approach is that components can be coupled into applications, and decoupled from them, as the software is built or maintained. Components running on different computers are interconnected through standards such as CORBA and Java EE, among others (Burd, 2008).

Advanced Computer Architecture (n.d.) and Burd (2008) both note that software can be delivered as a service. In this model, users interact with web-based applications, and services are provided in large units. Infrastructure can also be delivered as a service, with the advantage of reduced costs, among other benefits. Relying on different software vendors and architectures, including cloud computing architectures and the software applications built on cloud computing frameworks, also presents potential risks that need to be addressed.

Security is one of these concerns. It spans authentication, authorization, verification, and other controls, as well as the penalties and costs associated with security breaches.

Emerging Distribution Models

High levels of automation, ubiquitous computing, and long-standing unmet needs in various industries have led business organizations to adopt new approaches. These include Java Platform, Enterprise Edition (Java EE), Microsoft's Component Object Model (COM), and the Simple Object Access Protocol (SOAP), among other trends.

Components and Distributed Objects

Components are autonomous software modules with a clearly defined interface and a unique identity, and each can be executed on a hardware platform. An interface is defined by a set of uniquely named services, and a component can be compiled and executed as a self-contained unit (Burd, 2008).
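A component's clearly defined interface and unique identity can be sketched with an abstract base class and a registry keyed by name. The `GrammarChecker` interface, its two implementations, and the registry are hypothetical. The point is that client code depends only on the interface, so one component can be decoupled and another coupled in its place without changing the client.

```python
from abc import ABC, abstractmethod


class GrammarChecker(ABC):
    """Hypothetical component interface: the only contract clients rely on."""

    @abstractmethod
    def check(self, text: str) -> list[str]:
        """Return a list of problems found in `text`."""


class DoubleWordChecker(GrammarChecker):
    def check(self, text: str) -> list[str]:
        words = text.lower().split()
        return [f"repeated word: {a}" for a, b in zip(words, words[1:]) if a == b]


class CapitalChecker(GrammarChecker):
    def check(self, text: str) -> list[str]:
        return [] if text[:1].isupper() else ["sentence should start with a capital"]


# Registry: each component is uniquely identified by its name.
REGISTRY: dict[str, GrammarChecker] = {
    "double-word": DoubleWordChecker(),
    "capital": CapitalChecker(),
}


def word_processor(text: str, component: str) -> list[str]:
    # The client depends only on the GrammarChecker interface.
    return REGISTRY[component].check(text)


print(word_processor("the the report", "double-word"))  # ['repeated word: the']
print(word_processor("the the report", "capital"))      # ['sentence should start with a capital']
```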

Named Pipes

A named pipe is a communication channel, identified by a name, through which executing processes exchange data using a buffer that the operating system maintains in memory. Named pipes let one process issue service requests and another receive them and return responses.
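On POSIX systems, Python exposes named pipes through `os.mkfifo`. The sketch creates a pipe with a hypothetical name in a temporary directory. A "server" thread writes a response into the pipe, and the main code reads it by opening the same name. In practice the two ends would be separate processes; threads are used here only to keep the example self-contained. The sketch does not run on Windows, whose named pipes use a different API.

```python
import os
import tempfile
import threading

with tempfile.TemporaryDirectory() as folder:
    pipe_path = os.path.join(folder, "service_pipe")  # hypothetical pipe name
    os.mkfifo(pipe_path)  # create the named pipe (POSIX only)

    def server() -> None:
        # Opening for writing blocks until a reader opens the same pipe.
        with open(pipe_path, "w", encoding="utf-8") as pipe:
            pipe.write("status: ok\n")

    writer = threading.Thread(target=server)
    writer.start()
    # Opening for reading blocks until the writer has opened its end.
    with open(pipe_path, encoding="utf-8") as pipe:
        message = pipe.readline()
    writer.join()

print(message.strip())  # status: ok
```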

Directory Services

A directory service is middleware that stores resource identifiers, updates the directory as resources change, responds to directory queries, and synchronizes resource information. Middleware in general provides an interface between client and server applications (Burd, 2008).

Distributed Software Architecture

This architecture provides a link to distributed software components across multiple computer platforms geographically spread across large areas (Burd, 2008).

System Administration

Introduction

This chapter takes one through the process of determining the requirements and evaluating performance, the physical environment, security, and system administration.

System administration

System administration includes the strategic planning process for acquiring hardware and software. This process identifies the user audience and determines the requirements that new systems and hardware must meet. Integration, availability, training, and physical parameters such as cooling are identified during the process and documented in a proposal. System performance depends on the hardware platform and on resource utilization. System protection depends on physical security, the access control mechanisms discussed earlier, virus protection, firewalls, and disaster prevention and recovery mechanisms (Burd, 2008).

Software updates are an integral part of building and maintaining a large organization's systems infrastructure. Security services, security audits such as reviews of system logs, password controls, other security measures, and benchmarking are core activities in building that infrastructure. Protocols are evaluated before acquisition, a process that includes identifying new and existing software, vendors, and standards.
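One common password control is to store only a salted, deliberately slow hash of each password rather than the password itself. The sketch uses `hashlib.pbkdf2_hmac`, `hmac.compare_digest`, and `secrets` from Python's standard library. The iteration count and salt length are illustrative assumptions, not a recommendation for any particular security policy.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 200_000  # illustrative work factor; tune to current guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store instead of the password."""
    salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)  # constant-time comparison


salt, digest = hash_password("correct horse")
print(verify_password("correct horse", salt, digest))  # True
print(verify_password("wrong guess", salt, digest))    # False
```

Because each password gets its own random salt, two users with the same password end up with different stored digests.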

System administration may also include acquiring and maintaining software and developing security policies (Burd, 2008).

Applying the Concepts for Large Organizations

Large organizations demand software applications and hardware platforms that support their activities at scale. That is the case with large organizations such as those based in Atlanta, GA. Their software and hardware infrastructure relies on file access mechanisms that provide high-speed access to information, large backup facilities, and fault-tolerant systems that use techniques such as mirroring and RAID. Storage is consolidated, and access is provided through networked infrastructure over a wide area or local area network. Internet access for these systems and institutions provides access to resources through controlled access protocols, including web protocols. Software platforms are built from components that interact and interface with other applications. The infrastructure in these organizations is interconnected through cabling, and security mechanisms span firewalls, system audits, and multiple security levels, including privilege controls. Hardware and software are acquired through proposals and vendor identification strategically tailored to meet the organization's goals.

References

Advanced Computer Architecture. (n.d.). The architecture of parallel computers. Web.

Burd, S. D. (2008). Systems architecture. New York: Vikas Publishing House.

Thisted, R. A. (1997). Computer architecture. Web.