Abstract

The need for data replication has grown considerably, and large organizations implement a variety of replication techniques and systems so that data can be recovered after a disaster. The paper has three sections: the first gives an introduction, the second deals with existing problems in data replication via WAN among geographically remote sites, and the third presents the proposed solution for data replication via WAN.

Data Replication

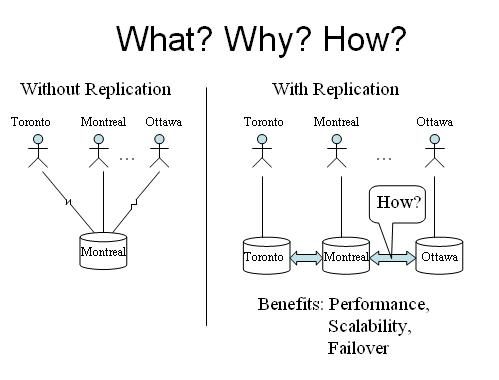

Replication is a term widely used in information technology. Data is replicated in order to improve reliability, fault tolerance and accessibility. Replication is the process of sharing information to ensure consistency between redundant resources, such as software or hardware components (Data Replication, 2007). When the replicated resource is data, the process is known as data replication: the same data is stored in multiple locations for later access. A computational task can also be replicated in a number of forms, i.e. replication in time, replication in space, or replication on separate disks. There are two types of replication: active and passive. In active replication, the same request is processed at every replica. In passive replication, each request is processed on a single replica and the resulting state is then transferred to the other replicas. Many people do not distinguish between data replication, load balancing and backup, although load balancing and backup are quite different from replication. Load balancing distributes a load of different computations across machines, whereas replication keeps the same data or computation on several machines so that one can take over if another fails. Backup is the process of saving a copy of data for a long time for later recovery; a backup can be updated from time to time, whereas replicas are updated frequently.
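The difference between active and passive replication described above can be illustrated with a minimal sketch. The `Replica` class and the two helper functions are hypothetical names introduced only for this illustration; the "request" is simply an amount added to a counter.

```python
class Replica:
    """A toy replica whose whole state is a single integer counter."""

    def __init__(self):
        self.state = 0

    def process(self, amount):
        # Deterministic request handler: add `amount` to the counter.
        self.state += amount
        return self.state


def active_replication(replicas, amount):
    # Active: every replica processes the same request itself.
    for replica in replicas:
        replica.process(amount)


def passive_replication(primary, backups, amount):
    # Passive: only the primary processes the request...
    primary.process(amount)
    # ...and its resulting state is then transferred to the backups.
    for backup in backups:
        backup.state = primary.state


active_group = [Replica() for _ in range(3)]
active_replication(active_group, 5)

primary, backups = Replica(), [Replica(), Replica()]
passive_replication(primary, backups, 5)
```

In both cases all replicas end up with the same state; what differs is where the request is executed and what travels over the network (the request itself versus the resulting state).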

Another common term in computer systems is database replication. It is widely used in database management systems, usually with a master-slave relationship between the original data and its copies. In a database management system, replication can be done in numerous ways. Most commonly, the master logs the updates, which then ripple through to the slaves. Database replication becomes difficult as the database system scales up, because a huge amount of data has to be handled. Scaling has two dimensions, horizontal and vertical: horizontal scale-up involves more replicas, while vertical scale-up places replicas further apart. Multilayer access protocols are widely used to solve the problems associated with horizontal scale-up. Data replication is also very common in distributed systems and is one of the oldest concepts associated with database management systems. When data is replicated in a distributed system, the replicas are typically passive: they operate only to maintain the stored data, reply to read requests and apply updates. The developer's main responsibility is to deliver fast, scalable solutions to customers. As the business world shifts to an increasingly distributed workplace featuring remote satellite offices and mobile employees, customers demand applications that scale easily and adjust dynamically on a low budget.

Benefits of Data Replication

Data replication has many benefits that both users and developers should be aware of. They include the following:

- Data Consistency

- Data warehousing

- Distributed data

- Secure Data

- High availability

Replication makes it possible to maintain identical copies of a database at multiple locations. Banks and sales companies, for example, work on distributed systems during the day, but by the end of the day the data must be updated at the central server; replication makes this process effortless. Replication improves the speed and performance of reporting without affecting day-to-day operations. It offers scalability, security and stability while providing data at distributed locations, and it reduces delays and enables clustering capabilities. Replication provides easy access to data at all sites: if local data is unavailable, users can access a remote copy. With replication, the power of the database server is enhanced by distributed data management capabilities. Replication based on an asynchronous push model offers scalability and minimizes latency in the delivery of updates from the source database to the target database. The following data recovery practices help considerably in case of disaster: data categorization, data access, data synchronization, documented procedures, monitoring, notification and testing. By implementing them, users can take full advantage of replication over the network. A number of low-cost software products are available for data replication, but not all of them are effective. Lower bandwidth costs have also contributed to the technology's gain in popularity.

Many organizations do not realize the need for data replication over the network at an early stage. They feel the need only when a disaster occurs, which greatly increases their recovery cost. In past decades, data replication was done only by large companies, but the growth of information systems and of dynamic access to them has increased the demand for replication in small organizations too. However, there are still many organizations that keep updated backups instead of replicating to multiple locations. Today, a replication system is easy to adopt, implement and use. The ultimate goal of data replication is to create a full, identical copy of the original data from a source location at one or more destinations.

The main goal of replication is fault tolerance. For example, a replicated service might be used to control a telephone switch, with the objective that if the primary controller fails, the backup can take over its function. In distributed systems, the primary goal of replication is to provide fault tolerance, reliability and stability. Several replication models are available for distributed systems: state machine replication, transactional replication and virtual synchrony. Virtual synchrony is popular because it lets developers use either active or passive replication. There are different implementations of disk storage replication; a few of them are as follows:

- DRBD module for Linux.

- EMC SRDF

- IBM PPRC and Global Mirror (known together as IBM Copy Services)

- Hitachi TrueCopy

- Symantec Veritas Volume Replicator (VVR).

Seven Steps involved in Data replication

There are seven basic steps to data replication; the right replication approach can be determined by working through the following seven points.

- Amount of data to be copied

- Network bandwidth

- Network Location

- Number of servers involved

- Use of clustering

- Available space at remote sites

- Available budget.

All seven steps are associated with each other.

Amount of data

The amount of data that needs to be copied determines the network bandwidth required to copy it. This is the basic theme of data replication and usually its most extensive part, which makes it the most important step in sizing the network.

Network Bandwidth

With only dial-up connections between sites, there is always a need to keep an up-to-date backup in case of disaster or data loss. As a rule of thumb, about 10 Mbit/s of network bandwidth is required for every megabyte of data copied per second. For instance, a T3 link can handle almost 5 MB of data per second.
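The rule of thumb above can be turned into a small sizing calculation. This is a rough sketch, not a capacity-planning tool: the function names are made up for illustration, and the nominal T3 line rate of 44.736 Mbit/s is supplied here as an assumption that the text itself does not state.

```python
# Rule of thumb from the text: about 10 Mbit/s of link bandwidth
# for every 1 MB/s of data to be copied.
MBIT_PER_MBYTE_PER_SEC = 10

# Assumption: nominal T3 (DS3) line rate in Mbit/s.
T3_MBIT_PER_SEC = 44.736


def required_bandwidth_mbit(data_rate_mbyte_per_sec):
    """Link bandwidth (Mbit/s) needed to copy data at the given rate (MB/s)."""
    return data_rate_mbyte_per_sec * MBIT_PER_MBYTE_PER_SEC


def sustainable_rate_mbyte(link_mbit_per_sec):
    """Data rate (MB/s) a link of the given bandwidth (Mbit/s) can sustain."""
    return link_mbit_per_sec / MBIT_PER_MBYTE_PER_SEC


needed_for_5_mbyte = required_bandwidth_mbit(5)        # 50 Mbit/s
t3_capacity = sustainable_rate_mbyte(T3_MBIT_PER_SEC)  # about 4.5 MB/s
```

Under this rule a T3 link sustains a little under 5 MB/s, which agrees with the figure quoted in the text.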

Network Location

The distance between sites is really important and plays a pivotal role in determining the type of replication that can be used. There are two types of replication: synchronous and asynchronous. Under synchronous (sync) replication, an I/O is not complete until it has been written to both sides. This is beneficial for transactions, which remain consistent: every I/O is written in order to the remote side before the application sees the “I/O completion” message. The main problem with this approach lies in the Fibre Channel protocol, which requires four round trips to complete each I/O under sync replication. Even when dark fiber is used between the sites, the speed of light becomes the limiting factor because of these four round trips: about one millisecond is lost for every 25 miles. Sync replication is therefore practical only up to about 100 kilometers. Asynchronous (async) replication is more popular because it has no distance limit; it can go around the planet.
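As a worked example of the distance penalty, the sketch below applies the text's rule of thumb (about one millisecond lost per 25 miles under sync replication) to a given site distance. The function name is hypothetical, and the result is only as accurate as the rule of thumb itself.

```python
KM_PER_MILE = 1.609344

# Rule of thumb from the text: roughly 1 ms of added latency per I/O
# for every 25 miles between sites under sync replication.
MS_PER_25_MILES = 1.0


def sync_latency_penalty_ms(distance_km):
    """Approximate latency added to each synchronous I/O, in milliseconds."""
    miles = distance_km / KM_PER_MILE
    return miles / 25.0 * MS_PER_25_MILES


penalty_at_limit = sync_latency_penalty_ms(100)  # about 2.5 ms at 100 km
```

At the 100 km limit mentioned in the text, every write therefore waits roughly 2.5 ms longer than a purely local write would.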

Number of Servers involved

A number of software-based replication products work well. Their basic advantage is that they are not tied to specific hardware brands. The need for software-based products arises when data must be copied from a number of servers. A license is usually required for each server that is copied from the primary location, and buying servers and licenses for the remote sites as well can get very expensive; not everyone can afford it. Software-based replication products are designed with the need to copy data from different locations in mind. Such software saves users time and money.

Use of Clustering

Cluster solutions are really effective and can solve a number of problems. A cluster is a group of linked computers that work closely together and, in many respects, form a single computer. Most cluster solutions require real-time connectivity for heartbeats and quorum resources. Clustering software such as MSCS works well, but it can fail over long distances, so the sites need to be within sync replication distance. If the cluster is stretched across sites so that applications fail over transparently, the cluster software may fail once the network is expanded beyond that distance. It is therefore important to decide whether a cluster solution is needed on the network at all.

Available space at remote sites

Having one's own data center for the remote site is a good idea. When space is leased from a provider, the system needs to be made as compact as possible. Server and storage requirements must be considered so that all data can be recovered in case of disaster. Space and storage issues are very important and need to be considered while setting up the network.

Budget

The budget has great significance in any case and plays an important role in setting up the network. In a disaster, the real-world cost is usually high. To stay on the safe side, the following costs need to be determined: floor space, recovery servers, the pay of recovery staff, hardware and software licenses, the cost of implementing the solution, the cost of the servers whose data needs to be copied, network links (usually the most expensive part) and SAN extension gear. A larger budget generally gives better results. A number of approaches are available to guide resource and cost allocation; CTAM is one that is useful for disaster planning. Software-based solutions work well and are very helpful; some of them are listed below:

Host-based mirror sets over MSCSI, Legato Octopus and RepliStor, Topio SANSafe (this also does async with write-order fidelity), NSI Doubletake, Veritas VVR and VSR (Veritas VVR does async with write-order fidelity), Fujitsu Softek TDMF, Peer Software's PeerSync, Diasoft File Replication Pro, Insession replication suite, SoftwarePursuits SureSync, XOsoft WANSync, Synopsis Java replication suite.

For the past few decades, many organizations have used data replication solutions to recover data after a disaster or any other loss. A number of companies relied on tape solutions for this purpose. Mainframe-style replication has appeared in open-system environments in the past few years. Nowadays, most companies have realized the need to copy their data or keep a backup in case of disaster. The advantages of data replication are as follows:

Clients at sites where data is replicated experience better performance because they can access data locally instead of connecting to a remote database server over a network. Clients at all sites also experience better availability: if the local data is unavailable, they can still access a remote copy.

These advantages come at a cost: data replication requires more storage, and replicating a huge amount of data takes more processing time. Always implement data replication according to the needs of the client, and explicitly specify where data must be updated. Replication managed explicitly in this way is costly and difficult to maintain, so data replication is often coupled with replication transparency. Replication transparency is built into the database server, which automatically handles the details of maintaining data replicas.

Snap Technologies

The primary goal of data replication is to save a complete copy of the source data. There are many ways to replicate data, and snapshots are one of the most common technologies. Snap technology has minimal effect on the host application. There are two main ways of performing snapshots:

Snap copy and pointer-based snapshots.

Snap copy: Snap copy technology saves an exact, complete copy of the source data. Its main advantage is that, because it is a full copy of the source, it can be stored locally on different disk drives. Its big disadvantage is that creating a number of snapshots of the source data is time-consuming and affects performance while they are being created; additionally, each snapshot requires as much disk space as the original.

Pointer-based snapshots

Pointer-based snapshots do not contain a full copy of the original data; they contain a set of pointers that point to the original data. When a block of the original data is about to be overwritten for the first time after the snapshot is taken, the original block is first copied to the snapshot area in order to preserve it, the snapshot pointer for that block is changed to point to the copied block, and the new block is then written to the original source. The whole process is widely known as “copy-on-write”. Subsequent writes to the same block are not copied, because the original data has already been moved. Only changed blocks are copied, so pointer-based snapshots require just a fraction of the disk space, which makes this method cost-effective, easy to adopt and the most popular of all. Its one disadvantage is that if the source is write-intensive, the copy-on-write method can affect performance.
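The copy-on-write behaviour described above can be sketched in a few lines. This is a toy in-memory model, not how any particular storage product implements snapshots; the `Volume` class and its method names are assumptions made for the example.

```python
class Volume:
    """A toy block device with pointer-based (copy-on-write) snapshots."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def snapshot(self):
        # A new snapshot stores no data yet, only an empty map from
        # block index to preserved contents.
        snap = {}
        self.snapshots.append(snap)
        return snap

    def write(self, index, data):
        # Before the first overwrite of a block, preserve its old contents
        # in every snapshot that has not saved that block yet.
        for snap in self.snapshots:
            if index not in snap:
                snap[index] = self.blocks[index]
        self.blocks[index] = data

    def read_snapshot(self, snap, index):
        # Preserved blocks come from the snapshot area; unchanged blocks
        # are read through to the live volume.
        return snap.get(index, self.blocks[index])


vol = Volume(["a", "b", "c"])
snap = vol.snapshot()
vol.write(1, "B")
vol.write(1, "BB")  # second write: the original "b" is already preserved
snapshot_view = [vol.read_snapshot(snap, i) for i in range(3)]
```

After the two writes the snapshot still shows the original contents while holding only one preserved block, which is why this kind of snapshot needs just a fraction of the source's disk space.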

Remote Replication: If a copy of the data is needed at a remote location, remote replication is used. There are two main options for doing it: synchronous and asynchronous replication.

“Synchronous replication has been around for a long time in the high-end, mainframe-class storage devices and more recently in the higher-end open-systems storage devices. Due to cost and complexity, synchronous replication has traditionally been implemented by larger enterprises” (Data Replication, 2008).

In synchronous replication, every write is sent to the local disk system, which forwards it to the remote location. The write is not acknowledged to the application until the remote location has received it and acknowledged that it is complete. This takes time, as the application has to wait for the acknowledgment and cannot continue without it. This double-write penalty applies to every write the application performs. As the distance between the local system and the remote location grows, the delay grows with it, so the network between the two systems must be fast in order to minimize delays. Synchronous replication is expensive, but it reduces the risk involved in recovering data after a disaster. In recent years, sync replication has become much more affordable, which has enabled many more companies to adopt solutions they could not have deployed in the past because of the high cost. Asynchronous replication also creates a copy of the original data on a remote system, and no distance limitation is involved. Its disadvantage is that the remote data is not necessarily usable, because writes may arrive out of order. Some asynchronous implementations, however, preserve the write order. There are three basic types of replication: host-based, appliance-based and storage-based replication.
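The difference in acknowledgment behaviour between the two modes can be shown with a minimal in-memory sketch. All class names here (`RemoteSite`, `SyncPrimary`, `AsyncPrimary`) are hypothetical, and the network is reduced to a direct method call, so only the ordering of events is modelled, not real latency.

```python
from collections import deque


class RemoteSite:
    """Remote storage system that applies writes and acknowledges them."""

    def __init__(self):
        self.data = {}

    def apply(self, key, value):
        self.data[key] = value
        return True  # acknowledgment sent back to the primary


class SyncPrimary:
    """Acknowledges a write only after the remote site has acknowledged it."""

    def __init__(self, remote):
        self.data = {}
        self.remote = remote

    def write(self, key, value):
        self.data[key] = value
        # The application waits here until the remote write completes.
        return self.remote.apply(key, value)


class AsyncPrimary:
    """Acknowledges a write at once and ships it to the remote site later."""

    def __init__(self, remote):
        self.data = {}
        self.remote = remote
        self.pending = deque()  # FIFO queue, so write order is preserved

    def write(self, key, value):
        self.data[key] = value
        self.pending.append((key, value))
        return True  # acknowledged before the remote copy exists

    def drain(self):
        # Ship queued writes to the remote site in their original order.
        while self.pending:
            self.remote.apply(*self.pending.popleft())


sync_remote = RemoteSite()
sync_acked = SyncPrimary(sync_remote).write("x", 1)

async_remote = RemoteSite()
async_primary = AsyncPrimary(async_remote)
async_primary.write("x", 1)
lag_before_drain = "x" not in async_remote.data
async_primary.drain()
```

The FIFO queue in `AsyncPrimary` corresponds to the asynchronous implementations mentioned in the text that keep writes in order; an implementation without such ordering could leave the remote copy in a state the primary never had.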

Uses of Database Replication

There are numerous uses of data replication, e.g. disaster recovery, maintenance and backup.

- Disaster recovery: Data replication is widely used for disaster recovery, which remains a difficult problem and a main motivation behind replication. Remote replication can be implemented from the original production site to one or more remote sites across the network, company or country. When a disaster occurs at the original location, applications can be brought up at the remote sites and continue processing against the replicated copies. Nowadays, there is a strong need to implement preventive measures for data recovery in case of disaster.

- Maintenance: When a disaster recovery system is in place, the original data can be retrieved without difficulty. With remote replication, downtime for maintenance can be minimal. Data replication can also be used to move data centers with minimal downtime.

- Backup: Backup is still a big challenge for IT staff. An up-to-date backup must be maintained daily, which is quite a difficult task for large organizations. Data replication and snapshot technology have solved this issue to a large extent.

A WAN (wide area network) is a computer network that covers a broad area for resource sharing and communication. It uses routers and communication links (McQuerry & Steve, 2003). Because a WAN is a large network comprising numerous systems, the need for data replication over WAN is growing, and it grows further as the demand for geographical resiliency increases. Nowadays, large companies prefer to replicate data so that it can be recovered after a disaster. Countless advancements have taken place in this domain, and a number of cost-effective technologies and software products are available to replicate data over WAN according to business needs. Data replication technologies copy data from one highly available disk array to another. The movement of data usually occurs in real time over a dedicated high-bandwidth network. Such a data protection scheme is more expensive than tape backup because it needs multiple disk arrays, which are a necessary part of an intelligent storage system. Data replication via WAN can take place in three modes of operation: synchronous, asynchronous and semi-synchronous. Synchronous replication is done in three steps: the data is written to the primary storage system, the data is written to the remote site, and the write operation completes when the host receives the acknowledgment that all data has been written (Rene, 2003). The host application is not allowed to continue until the data has been copied to all storage systems. For this reason, synchronous mode is very sensitive to network latency and bandwidth. Synchronous data replication ensures that all storage systems are identical at every point in time. To avoid complexity and timing issues, no more than two nodes are normally involved in synchronous data replication.

Asynchronous replication is another method of replicating data to remote sites. It is also known as adaptive copy or point-in-time copy. This mode is considered ideal for disk-to-disk backup or for snapshots of offline processes. In this mode of operation, I/Os are written to the primary storage system and then sent to one or more remote storage systems at some later point in time. The host application is free to continue whether or not the data has been written remotely; it does not wait for any acknowledgment from the remote site. The semi-synchronous approach is a hybrid: I/Os are first written to primary storage, the application is then allowed to proceed, and at the same time the data is synchronized with the remote storage system. In this approach the network structure is of great significance. A geographically dispersed storage system is one of the best options for disaster recovery. An inter-data-center storage infrastructure for data replication should have the following characteristics: low latency, low packet loss, control mechanisms and scalable bandwidth.

With these characteristics in mind, a few technologies are well suited to data replication over WAN, among them Coarse/Dense Wavelength Division Multiplexing (C/DWDM) and Synchronous Optical Network/Synchronous Digital Hierarchy (SONET/SDH). Both support data replication over WAN and MAN. C/DWDM, whose channels are also known as lambdas, multiplexes data from multiple sources and protocols onto one optical fiber, with each signal carried on its own light wavelength. C/DWDM is used to connect data centers via different protocols, for example Fibre Channel, FICON and ESCON. Data replication over wide area networks has a number of advantages, including failover, scalability and performance. Group communication is very important in wide area networks: each group member can multicast messages to all members, and the members receive the messages in a specified order such as FIFO or TOTAL.

- FIFO: Messages are received at the receiving end in the same sequence as they were sent from the sender's side.

- TOTAL: If two members both receive messages m1 and m2, they receive them in the same order.
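The two ordering guarantees above can be expressed as simple checks over delivery logs. This is a sketch under stated assumptions: both function names are hypothetical, each message is represented as a `(sender, payload)` tuple, and delivery is assumed to be reliable so that every sent message appears in the log.

```python
def is_fifo(sent_by_sender, delivered):
    """True if each sender's messages were delivered in the order sent.

    sent_by_sender maps a sender to its list of payloads in send order;
    delivered is one member's log of (sender, payload) tuples.
    """
    for sender, sent in sent_by_sender.items():
        received = [payload for s, payload in delivered if s == sender]
        if received != sent:
            return False
    return True


def is_total_order(delivery_logs):
    """True if any two members deliver their common messages in the same order."""
    for a in delivery_logs:
        for b in delivery_logs:
            common = set(a) & set(b)
            if [m for m in a if m in common] != [m for m in b if m in common]:
                return False
    return True


sent = {"p": ["p1", "p2"], "q": ["q1"]}
member_a = [("p", "p1"), ("q", "q1"), ("p", "p2")]
member_b = [("q", "q1"), ("p", "p1"), ("p", "p2")]

fifo_ok = is_fifo(sent, member_a) and is_fifo(sent, member_b)
total_ok = is_total_order([member_a, member_b])
```

Both members respect FIFO order here, yet they disagree on the relative order of `p1` and `q1`, so the pair of logs is FIFO-ordered but not totally ordered. This shows that TOTAL is a stronger, cross-member guarantee.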

Data replication is one of the most difficult tasks over wide area networks. Numerous factors are involved; the challenges include the large amount of data to be handled, the number of simultaneous sessions and the time-sensitivity of the transfers. Many software products and algorithms are available to minimize these challenges. They improve data transfer time, maximize data efficiency, reduce packet loss and recovery errors, and ensure data security. They also reduce bandwidth cost and improve application performance over WAN. Because these systems are cost-effective, they are within reach of most organizations, and they are designed with today's market and business needs in mind. In large organizations it is difficult to maintain a large amount of data, and recovering it after a disaster is also a big challenge; these approaches address both issues. A few advantages of data replication over WAN are illustrated in Fig 1:

Data replication strategies in WAN

There are many strategies by which transaction processing can be made efficient, including communication choices, optimistic delivery, early execution and total order. Communication choices have great significance in the data replication process over WAN (Baldoni, 2005). The proper choice of communication medium makes the connection strong and reliable. Message delivery time accounts for 70-80% of transaction response time, whereas execution time is usually only 5-10%. To address this, a symmetric protocol is considered best over wide area networks; in some cases the passing of a local token is also adopted, as proposed by the fast total order algorithm. The TOTAL order algorithm makes the following assumptions:

- On every site there is one FIFO queue per sender.

- All queues form a ring.

- At any point in time, exactly one queue holds the token.

- Every newly received message is appended to its queue.

- After a message is received, the token is passed to the next queue.
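The assumptions listed above can be illustrated with a simplified token-passing sketch. It is not the published fast total order algorithm: the function name `deliver_in_total_order` is hypothetical, and the sketch assumes that all messages have already arrived in the per-sender queues at every site before delivery starts.

```python
from collections import deque


def deliver_in_total_order(queues, ring):
    """Deliver messages at one site by passing a token around the ring.

    queues maps each sender to a deque of its messages (FIFO per sender);
    ring lists the senders in ring order. Returns the delivery order.
    """
    delivered = []
    token = 0  # index of the queue that currently holds the token
    remaining = sum(len(q) for q in queues.values())
    while remaining:
        holder = queues[ring[token]]
        if holder:
            # The queue holding the token delivers its oldest message.
            delivered.append(holder.popleft())
            remaining -= 1
        # The token is then passed to the next queue on the ring.
        token = (token + 1) % len(ring)
    return delivered


def make_site():
    # Every site holds the same per-sender FIFO queues.
    return {"A": deque(["a1", "a2"]), "B": deque(["b1"]), "C": deque(["c1", "c2"])}


ring = ["A", "B", "C"]
order_site1 = deliver_in_total_order(make_site(), ring)
order_site2 = deliver_in_total_order(make_site(), ring)
```

Because every site walks the same ring over the same per-sender queues, all sites deliver the messages in the same sequence, which is the total order property, while each sender's own messages stay in FIFO order.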

Data Replication Models

In distributed systems, data replication is commonly used by large organizations. Because it is difficult to deal with huge amounts of data in the replication process, a number of software solutions are available on the market at economical rates. Different models are available to make replication in distributed systems reliable and safe. Three of them are as follows:

- Transactional replication

- State machine replication

- Virtual Synchrony.

Transactional Replication

Transactional replication is a model for replicating transactional data, for instance a database or some other form of transactional storage structure. In this case the one-copy serializability model is employed, which defines the legal outcomes of a transaction on replicated data. Combined with the ACID properties, this gives the transactional system its guarantees.

State Machine Replication

This is one of the most common models used in distributed systems, as it is easy to implement and cost-effective compared with the other models. The model assumes that the replicated process is a deterministic finite state machine and that every event can be broadcast atomically to all replicas. It is one of the best models, but because it relies on active replication it can still fail in some distributed settings.
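The core idea of this model, that deterministic replicas fed the same events in the same order reach the same state, can be shown with a minimal sketch. The `CounterMachine` class and the `replay` helper are hypothetical and stand in for a real replicated service.

```python
class CounterMachine:
    """A deterministic state machine whose state is a single integer."""

    def __init__(self):
        self.state = 1

    def apply(self, event):
        op, value = event
        if op == "add":
            self.state += value
        elif op == "mul":
            self.state *= value
        else:
            raise ValueError(f"unknown operation: {op}")


def replay(log):
    """Start a fresh replica and apply every event of the log in order."""
    machine = CounterMachine()
    for event in log:
        machine.apply(event)
    return machine.state


log = [("add", 2), ("mul", 3)]

# Three replicas receiving the same events in the same order agree.
replica_states = [replay(log) for _ in range(3)]

# A replica that sees the events in a different order diverges.
reordered_state = replay(list(reversed(log)))
```

The diverging replica illustrates why the model depends on an ordered broadcast of every event: determinism alone is not enough if replicas can observe events in different orders.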

Virtual Synchrony

This model is used for replicating data over short intervals; it applies when a group of processes cooperates to replicate data in memory or to coordinate actions. The model introduces a new entity called a process group. A process can join a group, much like opening a file: the process is added to the group and is provided with a checkpoint containing pointers to, or the state of, the current data. Processes can then send events to the group and see incoming events in identical order, even when the events are sent concurrently.

The performance level varies depending on the type of distributed system, the network status and the order of transactions. Transactional replication is the slowest, as one-copy serializability is required. Virtual synchrony is the fastest of the three, but its handling of faults is less rigorous than in the transactional model. The state machine model lies in between: it is faster than transactional replication but slower than virtual synchrony. Virtual synchrony is popular because it allows developers to use either active or passive replication according to the requirements of the system. A virtual synchrony system allows programs running over the network to arrange themselves into process groups and to send messages to multiple destinations. Its numerous advantages include event notification, event locking, unbreakable TCP connections, fault tolerance and cooperative caching (Maarten, 2002). Among the three models, virtual synchrony achieves the highest level of performance and is widely used in large distributed systems, but this comes at a cost: its fault tolerance is weaker.

Automatic Data replication for web applications

GlobeDB is one of the leading approaches to data replication for web applications. It is a system for hosting Web applications, which are used for a large number of online transactions worldwide, and it performs autonomic replication of application data. GlobeDB gives data-intensive Web applications the benefits of low access latencies and reduced update network traffic (E. Cecchet, 2004). The major difference between GlobeDB and existing computing techniques is that data distribution and replication are handled by the system automatically, with very little administration. This approach shows that major performance gains can be obtained this way, and it reduces the risks and latencies involved in data replication. Performance evaluation with the TPC-W benchmark on an emulated WAN shows that GlobeDB reduces latencies by a factor of 4 compared to non-replicated systems and reduces network traffic by a factor of 6 compared to fully replicated systems (Z. Fei, 1998).

Edge service architecture has become one of the most widespread platforms for distributing web content over the Internet. A number of commercial content delivery networks (CCDN) deploy edge servers around the Internet that provide data locally to clients. Typically, edge servers store and replicate static web pages. Nowadays, however, a huge amount of web content is generated dynamically, which makes replication difficult. Sign-up forms, feedback forms and other forms generate data from user input that is hard to replicate; GlobeDB solves this problem to a large extent. Data placement is one of the key terms in the replication process (Smith, 2008). Data placement requires selecting a set of edge servers to host replicas of each data cluster according to certain criteria. The performance of the system can be measured with metrics such as average read latency, average write latency and update traffic. For instance, a system can be optimized to minimize read latency by replicating data to all replica servers (Pierre, 2002). Selecting a replication strategy is the most important step for proper and effective data replication.

Multi-view access protocols for large-scale replication

This method proposes scalable protocols for large replicated systems. The protocols organize sites and replicas into a hierarchical, tree-shaped architecture of clusters (GOLDING, 1993). The main idea is to provide a cost-effective and efficient solution for replication management. The protocol handles the most complicated task, updating replicated data across a very large number of replicas, by splitting each update into a set of related but independent transactions. Each transaction updates the replicas in one cluster and invokes further transactions for the member clusters. In the first step, the primary copies of the data are updated by a cross-cluster transaction. In the second step, each cluster is updated independently by a separate transaction. Decoupling update propagation in this way produces multiple views of the replicated data within a cluster (ADLY, 1995). Compared with other replicated-data protocols, the proposed approach has several advantages and is attractive for organizations with low budgets. It is designed with the market’s growing demand for web application content in mind. Because each transaction needs to update only a small number of replicas within one cluster, the approach significantly lowers the transaction abort rate and copes well with heavily loaded transactional systems. It also improves user-level transaction response time when committing replica updates. A second objective is to give read queries the flexibility to view different sections of the database at different degrees of consistency and data currency, ranging from locally consistent views to globally consistent ones.
The approach maintains scalability through dynamic reconfiguration: as a cluster grows, the system can split it into two or more smaller clusters. The last objective is to preserve the autonomy of the clusters. No particular protocol is prescribed for updating replicas within a cluster, so each cluster is free to choose its own (AGRAWAL, 1992). Overall, the approach outlines how data replication can work in large-scale systems with the aid of multi-view access protocols.
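
The two-step update described above can be illustrated with a minimal sketch. It is not the published protocol: the `Cluster` class, `cross_cluster_update`, and `propagate` are hypothetical names, and transactions are reduced to plain dictionary writes. The point is only to show how decoupled propagation leaves different clusters with different views of the same item.

```python
class Cluster:
    """Hypothetical cluster: one primary copy plus local replicas."""

    def __init__(self, name, replica_count):
        self.name = name
        self.primary = {}
        self.replicas = [{} for _ in range(replica_count)]

    def propagate(self):
        # Step 2: an independent, cluster-local transaction brings
        # the replicas in this cluster up to date with its primary.
        for replica in self.replicas:
            replica.update(self.primary)


def cross_cluster_update(clusters, key, value):
    # Step 1: a single cross-cluster transaction updates only the
    # primary copy held by each cluster.
    for cluster in clusters:
        cluster.primary[key] = value


a, b = Cluster("a", 2), Cluster("b", 3)
cross_cluster_update([a, b], "x", 1)
a.propagate()  # cluster "a" catches up; cluster "b" still shows the old view
```

After this run, a reader in cluster `a` sees the new value while a reader of a replica in cluster `b` still sees the older state, which is the "multiple views" effect the text refers to.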

Middleware-based database replication: the gaps between theory and practice

The growth of web applications and the need for high availability and performance in data management systems have driven a long-running interest in database replication (Amza, 2005). However, academic groups often attack replication problems in isolation and overlook the need for complete solutions, while commercial teams take a holistic approach that often misses opportunities for fundamental innovation. Over time this has created a gap between academic research and industrial practice.

The main goal of this work is to narrow that gap along three axes: performance, availability, and administration. It builds on the researchers’ experience in developing and deploying replication systems and draws on experimental evidence from the past decade of open-source, academic, and commercial database replication systems. It proposes two agendas, one for academic research and one for industrial R&D, covering the next 5-10 years (Chen, 2006). The work is framed around the needs and challenges of replicating data in large systems, where middleware-based database replication is most relevant.

Optimistic solution

Data replication is essential for recovering large amounts of data after a disaster or failure. In past decades, most organizations did not recognize this need. Over time, data replication has become necessary in order to save time, effort, and money when a network fails. It is an important technology in distributed database systems that offers high availability and network performance (Rene, 2003). The approach described here is based on optimistic replication algorithms. These algorithms allow replica contents to diverge in the short term in order to support concurrent work practices and to tolerate failures in low-quality communication networks. Their importance is increasing with the growing use of web applications and distributed systems, and with the popularity of data replication over wide area and mobile networks. Optimistic algorithms differ from those used in traditional “pessimistic” systems. Instead of coordinating replicas synchronously, an optimistic algorithm propagates changes in the background and discovers conflicts after they happen. The work explores the solution space for optimistic replication algorithms. It identifies the key challenges these systems face, namely ordering operations and detecting conflicts, and offers a set of solutions for data replication over large networks (Baldoni, 2005).
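
To make the idea of detecting conflicts after the fact concrete, the sketch below uses version vectors, one common technique for this purpose. The source does not name a specific mechanism, so this is an illustrative assumption, and the function name `compare` and the replica ids are hypothetical.

```python
def compare(a: dict[str, int], b: dict[str, int]) -> str:
    """Compare two version vectors (replica id -> update counter).

    Returns "equal", "a_newer", "b_newer", or "conflict" when each
    side has seen an update the other has not (concurrent updates).
    """
    keys = set(a) | set(b)
    a_ahead = any(a.get(k, 0) > b.get(k, 0) for k in keys)
    b_ahead = any(b.get(k, 0) > a.get(k, 0) for k in keys)
    if a_ahead and b_ahead:
        return "conflict"
    if a_ahead:
        return "a_newer"
    if b_ahead:
        return "b_newer"
    return "equal"


# Two replicas that each accepted a local write while disconnected.
status = compare({"r1": 2, "r2": 1}, {"r1": 1, "r2": 2})
```

Here `status` is `"conflict"`: neither vector dominates the other, so the system has to reconcile the two versions rather than simply overwrite one.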

Ganymede: scalable replication for transactional web applications

In large distributed systems, data grids are of great significance because they maintain data in the proper format for further processing. Data grids, large-scale web applications that generate dynamic content, and database services pose significant scalability challenges to database systems. Replication is one of the most common solutions for recovering data after a failure or disaster, but it involves numerous trade-offs. The most difficult one, for large networks, is the choice between scalability and consistency. Commercial systems usually give up consistency. Research solutions typically either offer a compromise (limited scalability in exchange for consistency) or make assumptions about the database and the network. The approach described here is gaining popularity because of its effectiveness. It is named Ganymede, a database replication middleware system intended to provide scalability without sacrificing consistency while avoiding the problems of existing approaches (Cristiana, 2003). Its basic idea is a novel transaction scheduling algorithm that separates update transactions from read-only transactions. Ganymede uses a JDBC driver for transaction submission. It routes updates to a main server and queries to a potentially unlimited number of read-only copies, while ensuring that all transactions see a consistent data state. The work describes the scheduling algorithm and the architecture of Ganymede and presents an extensive performance evaluation that demonstrates the system’s potential.
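
The separation of update and read-only transactions can be sketched as a small router. This is only an illustration of the routing idea, not Ganymede’s scheduler: the `Router` class is hypothetical, the caller is assumed to declare whether a transaction is read-only, and round-robin selection of a read-only copy is an assumption. The consistency guarantees the text mentions are not modeled here.

```python
import itertools


class Router:
    """Hypothetical router: updates go to the master, reads to copies."""

    def __init__(self, master, read_only_copies):
        self.master = master
        # Assumption: read-only copies are picked in round-robin order.
        self._copies = itertools.cycle(read_only_copies)

    def route(self, read_only: bool) -> str:
        if read_only:
            return next(self._copies)
        return self.master


router = Router("master", ["copy-1", "copy-2"])
targets = [router.route(read_only=flag) for flag in (True, False, True, True)]
```

With two copies, the four transactions above are sent to `copy-1`, `master`, `copy-2`, and `copy-1` in that order.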

An adaptive replication algorithm

This approach focuses on replication in distributed databases and addresses the performance of distributed database systems. It presents an algorithm for the dynamic replication of data over large distributed systems. The algorithm is adaptive in the sense that it changes the replication scheme of an object (the set of processors at which the object is replicated) as the read-write pattern of the object changes (the number of reads and writes issued by each processor). The algorithm continuously moves the replication scheme towards an optimal one. For better performance, it can be combined with the concurrency control and recovery mechanisms of a distributed database management system. The algorithm has been tested over a number of networks, and the experimental results showed it to be among the most time-saving schemes. The work also provides a lower bound on the performance of any dynamic replication algorithm.
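
A heavily simplified sketch of this adaptation loop follows. It is not the published algorithm: the expansion and contraction rules, the function name `adapt_scheme`, and the use of per-processor counters over one observation window are all assumptions chosen to show how a scheme can follow the read-write pattern.

```python
def adapt_scheme(scheme: set[str], reads: dict[str, int],
                 writes: dict[str, int]) -> set[str]:
    """Return a new replication scheme for one object.

    scheme: processors currently holding a replica.
    reads / writes: requests issued by each processor in the last window.
    """
    total_writes = sum(writes.values())
    new_scheme = set(scheme)

    # Expansion (assumed rule): a processor without a replica gains one
    # if it reads the object more often than the object is written.
    for proc, read_count in reads.items():
        if proc not in scheme and read_count > total_writes:
            new_scheme.add(proc)

    # Contraction (assumed rule): a replica is dropped if writes issued
    # elsewhere outnumber its local reads. At least one copy is kept.
    for proc in sorted(scheme):
        remote_writes = total_writes - writes.get(proc, 0)
        if reads.get(proc, 0) < remote_writes and len(new_scheme) > 1:
            new_scheme.discard(proc)

    return new_scheme


grown = adapt_scheme({"A"}, reads={"A": 10, "B": 8}, writes={"A": 3})
shrunk = adapt_scheme({"A", "B"}, reads={"A": 9, "B": 1}, writes={"A": 5})
```

In the first call processor `B` reads often enough to earn a replica; in the second, the replica at `B` costs more in propagated writes than it saves in local reads, so it is dropped.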

Current issues in Data Replication

When distributed systems began to be deployed across different locations and data volumes grew, RDBMS experts realized the need to replicate data at multiple locations. They felt that if a disaster or data loss occurred, there had to be a way to recover the complete data. The replication process generates copies of the original data and stores them at multiple locations. In the 1980s only a few RDBMS experts recognized this need. They were experienced IT professionals who had worked with large systems and had already faced the problems that arise when shared data is distributed over a large network. They realized that when users can only access data residing on a local server, nothing can be recovered if that server goes down. In that situation users had to wait until batch processing recovered all the data from remote locations; with data replication, users can access data at all times. A number of investors recognized this and invested heavily in data replication techniques, and data began to be stored at multiple locations to be on the safe side. By the late 1980s a number of small organizations had moved to distributed systems, so the industry grew considerably. Many replication software packages became available that offered data replication in simple steps. AFIC was one of the leading sellers of replication software; it developed its product for sun base and later ported it to other RDBMSs. During development the team came to believe that a single product was not feasible, because it would not fit every environment unless it could handle heterogeneous distributed systems; unfortunately, by the end of development the team had been proven right. In the end every customer needed something different, and the product was not customizable enough. The team spent a lot of time fixing these issues.
By the end of 1994 they had developed a number of systems, one for each company’s requirements. Data replication products need extensive changes and customization; even products from Oracle, Sun, and Sybase often require a large amount of customization to fit into each company’s environment. Customization, maintenance, and update fees are usually much higher than the original cost, and many organizations could not afford such products. Because of the complexities involved, AFIC stopped selling its replication products. A replication solution depends heavily on the customer’s business needs and goals. Things that need to be considered before developing a replication solution include:

- What are the goals?

- What are the primary requirements?

- What is the customer’s budget?

- What type of replication do customers need?

- Is there a single source or multiple sources?

- How tightly must the data be synchronized?

- What kind of communication lines do customers use?

- What is the required bandwidth?

- How many licenses does the customer need to buy?

- Is the team experienced in developing such systems?

- What would be the total cost of the complete solution, including research, licenses, and both internal and external support?

When selecting a replication solution, one must consider all of the points above; they help a great deal in choosing the right product for an organization.

A side-by-side comparison of replication solutions is difficult, but there are some facts every customer must keep in mind when evaluating replication software. Over the past five years the number of replication solutions on the market has grown considerably and the industry has become popular (Current issues in Data Replication, 2007). There is still no “one size fits all” solution, however. Before shopping for or developing data replication software, one must gain a clear understanding of the issues that need to be considered before setting up the final network. Never expect a standardized solution to fit your organization’s requirements automatically. Finally, it is very important to choose or develop a replication product with the customer’s requirements in view: bandwidth allocation, resources provided, amount of data, available budget, communication medium, status and growth of the organization, availability of remote servers, and so on. All these factors help in producing a cost-effective and efficient solution.

Numerous algorithms and solutions are available that make data replication over large distributed systems easy and allow complete data to be retrieved after a network failure or disaster. Every proposed approach has pros and cons, and existing approaches work well only under certain limitations. Data replication over large networks has become popular, and many organizations use these techniques to make their systems recoverable after a disaster. In earlier decades, no one was interested in spending money on replication because it was considered a waste of time and money. This trend has changed over time, and almost every organization now needs a replication system on its network to be on the safe side in hard times. Many approaches are available on the market, but almost all of them need customization to fit the client’s business requirements. No available solution automatically fits every business environment, so there is a strong need for an approach that easily suits all of them.

Proposed Solution

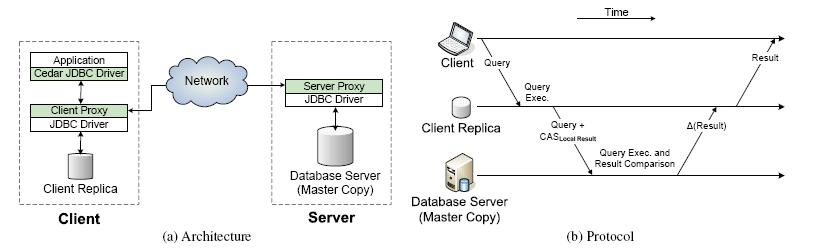

Improved Access over WAN with Accurate Consistency

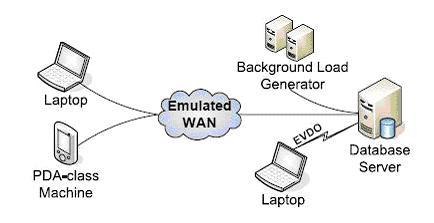

This approach proposes a model for improved database access over large, low-bandwidth networks, with a strict focus on good performance at low bandwidth. The proposed model is named “CEDAR” and is intended to give better database access than other approaches. Cedar provides easy and flexible access to data without degrading consistency. It exploits the disk storage and processing power of the client to accommodate weak connectivity. Cedar’s main principle is to use a stale client replica to reduce the volume of data transmitted from the database server. Content-addressable storage is used to detect and elide commonality between client and server, which achieves this reduction. The principle allows Cedar to take an optimistic approach to solving database problems. The approach has been tested in a number of settings, including laptops, PDAs, WAN and other networks, and the experimental results show that for laptop-class clients Cedar improves the throughput of read-write workloads by 39% to 224% while reducing response time by 28% to as much as 79%.

Large organizations usually use relational databases for their business needs. Over large networks, data replication is important and saves money, effort, and time in case of a disaster. Relational databases lie at the core of business systems such as inventory, order entry, customer relationship management, asset tracking, and resource allocation. A database’s ability to present a consistent view of shared data contributes greatly to its value. Maintaining consistency with good performance is the main challenge in this domain under weak connectivity. It has long been accepted that there is a trade-off between consistency, good performance, and tolerance of poor network quality. Failing to preserve consistency undermines the very property that makes databases attractive for many applications.

This model proposes a new approach to mobile database access without degrading consistency. It shows how to achieve good performance under weak connectivity and low bandwidth. A critical insight of this approach is that the disk storage and processing power of mobile clients can be used to accommodate weak connectivity.

Cedar uses a simple client-server architecture in which the server holds the master copy of the database. Cedar’s ruling principle is that a stale client replica can be used to reduce data transmission volume. The volume reduction is greatest when the client replica and the master copy are identical. This principle allows Cedar to take an optimistic approach to solving database issues. The main advantage of the approach is that no additional code is needed to access the database (KISTLER, 1992). This advantage follows from the optimistic approach, which makes connecting to the database quick and easy.

Background

Thousands of users rely on multiple networks to meet their business needs, and many use their own personal computing devices to run queries and update data stores over a wireless network. Wireless networks are increasingly popular because they make data easy to access, and technologies such as GPRS support clients in many ways. With wireless networks, users can access the data they need at any time and from anywhere. Wireless wide area networks (WWAN) will remain important in the coming years, and the growing popularity of WiFi does not diminish their use. First, WWAN requires much less dense infrastructure, which can be piggybacked on the existing mobile infrastructure, so it can be economically sustained, whereas WiFi coverage is limited. Secondly, WWAN is well able to meet business and scalability requirements, while WiFi cannot scale in some cases. WiFi infrastructure may also fail in situations such as a disaster or network failure, and recovering data is difficult in such situations. Cedar improves performance by using the optimistic approach for easy database access (LARSON, 2003). Cedar uses content-addressable storage (CAS), based on cryptographic hashing, to discover commonality. Like earlier CAS efforts, it assumes that real-world data is collision-resistant with respect to the cryptographic hash function. Trusting in this collision resistance, CAS-based systems treat the hash of a data item as its tag. Remote database access is widely supported through Java Database Connectivity (JDBC). JDBC defines a Java API that enables vendor-independent access to databases, which makes connecting to a database quick and easy.

Design & implementation

Cedar improves the performance of database access over low bandwidth without degrading consistency. Its focus is to improve database access time under the assumption that only weak and limited connectivity is available. Cedar’s central principle is to reduce data transmission volume. The design never assumes that a result computed at the client is correct, so no client-side data is accepted without first checking with the server. Cedar therefore uses a “check on use” scheme: client data is always confirmed with the server before it is accepted. Cedar rejected the alternative “notify on change” approach, in which the server notifies clients whenever data is modified (LAWTON, 2005). Check on use reduces wasteful invalidation traffic over a weak connection in situations where the invalidations are caused by irrelevant update activity. It also simplifies the implementation on both the client and the server side. Cedar is implemented in Java and runs on Linux as well as Windows. A key factor in Cedar’s design was the need to be transparent to both applications and databases. This lowers the barrier to adopting Cedar and broadens its applicability. A proxy-based approach is used to achieve this goal. Cedar does not need access to application source code, because most applications that use databases are written to a standardized API. That API, JDBC in this case, is a convenient interposition point for new code. On the application side, the native database driver is replaced by Cedar’s drop-in driver, which implements the same API. The client proxy first executes a query on the client replica and generates a compact CAS description of the result.
Cedar currently interposes on JDBC, but it could also support ODBC-based C++ and C# applications by using an ODBC-to-JDBC bridge.

Database Transparency

Cedar is transparent on both the client and the server side: the database is entirely unaware of the client replica, and only the server proxy knows about it. The differences received from the server cannot be used to update the client replica, because tracking partially updated rows would be infeasible without extensive database modifications (MANBER, 1994). Cedar uses the JDBC API to access both the client replica and the server database. This design allows Cedar to be completely independent of the underlying database, which brings several advantages. The resulting diversity is useful when a mobile client lacks the resources to run a heavyweight database. In that situation a lightweight database can be used on the client while a database such as MySQL or Oracle is used on the server (ZENEL, 2005). If the client replica fails to interpret a query, Cedar ignores the failure and forwards the query verbatim to the database server. This makes Cedar completely transparent to the database server, and in many cases the database server can be scaled without modifying Cedar. Adopting Cedar in some deployments only requires dynamically substituting Cedar’s JDBC driver for the native JDBC driver. Hashing plays an important role in Cedar’s design. Stale data is likely to produce a tentative result that still has much in common with the authoritative result. Rabin fingerprinting is a commonly used technique for detecting commonality across opaque objects, and it copes well with insertions and deletions (MENEZES, 2001).
Cedar is designed with changing market requirements and the needs of the business world in mind. Instead of fingerprinting, Cedar uses a better approach: it treats the end of each row in a database result as a natural chunk boundary. Cedar’s use of the tabular structure of results involves only a shallow interpretation of Java’s result set data type (TERRY, 1995). Hoarding at the Cedar client is controlled by database hoard profiles, which express hoard intentions within the framework of the database schema. A user can create a database hoard profile manually (GRAY, 1996). Each hoard attribute is associated with a weight, and Cedar uses this weight to prioritize what should be hoarded on the mobile client and what should remain on the server. A number of tools are used in the Cedar framework.
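
The row-as-chunk idea can be illustrated with a minimal sketch. The source does not give Cedar’s wire protocol, so the exchange below is an assumption: the function names are hypothetical, SHA-256 stands in for whatever hash Cedar uses, and rows are plain Python tuples. The client describes its tentative result by per-row hashes, the server returns the ordered hashes of the authoritative result plus only the rows the client lacks, and the client rebuilds the authoritative result.

```python
import hashlib


def row_hash(row: tuple) -> str:
    # Each row is one chunk; its hash serves as the chunk's tag.
    return hashlib.sha256(repr(row).encode("utf-8")).hexdigest()


def client_describe(tentative_rows):
    """Client side: compact description of the tentative result."""
    return {row_hash(r) for r in tentative_rows}


def server_reply(authoritative_rows, client_hashes):
    """Server side: ordered recipe plus only the rows the client lacks."""
    recipe = [row_hash(r) for r in authoritative_rows]
    missing = {h: r for h, r in zip(recipe, authoritative_rows)
               if h not in client_hashes}
    return recipe, missing


def client_rebuild(tentative_rows, recipe, missing):
    """Client side: assemble the authoritative result from both sources."""
    local = {row_hash(r): r for r in tentative_rows}
    return [missing[h] if h in missing else local[h] for h in recipe]


tentative = [(1, "a"), (2, "b"), (3, "old")]      # from the stale replica
authoritative = [(1, "a"), (2, "b"), (3, "new"), (4, "d")]
recipe, missing = server_reply(authoritative, client_describe(tentative))
result = client_rebuild(tentative, recipe, missing)
```

Only two of the four rows cross the network in this example, yet `result` equals the authoritative answer, which matches the check-on-use principle that the server always has the final say.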

Cedar hoards data at the granularity of tables and table fragments. Its approach is based on the long-established concepts of horizontal and vertical fragmentation (NAVATHE, 1984). Horizontal partitioning preserves the database schema. It is likely to be most useful in situations that exploit temporal locality, such as the sales and insurance examples mentioned earlier. Hoarding in Cedar is done through horizontal fragmentation. If a query references a table that is not hoarded on a mobile client, Cedar forwards that query directly to the database server. Cedar offers two mechanisms for bringing a client replica in sync with the database server. If a client has excellent connectivity, a new replica can be created, or a stale replica updated, by simply restoring a database dump created using the client’s hoard profile. Although this is bandwidth-intensive, it tends to be faster and is the preferred approach.
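
As a rough illustration of weighted hoarding and query forwarding, consider the sketch below. The `HoardEntry` fields, the storage budget, and the greedy selection rule are assumptions made for the example; the source only states that hoard attributes carry weights and that queries on non-hoarded tables go straight to the server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HoardEntry:
    table: str
    predicate: str  # horizontal fragment, e.g. a WHERE clause ("" = whole table)
    weight: int     # higher weight = hoard first
    size_mb: int    # assumed size estimate for the fragment


def choose_hoard(profile, budget_mb):
    """Greedily pick the highest-weight fragments that fit the budget."""
    chosen, used = [], 0
    for entry in sorted(profile, key=lambda e: e.weight, reverse=True):
        if used + entry.size_mb <= budget_mb:
            chosen.append(entry)
            used += entry.size_mb
    return chosen


def runs_on_replica(query_tables, hoarded):
    """A query is tried locally only if every table it uses is hoarded.

    Otherwise it is forwarded directly to the database server. A local
    (tentative) result would still be checked with the server.
    """
    return set(query_tables) <= {entry.table for entry in hoarded}


profile = [
    HoardEntry("orders", "region = 'EU'", weight=10, size_mb=40),
    HoardEntry("customers", "", weight=5, size_mb=30),
    HoardEntry("audit", "", weight=1, size_mb=50),
]
hoarded = choose_hoard(profile, budget_mb=80)
```

With an 80 MB budget the `orders` fragment and the `customers` table are hoarded, so a query joining those two can be attempted on the client replica while any query touching `audit` is forwarded.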

Cedar also provides a log-based refresh mechanism, in which the database server maintains a time-stamped update log. This can be done in a number of ways without changing Cedar’s design (Tech. Rep, 1995). Cedar provides high performance at low bandwidth. When a client detects available bandwidth, it can obtain the log from the server and use it to bring its replica up to date. The bandwidth detection mechanism can ensure that the log fetch does not affect foreground workloads (Tech. Rep, 2002). The log has a finite amount of storage, so log entries are recycled as needed. It is the responsibility of the client to obtain log entries before they are recycled. Once a log entry has been recycled, it is difficult for a client that missed it to recover the earlier state from the log.
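
A bounded, time-stamped update log with recycling might look like the sketch below. It is an illustration under stated assumptions, not Cedar’s implementation: the `UpdateLog` class and its methods are hypothetical, timestamps are increasing integers, and a client that asks for recycled entries simply receives `None` to signal that the log can no longer help it.

```python
from collections import deque


class UpdateLog:
    """Hypothetical server-side log with a fixed number of slots."""

    def __init__(self, capacity: int):
        self._entries = deque(maxlen=capacity)  # oldest entry drops out when full
        self._recycled_up_to = 0  # timestamp of the newest recycled entry

    def append(self, timestamp: int, statement: str) -> None:
        if len(self._entries) == self._entries.maxlen:
            # The oldest entry is about to be recycled; remember its timestamp.
            self._recycled_up_to = self._entries[0][0]
        self._entries.append((timestamp, statement))

    def since(self, client_timestamp: int):
        """Entries newer than the client's replica, or None if some are gone."""
        if client_timestamp < self._recycled_up_to:
            return None
        return [(ts, s) for ts, s in self._entries if ts > client_timestamp]


log = UpdateLog(capacity=2)
for ts, stmt in [(1, "UPDATE a"), (2, "UPDATE b"), (3, "UPDATE c")]:
    log.append(ts, stmt)

fresh_enough = log.since(1)  # client already applied entry 1
too_stale = log.since(0)     # client still needs entry 1, which was recycled
```

The second client missed an entry that has already been recycled, so it would have to fall back to the other refresh mechanism, restoring a database dump.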

Results

Cedar was evaluated on a number of networks. The results show that Cedar is feasible and that its performance is a linear function of the amount of data found on the client replica. Cedar’s latency overhead is only visible when the amount of data found is greater than 95%. Even if Cedar finds no useful data on the client replica, it still transfers slightly less data than MySQL. This is because Cedar and MySQL encode data differently over the wire, and Cedar’s encoding is more efficient for the microbenchmark dataset. Because Cedar is written in Java, it can work in different environments by using an ODBC and Java bridge. The approach can also be used over a gigabit Ethernet link, where Cedar and MySQL differ by a factor of almost 10X. In these tests Cedar gave better and more efficient results than the other configurations.

Conclusion

Replication is the sharing of information and resources over a network in order to make a connection or network reliable and effective. It is widely used for recovering data after a disaster or network failure. In the early decades, hardly anyone realized the need for data replication over large networks, so organizations faced many difficulties when a failure occurred. Software and hardware components can also be shared by the replication process. Replication can take various forms. Data replication stores the same data at multiple locations for later access. A computational task can also be replicated in several ways, i.e. replication by time, replication by space, and replication by separate disk. There are two basic forms of replication, active and passive. In active replication the same request is processed at every replica; in passive replication each request is processed on a single replica and the other replicas use its state. Database replication is one of the most complicated tasks when the database is large, because it becomes difficult to deal with a huge amount of data. Data replication has a number of benefits, including data consistency, data warehousing, distributed data, secure data, and high availability.

Seven basic factors are involved in data replication: the amount of data to be copied, network bandwidth, network location, the number of servers involved, the use of clustering, the available space at remote sites, and the available budget.

The primary aim of data replication is to save a complete copy of the source data. The best method of data replication is snap technology, which includes snap copy and pointer-based snapshots. The main advantages of data replication are maintenance, backup, and data recovery. Many solutions use the TOTAL algorithm, which is based on the following: a FIFO queue for one sender at both sides, ring formation, a token held by one queue at any single point in time, appending of received messages to the queue, and issuance of the token to the next queue.

The paper presents different models of data replication used in industry to meet challenging market requirements. It discusses the following models with respect to their approaches and methods for data replication via WAN: Transactional Replication, State Machine Replication, Virtual Synchrony, Automatic Data replication for web applications, Multi-view access protocols for large-scale replication, Middleware-based database replication: the gaps between theory and practice, Optimistic solution, Ganymede: scalable replication for transactional web applications, and An adaptive replication algorithm.

Finally, the proposed approach, Cedar, is designed with rapidly changing market needs and requirements in mind. Cedar is beneficial in many situations, and it allows the network to scale without modification to Cedar. AFIC was one of the leading replication vendors in past years; it had a team of highly skilled developers who worked on numerous data replication products. In 1984 they developed the product for sun base and later ported it to other RDBMSs. During development the team realized that it is not easy to develop a solution that fits every business environment: however efficient a solution is, it still needs to be customized to the client’s business requirements. The team came to believe that a single product was not feasible, because it would not fit every environment unless it could handle heterogeneous distributed systems; by the end of development the team had been proven right. In the end, every customer needed something different to achieve their business goals. A number of solutions on the market offer data replication via WAN in simple steps, but some facts need to be considered when shopping for any replication product. Many different algorithms and solutions offer data replication over large distributed systems, and each has pros and cons under certain assumptions and limitations.
During the past few years the need for data replication over large networks has become widely recognized, and many organizations now take advantage of these methods to meet their business requirements. In the early decades hardly anyone realized the need for data replication over wide area networks, but with the growth of information technology it has become important. Some factors need consideration when developing a data replication model. A few of them are listed below:

Goals of the organization, primary requirements, the customer’s budget, the type of replication the customer needs, the kind of communication lines the customer uses, the required bandwidth, and the total cost of the complete solution, including research, licenses, and both internal and external support.

References

Data Replication, 2008. Data replication. Web.

McQuerry, Steve,(2003). ‘CCNA Self-Study: Interconnecting Cisco Network Devices (ICND), Second Edition’. Cisco Press. ISBN 1-58705-142-7.

Rene Dufrene, 2003, Data replication over the wan. Web.

Data Replication, 2007. Web.

Distributed Systems: Principles and Paradigms (2nd Edition). Andrew S. Tanenbaum, Maarten van Steen (2002). The textbook covers a broad spectrum of distributed computing concepts, including virtual synchrony.

E. Cecchet. C-JDBC: a middleware framework for database clustering. Data Engineering, 27(2):19–26, 2004.

Z. Fei, S. Bhattacharjee, E. W. Zegura, and M. H. Ammar. A novel server selection technique for improving the response time of a replicated service. In Proceedings of INFOCOM, pages 783-791, 1998.

G. Pierre, M. van Steen, and A. S. Tanenbaum. Dynamically selecting optimal distribution strategies for Web documents. IEEE Transactions on Computers, 51(6):637–651, 2002.

Smith. TPC-W: Benchmarking an e-commerce solution. Web.

ADLY, N. 1995. Performance evaluation of HARP: A hierarchical asynchronous replication protocol for large-scale systems. Tech. Rep. TR-378, Computer Laboratory, University of Cambridge.

AGRAWAL, D. AND ABBADI, A.E. 1992. Dynamic logical structures: A position statement for managing replicated data. In Proceedings of the Second Workshop on the Management of Replicated Data (Monterey, CA, 1992), 26-29.

GOLDING, R.A. 1993. Accessing replicated data in a large-scale distributed system. Ph.D. Thesis, Department of Computer Science, University of California, Santa Cruz.

Amza, A. Cox and W. Zwaenepoel – A Comparative Evaluation of Transparent Scaling Techniques for Dynamic Content Servers – 21st International Conference on Data Engineering (ICDE), Washington, DC, USA, 2005.

Chen, G.Soundararajan, C.Amza – Autonomic Provisioning of Backend Databases in Dynamic Content Web Servers – IEEE International Conference on Autonomic Computing (ICAC ’06), June 2006.

R. Baldoni, 2005, Total Order Communications: A Practical Analysis. In EDCC, Lecture Notes in Computer Science, Vol. 3463, pages 38–54.

Current issues in Data Replication, 2007. Web.

NAVATHE, S., CERI, S., WIEDERHOLD, G., AND DOU, J. 1984. Vertical partitioning algorithms for database design. ACM Transactions on Database Systems 9, 4, 680–710.

KISTLER, 1992. Disconnected operation in the Coda file system. ACM Transactions on Computer Systems 10, 1, 3–25.

LARSON, 2003. Transparent mid-tier database caching in SQL Server. In Proceedings of the 2003 ACM SIGMOD International Conference on Management of Data, pp. 661–661.

LAWTON, 2005. What lies ahead for cellular technology? IEEE Computer 38, 6, 14–17.

MANBER, 1994. Finding similar files in a large file system. In Proceedings of the USENIX Winter 1994 Technical Conference (San Francisco, CA, 17–21 1994), pp. 1–10.

MENEZES, A. J., OORSCHOT, P. C. V., AND VANSTONE, S. A. 2001. Handbook of Applied Cryptography. CRC Press, Inc.

GRAY, 1996. The dangers of replication and a solution. In SIGMOD ’96: Proceedings of the 1996 ACM SIGMOD International Conference on Management of Data, pp. 173–182.

Tech. Rep. FIPS PUB 180-2, NIST, 2002.

TERRY, D. B., THEIMER, M. M., PETERSEN, K., DEMERS, A. J., SPREITZER, M. J., AND HAUSER, C. H. 1995. Managing update conflicts in Bayou, a weakly connected replicated storage system. In SOSP ’95: Proceedings of the Fifteenth ACM Symposium on Operating Systems Principles, pp. 172–182.

ZENEL, A. 2005. Enterprise-grade wireless. ACM Queue 3, 4, 30–37.