Introduction

Mobile communication devices have undergone vast changes in the way they function, in their appearance and in the technologies on which they are based. Innovation has been constant, and the current generation of devices allows users to carry out many functions: talking, transferring data, browsing the Internet, connecting to home or office computers, sending SMS messages, downloading songs and movies, and taking video and still pictures with built-in cameras. Just a few decades ago, these devices were huge, weighing more than 40 kilograms, and had a limited range.

This paper examines the history of innovation and development in mobile communication, from zero-generation (0G) devices to fourth-generation (4G) devices. As with other technologies, mobile communication devices took some time to diffuse into society and become mass consumer goods. The paper examines the relationship between technological innovation and commercialisation and attempts to answer how each influences the other. It also discusses several innovative application case studies covering mobile phones, radiophones, GIS- and GSM-based applications, anti-theft applications and live-wire detection instruments for helicopters.

Technology Innovation Drivers and Paradigm Shifts

Ashton (1998) has written about the paradigm shift in England, where the Industrial Revolution uprooted British culture and norms and, in the short span of 50 years, transformed a centuries-old agrarian society into an industrial one. While societies have changed since ancient times, earlier changes were gradual and took centuries to affect a farmer on a rural farm. The Industrial Revolution, however, ‘had a great urgency and allowed no time for people to adapt to the changed circumstance’, and for many families it was traumatic: “Overnight, farmhands left their homes and left the old and the sick and abandoned the fields and moved to the great city slums to work in fetid and unhealthy factories where the only certainty was death, either by overwork, by accident, by starvation or by disease”.

The advent of mobile communication devices and the Internet brought a paradigm shift in the way people worked, interacted and lived, and changed the speed at which communication took place. The devices freed people from being tethered to a fixed landline telephone, so that one could talk to friends, family members and contacts from anywhere and at any time. As demand grew, technology companies realised that mobile communication devices were no longer merely technology items but had become consumer goods that could be positioned and branded as lifestyle devices, which increased demand further. Rising demand in turn forced companies to research new technologies that would improve voice quality and add applications. Organisations that introduced new products stayed afloat, while those that persisted with old technologies and processes soon closed down: organisations had to innovate or perish.

However, innovations and technologies are not always readily accepted by large sections of the populace, and it takes time for a technology to diffuse into society to the point where mass consumption sets in. This section attempts to understand how technology diffuses into society, and whether it is demand for applications that drives innovation and invention, whether innovation and invention lead people to create applications, or whether public demand drives both.

Innovation and Commercialisation

Researchers often debate a chicken-and-egg question: are new technologies made available to people simply because they have been invented, or because they have become commercially viable? The relationship between consumer demand and technological innovation can be regarded as push-pull, with demand pushing innovation and innovation in turn pushing demand. This is where marketing comes in: organisations promote products and technologies that create a need among the public, the public buys the products in ever greater numbers, and the resulting revenue funds the development of new technologies.

This is an endless cycle in which corporations feed the public hunger for new products and, in turn, the public pushes organisations to innovate and produce better goods. The watchword is ‘faster, smaller, cheaper’, and examples abound in automobiles, electronics, cosmetics, computers, information technology and other industries. Many of these products are add-ons to existing products with enhanced functionality. This raises the wider question of innovation, its needs, its utility and customer indulgence. The following table gives details of the factors and outcomes that influence buying decisions (Brown, 2003).

Table 1.1. Example Factor Definitions (Brown, 2003).

As the table shows, the decision to buy an innovative product depends on its perceived advantage to the customer. The factors determining the purchase are Hedonic Outcomes, Utilitarian Outcomes, Social Outcomes and Social Influences, and these are subject to certain barriers. The question that emerges is this: when a new technology is introduced, how do people receive it? Are customers segmented into those who buy a product on the strength of marketing hype and those who wait to see how the product performs before buying it? This question is examined further in the following sections.

Diffusion of Technology

In his book ‘Diffusion of Innovations’, Rogers (1962) describes the manner in which new technology diffuses through a society. He defines diffusion as “the method used by an innovation to be communicated by using certain channels among different groups in a social system”. This process is very important in forming models of consumption and, to a certain extent, it underlies the ‘keeping up with the Joneses’ syndrome. Rogers attempted to explain how innovations and new technologies spread through different social groups and cultures.

The findings hold true for technologies, food items, automobiles, apparel and other consumer goods. Rogers undertook the study in 1950 to find out how effective broadcast advertising was. At that time, many products could be called innovations, and very few people had actually used them. The study brought out a number of findings that are still used in marketing. As Rogers noted, “Contrary to expectations; print media or TV advertisements were not the main reason why people bought products”. In a society, there are different types of adopters of a technology or product: early adopters or innovators, secondary adopters, tertiary adopters, quaternary adopters and, finally, the laggards.

Rogers asserted that innovators would be the first to try out a product. This group was often well educated, came from well-to-do families and had a high net worth. The group also commanded respect in its society, and it was this group that first bought a product or adopted a new technology. Next in line were the secondary adopters, who would wait and watch to see what the innovators had to say about a product and would buy it only if the innovators endorsed it.

The tertiary adopters would wait longer still, to see how the innovators and the secondary adopters felt about a product and to ascertain that its quality and price were right; if everything was acceptable, they would in turn adopt the product. The quaternary adopters came next and required further reassurance that the product was indeed suited to their needs before making their decision. The laggards were the last in line; they were frequently the oldest members of the group and the last to buy or adopt a technology.

Rogers pointed out that, in a closed society, it would be much more effective for manufacturers to target the innovator group first, encouraging its members to buy a product or adopt a new technology and then endorse it. This method of advertising is still widely used by manufacturers of sports goods, fashion products and other goods, with celebrities such as sports stars or film stars seen using a product so that sales pick up. However, overuse of this method, or multiple endorsements by the same star, leads to overexposure and reduces the effectiveness of the medium.

Technology Adoption Lifecycle

Brown (2003) provides a good review of the technology adoption lifecycle, based on Rogers' Diffusion of Innovations model. Her article is based on the lower-than-expected sales of PCs in the US domestic market in 2003. Leading manufacturers such as Gateway, Dell, Apple and AMD had assumed that sales would increase once the price of a PC fell below USD 1,000.

These predictions had been made by a number of leading marketing agencies, who assumed that people interested in technology would buy more products once the technology became affordable. Brown reports that the market researchers did not consider whether price was the only barrier for those interested in buying a PC, or whether there were other barriers. She calls this the ‘non-adoption phenomenon’, and it is very important whenever mass consumption of a technology, or closing the digital divide, is considered. A slightly modified version of Rogers' model is illustrated below, showing the technology adoption life cycle:

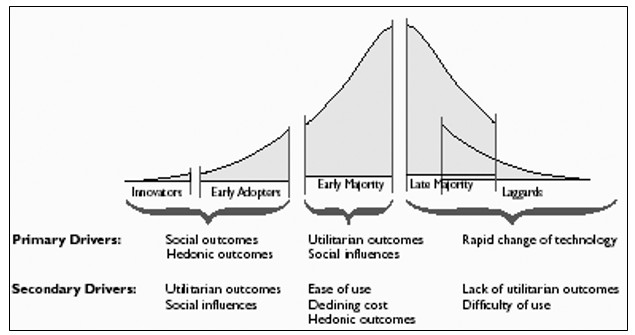

There are five categories of technology adopters: innovators, early adopters, early majority, late majority and laggards. According to Brown, for any innovation, innovators and early adopters together make up 16 percent of adopters, the early majority 34 percent, the late majority 34 percent and the laggards the remaining 16 percent. Non-adopters are not included in this model, since it assumes that everyone will ultimately adopt the technology. As the illustration shows, each category has a number of primary and secondary drivers.
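Rogers defined these categories by how many standard deviations an individual's adoption time lies from the mean adoption time, treating adoption times as roughly normally distributed; the percentages above are rounded areas under that bell curve. The sketch below is a minimal illustration assuming a perfectly normal distribution: it recomputes those areas with the standard library's `math.erf`. The function and constant names are our own.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Category boundaries in standard deviations from the mean adoption time
# (assumption: adoption times follow a normal distribution).
CATEGORY_BOUNDS = {
    "innovators": (-math.inf, -2.0),
    "early adopters": (-2.0, -1.0),
    "early majority": (-1.0, 0.0),
    "late majority": (0.0, 1.0),
    "laggards": (1.0, math.inf),
}


def category_shares() -> dict:
    """Return each adopter category's share of all adopters, in percent."""
    return {
        name: 100.0 * (normal_cdf(upper) - normal_cdf(lower))
        for name, (lower, upper) in CATEGORY_BOUNDS.items()
    }


if __name__ == "__main__":
    for name, share in category_shares().items():
        print(f"{name:15s} {share:5.1f}%")
```

Innovators (about 2.3%) and early adopters (about 13.6%) together cover about 15.9% of the curve, which rounds to the 16 percent quoted above; each majority covers about 34.1% and the laggards about 15.9%.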

Bass Diffusion Model

Bass (1969) introduced the Bass Diffusion Model, which describes mathematically how new technologies and products are adopted as current users of a product interact with novices or potential users. The theory builds on Rogers' Diffusion of Innovations model. Researchers and marketing organisations use the Bass model when they want to introduce a new type of product into the market. While Rogers' model relied on fieldwork and practical observation of US agriculture in the 1950s, the Bass model is based on the Riccati equation.

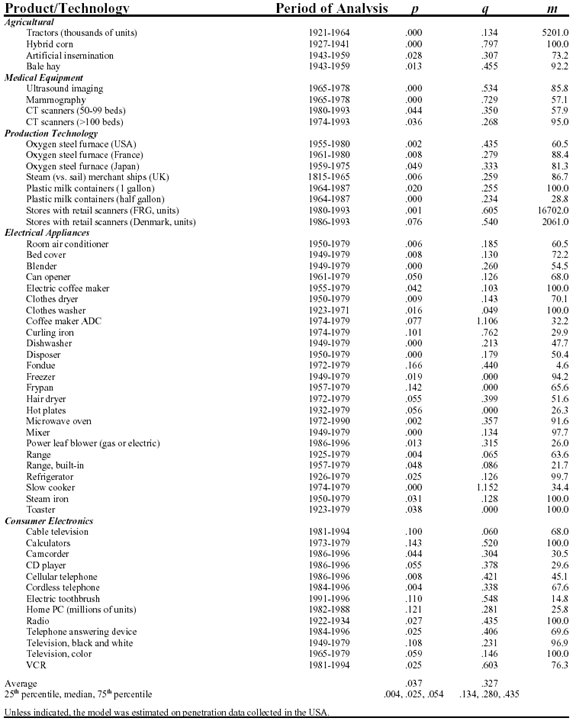

The model has three coefficients:

- m, the market potential: the total number of people in a market who will ultimately use the product.
- p, the coefficient of innovation: the probability that a person not yet using the product will begin to use it because of external influence, such as media publicity and advertising.
- q, the coefficient of imitation: the probability that a person not yet using the product will begin to use it because of internal influence, that is, word of mouth from existing adopters and people in close contact with them.

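The number of adopters over time is predicted with the recurrence:

$$N_t = N_{t-1} + p\,(m - N_{t-1}) + q\,\frac{N_{t-1}}{m}\,(m - N_{t-1})$$

where $N_t$ is the cumulative number of adopters by the end of time period $t$. The second term counts new adopters reached by external influence and the third those reached by imitation.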
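The recurrence can be iterated directly. The sketch below assumes $N_0 = 0$ at launch and uses illustrative values (a hypothetical market of m = 1,000 people, p = 0.02 and q = 0.38); these inputs are assumptions for demonstration, not figures from the source.

```python
def bass_cumulative(p: float, q: float, m: float, periods: int) -> list:
    """Iterate N_t = N_{t-1} + p(m - N_{t-1}) + q(N_{t-1}/m)(m - N_{t-1}).

    Returns [N_0, N_1, ..., N_periods], the cumulative adopters per period,
    starting from N_0 = 0 (assumption: nobody has adopted at launch).
    """
    cumulative = [0.0]
    for _ in range(periods):
        prev = cumulative[-1]
        remaining = m - prev                   # people who have not adopted yet
        external = p * remaining               # adopters reached by media/advertising
        internal = q * (prev / m) * remaining  # adopters reached by word of mouth
        cumulative.append(prev + external + internal)
    return cumulative


# Illustrative (hypothetical) inputs: a market of 1,000 people, p = 0.02, q = 0.38.
N = bass_cumulative(p=0.02, q=0.38, m=1000.0, periods=20)
new_adopters = [N[t] - N[t - 1] for t in range(1, len(N))]
for t, (total, new) in enumerate(zip(N[1:], new_adopters), start=1):
    print(f"period {t:2d}: {new:6.1f} new, {total:7.1f} cumulative")
```

The per-period increments rise, peak and then fall, while the cumulative total approaches m without exceeding it, tracing an S-shaped curve.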

According to the empirical research of Mahajan (1995), the value of p lies between 0.01 and 0.02 and q has a value of 0.38. These values can be substituted into the equation, together with an estimate of the market potential m, to find the number of adopters in a society. When backed by good fieldwork, the model has been found to forecast with a high level of accuracy, and product manufacturers and technology innovators have often used it to forecast sales and revenue among their target groups. Over time, the model has been modified to improve its accuracy and to suit different markets and products.
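For the continuous-time form of the model, a standard closed-form solution exists: the cumulative fraction adopted is $F(t) = \frac{1 - e^{-(p+q)t}}{1 + (q/p)\,e^{-(p+q)t}}$, and when q > p new adoptions peak at $t^{*} = \ln(q/p)/(p+q)$. The sketch below plugs in the values attributed to Mahajan (1995) above. It is an illustration only; the time unit is whatever period p and q were estimated over (assumed here to be years), and the function names are hypothetical.

```python
import math


def bass_peak_time(p: float, q: float) -> float:
    """Time of peak new adoptions in the continuous Bass model.

    If q <= p, imitation never dominates and adoptions are highest at launch.
    """
    if q <= p:
        return 0.0
    return math.log(q / p) / (p + q)


def bass_cumulative_fraction(t: float, p: float, q: float) -> float:
    """Fraction of the market potential m that has adopted by time t."""
    decay = math.exp(-(p + q) * t)
    return (1.0 - decay) / (1.0 + (q / p) * decay)


q = 0.38
for p in (0.01, 0.02):  # the range attributed to Mahajan (1995) in the text
    t_star = bass_peak_time(p, q)
    share = bass_cumulative_fraction(t_star, p, q)
    print(f"p={p}: peak at t={t_star:.1f}, {share:.0%} of m adopted by then")
```

With these values the peak falls at about 9.3 periods for p = 0.01 and about 7.4 periods for p = 0.02. Substituting $t^{*}$ into $F(t)$ gives $F(t^{*}) = (1 - p/q)/2$, so a little under half the market has adopted when new adoptions peak.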

The model works well for predictable, stable goods and technologies, and is difficult to apply to fad products such as beauty products, fashion accessories, toys and other goods whose life cycle may be a few weeks, if not days. It has been used extensively to forecast the market potential and sales of new products such as mobile phones and high-definition TVs.

A number of software applications are available for forecasting technology adoption, and databases based on extensive market research give values of p, q and m for different products (Lilien, 1999).

The following figure gives sample values of p, q and m for different products.

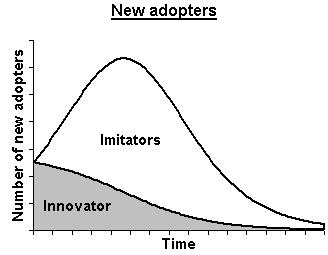

The following figure shows graphically how adoption flows between the two groups, innovators and imitators. Adoptions by innovators are highest at launch and decline with the passage of time, not because the product has lost its appeal but because imitators are fast catching up and fewer non-adopters remain. Adoptions by imitators start from a low value, then rise steeply into a bell-shaped curve with a sharp peak. The time required to reach this peak depends on the utility of the technology and its cost; in the model, the timing of the peak is set by the coefficients p and q.

As the graph shows, the imitator curve rises sharply, then falls and extends for some time beyond the innovator curve. After a certain point, the technology will have become widespread or obsolete, or newer and better products and technologies will have appeared.
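The two curves correspond to the two adoption terms of the Bass recurrence: $p(m - N_{t-1})$ counts innovators and $q(N_{t-1}/m)(m - N_{t-1})$ counts imitators in period t. The sketch below separates them, using hypothetical inputs (m = 1,000, p = 0.02, q = 0.38) rather than data from the figure.

```python
def bass_components(p: float, q: float, m: float, periods: int):
    """Split each period's new adopters into innovators and imitators.

    Innovators: p * (m - N), driven by external influence.
    Imitators:  q * (N / m) * (m - N), driven by word of mouth.
    N is the cumulative number of adopters before the period (N = 0 at launch).
    """
    adopted = 0.0
    innovators, imitators = [], []
    for _ in range(periods):
        remaining = m - adopted
        innovators.append(p * remaining)
        imitators.append(q * (adopted / m) * remaining)
        adopted += innovators[-1] + imitators[-1]
    return innovators, imitators


innov, imit = bass_components(p=0.02, q=0.38, m=1000.0, periods=20)
for t, (a, b) in enumerate(zip(innov, imit), start=1):
    print(f"period {t:2d}: innovators {a:5.1f}, imitators {b:5.1f}")
```

Innovator adoptions fall in every period as the pool of non-adopters shrinks, while imitator adoptions start at zero, peak when about half the market has adopted (where $N(m - N)$ is largest) and then decline.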

The S Curve and Technology Adoption

The S curve, or sigmoid curve, also called the learning curve, is used by businesses to model technology adoption or product use when projecting growth potential. When the growth of a product or the level of adoption of a technology is plotted against time, the curve takes the shape of the letter ‘S’. S curves are used to model growth and performance in many industries and fields, such as predicting the growth of new start-ups, neural networks, statistics (where they form cumulative distribution functions) and ecology (where they are used to model population growth).

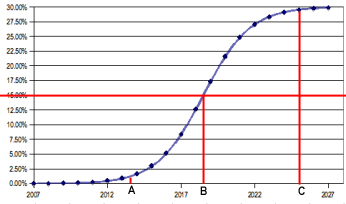

Business processes such as new product sales and the absorption of new technologies have a latent inertia: there is a time lag between the announcement of a technology adoption strategy, the point where adoption takes hold and grows, and the point where it finally saturates. This is best represented by the S curve. The figure above illustrates a plot of the S curve (Phillips, 2004).

In the figure, the plot starts in 2007, when the initiative or product is launched; this is taken as zero percent growth. The curve extends to 2027, and total growth over this period is 30%. After this, the technology has saturated, and growth will either stagnate or reverse and eventually turn negative. The midpoint of the curve, point B, is at 15% growth and occurs between 2018 and 2019. The other significant points are A, where growth is just beginning, and C, where growth starts to stagnate.

The growth phase lies between points A and C: point A occurs around 2014, when growth is about 1 percent, and point C around 2024, when growth is about 29 percent. In effect, the company has taken 10 years to achieve 28 percentage points of growth. Growth is slow or stagnant for about 13 years of the plotted period: the seven years from 2007 to 2014 and the six years from 2024 to 2030. After point C, sales saturate and stagnate, eventually decline, and the technology becomes obsolete.
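One common way to model such an S curve is the logistic function $f(t) = L / (1 + e^{-k(t - t_0)})$, where L is the saturation level, $t_0$ the midpoint and k the steepness. The sketch below is an assumption, not Phillips' (2004) method: it takes L = 30%, places the midpoint B at 2018.5 and solves for k so that the curve passes through point A (about 1% in 2014), using only the approximate readings given in the text.

```python
import math

# Approximate readings from the text's description of the figure (assumptions).
SATURATION = 30.0          # L: total growth (percent) at saturation
MIDPOINT = 2018.5          # t0: point B, 15% growth between 2018 and 2019
POINT_A = (2014.0, 1.0)    # point A: growth of about 1% in 2014


def logistic(t: float, saturation: float, midpoint: float, k: float) -> float:
    """Logistic S curve: saturation / (1 + exp(-k * (t - midpoint)))."""
    return saturation / (1.0 + math.exp(-k * (t - midpoint)))


def solve_steepness(saturation: float, midpoint: float, year: float, level: float) -> float:
    """Solve level = logistic(year, saturation, midpoint, k) for k."""
    return math.log(saturation / level - 1.0) / (midpoint - year)


k = solve_steepness(SATURATION, MIDPOINT, *POINT_A)
for year in (2007, 2014, 2018.5, 2023, 2024, 2027):
    print(f"{year}: {logistic(year, SATURATION, MIDPOINT, k):4.1f}% growth")
```

Because the logistic curve is symmetric about its midpoint, fitting it through A puts the 29% level at 2023, one year earlier than the figure's point C (around 2024). Real adoption curves need not be symmetric, which is one reason an idealised model and an observed plot can differ.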

During these 13 years of low growth, a company must still bear development, sales, manufacturing and administrative costs, which is why companies that do not innovate and bring out new products stagnate and eventually close down. Even for a very large company such as Toyota or Ford, 13 years of stagnation and low growth against only about 10 years of high growth would be unacceptable. In the modern economy, the time frame is much shorter, from a few weeks to a couple of years, and for mobile communication devices it may be a few weeks or, in extreme cases, a few days.

Nevertheless, the S curve stands the test of time: although there may be brief interruptions and the curve may not reach its full shape, the underlying mathematics still holds. With increased competition, fast-changing consumer tastes and the easy availability of alternatives, companies need to develop strategies built on a high-volume, high-value model and exploit their opportunities as fast as possible.

Implications for Mobile: Consumer Driven or Technology Driven?

Cellular technology differs from critical sectors such as healthcare and medicine. Breakthroughs such as penicillin and open-heart surgery saved lives; innovation and development in these fields were driven by the need to reduce disease and human suffering, and their inventors have been honoured as saviours of mankind.

The growth in computer processing speed, RAM and hard disk capacity deserves special mention. These developments took place because the military needed more computing power to run defence applications and space exploration programmes. Computing power also increased because the design industry needed faster systems and, above all, because the gaming industry wanted more power to build 3D games. Chip speeds increased and drove the development of new applications, which in turn demanded ever-higher computing power. Saving human lives never came into the question; customers eagerly lapped up the new products that technology companies launched (Argote, 2008).

The mobile communication devices sector has seen a similar trend. While 2G brought cheaper technology that made communication easier, later applications such as ring tone downloads, song and movie downloads and online shopping can hardly be regarded as life-saving. Rather, these applications were designed to entertain customers and to increase profits for manufacturers.

Businesses and professionals had different needs: they wanted to access their office networks while travelling and to work with large amounts of data, and so demand for more bandwidth and speed arose. This demand led to the development of 3G, 3.5G and now even 4G and, more importantly, to applications for these devices that could fulfil customers' needs (CSTED, 2008).

Timeline of Mobile Communication Devices Invention

The invention of mobile communication devices did not happen in isolation; developments in many other technologies and processes led to the innovation of cellular phones. Examples of related technologies include small batteries, microcircuits, advanced plastics, antennas, radio technology, computer chips, LED screens, the Internet and mass production technologies. In many cases, technologies developed for other applications were adapted for use in mobile communication devices. The timeline of these developments is given below. Note that there is often a gap between the announcement of a technology and its availability to the public.

This is partly because the technology has not been fully developed and is still at the experimental stage, and partly because applications have not yet been developed for it. This is the case with 4G systems: while the technology is viable and available, applications and devices have not yet been developed, and this may happen around 2015 (CSTED, 2008).

Table 1.2. Timeline of Mobile Communication Devices Development (CSTED, 2008).

The above table gives the main milestones; developments in other technologies were also used in mobile communication devices. The timeline summarises developments that occurred in different countries.

Summary

This section has discussed how technological innovations diffuse and what drives people to accept technology. Diffusion occurs in phases: innovators take the lead, followed by the early adopters, the early majority, the late majority and finally the laggards. Historically, there has been a lag of several years between innovators and laggards before telephones, automobiles and computers were accepted by large numbers of people. A technology is used by larger numbers of people when its benefits are evident and when it is affordable.

To make technology affordable, technology companies have to bear the initial development costs rather than pass them on to consumers. Once more and more people start using the products, companies are able to recover their investment, and the cycle becomes self-reinforcing. Only large companies with deep pockets can afford to enter this cycle and bear the high start-up costs. The author of this thesis answers the question of whether new technologies are made available to people simply because they have been invented, or because they have become commercially viable, as follows:

“It is a self-adapting cycle in which technology is invented to whet the appetite of an uncertain public, and the invention of technology leads firms to develop new applications so that people can use them, which creates demand. So, one sustains and drives the other. There comes a point where the current technology cannot be advanced further and applications stagnate. At this juncture, a paradigm shift brings in new technology and the cycle continues. We have seen this in computers, with storage devices such as floppy discs replaced by USB drives, and with film cameras made obsolete by digital cameras, and the cycle will continue”.

Mobile Communication Devices: History and Evolution

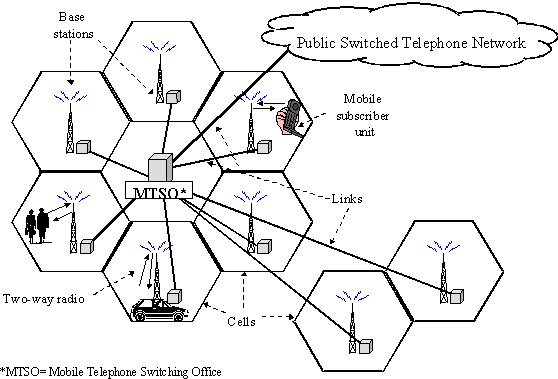

The history of mobile communication devices began with hand-held radiotelephones and radios in vehicles. Early two-way radios, also called mobile rigs, were fitted in police cars, taxicabs, ambulances and fire trucks. The technology was first developed because emergency care workers, defence and paramilitary forces, fire fighters, loggers, oil rig personnel and others had to keep in constant touch with their headquarters and their own teams. The radios were open-band systems, and anyone could tune in to the frequency and listen to private conversations, so there was a need for technology that allowed only selected people to speak to each other.

These devices were not true mobile phones, since they were not connected to the public telephone network. Users could not dial any number they wanted; instead, a general call was issued and any member who had a set switched on could respond. The devices were fixed to vehicles and could not be carried around in a pocket. Motorola built a walkie-talkie that could be carried in a backpack, and the army used it.

Later, bag phones were developed that could be carried in a bag and powered from a car's cigarette lighter socket. The concept of true cellular technology was born in December 1947, when Douglas Ring and Rae Young of Bell Labs proposed a hexagonal cell structure for mobile phones. Porter, from the same organisation, suggested building cell towers at the vertices of the hexagons, with directional antennas that would send and receive signals in three adjacent hexagonal cells. However, this remained a concept, since the technology to implement it did not yet exist; only in the 1960s did Bell Labs scientists Frenkiel and Engel develop the equipment to put it into practice.

In Sweden, Ericsson had meanwhile developed Mobile Telephone system A (MTA) in 1956 and released functional commercial models. Unlike earlier devices, these did not require trained manual operators: subscribers could call from one MTA device to another by dialling a number. Its main disadvantages were cost and weight; the device weighed 40 kilograms and found very few takers. A later model, built in 1965, weighed about 9 kilograms and used DTMF signalling. This service attracted 150 customers, a number that grew to 600 by 1983, but it eventually proved unsustainable and was closed (CSTED, 2008).

Meanwhile, in Moscow, Leonid Kupriyanovich had created a radiophone, a ‘wearable automatic mobile phone’, which he called the LK-1. It weighed about 3 kilograms, connected to a base station with a range of 30 kilometres and ran for about 30 hours on its battery. He filed a patent, claiming that the base station could serve multiple customers. By 1958, he had improved the device, called the ‘correlator’, so that it weighed 0.5 kilograms and could serve more customers. The USSR government started development of a national civil mobile phone service called Altai, which was launched in 1963 and provided service in 30 Russian cities.

Bulgaria, a Soviet satellite state, created the RAT-0.5, a pocket automatic mobile phone, and showed it at the Interorgtechnika-66 exhibition. A single base station could serve six telephone lines. By 1967, mobile phones had slowly evolved, but users had to remain within the cell area covered by one base station for the duration of a call; if they moved from one area to another, the connection was cut off and they had to call again. In 1970, Amos Joel of Bell Labs designed a call handoff system that allowed a single call to pass through multiple cell areas without loss of connection. AT&T's proposal to provide cellular phone service was accepted by the FCC.

After many years of fighting its case, AT&T was granted a licence in 1983 for the Advanced Mobile Phone System (AMPS), an analog service allotted the 824 to 894 MHz band. By 1990, the analog system had been replaced by digital services. Earlier, in 1971, Finland had seen the launch of the first mobile network, called ARP, which is also referred to as the 0G, or zero-generation, cellular network (CSTED, 2008).

The first handheld mobile phone call was made on 3 April 1973, when Motorola employee Martin Cooper called Joel Engel of AT&T's Bell Labs in front of reporters to show off his mobile phone, the DynaTAC. The device was ‘small enough to be easily carried and fit very comfortably in the car trunk’. Thus was born the concept of the cellular device, which evolved into the personal communication device that today lets users surf the Net, download mail, complete office tasks and watch movies. However, these devices required the support of successive generations of cellular networks, called 1G, 2G, 3G and so on (CSTED, 2008).

Development of Cellular Networks

Mobile phones are just devices; to work, they must be supported by suitable cellular networks. Over the years, many generations of networks were developed, called 0G, 1G and so on, and the development of mobile devices has depended largely on the networks that supported them. Building a mobile communication device with multiple functions and capabilities requires a certain amount of technical skill.

However, there must also be a cellular network for connectivity and a service provider to operate it. Once these are in place, more and more customers can subscribe to the service and provide sufficient revenue to the operator and the developer. With revenue flowing, positive changes such as innovation, greater bandwidth and new applications follow, and as the number of subscribers increases, the operator can reduce prices. 0G, the zero-generation technology, did not support voice across a distance, and its devices were very heavy, cumbersome and expensive.

1G overcame these problems and allowed people to communicate across a wider area, but both parties essentially had to remain in the same network and cell. The devices were still unwieldy and required some expertise to operate. 2G changed the technology, and people could now connect to landlines through their mobile devices. The Internet had also appeared, people wanted to use it through their telephones, and 3G made it possible to access the Net.

Some important generations of cellular networks are explained in this section.

The following table provides details of the mobile telephony standards for each generation. Only 3G and later generations are given, since the older generations of technology are now obsolete (Young, 2008; Harri, 2006).

Zero Generation or ‘0G’

Zero-generation networks served mobile radiotelephones and were the ancestors of later cellular devices. Technologies included IMTS (Improved Mobile Telephone Service), Push to Talk, AMTS (Advanced Mobile Telephone System) and MTS (Mobile Telephone System). Unlike the closed-loop mobile radio systems used by the police or defence forces, these systems were available to private subscribers and formed a component of the public switched telephone network. Each device was allotted an individual telephone number, so subscribers could dial a number and talk to the person at the other end. The devices were quite bulky and had to be fixed in trucks and cars, although models that could be carried in suitcases were also available. The main customers were people and firms in industries such as logging and construction, and even film celebrities (Harri, 2006).

People could buy them from two-way radio dealers, wireline common carriers (that is, telephone companies) and other electronic hardware dealers. In the US, until the AMPS system was ready, the Radio Common Carrier (RCC) service was in use from 1960 until 1980, when it became obsolete and was phased out. The system competed with IMTS and MTS and was run by private operators who provided the service to individuals. The concept of roaming had not yet arrived, and a device could be used only in the city or region where the service was provided; roaming was in fact discouraged, since no system yet existed for distributing and sharing call charges. The networks ran in the UHF band of 454 to 459 MHz and the VHF band of 152 to 158 MHz, and used two-tone sequential paging to alert a mobile phone that someone was trying to call it (Harri, 2006).

First Generation – 1G

First Generation or 1G cellular phones used analog standards that were drafted in 1980. 1G was later replaced by 2G: whereas 1G transmitted analog radio signals, 2G transmitted digital ones (Mishra, 2004). By current standards, the quality, range and connectivity of 1G were very poor, and voices could barely be heard through the static and interference. Nevertheless, 1G was the forerunner of later generations of cellular technology, even though it offered only voice capability; sending music and images over cellular phones had not yet been conceived.

Second Generation – 2G

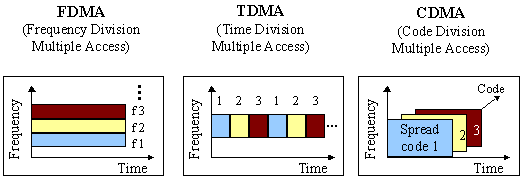

2G cellular networks were launched commercially in 1991 by Radiolinja in Finland, using the GSM standard. Compared with 1G, 2G digitally encrypted conversations, used spectrum much more efficiently, allowed much higher penetration levels and introduced data services such as SMS text messages and downloadable ring tones. Once 2G was launched, the earlier analog systems were grouped together under the name 1G and quickly became obsolete. In 2G, radio towers relay signals to and from mobile devices and connect them to the telephone system. By multiple-access (multiplexing) scheme, 2G systems are classified as TDMA or CDMA.

Several standards emerged, among them GSM (TDMA based), PDC (TDMA based) and iDEN (TDMA based). GSM originated in Europe but was adopted by many countries, and 80% of the world's subscribers now use it. IS-95, also called CDMA or cdmaOne, was used in the USA and Asia by 17% of subscribers. However, many operators are now switching over to CDMA. PDC was used in Japan, while iDEN was used by NEXTEL in the US and Canada. In the USA, 2G technologies are also called personal communications service (Mishra, 2004).

- FDMA: Frequency Division Multiple Access splits the available spectrum into separate frequency channels. At any instant only one subscriber is assigned to a channel, which stays blocked to other users until the call ends or is handed over to another channel. A full-duplex FDMA link needs a pair of channels, one to transmit and one to receive. First-generation analog systems use this technology.

- TDMA: Time Division Multiple Access increases spectrum capacity by splitting each frequency channel into time slots. Each user gets the full channel bandwidth, but only during its assigned slot; other users share the same frequency in different slots, and the base station switches among them continuously. This technology is used prominently in 2G cellular networks.

- CDMA: Code Division Multiple Access is based on spread-spectrum technology, which has long been used in defence applications. Many users can share the channel at the same time, which increases spectrum capacity: each call is assigned a separate code that distinguishes it from the other calls, and the whole radio band is used for transmission. CDMA also supports soft handoff, in which a terminal communicates with several base stations at once. The technology is used in 3G networks and in the IMT-2000 family.
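The way separate codes let several calls share one band at the same time can be shown with a small Python sketch. It assumes an ideal, noise-free, synchronised channel and short 4-chip Walsh codes; real CDMA systems such as IS-95 use much longer codes plus scrambling, and the names `walsh_codes`, `spread` and `despread` are illustrative only.

```python
# Minimal CDMA sketch with orthogonal Walsh codes (ideal channel, no noise).

def walsh_codes(n: int) -> list[list[int]]:
    """Build n Walsh (Hadamard) codes of length n; n must be a power of two."""
    codes = [[1]]
    while len(codes) < n:
        codes = [c + c for c in codes] + [c + [-x for x in c] for c in codes]
    return codes

def spread(bits: list[int], code: list[int]) -> list[int]:
    """Map each bit to +1/-1 and multiply it by every chip of the user's code."""
    chips = []
    for b in bits:
        symbol = 1 if b else -1
        chips.extend(symbol * c for c in code)
    return chips

def despread(signal: list[int], code: list[int]) -> list[int]:
    """Correlate the combined signal with one user's code to recover that user's bits."""
    n = len(code)
    bits = []
    for i in range(0, len(signal), n):
        corr = sum(s * c for s, c in zip(signal[i:i + n], code))
        bits.append(1 if corr > 0 else 0)
    return bits

codes = walsh_codes(4)
users = {"A": [1, 0, 1], "B": [0, 0, 1], "C": [1, 1, 0]}
# All users transmit at once over the whole band, so their chips simply add up.
streams = [spread(bits, codes[i + 1]) for i, bits in enumerate(users.values())]
channel = [sum(chips) for chips in zip(*streams)]

for i, name in enumerate(users):
    print(name, despread(channel, codes[i + 1]))
```

Because the Walsh codes are mutually orthogonal, correlating the summed signal with one user's code cancels the other users' contributions exactly; with noise or timing errors the cancellation is only approximate.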

Using digital signals between handsets and relay towers increases system capacity. Digital voice data can be multiplexed, and codecs can compress it more efficiently than analog voice, so more calls and traffic fit into a given amount of radio bandwidth. In addition, digital systems were designed to emit less radio power from the handsets, so batteries could be smaller, and cells could also be made smaller, allowing more cells to be placed in the same area. Gradually, the cost of cell towers and other hardware fell.
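To put rough numbers on this capacity gain, the Python sketch below counts voice calls per MHz. The channel widths and codec rates are assumptions taken from the published AMPS and GSM specifications, not from this paper's sources, and frequency reuse, guard bands and signalling channels are ignored, so the figures illustrate the trend rather than real network capacity.

```python
# Rough voice-capacity comparison for 1 MHz of spectrum (one direction).
# Assumed figures: AMPS analog channels are 30 kHz wide; a GSM carrier is
# 200 kHz wide and carries 8 full-rate calls (16 with half-rate codecs).

def analog_calls(bandwidth_khz: float, channel_khz: float = 30) -> int:
    """One analog (FDMA) call per channel."""
    return int(bandwidth_khz // channel_khz)

def gsm_calls(bandwidth_khz: float, carrier_khz: float = 200, slots: int = 8) -> int:
    """Each digital carrier is shared by several calls via time slots."""
    return int(bandwidth_khz // carrier_khz) * slots

BANDWIDTH_KHZ = 1000
print("AMPS analog:", analog_calls(BANDWIDTH_KHZ))               # 33
print("GSM full rate:", gsm_calls(BANDWIDTH_KHZ))                # 40
print("GSM half rate:", gsm_calls(BANDWIDTH_KHZ, slots=16))      # 80

# Codec compression: 64 kbit/s PCM telephony versus the 13 kbit/s GSM full-rate codec.
print(f"compression factor: {64 / 13:.1f}")                     # 4.9
```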

2G systems were adopted quickly by large numbers of customers because of the advantages they offered. Lower power requirements meant that batteries lasted longer and could be smaller and lighter, which led to much smaller, more compact handsets. Digital voice encoding made digital error checking possible, which reduced noise and static and improved audio quality. Digital data services also allowed SMS and email to be sent and received. Since calls were digitally encrypted, they were more secure and better protected against eavesdropping (Mishra, 2004).

2G systems also had some inherent disadvantages that operators did not publicise. In sparsely populated areas, the weaker digital signal from a handset may not be strong enough to reach the cell tower; the problem is worse at higher frequencies and somewhat reduced at lower ones. Analog signals decay smoothly as conditions worsen, while digital signals degrade in abrupt steps. When conditions are good, digital signals are very good; when conditions worsen slightly, an analog signal droops and picks up static, while a digital signal may drop out and fade occasionally. When conditions are much worse, the digital signal is lost completely, while an analog signal persists a little longer and allows a few words to be exchanged.

Digital technology reduced hiss, background noise and static, but because the codecs use lossy compression, the timbre and range of the voice are reduced. Finer tonal quality may be lost with digital transmission even though the voice comes through clearly. Users may have to ask who is speaking even when their spouse or children call, because the voice can take on a metallic quality that makes recognition difficult.

Generation – 2.5G

2.5G lies midway between 2G and 3G. It is a variant of 2G that adds a packet-switched domain alongside the circuit-switched domain, and timeslot bundling was also used for circuit-switched data services (HSCSD) (Mishra, 2004). It shares some 3G features, such as packet switching, but is built on the 2G protocols used in GSM and CDMA networks; GPRS is a 2.5G standard. Data rates are below 144 kilobits per second, much slower than 3G. It has also been suggested that 2.5G was a marketing gimmick introduced by operators to differentiate their services from 2G.

Generation – 2.75G

Introduced in 2003, 2.75G is the closest approach to true 3G. It is backward compatible and offers improved data transmission rates. It is also known by other names: EDGE – Enhanced Data rates for GSM Evolution; EGPRS – Enhanced GPRS; and IMT-SC – IMT Single Carrier. EDGE was first introduced by Cingular in the US, is now standardised by 3GPP and belongs to the GSM family.

EDGE roughly triples the capacity of existing GSM. It uses 8PSK modulation and provides much higher data transfer rates, so it can carry packet-switched applications such as web browsing over HTTP, high-speed video services and others. With Evolved EDGE, data rates rose to 1 megabit per second and latency was reduced. 2.75G can be implemented as an enhancement to existing 2.5G GPRS and GSM networks, which makes upgrading easy: EDGE and EGPRS can be used on any network where GPRS has been upgraded.

Introducing EDGE into a GSM core network requires no changes to its software or hardware, but EDGE-compatible transceiver units have to be installed in the base stations; vendors provide the required software options so that the feature can be activated. EDGE uses GMSK – Gaussian minimum-shift keying for the four lower of its nine modulation and coding schemes and 8PSK – eight-phase shift keying for the five upper ones. With 8PSK, each change in carrier phase encodes a 3-bit word instead of a single bit, which triples the gross data rate over GSM. EDGE also uses Incremental Redundancy: instead of retransmitting corrupted packets, it sends additional redundancy information that the receiver combines with what it has already received, increasing the probability of correct decoding.
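The 3-bits-per-symbol point can be illustrated with a small Python sketch of 8PSK mapping. It assumes a plain Gray-coded constellation; the actual EDGE mapping defined in 3GPP TS 45.004 also rotates the constellation by 3π/8 on every symbol, which is omitted here, and the function names are hypothetical.

```python
import cmath
import math

# Gray-coded order of the eight phases: neighbouring phases differ by one bit.
GRAY = [0b000, 0b001, 0b011, 0b010, 0b110, 0b111, 0b101, 0b100]

def modulate(bits: list[int]) -> list[complex]:
    """Group bits into 3-bit words and map each word to one of 8 carrier phases."""
    assert len(bits) % 3 == 0, "8PSK needs a multiple of 3 bits"
    symbols = []
    for i in range(0, len(bits), 3):
        word = bits[i] << 2 | bits[i + 1] << 1 | bits[i + 2]
        k = GRAY.index(word)
        symbols.append(cmath.exp(1j * 2 * math.pi * k / 8))
    return symbols

def demodulate(symbols: list[complex]) -> list[int]:
    """Snap each symbol to the nearest of the 8 phases and turn it back into 3 bits."""
    bits = []
    for s in symbols:
        k = round(cmath.phase(s) / (2 * math.pi / 8)) % 8
        word = GRAY[k]
        bits += [(word >> 2) & 1, (word >> 1) & 1, word & 1]
    return bits

data = [1, 0, 1, 1, 1, 0, 0, 0, 1]
tx = modulate(data)
print(len(data), "bits ->", len(tx), "symbols")  # 9 bits -> 3 symbols
print(demodulate(tx) == data)                    # True
```

Nine bits become three 8PSK symbols, whereas GMSK at one bit per symbol would need nine. The demodulator tolerates phase errors smaller than π/8 because it picks the nearest of the eight phases.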

EDGE provides end-to-end latency of less than 150 milliseconds and data rates of up to 236.8 kilobits per second in packet mode, so it can handle about four times as much traffic as standard GPRS. EDGE Evolution improves on EDGE further. Halving the transmission time interval, from 20 milliseconds to 10 milliseconds, reduces latency; in the network, dual carriers, higher-order modulation (32QAM instead of 8PSK) and higher symbol rates can bring latency down to 100 milliseconds and raise bit rates to a peak of 1 megabit per second. Using two antennas improves signal quality, which raises average bit rates and spectrum efficiency. Speeds of 500 kilobits per second are available on mobile Internet connections (Moldcell, 2008).
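The headline EDGE figures can be checked with simple arithmetic. The per-timeslot rate used below (59.2 kbit/s for MCS-9, the highest EDGE coding scheme) is a standard 3GPP value assumed here rather than taken from the cited source, and the 236.8 kbit/s figure is taken to correspond to four bundled timeslots.

```python
# Worked EDGE rate arithmetic. Assumption: MCS-9 carries 59.2 kbit/s per GSM timeslot.
MCS9_KBPS_PER_SLOT = 59.2

def edge_rate_kbps(slots: int) -> float:
    """Peak packet data rate when several timeslots are bundled for one user."""
    return MCS9_KBPS_PER_SLOT * slots

print(f"4 slots: {edge_rate_kbps(4):.1f} kbit/s")  # 236.8
print(f"8 slots: {edge_rate_kbps(8):.1f} kbit/s")  # 473.6

# Gross-rate gain from modulation alone: bits carried per symbol.
GMSK_BITS_PER_SYMBOL = 1
PSK8_BITS_PER_SYMBOL = 3
print("8PSK/GMSK:", PSK8_BITS_PER_SYMBOL // GMSK_BITS_PER_SYMBOL)  # 3
```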

The development of 2.75G was a prelude to the full development of 3G. Many 2.75G features are shared with 3G, and 3G brought further applications that gave customers more entertainment and work-related convenience.

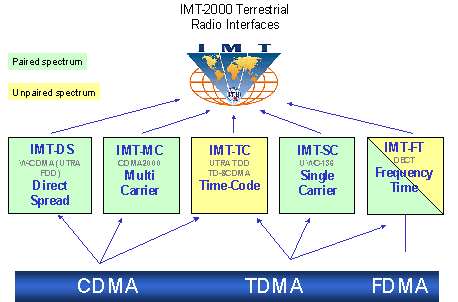

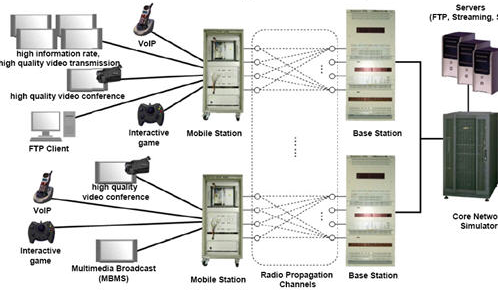

Third Generation – 3G

3G was the next generation of cellular phone technology standards, introduced in 2005 and built on the ITU – International Telecommunication Union standards grouped under IMT-2000. It lets operators offer more advanced services and achieve greater network capacity through improved spectral efficiency. Services include wide-area wireless voice telephony, video calls, HSPA data transmission and broadband wireless data connectivity. Downlink speeds of 14.4 megabits per second and uplink speeds of 5.8 megabits per second are claimed.

More importantly, the technology can carry voice and data as streaming media, so a customer in an area with good connectivity can listen to streaming music and watch streaming video (Kreher, 2007).

NTT DoCoMo of Japan was the first to launch the service, in May 2001, using W-CDMA technology. SK Telecom of South Korea followed in January 2002 using 1xEV-DO, and the KTF network also adopted 3G on EV-DO technology. In Europe, Manx Telecom on the Isle of Man, then owned by British Telecom, launched services. Telenor launched 3G in December 2001, but no commercial handsets were yet available, so none of its customers had the required type of handset. 3G networks gradually spread and were available in 40 countries by December 2007. While no one doubted the advantages of the technology, high licensing fees, high infrastructure costs and high costs for end users were a problem and led to the collapse of several telecom companies (Kreher, 2007).

David (2007) describes how the telecom crash of 2002 to 2004 came about. Building 3G networks required massive investment in infrastructure and equipment, and the sector depended on core telecom engineering research, the development of carrier protocols and the development of complex software. By 2000, 3G radio spectrum was being auctioned to mobile network operators in Europe. A similar process in the USA had failed, and it had been concluded there that the industry could not be expected to bear such bid costs. The German and British governments nevertheless ignored the lessons from the US and went ahead with auctions in the hope of a substantial windfall tax. They left the operators to bear the full risk of speculative debt, even though the real development effort lay in the 3G cellular industry rather than in the 3G radio spectrum itself (Suk, 2007).

The development involved creating new types of telephone exchange able to handle voice and data traffic at high speed; super digital highways that could pack about 11,000 traffic streams into one optical fiber and extract them again; and ultra-high-speed optical backbones with control and maintenance layers to keep the technology running. This required billions of dollars of investment. In its short-sighted greed, the British government asked for sealed auction bids and offered only a limited number of licenses. The operators were in a quandary: they had to win a license or be out of business.

To make sure they won licenses, the operators went overboard and bid very large sums: about 22.5 billion GBP in the UK alone and another 30 billion GBP in Germany. Per megahertz, this was about 10 times what the TV broadcasting companies were charged, and the bidders took on very heavy debts that could not be serviced. The stock market reacted badly: share prices fell rapidly, worsening the operators' debt-to-assets ratios and damaging their credit ratings. As a result, no banks or financial institutions were willing to lend them money, and the companies could not pay for the equipment and technology they had bought.

Consequently, the systems could not be maintained or upgraded, and the whole industry was put in jeopardy. Having invested excessively in infrastructure, the telecom companies were rendered insolvent, and even their landline businesses suffered. The UK and German governments did not attempt to rerun the auctions or repair the damage; many companies went bankrupt and about 100,000 jobs were lost.

This was a failure of governments that pursued tax revenue without understanding the complexities of the industry. Governments in New Zealand and Australia later learnt from these mistakes: they held open auctions, which attracted lower bids, and adopted a strategy of revenue sharing rather than heavy license fees. The following figure shows the capabilities and connectivity of 3G networks (Young, 2008).

As seen in the figure, speeds of 300 megabits per second are possible if the 3G network is configured with four MIMO – Multiple-Input Multiple-Output antennas on both the transmitting and receiving sides. This configuration was expected to be implemented by 2009. For comparison, office networks that transfer data between adjacent computers on an intranet are typically designed for 100 Mbps, so the experimental 3G configuration would be about three times faster.

In practice, 3G networks are slower than these experimental speeds. A stationary or slowly walking user can get about 2 megabits per second, but in a moving car the speed drops to 338 kilobits per second. That is sufficient for voice calls or music, but streaming or downloading films on the move would be slow. Operators try to tempt users with download plans such as 3 GB for 20 GBP per month or 15 GB for 30 GBP per month. It should be noted that for ordinary users who only want to talk or send SMS, 2G and its later variants are sufficient.
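To make the speed difference concrete, the Python sketch below estimates how long a film download would take at the two 3G speeds quoted above. The 700 MB file size, decimal units (1 MB = 8,000 kilobits) and a link that sustains its nominal rate with no protocol overhead are assumptions for illustration; real throughput would be lower.

```python
def download_minutes(size_mb: float, rate_kbps: float) -> float:
    """Minutes to transfer size_mb megabytes at rate_kbps kilobits per second."""
    size_kbit = size_mb * 8 * 1000  # decimal units: 1 MB = 8,000 kbit
    return size_kbit / rate_kbps / 60

FILM_MB = 700  # assumed size of one film
for label, rate in [("stationary, 2 Mbit/s", 2000), ("in a car, 338 kbit/s", 338)]:
    print(f"{label}: {download_minutes(FILM_MB, rate):.0f} min")

# Films of this size that fit in a 3 GB monthly plan (1 GB = 1000 MB assumed).
print(3000 // FILM_MB, "films")  # 4 films
```

Roughly three quarters of an hour when stationary becomes more than four and a half hours in a car, which is why streaming films on the move is impractical at these rates.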

3G and its variants are useful for those who need to connect to their offices to transact business or exchange documents, typically courier companies, stockbrokers and others who use the Internet in real time. Although 3G phones such as the iPhone have become popular, the technology is still young and needs substantial development-team support to monitor and upgrade the systems. 3G license fees make the technology expensive, and many telephone operators are deeply in debt. To survive the current economic crisis, with its reduced spending, they must cut operating costs, and this is not possible while running 3G services.

If they drop 3G in favour of 2.5G, they lose the entire future market, which has created a serious dilemma. Users are deferring handset purchases because expensive handsets and plans deter them from subscribing to 3G. Apart from the hype about connectivity, most users do not need to be permanently connected, and they can always download films or songs at home over their Internet connection. Not enough applications that users want have been developed yet, and this will take time. Until then, 3G operators need deep pockets to sustain themselves.

Fourth Generation – 4G

Fourth-generation (4G) systems will be IP-based and are expected to reach commercial use sometime between 2012 and 2015. The technology is currently experimental; speeds of 1 gigabit per second are possible, with assured speeds of 100 Mbps. It is still not clear how this technology will be marketed or who its likely users are, as the majority of users are.

When fully operational, 4G systems are designed to deliver IP-based voice, data and streaming media anywhere, at any time. Access schemes proposed for 4G include SC-FDMA – Single Carrier FDMA and OFDMA – Orthogonal Frequency Division Multiple Access, as well as MC-CDMA – Multi-Carrier Code Division Multiple Access and Interleaved FDMA. These schemes are relatively simple to equalise at the receiver, which is important in MIMO environments, because MIMO spatial multiplexing already demands greater receiver complexity. Improved modulation methods such as 64QAM will also be used in 4G systems.
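How an OFDM-based scheme carries data can be sketched in Python with NumPy: 64QAM symbols (6 bits each) are placed on parallel subcarriers, an inverse FFT turns them into a time-domain signal, and a cyclic prefix is prepended. The sketch assumes an ideal channel, 64 subcarriers, a 16-sample prefix and a plain (non-Gray) 64QAM mapping; it is not the exact numerology of any 4G standard, and all names are hypothetical.

```python
import numpy as np

N_SC, CP = 64, 16                                 # assumed subcarrier count and prefix length
LEVELS = np.array([-7, -5, -3, -1, 1, 3, 5, 7])  # 8 amplitude levels per axis -> 64 points

def qam64_map(bits: np.ndarray) -> np.ndarray:
    """Map each group of 6 bits to one 64QAM point (3 bits for I, 3 bits for Q)."""
    b = bits.reshape(-1, 6)
    weights = np.array([4, 2, 1])
    return LEVELS[b[:, :3] @ weights] + 1j * LEVELS[b[:, 3:] @ weights]

def qam64_demap(symbols: np.ndarray) -> np.ndarray:
    """Pick the nearest level on each axis and turn the indices back into bits."""
    i_idx = np.argmin(np.abs(symbols.real[:, None] - LEVELS), axis=1)
    q_idx = np.argmin(np.abs(symbols.imag[:, None] - LEVELS), axis=1)
    bits = [[(i >> k) & 1 for k in (2, 1, 0)] + [(q >> k) & 1 for k in (2, 1, 0)]
            for i, q in zip(i_idx, q_idx)]
    return np.array(bits).ravel()

def ofdm_tx(bits: np.ndarray) -> np.ndarray:
    time = np.fft.ifft(qam64_map(bits))          # one OFDM symbol in the time domain
    return np.concatenate([time[-CP:], time])    # prepend the cyclic prefix

def ofdm_rx(samples: np.ndarray) -> np.ndarray:
    return qam64_demap(np.fft.fft(samples[CP:]))  # drop prefix, back to subcarriers

rng = np.random.default_rng(0)
bits = rng.integers(0, 2, size=N_SC * 6)
rx_bits = ofdm_rx(ofdm_tx(bits))
print("bit errors:", int(np.sum(bits != rx_bits)))
```

In OFDMA, different users would be given different subsets of these subcarriers; over a real channel each subcarrier needs only a single-tap equaliser (omitted here), which is what keeps the receiver simple.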

Unlike 3G, which is built on twin packet-switched network nodes running in parallel with circuit-switched networks, 4G will be built on packet switching alone, with low-latency data transmission. A major issue is that IPv4 addresses are expected to be exhausted by around 2012, when 4G is deployed; because 4G is IPv6-enabled, it can support a very large number of wireless devices. By greatly increasing the number of available IP addresses, IPv6 removes the need for NAT – network address translation, in which a limited pool of IP addresses is shared among many devices. IPv6 also handles many applications better through multicasting and offers improved security and route optimisation. The evolution of antenna systems is also important for 4G, and a new generation of smart or intelligent antennas has been developed.
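The scale of the IPv4 shortage can be checked with Python's standard `ipaddress` module. The example uses the whole-space networks 0.0.0.0/0 and ::/0, plus a private LAN range (192.168.1.0/24, inside the RFC 1918 private blocks) of the kind NAT hides behind a single public address; the LAN is purely illustrative.

```python
import ipaddress

# Size of the whole IPv4 and IPv6 address spaces.
ipv4_space = ipaddress.ip_network("0.0.0.0/0")
ipv6_space = ipaddress.ip_network("::/0")
print(f"IPv4: {ipv4_space.num_addresses:,} addresses")  # 4,294,967,296
print(f"IPv6: {float(ipv6_space.num_addresses):.2e} addresses")
print(f"IPv6 addresses per IPv4 address: 2**{ipv6_space.max_prefixlen - ipv4_space.max_prefixlen}")

# NAT: devices on this hypothetical home LAN get private addresses that are
# not routable on the Internet and share one public IPv4 address via the router.
lan = ipaddress.ip_network("192.168.1.0/24")
print(lan.is_private, lan.num_addresses)  # True 256
```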

These antennas offer higher data rates and reliability as well as long-range communication. One example is spatial multiplexing, in which several antennas are used at both the transmitter and the receiver, so that independent data streams can be sent from all antennas at the same time. Using more antennas also reduces the probability of the signal fading at the sending or receiving end; this is known as receive and transmit diversity. Another type is the closed-loop system with multiple antennas. It is not yet clear what pricing plans will be announced, how many operators will offer this technology or whether it will be commercially viable (David, 2007).
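The diversity effect can be quantified with a short Python calculation. It assumes each antenna branch fades independently with Rayleigh statistics and that the receiver simply uses the strongest branch (selection combining); under those assumptions the chance that every branch fades at once is the single-branch probability raised to the number of branches.

```python
import math

def outage_probability(threshold_db: float, n_branches: int) -> float:
    """P(all branches below threshold), threshold given relative to the mean SNR."""
    x = 10 ** (threshold_db / 10)     # threshold as a fraction of the mean SNR
    p_single = 1 - math.exp(-x)       # Rayleigh fading: SNR is exponentially distributed
    return p_single ** n_branches     # independent branches must all fade together

for n in (1, 2, 4):
    print(n, "antenna(s):", f"{outage_probability(-10, n):.2e}")
```

A fade 10 dB below the mean that hits a single antenna almost 10% of the time drops to under 1% with two antennas and to roughly one in ten thousand with four; correlated antennas would gain less.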

Case Studies of Innovation in mobile communication devices

This section presents a number of case studies that show how mobile phones have been put to innovative use.

Shopping and Events

Exhibitors and sellers in shopping areas and at exhibitions set up a Bluetooth-enabled gateway that sends advertisements and offers to visitors. Customers enable the Bluetooth module on their handheld devices, and the advertiser then sends a Java application to the customer’s handset. Customers can use its menu to browse the offers, take up discounts on products of their choice, have products delivered to their homes and pay online. Users of this approach include shops, restaurants, nightclubs, shopping malls and retail chains, which send menus and shopping offers; outdoor advertising billboards and posters, which send product clips; entertainment and sports events, which send clips of sporting events; and consumer brands, which provide more information about their brands.

Other marketing applications include offers at tourist destinations, where information about local attractions, galleries and heritage sites is sent, and at conferences and trade shows, where information about the events is provided. Market research agencies also send questionnaires that users fill in and return online to receive special discounts and offers (vfconnect, 2008).

Mobile Bar Code Readers

Mobile telephony services are also enabling a new form of marketing in which subscribers in Japan use their mobile phone cameras to scan bar codes printed on product cases, in magazines and on clothes. The phone then connects to the bar code database and retrieves the product details, so the customer gets full information such as price, range and available choices and can order the item from the phone. This is a high-end integration that encourages impulse buying: when a customer sees an appealing product, the bar code printed on it can be used directly to order a similar product (Zande, 2006).

Pocket Portal

Pocket Portal is a new space in the mobile Internet universe, where users connect through Wi-Fi to the portal and interact directly with brands and products. Users first download the application to their mobile phones and handhelds so that they can enter the portal, browse online stores, read content, buy what they want and pay instantly. The concept has been launched in Australia, provides access to 1,200 retail houses and logs 2 million transactions per month (Pocket Portal, 2006).

iPhone

The iPhone introduced by Apple can be regarded as a marketing tool because it combines a phone, an iPod and the Internet, which lets marketing companies target their customers. Customers usually connect to the iTunes Store and download the songs of their choice. Recording companies such as BMG, Sony, Universal, Fox Entertainment and others build a database of the genres each customer downloads, so they know whether a customer likes country, pop, rap, rock, metal or other types, as well as the customer's favorite artists. These companies then provide links to servers holding samples of these songs and merchandise, so that customers can use the Internet feature to visit the site and buy the products. Such phones can also be connected to a computer to download whole films and games to be enjoyed later (iPhone, 2008).

Antitheft Devices

Communication devices have also been used to create antitheft systems that allow a stolen car to be traced. A small radio-frequency transceiver is hidden in the vehicle. Each transceiver is allotted a unique code, which is linked to the vehicle identification number – VIN. Every private, commercial or other vehicle has a unique VIN, assigned by the manufacturer and recorded by the vehicle registration authority, and this number is referred to when the vehicle is stolen or involved in an accident. If the vehicle is stolen, the police computer can trace it using the VIN, and the police can then activate the transceiver hidden in the stolen vehicle.
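Because the tracking system keys everything to the VIN, a database would normally reject mistyped VINs before linking them to a transceiver code. A minimal Python sketch of the standard North American VIN check-digit test (position 9) is shown below; the transliteration values and weights are the published ones, but where such a check sits in a LoJack-style system is an assumption, and the function names are hypothetical.

```python
# Letter values for the VIN check digit (I, O and Q are never used in VINs).
VALUES = {**{str(d): d for d in range(10)},
          "A": 1, "B": 2, "C": 3, "D": 4, "E": 5, "F": 6, "G": 7, "H": 8,
          "J": 1, "K": 2, "L": 3, "M": 4, "N": 5, "P": 7, "R": 9,
          "S": 2, "T": 3, "U": 4, "V": 5, "W": 6, "X": 7, "Y": 8, "Z": 9}
# Position weights; position 9 (the check digit itself) has weight 0.
WEIGHTS = [8, 7, 6, 5, 4, 3, 2, 10, 0, 9, 8, 7, 6, 5, 4, 3, 2]

def check_digit(vin: str) -> str:
    """Weighted sum of all 17 characters, modulo 11; a remainder of 10 is written 'X'."""
    total = sum(VALUES[ch] * w for ch, w in zip(vin.upper(), WEIGHTS))
    remainder = total % 11
    return "X" if remainder == 10 else str(remainder)

def is_valid_vin(vin: str) -> bool:
    vin = vin.upper()
    if len(vin) != 17 or any(ch not in VALUES for ch in vin):
        return False  # wrong length, or contains a character not allowed in VINs
    return vin[8] == check_digit(vin)

print(is_valid_vin("1M8GDM9AXKP042788"))  # True
print(is_valid_vin("1M8GDM9AXKP042789"))  # False
```

The sample VIN passes because its weighted sum leaves a remainder of 10, matching the X in position 9; changing the last character breaks the match. Outside North America the check digit is not always used, so a failed check there does not prove the VIN is wrong.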

The activated device emits a silent radio signal that can be tracked by police computers fitted with LoJack tracking systems, which can be installed in police vehicles and helicopters. The police follow the signal to its source, locate the vehicle and recover it. More than 200,000 stolen vehicles have been recovered to date, and more than 100,00 vehicles have been returned to their owners in the US alone. The system has helped recover more than 4 billion USD worth of vehicles (LoJack, 2009).

Star Technologies offers more advanced devices that combine satellite tracking with an integrated car alarm. The devices contain a GPS receiver that reports the exact location of the vehicle, and when this is combined with a GPRS-enabled GSM modem that transmits the VIN, the speed at which the vehicle is moving can also be determined. The device can be hidden inside the vehicle and connected to the ignition system, door locks and other systems. If the vehicle starts or moves when it should not, as when it has been stolen, the owner can call the monitoring station, which can stop the car and lock the doors, forcing the thief to abandon the vehicle. The company uses third-party satellite systems, which gives it much wider reach (Star, 2008).
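Estimating speed from successive GPS fixes, as a tracking server might after receiving positions over GPRS, can be sketched in Python. The sketch assumes a spherical Earth (mean radius 6,371 km) and great-circle distance via the haversine formula; the coordinates and timestamps are made up, and the function names are hypothetical.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0  # mean radius; spherical-Earth assumption

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def speed_kmh(fix1, fix2) -> float:
    """Average speed between two (timestamp, latitude, longitude) fixes."""
    (t1, lat1, lon1), (t2, lat2, lon2) = fix1, fix2
    hours = (t2 - t1).total_seconds() / 3600
    return haversine_km(lat1, lon1, lat2, lon2) / hours

# Two hypothetical fixes one minute apart, about 1 km apart due north.
a = (datetime(2008, 1, 1, 12, 0, 0), 51.5000, -0.1200)
b = (datetime(2008, 1, 1, 12, 1, 0), 51.5090, -0.1200)
print(f"{speed_kmh(a, b):.1f} km/h")
```

This gives the average speed between fixes; a vehicle that turns or stops in between will travel further than the straight-line distance suggests.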

Development of such applications has increased the practical use of mobile communication devices and the devices can now be used to protect assets and also track fleets and manage them.

Mobile Communication Devices in the Military

New technologies such as GIS – Geographical Information Systems, GPS – Global Positioning Systems and remote sensing systems are increasingly used by defence forces for both war and peaceful purposes. These technologies rely on a range of mobile communication devices that connect users to a network and provide information about the geographic location of a region. Mobile communication devices in the army are vital to national security; they must be very reliable and accurate and must work in hostile weather. These devices are discussed in detail below.

GIS in the Military

A Geographic Information System – GIS is used to create, manage and analyze the geographic and topographical features of an area so that the information can be used when needed. The term covers the mobile communication devices, hardware and software applications that store, analyze, edit and integrate the geographic features of an area. Geographic features include rivers, mountains, plains and so on, and the area may be a small village, a state or even a whole country (DTIC, 2007).

Techniques used for GIS by the army

The army has access to data-gathering methods that are not available to private agencies, including government-owned satellites that produce high-resolution Cartesian images of a region. In digitisation, hard-copy survey maps are fed into computer-aided design software to create raster images of continuous data, such as rivers and mountains, and of discontinuous features, such as houses, buildings and roads. Geographic features can also be traced from an aerial image using a digitising tablet. The resulting image is typically a raster image or a scalable vector image. The images are indexed by keywords and tags and stored in large relational databases so that the information can be used when needed (DTIC, 2007).
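Indexing images by keywords and tags in a relational database can be sketched with Python's built-in `sqlite3` module. The two-table schema, the file names and the tags are hypothetical; a production military GIS would use a much larger database and spatial indexes rather than keyword lookup alone.

```python
import sqlite3

# Hypothetical schema: one row per stored map image, plus keyword tags.
db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE image (
    id   INTEGER PRIMARY KEY,
    path TEXT NOT NULL,
    kind TEXT CHECK (kind IN ('raster', 'vector'))
);
CREATE TABLE tag (
    image_id INTEGER REFERENCES image(id),
    keyword  TEXT NOT NULL
);
CREATE INDEX tag_keyword ON tag(keyword);  -- fast lookup by keyword
""")

# Illustrative catalogue entries (file names and tags are made up).
catalogue = [
    (1, "sector7_elevation.tif", "raster", ["mountain", "river"]),
    (2, "sector7_roads.shp", "vector", ["road", "bridge", "river"]),
    (3, "base_buildings.shp", "vector", ["building"]),
]
for image_id, path, kind, tags in catalogue:
    db.execute("INSERT INTO image VALUES (?, ?, ?)", (image_id, path, kind))
    db.executemany("INSERT INTO tag VALUES (?, ?)", [(image_id, t) for t in tags])

def find(keyword: str) -> list[tuple[str, str]]:
    """Return (path, kind) for every image tagged with the keyword."""
    rows = db.execute(
        "SELECT i.path, i.kind FROM image AS i JOIN tag AS t ON t.image_id = i.id "
        "WHERE t.keyword = ? ORDER BY i.id",
        (keyword,),
    )
    return rows.fetchall()

print(find("river"))
```

Keeping tags in a separate table lets one image carry any number of keywords, and the index on `keyword` keeps lookups fast as the catalogue grows.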

GIS Applications in the Military

The US Army Military District of Washington, Fort McNair, DC has published a detailed paper on how GIS is used by the army (Keys, 2007). According to the authors, the army uses GIS for military installation management and there are a number of tasks that are performed.

Management of Facilities

Planimetric base maps are used as input to create the GIS data, which includes roads, walls, fences, buildings and other features. The data is registered to ground coordinates and then superimposed on aerial photographs. Typical applications are:

- Developing installation master plans: evaluating the infrastructure on an installation and identifying changes that can be made to the master plan.
- Generating and storing 3D images of the facilities.
- Linking floor plans and creating 3D scenarios from present and historical data.
- Integrating information about the location of critical equipment and built-up storage areas.
- Creating an intelligent street map so that personnel can plan their route marches in advance.

By properly managing information about facilities, the army can take control of very large facilities such as oil refineries, nuclear reactors and airports (Keys, 2007).

Training Planning and Management

GIS can be used for effective training of paramilitary forces by creating mock rescue missions in a given area. It can also be used to analyze soil and topography so that new construction plans can be defined; to assess the natural and man-made barriers in a region; to run fire rescue and other exercises; and to analyze terrain to find which vehicles can operate in an area, for example whether the topography will support battle tanks or only light armoured vehicles.

Protecting and securing an area

GIS is very valuable when troops would want to enter an unknown area as GIS would provide details of the topography such as rivers, bridges, natural cover, loose soil and so on. The information can be integrated with the emergency rescue and evacuation teams when natural disasters strike a region and even in the event of war when troops would have to be rushed to an area.

Disaster Management planning

By using GIS data, advanced plans for disaster prevention can be framed and these would help troops to move to predefined areas and locations so that the rescue plans can be more effective.

GPS in the Military

The Global Positioning System – GPS is a radio navigation system developed by the US Department of Defense. It allows users to find their position on land, at sea and in the air in three-dimensional coordinates anywhere in the world, so that people in remote areas can know exactly where they are on Earth. The satellites cover almost the whole world. While GIS is used by experts with extensive equipment, software and hardware, GPS devices are available as small handheld units that an ordinary trooper can use without knowing the intricate details of complex raster imaging algorithms. GPS and GIS are often integrated: GPS tells the soldier his location, and GIS tells him about the topography of the region (PM GPS, 2008).

Techniques used in GPS

The DoD provides access to agencies and equipment manufacturers, who can then build GPS systems. GPS has three segments: space, control and user. The space segment consists of 24 satellites in medium Earth orbit (not geostationary orbit; each satellite circles the Earth about twice a day), arranged so that around six are in view from any point and can relay information to any user in the world. The control segment consists of the master control station at Colorado Springs, supported by five monitoring stations with antennas. It tracks the GPS satellites and receives a steady relay of information from them, so the exact coordinates of each satellite are always known. The user segment, which includes the army, is made up of processors, receivers and antennas that let troops determine their precise location anywhere on Earth and estimate their speed, using the satellites as reference points (Garmin, 2008).
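How the user segment turns signals from several satellites into a position can be sketched in Python with NumPy. A receiver measures a pseudorange to each satellite (the true distance plus an unknown receiver clock error, expressed in metres) and, with at least four satellites, solves for its three coordinates and that clock error. The sketch uses Gauss-Newton least squares, noise-free ranges and made-up satellite and receiver coordinates in an Earth-centred frame; real receivers also correct for satellite clock, ionospheric and other errors.

```python
import numpy as np

def solve_position(sats: np.ndarray, pseudoranges: np.ndarray, iterations: int = 20) -> np.ndarray:
    """Gauss-Newton solution of rho_i = |sat_i - p| + b for p = (x, y, z) and bias b."""
    est = np.zeros(4)                                # start at Earth's centre with zero bias
    for _ in range(iterations):
        diff = est[:3] - sats                        # vectors from each satellite to the estimate
        dist = np.linalg.norm(diff, axis=1)
        residual = pseudoranges - (dist + est[3])    # measured minus predicted
        # Jacobian: unit vectors for x, y, z and a column of ones for the bias.
        jac = np.hstack([diff / dist[:, None], np.ones((len(sats), 1))])
        step, *_ = np.linalg.lstsq(jac, residual, rcond=None)
        est += step
    return est

# Hypothetical satellite positions in metres (Earth-centred frame).
sats = np.array([
    [15600e3, 7540e3, 20140e3],
    [18760e3, 2750e3, 18610e3],
    [17610e3, 14630e3, 13480e3],
    [19170e3, 610e3, 18390e3],
])
true_pos = np.array([-40e3, -10e3, 6370e3])  # a point near the Earth's surface
bias_m = 3000.0                              # receiver clock error expressed in metres
ranges = np.linalg.norm(sats - true_pos, axis=1) + bias_m

est = solve_position(sats, ranges)
print("position error (m):", np.linalg.norm(est[:3] - true_pos))
print("estimated clock bias (m):", est[3])
```

Four unknowns need at least four satellites; with more satellites in view, the same least-squares step averages out measurement errors instead of fitting them exactly.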

Use of GPS in the Army

The army uses GPS widely to aid troop movement in hostile and unknown areas, to carry out search and rescue missions, to target specific facilities and infrastructure for artillery or air attack, and to aid navigation. A route can also be programmed so that troops follow a precise, well-defined path when maneuvering across land, sea and air. Some of the applications of GPS in the army are (GCN, 2008):

Navigation

Handheld GPS systems allow troops to navigate in the dark to predefined areas for reconnaissance and to coordinate the movement of equipment, supplies, ordnance and troops. Troops can be dropped in an area for forward reconnaissance and can then relay their position through GPS to obtain backup support.
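Navigating to a predefined point comes down to knowing how far away it is and which direction to head. The minimal sketch below makes these assumptions:
- The Earth is a sphere with a mean radius of 6,371 km.
- Coordinates are given in decimal degrees.

It computes the great-circle distance with the haversine formula and the initial bearing. Military receivers use more precise ellipsoidal models such as WGS 84, so treat this as an approximation. The function name is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (degrees from true north) from point 1 to point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula for the central angle between the two points
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    # Initial bearing along the great circle, normalised to 0..360 degrees
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360) % 360
    return distance, bearing
```

For example, distance_and_bearing(0.0, 0.0, 0.0, 1.0) gives roughly 111.2 km on a bearing of 90 degrees (due east) along the equator.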

Tracking targets

While operating in urban areas and cities, it is crucial to be able to take out enemy targets such as equipment and buildings. By integrating GPS into precision-guided munitions, it is possible to target gun towers, emplacements and enemy munitions stores, and to carry out air-to-ground missile strikes assisted by gun cameras.

Missile guidance

Munitions such as guided artillery projectiles, ICBMs and cruise missiles can be guided accurately to their targets by feeding the target's coordinates into the weapons system. This allows surgical precision strikes with less collateral damage.

Search and rescue missions

Pilots have GPS-equipped emergency transmitters fitted to their equipment or to the ejection seat. When an aircraft is downed over land or sea, the transmitter sends a steady signal to the receivers, so that a rescue team can be sent to recover the pilot.

Reconnaissance system

GPS is also used to create maps of areas and to support reconnaissance operations.

GPS Jammers

GPS jammers can be used to deny the enemy the use of GPS by blocking out the satellite signals in an area.

Remote Sensing Devices in the Army

Remote sensing is the science of detecting objects, terrain, movement and geographical features from a distance. It works even when visual contact is not possible, such as at night or in bad weather. The army uses a number of applications based on different technologies for remote sensing. These include:
- early warning systems for ICBM launches, whether against the host country or anywhere else in the world;
- detection of atmospheric contamination by chemical and biological warfare agents such as nerve and poison gas;
- precise delivery and guidance of munitions;
- reconnaissance and surveillance detection systems.

Remote sensing is also used for intrusion detection. The technologies used by the army include low-intensity laser beams, photoelectric beams, infrared and thermal devices, and motion detection systems. GIS and GPS systems are also integrated (Hudson, 2005). Some of them are given below (Campbell, 2002):

- Radar: These devices have been in use since World War II and have become increasingly sophisticated. They allow precise plotting of the coordinates of incoming objects and of movement on the ground and over water.

- Laser and radar devices: Special altimetry on satellites allows precise measurement of wind speeds, ocean currents and weather conditions, as well as early hurricane warnings and other such tasks.

- Light Detection And Ranging: Also called LIDAR, these systems are used in weapons for laser-illuminated guidance of projectiles. A laser spot is placed on a target so that the target can be struck very precisely. LIDAR can also be used to measure natural formations and man-made objects.

- Infrared Devices: These are used by the army to guard security perimeters and consist of small infrared cameras mounted on security fences. When any living object approaches the camera, it is detected by its heat, and troops in the area are warned of a possible intrusion.

- Sonar devices: These are used by submarines to detect incoming undersea objects, natural barriers and other objects. Sonar-guided weapons are also available that home in on the source emitting the sound.
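Radar, LIDAR and sonar in the list above share one ranging principle. A pulse is sent out, and the distance to a reflecting object follows from the round-trip time of its echo: R = v·t/2, where v is the propagation speed. Radar and LIDAR use the speed of light. Sonar uses the speed of sound in water, taken here as an approximate 1,500 m/s; the real value varies with temperature, salinity and depth. The sketch is illustrative, and the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0       # radar and LIDAR pulses
SPEED_OF_SOUND_SEAWATER_M_S = 1_500.0    # approximate; varies with temperature, salinity and depth


def echo_range_m(round_trip_s, propagation_speed_m_s):
    """Distance to a reflector from the echo's round-trip time: R = v * t / 2.

    The division by two accounts for the pulse travelling out and back.
    """
    return propagation_speed_m_s * round_trip_s / 2


radar_range = echo_range_m(1e-3, SPEED_OF_LIGHT_M_S)
sonar_range = echo_range_m(2.0, SPEED_OF_SOUND_SEAWATER_M_S)
```

An echo arriving 1 ms after a radar pulse therefore puts the target about 150 km away. A sonar echo after 2 s corresponds to about 1.5 km.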

Livewire Detector Communication Systems in Helicopters

Livewire power lines pose a great threat to aviation safety, particularly to helicopters and other low-flying aircraft. Helicopters are sometimes called ‘widowmakers’ since they are notoriously hard to control, and low-strung electrical wires add to the number of accidents. Livewire detectors are sensing and communication devices that warn the pilot about power lines so that corrective action can be taken. A number of technologies are available for livewire detection. These devices are small and compact and can be fitted in the cockpit (Williams, 2001).

Electromagnetic Radiation Livewire (Powerline) Communication Devices

Power transmission lines generate electromagnetic radiation that radiates outward from the line and can pass through solid objects such as the fuselage of a helicopter. This radiation does not harm people, but it can be detected by electromagnetic radiation detectors. Electromagnetic livewire detectors are mounted inside the cockpit. They have a sensor that picks up electromagnetic radiation from live power lines. Electromagnetic waves consist of coupled electric and magnetic fields and travel at the speed of light through space and even through solid objects. When this radiation strikes the sensor, the device emits audio beeps that warn the pilots of the presence of live wires. The device also has a small LED that flashes on and off to give a visual warning (Williams, 2001).

The frequency of the audio beeps is directly proportional to the strength of the signal, so the beeps become more frequent as the helicopter nears the live wires. No visual sighting is needed, so even at night or in bad weather the pilots can fly safely out of harm's way.

Electromagnetic radiation can be described in two ways, wave form and particle form, and detectors are classified accordingly. Waveform detectors work on the principle that electromagnetic radiation travels as waves with alternating crests and troughs. “Wave frequency is the rate of oscillation and is measured in hertz and this unit specifies the number of oscillations per second”. Wavelength is the distance between two adjacent crests or troughs, and frequency is inversely proportional to wavelength. Particle form detectors treat the radiation as discrete packets of energy. In this picture, the wave frequency is proportional to the energy of each particle (Williams, 2001).
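Two standard relations link the wave and particle descriptions above:
- wavelength λ = c/f, where c is the speed of light;
- photon energy E = h·f, where h is Planck's constant.

The sketch below evaluates both for a 50 Hz power line; mains frequency is 50 or 60 Hz depending on the country. It shows that livewire detectors deal with very long-wavelength, very low-energy radiation. It is a worked calculation, not a detector design.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # exact by SI definition
PLANCK_J_S = 6.62607015e-34          # exact by SI definition since 2019


def wavelength_m(frequency_hz):
    """Wave picture: wavelength is inversely proportional to frequency (lambda = c / f)."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def photon_energy_j(frequency_hz):
    """Particle picture: each photon's energy is proportional to frequency (E = h * f)."""
    return PLANCK_J_S * frequency_hz


power_line_hz = 50.0
print(f"wavelength: {wavelength_m(power_line_hz) / 1000:.0f} km")
print(f"photon energy: {photon_energy_j(power_line_hz):.3e} J")
```

At 50 Hz the wavelength is about 6,000 km, and each photon carries only about 3.3 × 10^-32 J.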

Electromagnetic radiation is quite complex and is classified into bands according to its frequency. The different types are:

- EHF (Extremely high frequency or Microwaves);

- SHF (Super high frequency Microwaves);

- UHF (Ultrahigh frequency);

- VHF (Very high frequency);

- HF (High frequency);

- MF (Medium frequency);

- LF (Low frequency);

- VLF (Very low frequency);

- VF (Voice frequency);

- ELF (Extremely low frequency).
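The list above runs from the highest to the lowest frequency, and each band spans one decade. The minimal sketch below maps a frequency to its band name. It assumes the decade edges used in many communications textbooks:
- ELF: 30 to 300 Hz
- VF: 300 Hz to 3 kHz
- VLF: 3 to 30 kHz, and so on up to EHF at 30 to 300 GHz

The ITU names the bands below 3 kHz differently (ELF, SLF, ULF), so the two lowest rows are a convention assumption. The table and function name are hypothetical.

```python
# Decade bands using the names in the list above (textbook convention assumed).
# Each entry is (lower edge in Hz, inclusive; upper edge in Hz, exclusive; name).
BANDS = [
    (30, 300, "ELF"),
    (300, 3_000, "VF"),
    (3_000, 30_000, "VLF"),
    (30_000, 300_000, "LF"),
    (300_000, 3_000_000, "MF"),
    (3_000_000, 30_000_000, "HF"),
    (30_000_000, 300_000_000, "VHF"),
    (300_000_000, 3_000_000_000, "UHF"),
    (3_000_000_000, 30_000_000_000, "SHF"),
    (30_000_000_000, 300_000_000_000, "EHF"),
]


def band_of(frequency_hz):
    """Return the band name for a frequency, or None if it lies outside the table."""
    for low, high, name in BANDS:
        if low <= frequency_hz < high:
            return name
    return None
```

With these edges, a 50 Hz power line falls in the ELF band and a 600 MHz television signal falls in UHF. This matches the detector choices described in the following paragraph.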

Pilots flying near installations such as TV and radio broadcasting stations need devices that can detect radiation in the VHF and UHF ranges. Pilots who fly in the countryside can manage with ELF and LF detectors.

The detection range is crucial: it specifies the maximum distance at which a power line can be detected. This matters because helicopters can fly at speeds of around 300 kilometres per hour. The range must give the pilots sufficient time to slow down and change course before they run into the wires. Sensitivity is the smallest change in radiation, or the smallest signal, that the device can pick up.
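Simple arithmetic makes the link between detection range, speed and reaction time concrete: warning time = detection range / ground speed. The sketch assumes straight-line flight toward the wire at constant speed. It ignores the distance needed to turn or climb. The speeds and ranges in the example are hypothetical.

```python
def warning_time_s(detection_range_m, speed_kmh):
    """Seconds between first detection and reaching the wire, flying straight at it."""
    speed_m_s = speed_kmh / 3.6  # km/h -> m/s
    return detection_range_m / speed_m_s


def required_range_m(reaction_time_s, speed_kmh):
    """Minimum detection range that still leaves the crew the given reaction time."""
    return reaction_time_s * speed_kmh / 3.6


# Hypothetical example values
print(round(warning_time_s(1_000, 180), 1))
print(round(required_range_m(15, 180), 1))
```

At 180 km/h (50 m/s), a detector with a 1 km range gives the crew about 20 seconds of warning. A crew that needs 15 seconds to react requires a detection range of at least 750 m.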

A highly sensitive device might seem ideal, but it will pick up signals even from street lights and other small electrical devices. It will give rise to so many false alarms that pilots will switch it off. The device should not interfere with the communication systems and the helicopter's electronics while in operation. It should not emit electromagnetic radiation itself and has to be suitably shielded to prevent interference. Mounting technology and size are also important. The last point to consider is cost: more capable systems are more expensive, and a balance needs to be struck between cost and the foreseeable dangers (Williams, 2001).
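The trade-off between sensitivity and false alarms can be sketched as a simple alarm law, together with the beep rate that rises with signal strength described earlier. This is a hypothetical model, not a description of any particular product:
- Signal values are in arbitrary units.
- The threshold stands in for the sensitivity setting.
- The beep rate is capped so that it stays audible as discrete beeps.

```python
def beep_rate_hz(signal, threshold, gain=1.0, max_rate_hz=10.0):
    """Hypothetical alarm law.

    Below the threshold (the sensitivity setting) the device stays silent.
    Above it, the beep rate is proportional to the signal strength,
    capped at max_rate_hz so the beeps remain distinguishable.
    """
    if signal < threshold:
        return 0.0
    return min(max_rate_hz, gain * signal)


def false_alarms(background_readings, threshold):
    """Count background readings (no wire present) that would still trigger the alarm."""
    return sum(1 for reading in background_readings if reading >= threshold)


# Hypothetical background readings from street lights and similar sources
background = [0.2, 0.4, 0.9, 1.1]
for threshold in (0.3, 1.0):
    print(threshold, false_alarms(background, threshold))
```

Lowering the threshold makes the device react to weaker signals. However, the false-alarm count on background readings rises with it, which is the balance described above.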

RFID Livewire Communicating Devices

Radio Frequency Identification (RFID) detectors are increasingly being used. RFID tags are placed around the power lines and emit signals about their position. A pilot with an RFID detection system on board can read these signals and take evasive action. These systems indicate the presence of live wires and also give details such as:
- the location and geographical information;
- the distance and route to the next stop.

RFID detection systems are expensive, so only large organisations, defence forces and valuable cargo carriers can make use of them (Williams, 2001).

Imaging Systems Livewire Communicating Devices

The imaging system is based on geographical information systems (GIS), in which sections of power lines are mapped. These maps are shown on the pilot's monitor as moving maps. The helicopter needs GIS and GPS systems on board, connected to its avionics. As the helicopter approaches a section with power lines, the pilots see a 3D visual rendition and can take appropriate action. These systems are expensive, and a small firm operating a couple of helicopters may find the costs of acquiring and maintaining them prohibitive (Williams, 2001).
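At its core, a warning from a GIS map of power lines means comparing the helicopter's GPS position with the mapped line segments. The minimal sketch below finds the nearest mapped segment. It assumes positions have already been projected into a local flat x/y frame in metres, and it ignores altitude and wire height. All names are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment a-b (all (x, y) tuples in metres)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:  # degenerate segment: a single point
        return math.hypot(px - ax, py - ay)
    # Project p onto the infinite line, then clamp to stay on the segment
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def nearest_power_line(position, segments):
    """Hypothetical helper: return (distance_m, segment) for the closest mapped segment."""
    return min(
        ((point_segment_distance(position, a, b), (a, b)) for a, b in segments),
        key=lambda item: item[0],
    )


mapped_lines = [((0.0, 0.0), (1000.0, 0.0)), ((0.0, 1000.0), (1000.0, 1000.0))]
distance, segment = nearest_power_line((500.0, 300.0), mapped_lines)
```

Take a line mapped from (0, 0) to (1000, 0). A helicopter at (500, 300) is 300 m from that wire. An avionics system would compare this distance with an alert radius.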

Infrared Livewire Communicating Devices

Infrared livewire and obstacle detectors are advanced systems that use thermal imaging to track and identify power lines and other obstacles. They consist of an infrared camera that acquires images of the passing terrain and displays them on an interface for the crew. An onboard computer with specialized software processes each image and matches it to likely objects in its database.

The system uses advanced algorithms such as cellular automata to find the best way to resolve the pixels into a human-readable image. The coordinates of the imaged object are plotted, and its exact dimensions, height and width are available for reference. The helicopter crew can then make an informed decision about the object and decide the best way to circumvent it. The crew can control the image acquisition system and pan the camera to the required orientation. This is a very advanced device. It is used by military services that need to operate deep in enemy territory, flying blind and at very low altitudes to escape radar (Williams, 2001).
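The source does not say which cellular automaton rule is used. The following sketch only illustrates the general idea on a thresholded (black and white) thermal image, where every pixel is updated simultaneously from the states of its eight neighbours. The rule itself is an assumption:
- A set pixel with no set neighbours is treated as noise and cleared.
- An unset pixel surrounded by at least six set neighbours is filled in.

Thin, continuous features such as a wire survive because each of their pixels has set neighbours.

```python
def count_set_neighbours(grid, r, c):
    """Number of set pixels among the 8 neighbours of (r, c); cells outside the image count as 0."""
    rows, cols = len(grid), len(grid[0])
    total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr, dc) == (0, 0):
                continue
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                total += grid[rr][cc]
    return total


def ca_step(grid):
    """One synchronous update of the (assumed) clean-up rule on a 0/1 image."""
    new = [row[:] for row in grid]  # write into a copy so all cells update together
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            n = count_set_neighbours(grid, r, c)
            if value == 1 and n == 0:
                new[r][c] = 0  # isolated speck: treat as noise
            elif value == 0 and n >= 6:
                new[r][c] = 1  # pinhole inside a solid region: fill it
    return new


noisy = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],  # a thin horizontal wire
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1],  # a single noisy pixel
]
cleaned = ca_step(noisy)
```

Applying the step once removes the isolated pixel and leaves the wire intact. A real system would combine such rules with the object matching described above.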

Analysis and Discussion of Findings

The previous sections have presented a number of facts, figures and events that led to the development of mobile communication devices. This chapter analyses and discusses the findings of the previous chapters.

Mobile communication devices have followed the path of all technology innovations. There was a slow start with few subscribers, followed by a long period of development and research in which the product underwent many changes and many new technologies emerged. This period lasted from 1947 until the late 1980s. During this time, the technology was rudimentary and still evolving. Mobile communication devices were bulky and awkward to carry, and only a few hundred customers wanted to use them. The public at large was unsure of these devices. Since they were expensive and people felt no need to be always reachable, the technology did not gain a mass following.

In the early 1990s, cellular technology took off rapidly, with huge investments being made in the technology and in mobile communication devices. The number of subscribers rose suddenly as thousands, and then millions, of customers started using the service. People from all walks of life suddenly realised that they wanted to be reachable at all times. They ranged from housewives to working women, from professionals such as doctors, lawyers and engineers to factory workers and even students. In this era, people needed to speak with their contacts while on the move, and cellular phones became a coveted fashion statement, a sign of having ‘arrived’.

Let us examine these developments in the light of technology diffusion models. According to these models, when a new technology is introduced, a few people want to be the first to buy the product; these are the innovators. We see this phenomenon when a new car model is introduced and somebody is always ready to buy it before anyone else. Such innovators help to publicise the product, but they do not bring about mass use, as others wait to see how the product performs and how useful it is.

Consequently, product development teams try to devise new uses for the product or develop new applications, and more of the people who were waiting take up the product. After some delay, more and more people start using it. Throughout this time, the initial development costs are quite high, and the company has to bear the overheads of development and promotion. Only when mass commercialisation happens does the price of the product fall. It then becomes more affordable, which in turn allows more applications and uses to be developed.
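The diffusion pattern described above, with a few innovators followed by a growing wave of imitators, is often formalised with the Bass diffusion model. It is named here as one standard model, not necessarily the one behind the diffusion models cited in this paper. The model uses two coefficients:
- p, the coefficient of innovation;
- q, the coefficient of imitation.

The cumulative fraction of eventual adopters at time t is F(t) = (1 - e^(-(p+q)t)) / (1 + (q/p)·e^(-(p+q)t)). The values of p and q in the example are illustrative assumptions, not estimates for the mobile phone market.

```python
import math


def bass_cumulative(t, p, q):
    """Bass model: cumulative fraction of eventual adopters who have adopted by time t.

    p is the coefficient of innovation (adoption independent of others),
    q is the coefficient of imitation (adoption driven by earlier adopters).
    """
    decay = math.exp(-(p + q) * t)
    return (1 - decay) / (1 + (q / p) * decay)


def bass_peak_time(p, q):
    """Time at which new adoptions per period peak; positive only when q > p."""
    return math.log(q / p) / (p + q)


p, q = 0.03, 0.38  # illustrative values only
for year in (0, 3, 6, 9, 12, 20):
    print(year, round(bass_cumulative(year, p, q), 2))
print("peak at t =", round(bass_peak_time(p, q), 1))
```

With these values, adoption is slow at first. New adoptions peak after about six periods, when roughly 46% of eventual users have adopted. The curve then levels off into the familiar S-shape.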

This pattern has been repeated with mobile communication devices. In the earlier era, very few people used them, as they did not feel a need for them. Later, new applications were developed, voice clarity improved and the range of cell phones increased. More and more people then started using them, until the mobile phone became an essential item. User needs were aroused both by marketing companies and by the development of new applications. User needs drove the development of new applications, and new applications in turn created further market demand.

The pattern has been repeated with the development of 0G, 1G, 2G and 3G. Each of these technologies brought about paradigm shifts in the way people communicated, sought entertainment, lived and worked. Today, almost everyone is reachable in any part of the country as long as their mobile phone is switched on.

With the introduction of GIS- and GPS-enabled mobile communication devices, the great wilderness became easily navigable. These devices were used by the army and the airlines, for whom the technology had been created. They were also used by ordinary citizens going on adventure treks or trying to find their way around a city. Thus, the technology and devices migrated from their intended area to another, from the defence and transportation industry to civilian life.