Introduction

Accurate prediction of the timing and location of earthquakes is crucial for reducing the hazards of seismic activity and preventing fatalities and injuries. The seismic activity and the relatively regular sequence of earthquakes along the San Andreas Fault prompted geologists to explore the processes within the rupture.

The long-running Parkfield Earthquake Experiment did not succeed in predicting the 2004 Parkfield earthquake, but it became a significant step forward in geological studies and in the development of effective short-term prediction strategies.

Monitoring seismic mechanisms remains difficult at the current level of geological knowledge, and many factors, such as the pressure of fault-zone fluids, influence the state of seismic activity.

The experiment made it possible to implement innovative models and to develop more comprehensive patterns for monitoring seismic events, with the aim of making future predictions accurate enough to prevent tragedies and reduce the devastating effects of such disasters.

Overview of the problem

Despite recent advances in understanding the physics of earthquakes, earthquake prediction data remain uncertain and unreliable. Earthquake forecasting benefits society because it can reduce seismic hazards by allowing appropriate emergency measures to be taken. Collecting geophysical and geological data on earthquakes, their precursors and their consequences is important for developing prediction models and making scientific forecasts more reliable.

The Parkfield Earthquake Prediction Experiment is a long-term research project started in 1985 to explore seismic activity on the San Andreas Fault in California and to provide a scientific basis for earthquake prediction. Moderate earthquakes have been observed in the Parkfield section of the San Andreas Fault at approximately regular intervals: in 1857, 1881, 1901, 1922, 1934 and 1966 (“The Parkfield, California, Earthquake Experiment”).

Drawing on the available data for these six moderate-sized earthquakes, the researchers hypothesized that the next earthquake in this area would occur by 1993. The prediction was not accurate: the anticipated earthquake did not take place until September 2004.

Though the forecast did not come true, the research results were valuable for enhancing understanding of the earthquake processes and contributed to the development of the strategies for increasing the accuracy of the prediction and making the short-term prediction possible.

The main problems with making a definite prediction of the Parkfield earthquake can be explained by the uncertainty of the records dated before 1900, the lack of data on the locations of the earthquakes and the wide range of inter-event intervals (from 12 to 32 years) (Kanamori 1207).
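The spread of the inter-event intervals can be checked directly from the six dates listed above. The sketch below is only an illustration of that arithmetic: the function names are hypothetical, and projecting the next event as "last event plus mean interval" is a deliberately naive assumption, not the method used by the Parkfield researchers.

```python
from statistics import mean

# Years of the six moderate Parkfield earthquakes listed in the text.
EVENT_YEARS = [1857, 1881, 1901, 1922, 1934, 1966]


def inter_event_intervals(years):
    """Return the gaps, in years, between consecutive events."""
    return [later - earlier for earlier, later in zip(years, years[1:])]


def naive_next_event(years):
    """Project the next event as last event + mean interval.

    This is an illustrative assumption only, not the experiment's model.
    """
    return years[-1] + mean(inter_event_intervals(years))


intervals = inter_event_intervals(EVENT_YEARS)
print(intervals)                                 # [24, 20, 21, 12, 32]
print(min(intervals), max(intervals))            # 12 32
print(round(mean(intervals), 1))                 # 21.8
print(round(naive_next_event(EVENT_YEARS), 1))   # 1987.8
print(2004 - EVENT_YEARS[-1])                    # 38 (actual gap to the 2004 event)
```

The naive projection lands before 1993, while the actual gap of 38 years exceeds every interval in the historical record, which illustrates why a simple recurrence model was insufficient.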

The fact that the predicted earthquake did not occur by 1993, contrary to the researchers’ forecast, shows that the seismic processes in the Earth’s crust are complex, that a number of parameters must be considered and that a simple model is insufficient.

Despite the relative progress in understanding the processes, dynamics and patterns of earthquakes, the seismic activity of the crust can be predicted only to a limited extent. The main hurdles for accurate short-term prediction include incomplete knowledge of past earthquakes, on the one hand, and difficulties in measuring the corresponding variables, on the other.

Kanamori differentiated between short-term prediction (periods from hours to months), intermediate-term prediction (up to ten years) and long-term prediction (more than ten years) (1206). The modern era of short-term earthquake prediction dates back to the 1970s, when Chinese officials made the first attempts to reduce seismic hazards on a scientific basis.

The evacuation of the city of Haicheng in northeastern China, prompted by a wide range of observations of possible earthquake precursors, decreased the devastating effects of the seismic activity. When the magnitude 7.3 earthquake struck the region on February 4, 1975, the number of fatalities and injuries was much lower than it could have been.

There were 2,041 fatalities and 27,538 injured, compared with an estimated number of more than 150,000 possible victims of the earthquake (“The Parkfield, California, Earthquake Experiment”).

Still, the earthquake in the industrial city of Tangshan in the following year, which the Chinese scientists did not predict, demonstrated the insufficiency of the existing knowledge and the need for further research in this field. The methodology used to predict the Haicheng earthquake was based mostly on the foreshock sequence, and in other cases this approach can be ineffective.

Though the researchers working on the Parkfield Earthquake Prediction Experiment did not manage to predict the accurate date of the earthquake, the research results were valuable for developing complex models and methodologies for short-term prediction of earthquakes and reducing the hazards of the seismic activity.

The problem, sequence of events

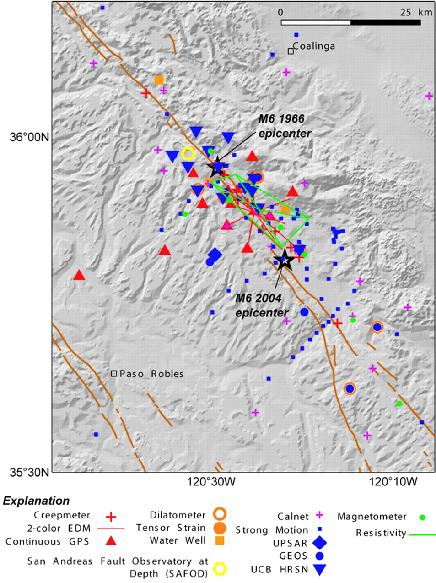

The Parkfield earthquake of 28 September 2004 is recognized as the best-recorded seismic event to date. Within the framework of the long-running Parkfield Earthquake Prediction Experiment, data were collected and stored by geologic, seismic, magnetic and other networks, allowing the researchers to consider a wide range of parameters that were especially important for a more comprehensive understanding of earthquake physics and for developing a complex model for short-term earthquake prediction.

The San Andreas Fault (SAF) has been monitored by the US Geological Survey (USGS) since 1985 (Roeloffs 1226). The major goals of this monitoring include watching the fault behavior in order to predict a moderate earthquake as its culmination and recording the seismic rupture and earthquake effects in general. When the earthquake did not occur before 1993 as initially predicted, the probabilities of an earthquake occurring in the region were reevaluated.

Given the uncertainty about the next Parkfield earthquake and the discouragement caused by the failure of the first predictions, the budget of the project was cut, and officials even questioned the rationale for continuing the experiment. Still, the experiment continued and became a significant contribution to the development of earthquake studies and seismic hazard reduction strategies.

The microearthquakes that usually precede the main earthquake event are defined as foreshocks, and these seismic events have been monitored at Parkfield. These observations became the basis for further work on the experiment, indicating a high probability of a moderate earthquake.

The picture of background seismicity has shown that the ruptures in the fault zone have a significant impact on the SAF’s behavior and largely control it. The fault-zone fluids are another aspect that is crucial for monitoring seismic events in the fault zone. “It has been hypothesized that high fluid pressure in the fault zone is the mechanism that reduces the frictional strength of the fault zone, and that time variations in fluid pressure control the timing of earthquakes” (Roeloffs 1229).

Considering the fact that the seismicity patterns can be dependent upon the pressure in fault-zone fluids, appropriate measurements of the pressure in these fluids have been incorporated in the monitoring plans. The increase in seismicity has been monitored since October 1992 in the area of the 1966 Parkfield earthquake. A wide range of possible pre-earthquake signals have been detected since 1992, including the magnetic field variations and other seismic changes which can be regarded as possible precursors of the earthquake.

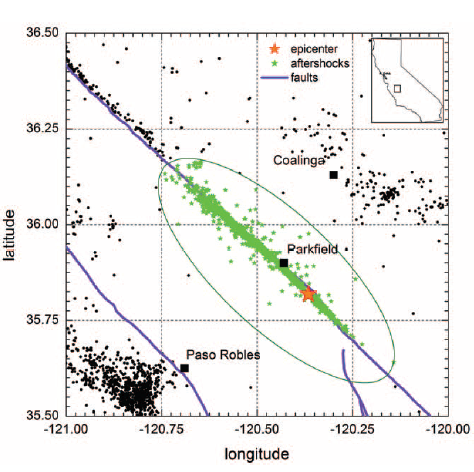

The aftershocks of the earthquake are also important sources of information which can be valuable for enhancing understanding of the physics of the earthquake and developing new strategies for short-term earthquake prediction. The data on the aftershocks of the 2004 Parkfield earthquake is one of the most significant results of the experiment under analysis (Shcherbakov 383).

The information on the recurrence of the aftershocks of the Parkfield main shock can be used as a model for monitoring the unfolding consequences of an earthquake and the changes caused by the increased seismic activity in the region (Figure 1). The fact that the earthquake did not occur by 1993 as initially predicted does not diminish the value of the experiment, and the value of its results for geophysical science and for public affairs should not be underestimated.

The choice of the relatively narrow window for predicting the Parkfield earthquake resulted in the misconception that after the time window closed and prediction did not come true, the experiment failed.

It is significant that the mission of the experiment was not limited to the prediction of a single seismic event but was broadened to the development of the instrumentation pattern and of short-term prediction strategies and procedures in general. “The scientific community views it [Parkfield experiment] not only as a short-term prediction experiment but also as an effort to trap a moderate earthquake within a densely instrumented network” (Mulargia and Geller 306).

The occurrence of the 2004 Parkfield earthquake has shown that the decision to continue the experiment, notwithstanding its seeming failure, was justified.

Causes and impacts of the problem

The Parkfield Earthquake Prediction Experiment and the 2004 Parkfield earthquake have proven that the existing seismic studies and methodologies are insufficient for developing effective short-term prediction strategies and reducing the hazards of seismic events. The main causes of the problems of the experiment were rooted in the lack of knowledge of the past earthquakes and the inconsistencies in the existing methodologies and instrumentation patterns for monitoring the associated phenomena.

The short-term prediction of the 28 September 2004 earthquake was complicated by a number of technical details and by the peculiarities of the M 6 earthquake itself. The time- and slip-predictable models were ineffective for the 2004 Parkfield earthquake, which showed once more that predicting the timing and locations of moderate earthquakes is problematic.

Another significant difficulty is the absence of detectable short-term precursors for M 6 earthquakes. Despite the prolonged observations of the seismic activity and ruptures in the San Andreas fault zone, accurate prediction of the time and location of the main shock was complicated by the segmentation of the fault, which hindered the development of a single seismic model.

The research team had to divide its efforts among numerous possible locations of the main seismic event and was unable to focus on any one of them. The 2004 Parkfield earthquake did not cause any fatalities or injuries only because it occurred in a sparsely populated region (Harris and Arrowsmith 1).

The majority of mechanical models of earthquakes point to the importance of the energy budget and energy release, which play an important role in the development of seismic activity and need to be taken into consideration while monitoring the ruptures. In the San Andreas fault zone, part of the energy was released aseismically, in other words, without creating any seismic waves (Harris and Arrowsmith 3).

This circumstance complicated the monitoring procedures significantly and became one of the hurdles to the successful prediction of the timing and location of the Parkfield earthquake. Transient slip is regarded as another factor affecting the probability of an earthquake in a particular area because it can be consistent with the reduction of stress in zones of increased seismic activity.

Thus, the nucleation of the 2004 Parkfield earthquake in the area of Gold Hill can be explained by the slips and the reduction of stress in the zones of the 1934 and 1966 earthquakes (Murray and Segall 12). This shows that monitoring the areas of past earthquakes and their aftershocks can be beneficial for more accurate prediction of future seismic events.

As to the consequences of the 2004 Parkfield M6 earthquake, the observations of the displacements of the surface in the following two years play an important role in the whole experiment. The monitoring of the processes in the state of the Central California lithosphere is crucial for establishing the cause-and-effect relations between the events and considering the afterslip as an important part of the postseismic mechanism.

The observations show that the afterslip is widely spread along the Parkfield rupture, with some concentration in the area of the earthquake epicenter (Freed 5). These postseismic changes were so small that they could not change the behaviour of the postseismic mechanism, while the distribution of the afterslip has not altered in the course of time and is not characterized by seismic activity.

Remedial action that was taken to reduce the problem

The organization of the Parkfield Earthquake Prediction Experiment was a preventative measure imposed for predicting and reducing the seismic hazards and exploring the earthquake processes for contributing to geological knowledge.

Though the researchers working on the Parkfield Earthquake Prediction Experiment did not manage to predict the accurate time and location of the seismic event, the results of the study were valuable for the development of the geological instrumentation patterns and exploration models for developing the short-term earthquake prediction strategies in future.

The decision to continue the research of the behavior of the Parkfield rupture even after the time window closed in 1993 and the initial forecast did not come true was beneficial and allowed watching the events which preceded and followed the 2004 Earthquake.

Observing the seismic events with various instrumentation patterns and considering the additional factors that have an indirect impact on seismic activity was important for watching the earthquake in its development, analyzing its mechanism and evaluating the possible hazards of the main shock as well as the long-term consequences for the lithosphere of central California in general.

Along with the segmentation of the Parkfield rupture, the difficulties with monitoring the foreshock events preceding the M6 earthquake became a significant hurdle for making an accurate short-term prediction.

Despite all the criticism of the Parkfield Earthquake Prediction Experiment, its results cannot be regarded as a failure. The lack of accuracy in predicting the time and location of the main seismic event was determined by the current level of geological and geophysical knowledge, the lack of data on the previous earthquakes in the area and the inability to make some of the measurements needed to account for the wide range of factors that influence the mechanism of the seismic event.

Considering the difficulties with conducting some of the measurements, the laboratory experiments were also included into the program of the Parkfield Earthquake Prediction Experiment.

For example, transient magnetic pulsation experiments were incorporated into the program, and the pattern of pulsations was valuable for predicting the size of the earthquake (Bleier 1975). Along with the correlation of magnetic pulsations, the sequences of air conductivity changes and infrared anomalies are defined as pre-earthquake signals.

Though this model did not aid the researchers in making the accurate short-term prediction, these observations and the results of the experiment became a valuable contribution to the development of seismologic science and the development of the strategies for making more accurate forecasts. It is significant that the experiment was not finished after the mainshock event occurred in 2004, allowing the researchers to monitor the post-earthquake events and observe the seismicity in the area of San Andreas Fault.

A new three-dimensional model was used for measuring the compressional wavespeed of the post-earthquake events, with the aim of making further forecasts and enhancing the accuracy of predictions by drawing on the collected data, given the public significance of accurate prediction and of appropriate preventative measures (Thurber 49).

The results of the Parkfield Earthquake Prediction Experiment were valuable for monitoring the state of the lithosphere of central California in general and the processes in the San Andreas Fault in particular. Though the research team did not manage to make an accurate prediction of the location and timing of the 2004 Parkfield earthquake, the study became a significant step forward on the way to short-term earthquake prediction.

Conclusion

The researchers working on the Parkfield Earthquake Prediction Experiment made a significant contribution to the development of the seismic studies. The prolonged experiment allowed not only collecting valuable data on the seismic patterns in San Andreas Fault but also implementing the innovative models for monitoring the earthquakes, enhancing understanding of the physics of the seismic events and developing the effective short-term prediction strategies.

The failure of the initial prediction of the researchers, according to which the earthquake was expected before 1993, could be regarded as a blessing in disguise because it drew the public attention to the numerous factors which can complicate the successful implementation of the prediction strategy.

Works Cited

Bleier, Thomas et al. “Correlation of Pre-Earthquake Electromagnetic Signals with Laboratory and Field Rock Experiments”. Natural Hazards and Earth System Sciences 20 August 2010: 1965-1975. Print.

Freed, Andrew. “Afterslip (and Only Afterslip) Following the 2004 Parkfield, California, Earthquake”. Geophysical Research Letters March 2007: 1-5. Web.

Harris, Ruth and Ramon Arrowsmith. “Introduction to the Special Issue on the 2004 Parkfield Earthquake Prediction Experiment”. Bulletin of the Seismological Society of America Sept. 2006: S1-S10. Print.

Kanamori, Hiroo. “Earthquake Prediction: An Overview”. International Handbook of Earthquake and Engineering Seismology 2003: 1205-1216. Print.

Mulargia, Francesco and Robert Geller (eds.) Earthquake Science and Seismic Risk Reduction. Norwell: Kluwer Academic Publishers, 2003. Print.

Murray, Jessica and Paul Segall. “Spatiotemporal Evolution of a Transient Slip Event on the San Andreas Fault near Parkfield, California”. Journal of Geophysical Research Sept. 2005: 1-12. Web.

Roeloffs, Evelyn. “The Parkfield, California Earthquake Experiment: An Update in 2000”. Current Science 10 Nov. 2000: 1226-1236. Print.

Shcherbakov, Robert, Donald Turcotte and John Rundle. “Scaling Properties of the Parkfield Aftershock Sequence”. Bulletin of the Seismological Society of America Sept. 2006: S376-S384.

Thurber, Clifford et al. “Three-Dimensional Compressional Wavespeed Model, Earthquake Relocations, and Focal Mechanisms for the Parkfield, California Region”. Bulletin of the Seismological Society of America Sept. 2006: S38-S49. Print.

“The Parkfield, California, Earthquake Experiment”. USGS: Science for a Changing World Website. Web.

Figure 1 (Adapted from Shcherbakov S377)

The spatial distribution of aftershock events which occurred after the 2004 Parkfield earthquake.

Figure 2 (Adapted from Harris and Arrowsmith 4)

The instrumentation which was used for monitoring the Parkfield area during the experiment.