Abstract

Memory management is one of the primary responsibilities of the operating system (OS). The OS carries out this role through its memory manager, a software component that resides in the kernel, working together with the memory management unit (MMU), the hardware that translates addresses. The OS manages two categories of memory: primary and secondary.

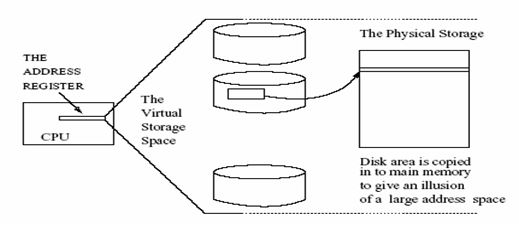

Primary memory holds the data and programs needed for program execution, while secondary memory is non-volatile and provides long-term storage of data and programs. Main memory, however, is limited. To compensate, the operating system provides virtual memory, which lets programs run as though they had a much larger memory space than is physically available.

Virtual memory provides programs with a large addressable space to support multiprogramming: the processor operates with the illusion that it is accessing a large addressable storage space. The OS must therefore control the available physical memory efficiently and effectively to optimize system performance.

To achieve these memory management objectives, the OS assumes a supervisory role through the memory manager, as discussed in this paper. The paper starts by discussing primary and secondary memory and the memory management unit (MMU), and culminates with a discussion of memory allocation policies, relocation, paging, segmentation, and other strategies that allow memory to be used optimally.

Introduction

One of the most important functions of the operating system is to ensure that the computer’s memory is effectively managed and controlled so that the computing system performs optimally. Primary and secondary memory are the two types of memory managed by the OS. Primary memory is the random access memory (RAM), which holds the volatile data and programs that are required for programs to run in the CPU.

On the other hand, secondary memory (the hard disk) is non-volatile memory chiefly meant to provide long-term storage of data and programs. Memory management spans hardware, the operating system, virtual memory, and application memory. The OS manages memory with the help of a memory management unit (MMU). The MMU maps virtual addresses to physical addresses, since running programs deal only with logical addresses.

Virtual addresses belong to virtual memory, a concept that provides programs with a large address space to support multiprogramming. The processor operates with the illusion that it is accessing a large addressable space; the space is not real, and the OS sustains the illusion. The CPU generates virtual addresses, which the MMU translates into physical addresses.

Notably, logical and physical addresses are the same when addresses are bound at compile time or load time, but they differ when binding takes place at execution time. All of these responsibilities are carried out by the OS through the memory manager. To ensure fair allocation and good system throughput, the OS exploits the concepts of paging, relocation, and segmentation.

Allocating and de-allocating memory creates holes, leading to internal and external fragmentation. Both kinds of fragmentation can be reduced by a number of memory allocation strategies that optimize memory usage.

All these memory management functions are carried out by the OS through its memory manager, an integral software module of the kernel, with the MMU performing address translation in hardware. This paper discusses memory management as one of the responsibilities of the operating system and the role the operating system plays in carrying it out.

Review of literature

One of the functions of the operating system is to ensure that the computer’s memory or storage space is efficiently and effectively controlled by the system’s memory manager, supported by the memory management unit (MMU). The computer recognizes two categories of memory. Primary memory, also referred to as random access memory (RAM), holds the data and other program elements that programs need in order to execute in the CPU.

It is the type of memory that the CPU deals with directly. On the other hand, secondary memory (the hard disk) is non-volatile memory chiefly meant to provide long-term storage of data and programs. The memory manager, an integral component of the OS, is responsible for allocating the available memory to programs and de-allocating it again (Bhat, 1).

The rationale for memory management is that modern operating systems support multiprogramming, in which a number of executable programs reside in main memory so that they can be accessed whenever they are required. Several programs that are executing, or waiting to execute, in the CPU therefore share the address space available in main memory (Bhat, 1).

The MMU translates the virtual addresses generated by the CPU into physical addresses. Processes, on the other hand, recognize and deal only with logical addresses.

Logical and physical addresses are the same under compile-time and load-time binding but differ under execution-time binding (Tanenbaum & Woodhull, 4).

The operating system is responsible for assigning memory to a process and for ensuring that the assigned memory space is effectively managed. It therefore ensures that all running programs or processes are assigned sufficient memory to run. If main memory is insufficient, the OS takes responsibility for creating another type of memory, referred to as virtual memory, and assigning processes to it (Tanenbaum & Woodhull, 3).

Processes are prepared for execution by first being placed in main memory before they start to run in the CPU, and that placement can be done dynamically. It is important to note, however, that the OS ensures that no program resident in main memory gets in the way of another program. That protection is enforced by the operating system with the help of the MMU (Understanding Operating Systems, 5).

The memory manager ensures that processes which want to run in the CPU are allocated the available primary memory space. It also ensures that processes are moved between primary memory and secondary memory in a way that makes optimum use of memory space.

Secondary memory, on the other hand, stores executable process images, program scripts, and the data files that running programs need. In addition, secondary memory stores system programs and applications (Operating Systems – Memory Management, 3).

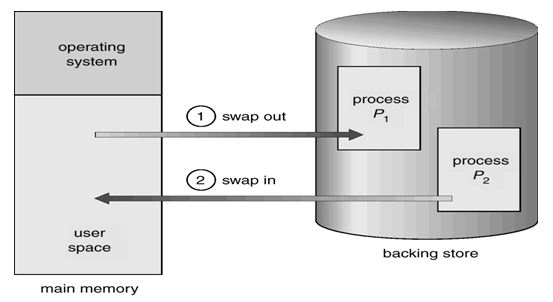

The movement of processes between primary and secondary memory is referred to as swapping. During program execution, processes may need to be swapped out temporarily to create storage space for other programs. Two strategies are used to swap processes: the backing store strategy and the roll out, roll in strategy.

“The backing store strategy is based on the principle that the disk’s storage space should be very large and capable of accommodating every copy of the user’s image” (Bhat, 1). In the view of Silberschatz and Gagne, an advantage of that strategy is that it provides efficient access to a user’s image (4).

On the other hand, the roll out, roll in strategy uses priority-based algorithms to decide which processes are given the CPU. Higher priority processes are loaded into memory to run in the CPU, while lower priority processes are swapped out of memory to make room for them. Swap time is the time taken to move a program into or out of main memory. Fig. 1 demonstrates process swapping.
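The roll out, roll in decision can be sketched in a few lines of Python. This is only an illustration under stated assumptions: memory is modelled as a fixed number of process slots, a larger number means a higher priority, and the function name `roll_in` and the process names are hypothetical.

```python
def roll_in(resident, slots, name, priority):
    """Try to bring process `name` into memory.

    `resident` maps process name -> priority (larger = higher priority).
    Returns the name of the process left on the backing store, or None
    if a free slot was available.
    """
    if len(resident) < slots:
        resident[name] = priority
        return None
    # Find the lowest-priority process currently in memory.
    victim = min(resident, key=resident.get)
    if resident[victim] >= priority:
        return name                 # newcomer waits on the backing store
    del resident[victim]            # roll out the lower-priority process
    resident[name] = priority       # roll in the higher-priority process
    return victim


resident = {"editor": 2, "backup": 1}
print(roll_in(resident, 2, "compiler", 3))  # backup
print(resident)                             # {'editor': 2, 'compiler': 3}
```

A real swapper would also account for process size and swap time; the sketch only shows the priority comparison.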

The above illustration shows two processes being swapped into and out of memory by the memory manager. The operating system issues and coordinates the commands that carry out each swap.

Swapping may leave holes in main memory. Each process that is swapped out leaves a hole behind, so the number of holes grows with the number of processes that have been swapped. These holes can be filled by moving in new programs. However, repeated swapping of processes in and out of main memory is bound to create many small holes into which new processes cannot fit.

Main memory is then fragmented and needs to be defragmented. One strategy for removing these holes is memory compaction. Here, the OS moves the resident processes so that they are arranged contiguously, which merges the holes into one free region large enough to be allocated to new processes (Robinson, 4).
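Compaction can be illustrated with a minimal Python sketch. The representation of memory as a list of `(name, start, size)` tuples and the function name `compact` are assumptions made for the example, not part of any cited source.

```python
def compact(blocks):
    """Slide allocated blocks toward address 0 so all holes merge into one.

    `blocks` is a list of (name, start, size) tuples for allocated regions.
    Returns the new layout and the address where the single free region begins.
    """
    compacted = []
    next_free = 0
    for name, _start, size in sorted(blocks, key=lambda b: b[1]):
        compacted.append((name, next_free, size))
        next_free += size
    return compacted, next_free


memory_size = 100
allocated = [("A", 0, 20), ("B", 30, 10), ("C", 60, 25)]
layout, first_free = compact(allocated)
print(layout)                    # [('A', 0, 20), ('B', 20, 10), ('C', 30, 25)]
print(memory_size - first_free)  # 45
```

Before compaction the 45 free units are split into holes of 10, 20, and 15 units; afterwards they form one region that can hold a 45-unit process.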

New processes can be allocated memory using a number of memory allocation algorithms. One of these is the first fit policy, in which an incoming process is assigned the first hole found that is large enough to accommodate it. In spirit, this resembles the first come, first served allocation strategy.

However, first come, first served is a process management strategy, while first fit is a memory management technique. In this allocation scheme, a process is assigned an index that reflects its position in a queue, and the index determines which process is allocated memory space next (Maiorano & Marco).
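A minimal sketch of first fit, assuming the holes are kept as a list of `[start, size]` pairs in address order (the list layout and the function names are hypothetical):

```python
def first_fit(holes, request):
    """Return the index of the first hole large enough for `request`, or None."""
    for i, (_start, size) in enumerate(holes):
        if size >= request:
            return i
    return None


def allocate(holes, index, request):
    """Carve `request` units from the front of holes[index]; return the start address."""
    start, size = holes[index]
    if size == request:
        holes.pop(index)                              # hole is used up exactly
    else:
        holes[index] = [start + request, size - request]
    return start


holes = [[0, 10], [30, 40], [90, 25]]
i = first_fit(holes, 20)
address = allocate(holes, i, 20)
print(address)  # 30
print(holes)    # [[0, 10], [50, 20], [90, 25]]
```

The scan stops at the second hole even though the third would leave a smaller remainder, which is how first fit trades tightness of fit for a shorter search.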

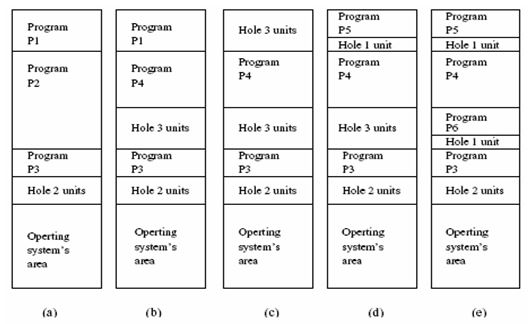

The holes are kept in a serial data structure, and memory is scanned from first to last to find the next hole that can accommodate an incoming process. A lot of time can therefore be spent scanning the available memory. A typical scanning and allocation mechanism is illustrated in fig. 2.

One benefit of the first fit policy is its ease of use and generally good performance (Pierre, 3).

However, the first fit policy has demerits. It has been established that the policy creates very many holes during the swapping process, and that these holes are small and not useful for future allocations. That fragments memory and creates very high garbage collection demands, impairing the performance of the system (Silberschatz & Gagne, 5).

Research shows that a typical approach to solving the problem is to scan the whole of memory to obtain the positions and sizes of the holes. Once the holes and their positions have been identified, a round robin algorithm is used to allocate processes to specific slots.

However, scanning memory is time consuming, which calls for another memory allocation strategy to optimize system time and efficiency. The use of fixed and variable partitions provides an option for overcoming the above problems (Simsek, 6).

Partitioning

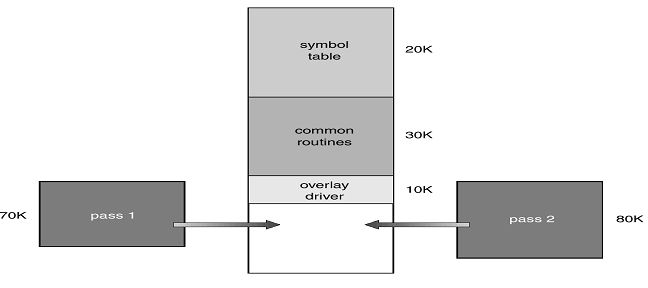

In a fixed memory partitioning strategy, the OS divides memory into partitions of equal, fixed size. Resident programs are then assigned to these partitions, a strategy that the OS finds easy to implement. However, a dilemma arises when a program that needs memory is bigger than the available fixed partition.

A typical solution to that problem is the use of memory overlays (Silberschatz & Gagne, 3). Overlays allow parts of a program and its data to be moved into and out of the same region of main memory as they are needed, so that a program larger than its partition can still run. Overlays are illustrated in fig. 3.

However, fixed partitions bring the danger of internal fragmentation, because a program smaller than its partition leaves the remainder of the partition unused. That calls for the variable partitioning strategy, in which memory is apportioned to processes according to their memory demands, so that a best fit policy can be applied effectively. It is also possible to organize a specific queue for each program. Internal fragmentation is minimized, although the policy can be time consuming because programs may wait in a queue to be allocated memory space (Pierre, 4).
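The best fit choice mentioned above can be sketched as follows. The hole list of `(start, size)` tuples and the name `best_fit` are assumptions made for the illustration.

```python
def best_fit(holes, request):
    """Return the index of the smallest hole that still fits `request`, or None."""
    fitting = [(size, i) for i, (_start, size) in enumerate(holes) if size >= request]
    return min(fitting)[1] if fitting else None


holes = [(0, 10), (30, 40), (90, 25)]
i = best_fit(holes, 20)
start, size = holes[i]
print(i, size - 20)  # 2 5
```

For a 20-unit request the 25-unit hole is chosen, leaving a 5-unit remainder, whereas taking the first hole that is large enough (40 units) would leave 20. The price is that every hole has to be examined before a decision is made.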

On the other hand, both variable and fixed memory allocation strategies are disadvantaged by external fragmentation. External fragmentation is a situation in which free memory is scattered across partitions that hold no resident process and are individually too small to satisfy a request. A number of suggestions have been put forward to address internal and external fragmentation. One such solution is a dynamic memory partitioning strategy.

In that strategy, the size of memory required is determined and allocated dynamically, at run time. However, this memory allocation scheme is difficult to implement. To overcome that difficulty, the buddy system of memory partitioning has been suggested.

Buddy Memory Partitioning

The strategy relies on the idea that memory can be allocated efficiently in block sizes that are powers of 2. Two cases arise when a process requests memory. In the first, a hole exists whose size is the smallest power of 2 that fits the request, and the process is allocated that hole. In the second, no such hole exists, so a hole of the next larger power of 2 is found and split into two halves.

These two halves are referred to as buddies. The buddy system therefore works with holes drawn from a limited set of sizes. External fragmentation is kept low, although some internal fragmentation is typical of the system because every request is rounded up to a power of 2. The system also has a demerit: splitting holes on allocation, and merging them again later, adds to the time taken by the allocation strategy.
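The splitting step can be made concrete with a small Python sketch. It is deliberately incomplete: it only allocates (merging freed buddies is omitted), it assumes the total memory size is itself a power of 2, and the class and method names are hypothetical.

```python
def round_up_pow2(n):
    """Smallest power of 2 that is >= n (for n >= 1)."""
    size = 1
    while size < n:
        size *= 2
    return size


class BuddyAllocator:
    def __init__(self, total_size):
        self.total = total_size            # assumed to be a power of 2
        self.free = {total_size: [0]}      # block size -> free start addresses

    def alloc(self, request):
        """Return (start, block_size) or None if no block is available."""
        size = round_up_pow2(request)
        candidate = size
        # Look for the smallest free block that is at least `size`.
        while candidate <= self.total and not self.free.get(candidate):
            candidate *= 2
        if candidate > self.total:
            return None
        start = self.free[candidate].pop()
        # Split repeatedly; the upper half of each split becomes a free buddy.
        while candidate > size:
            candidate //= 2
            self.free.setdefault(candidate, []).append(start + candidate)
        return start, size


buddy = BuddyAllocator(64)
print(buddy.alloc(10))  # (0, 16)
print(buddy.alloc(10))  # (16, 16)
print(buddy.alloc(30))  # (32, 32)
```

The first request for 10 units receives a 16-unit block, so 6 units are lost to internal fragmentation; that rounding is the cost of keeping every block a power of 2.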

Memory Partitions and Virtual Memory

For processes to run efficiently, main memory would ideally be contiguous and unlimited. In reality that is not possible, so the concept of virtual storage is embraced. The CPU generates logical addresses over a large address space, but the number of physical memory locations is limited compared with the logical addresses that the CPU can generate. The OS supports virtual memory by keeping process images on disk and copying into main memory the portions that are needed (Simsek, 3).

Virtual memory comes with a range of benefits as a memory management strategy. It provides a large addressable space and provides residence for a large number of processes. A closer look at how main memory can host several programs at once helps to crystallize that benefit.

When a program runs in the CPU, it works at any given moment with only a small set of its instructions. The same is true of the data used by the program. A running program therefore refers only to the instructions and data currently in use, a behavior known as locality of reference.

That means that only the essential portions of a program need to be resident in main memory, allowing several programs to occupy main memory at the same time. In other words, a small amount of physical memory can service several resident programs.

It also implies that memory should be handed out in small chunks so that several resident programs can run. This demand makes paging and segmentation very important concepts in memory management, and both are discussed next.

Paging

Paging is a scheme in which a program’s pages are allocated physical memory as it becomes available. In a typical Windows operating system environment, a page directory and page tables are created for each running program. The physical address of a page table is entered into the page directory during the creation of a process (Bhat, 22).

Page table entries can be either valid or invalid. Each valid entry holds the physical address of a page that is allocated to the running program. At this point, it is important to remember that processes do not identify physical addresses but only know logical addresses.

Therefore, as discussed elsewhere, it is the responsibility of the MMU and the processor to map logical addresses to physical addresses. In the Windows operating system, the physical address of a process’s page directory is held in a CPU register referred to as CR3 (Bhat, 22).

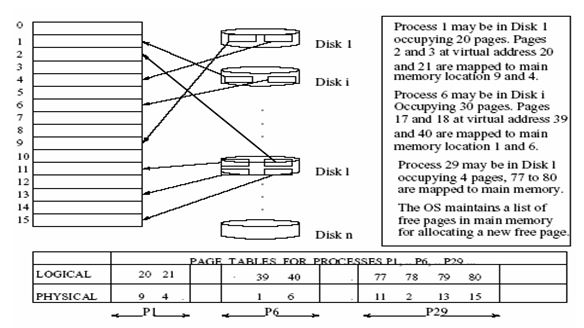

Pages are created by dividing logical memory into fixed-size blocks, while physical memory is partitioned into fixed-size chunks of the same size known as frames. Page tables are then set up to translate logical addresses to physical addresses, as discussed above. The operating system keeps track of frames as they are used during program execution. A program made up of a given number of pages therefore needs the same number of frames to be fully loaded.
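The translation from a logical address to a physical address can be sketched as follows. The 4096-byte page size, the single-level page table stored as a dictionary, and the function name `translate` are assumptions chosen for the example; real hardware performs this lookup in the MMU.

```python
PAGE_SIZE = 4096  # assumed page and frame size in bytes


def translate(logical_address, page_table):
    """Map a logical address to a physical address via a page -> frame table."""
    page, offset = divmod(logical_address, PAGE_SIZE)
    frame = page_table.get(page)
    if frame is None:
        # No valid entry: the page is not resident in main memory.
        raise LookupError(f"page fault on page {page}")
    return frame * PAGE_SIZE + offset


page_table = {0: 5, 1: 2}           # page number -> frame number
print(translate(4100, page_table))  # 8196
```

Logical address 4100 lies in page 1 at offset 4; page 1 is held in frame 2, so the physical address is 2 × 4096 + 4 = 8196. The offset is carried over unchanged because pages and frames have the same size.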

Virtual memory is partitioned into pages that are equal in size to page frames, which allows the OS to move any page from disk into any free page frame in physical memory. That flexibility of fetching and mapping pages to page frames is illustrated in fig. 4.

As fig. 4 illustrates, paging requires the support of both the OS and the hardware. A number of pages have been created and made available in main memory. The pages of a process that are in main memory are referred to as its resident set, which is chosen with locality of reference in mind.

The set of pages that a running program currently requires is referred to as its working set. Ideally the resident set contains the working set, although that requirement is not always fulfilled, and it is the duty of the OS to enforce it. When a program references a page that is not resident, a page fault occurs (Callitrope, 3). The OS then identifies the required page, fetches it, and loads it into a free page frame.
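The relationship between the working set and the resident set can be sketched as follows. The sketch uses the common definition of the working set as the pages referenced in the most recent `window` references; that window parameter, the sample reference string, and the function name are assumptions, not taken from the cited sources.

```python
def working_set(references, t, window):
    """Pages referenced in the last `window` references up to and including time t."""
    start = max(0, t - window + 1)
    return set(references[start:t + 1])


references = [1, 2, 1, 3, 4, 4, 3, 4]   # page numbers in order of use
needed = working_set(references, t=7, window=4)
resident_set = {1, 4}

print(needed)                 # {3, 4}
print(needed - resident_set)  # {3}
```

Page 3 is in the working set but not in the resident set, so the next reference to it would cause a page fault.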

The OS then makes an entry for the page in the designated page table. For optimal use of the CPU and main memory, the operating system also swaps processes into and out of memory, deleting the page table entries of the programs that are swapped out. The link between the OS and the memory manager is important here. Sometimes the OS forces pages out of memory to allow other pages to be loaded.

That situation arises when all the page frames in memory are in use but another process needs a page frame. To choose the page to evict, the OS uses a page replacement policy, which is typically based on the way a process uses its page frames. The OS therefore keeps a record of how pages are read and written.

Once a page has been read from or written to, its referenced bit is set; when it has been written to, its modified bit is set as well. These bits tell the OS which pages have been used and which must be written back to disk before their frames are reused, so that the page replacement policy can choose a suitable page to move. That helps to maintain system throughput and good system efficiency (Bhat, 22).

A page replacement policy allows the OS to swap pages out of memory following a specific algorithm. These policies include FIFO, LRU, and NFU. The FIFO (first in, first out) policy replaces pages in order of their arrival time. LRU (least recently used) replaces the page whose last use lies furthest in the past. NFU (not frequently used) replaces the page with the lowest usage count (Callitrope, 3).
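FIFO and LRU can be compared by counting page faults on the same reference string. The sketch below is a simulation under simple assumptions: a fixed number of frames, a hypothetical reference string, and no distinction between reads and writes.

```python
from collections import OrderedDict, deque


def fifo_faults(references, frames):
    """Count page faults when the page that arrived first is evicted."""
    resident = deque()
    faults = 0
    for page in references:
        if page not in resident:
            faults += 1
            if len(resident) == frames:
                resident.popleft()          # evict the oldest arrival
            resident.append(page)
    return faults


def lru_faults(references, frames):
    """Count page faults when the least recently used page is evicted."""
    resident = OrderedDict()                # ordered from least to most recent use
    faults = 0
    for page in references:
        if page in resident:
            resident.move_to_end(page)      # a hit refreshes recency
        else:
            faults += 1
            if len(resident) == frames:
                resident.popitem(last=False)
            resident[page] = True
    return faults


references = [1, 2, 3, 1, 4, 1]
print(fifo_faults(references, 3))  # 5
print(lru_faults(references, 3))   # 4
```

On this string FIFO evicts page 1 just before it is needed again, while LRU keeps it because it was used recently. Other reference strings can favor FIFO, so the numbers illustrate the mechanism rather than a general ranking.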

In a typical Windows operating system environment, the frame size is usually given as 1024 bytes, with other page frames ranging up to 4 KB. Paging is a very important concept in multiprogramming, because it supports an environment in which several resident programs execute in the CPU at the same time (Callitrope, 3).

Relocation

Research has indicated that some programs use dynamic data structures, which means that they allocate and de-allocate memory as they run. The space left by data structures that a program has discarded is referred to as garbage. The operating system does not collect this space for reuse immediately, because it has been demonstrated that doing so could adversely impair its performance.

The OS therefore allows garbage to accumulate to a certain level before demanding compaction, so as not to impair the performance of the system (Bhat, 1). If compaction does not occur, however, memory may become so fragmented that none is left to allocate to programs that want to run in the CPU (Callitrope, 3).

In a multiprogramming environment, several programs reside in main memory and compete for the CPU. Conflicts are therefore likely to occur because of programming errors: a faulty program may write data into the memory space, or even the instruction area, of another program.

That has the potential of corrupting a program. However, it is the duty of the operating system to provide a protection mechanism in which no program is allowed to interfere with another program’s instruction area. One of the methods used to achieve process protection is through process isolation (Callitrope, 3).

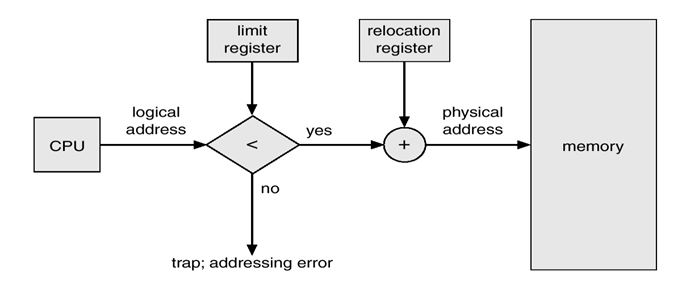

Process isolation is a strategy that prevents processes from writing into the spaces of other processes, and it is achieved through virtual address spaces. A virtual address space gives each program an adequate range of addresses of its own, even though other programs may use the same address values. The OS assigns these addresses to resident user programs, and the MMU translates them into physical addresses as required. The virtual address concept is illustrated in fig. 5.

The virtual memory management scheme provides another strategy used by the operating system to manage the memory required by programs that want to run in the CPU. Virtual memory is a concept in which programs see a large contiguous memory without caring whether the actual physical memory is equally large.

Therefore, there is a critical need for virtual addresses to be mapped into physical addresses by the MMU. That enables a running process to access the required data and other requirements to run in the CPU. That typically demands that processes be relocated when required to achieve the objective of protection and efficient memory usage. One of the memory management techniques is relocation (Bhat, 2).

Relocation is a vital concept in memory management. One way to understand it in detail is to consider memory as a linear map in which the contents of a given address can be located and fetched (Bhat, 2).

A program can then be loaded into memory at an absolute address, pointing to its instructions and data, wherever main memory is free (Loepere, 5). However, under this strategy no more than one process can be held in main memory at a time. Another disadvantage of this approach is lack of flexibility: one process must be removed from main memory before another is loaded.

That impairs the efficiency of the system and creates several holes that need to be removed. The problem is aggravated when a process is loaded back into memory: the hole it was moved from may no longer be available, which creates a relocation problem. The relocation mechanism uses the single partition allocation strategy to protect programs from interfering with each other.

“The relocation register is characterized by values that describe the smallest physical addresses and a limit register that contains a number of logical addresses” (Bhat, 2). It is worth noting that every logical address must be smaller than the value in the limit register (Bhat, 2). The relocation strategy that is supported by the system hardware is illustrated in fig. 6.
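The check performed with the relocation and limit registers can be sketched in Python. The register values and the function name `relocate` are hypothetical; in a real system the comparison and the addition are done by the hardware on every memory access.

```python
def relocate(logical_address, relocation_register, limit_register):
    """Translate a logical address using relocation (base) and limit registers."""
    # Protection check: the logical address must fall inside the process's range.
    if not 0 <= logical_address < limit_register:
        raise MemoryError("addressing error: address outside the process's partition")
    # Relocation: add the smallest physical address of the partition.
    return relocation_register + logical_address


print(relocate(346, relocation_register=14000, limit_register=3000))  # 14346
```

A logical address of 3000 or more would be rejected before it could reach another program's memory, which is how the pair of registers provides both relocation and protection.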

To understand the relocation process, it is important to examine in detail how a typical process is linked and loaded, which is the responsibility of the operating system. Dynamic loading occurs at run time and comes with various benefits: unused routines are never loaded, memory is used efficiently when several processes are running, and minimal support from the OS is required.

Dynamic linking, on the other hand, is performed at run time: small pieces of code called stubs locate the library routines that are resident in memory, which makes efficient use of memory and the CPU. A number of strategies are used to ensure efficient utilization of space and memory, as discussed below. One such approach is segmentation (Bhat, 4).

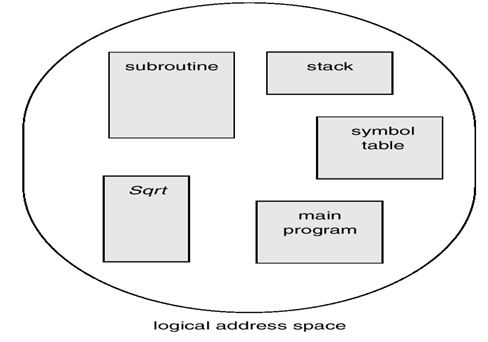

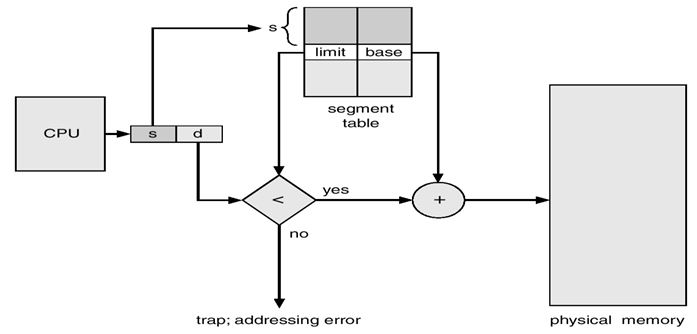

Segmentation

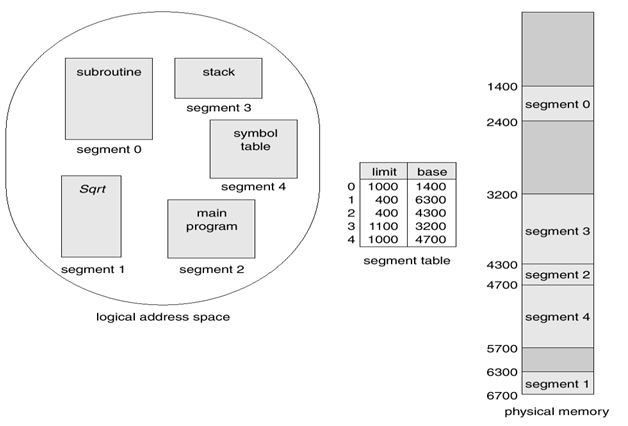

Another memory management concept is segmentation (Bhat, 22). Segmentation is one of the elements that support the concept of virtual memory. It supports the user’s view of a running program, which is illustrated in figs. 7, 8, and 9.

A segment may be an object, a method, a stack, or a procedure, among other elements. A logical address within a segmented program consists of a segment number and an offset. It is important to note that the characteristics of a segment vary with time; a stack segment, for example, grows and shrinks with the function calls that are active at any given time.

Segmentation is implemented much like paging, except that segment tables are looked up to obtain the base address and length of each segment (Bhat, 22). Segmentation suffers from external fragmentation, although it has the advantage of allowing separate program compilation.
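A segment table lookup can be sketched in the same spirit as paging. The table contents, with one `(base, limit)` pair per segment, and the function name are assumptions made for the example.

```python
# Hypothetical segment table: segment number -> (base address, segment length)
segment_table = {
    0: (1400, 1000),
    1: (6300, 400),
    2: (4300, 400),
}


def translate_segment(segment, offset, table):
    """Map a (segment number, offset) logical address to a physical address."""
    if segment not in table:
        raise LookupError(f"no such segment: {segment}")
    base, limit = table[segment]
    # Unlike pages, segments vary in length, so the offset is checked per segment.
    if not 0 <= offset < limit:
        raise MemoryError(f"offset {offset} is outside segment {segment}")
    return base + offset


print(translate_segment(2, 53, segment_table))  # 4353
```

Because each segment has its own length, segments occupy variable-sized regions of memory, which is why segmentation is exposed to external fragmentation in a way that fixed-size pages are not.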

Therefore, it is important to combine paging and segmentation to optimize system performance and security. In addition to that, segmentation with paging reduces the search time of a program and the problem of external fragmentation (Bhat, 12).

However, the CPU may be underutilized when a process devotes more time to swapping pages in and out of memory than to executing. That condition is referred to as thrashing. Thrashing leads to the underutilization of the CPU because of paging overheads, which reduces overall system throughput (Bhat, 22).

Point of view

It is important to note, from the foregoing discussion, that memory management is one of the critical functions of the operating system. Memory has to be managed efficiently to ensure that it is used efficiently. The operating system fulfills this objective through an integral software component, the memory manager, which relies on the memory management unit (MMU) for address translation. That implies that the OS delegates some of its memory management responsibilities while supervising how they are carried out.

It is arguable that the OS will always report any error that may arise from the allocation and de-allocation of main memory. The memory manager is one of the kernel components of the OS. The operating system divides memory into two classes: primary memory and secondary memory. Primary memory is concerned with the storage of volatile data and programs, while secondary memory provides long-term data and program storage.

Programs wanting to execute in the CPU have to be moved into and out of main memory through a mechanism known as swapping. Each process that is swapped out leaves a hole behind, so the number of holes grows with the number of processes that have been moved. These holes can be filled by swapping other programs into memory. However, the movement of processes in and out of main memory is bound to create many small holes into which new processes cannot fit.

This compromises system efficiency. To enhance system throughput, memory can be compacted, or a number of placement algorithms can be used to limit the fragmentation problem. When programs run, system efficiency can also be optimized by the use of virtual memory.

Virtual memory is an important concept in memory management because programs that need a large memory space in a multiprogramming environment may be limited by the physically available memory. To overcome that limit, the OS creates virtual memory, which allows programs to run on limited physical memory while appearing to them as a large contiguous memory. Virtual memory thus provides a large addressable space that accommodates a large number of resident processes, and in that way it supports multiprogramming.

Conclusion

In conclusion therefore, it is evident that memory management is one of the critical responsibilities of the operating system. The OS divides memory into primary and secondary memory and ensures that policies are put in place to effectively manage and control these memory types. Typically, primary memory is volatile as it holds the data and programs needed for processes to execute in the CPU while secondary memory provides long term data and program storage.

To manage programs and data in primary memory efficiently, the operating system assigns the responsibility to the memory manager, which works with the memory management unit (MMU). The OS assumes a supervisory role and ensures that programs and data are assigned to memory and moved out of it during program execution through the memory manager, an integral component of the OS.

The memory manager resides in the OS’s kernel. When programs want to run in the CPU, processes have to be swapped in and out of main memory. The swapping process creates holes that can impair the system’s throughput, because swapping may cause internal or external fragmentation of main memory. To enhance system throughput and minimize the effects of fragmentation, compaction and several memory placement strategies are used.

These include the first fit policy and the best fit policy. In the first fit policy, the OS allocates a process to the first available hole that is able to accommodate it. The allocation mechanism uses a process’s index to determine its position in the queue. However, the first fit policy can easily lead to the creation of many holes, impairing the efficiency of the OS. To overcome that weakness, the OS uses the best fit policy.

In the best fit policy, main memory is first scanned for all the holes that have been created, and the hole that best fits the process’s memory requirements is assigned. One of the algorithms used to assign processes to memory holes is the round robin algorithm. These two allocation methods have been identified to be very efficient. However, for the OS to allocate memory chunks to running programs efficiently, it uses fixed or variable partitioning strategies.

These partitioning strategies have their merits and demerits which are also overcome by the use of the buddy partitioning strategy. On the other hand, memory management cannot be complete if virtual memory is not considered. Virtual memory supports multiprogramming by allowing several resident programs to run at the same time.

Works Cited

Bhat, P. C. P. Operating Systems/Memory management. Bangalore. Lecture Notes, 2004. Web.

Breecher, J. Operating systems memory management. 2011. Web.

Callitrope, P. T. Withington, Memory Management. 2011. Web.

Loepere, K. Mach 3 Kernel Principles. Open Software Foundation and Carnegie Mellon University, 1992. Web.

Maiorano, A., Paul Di M., Memory Management. Web.

Operating Systems – Memory Management. ECE 344 Operating Systems. Web.

Pierre, J. An Introduction to Intel Memory Management. Web.

Robinson, T. Memory Management 1. 2002. Web.

Simsek, B. Memory Management for System Programmers. 2005. Web.

Silberschatz, Galvin & Gagne. Operating System Concepts with Java, Chapter 9, Memory Management. 2003. Web.

Tanenbaum, Andrew S., and Albert S. Woodhull. Operating Systems: Design and Implementation. Prentice Hall, 2006. Web.

Understanding Operating Systems. Memory Management, Early systems. Web.