Introduction

Digital imaging holds many benefits for the photographer; however, many commercial photographers and amateurs remain unhappy with the image quality of digital cameras compared with film cameras of comparable price. There are also concerns about the rapidly changing computer market and about finding compatible hardware and software to keep digital images from being lost in the technical divide. Even so, the boom in available digital cameras and their immediate integration with desktop computers and other devices has been revolutionizing the photography industry, and going digital offers many advantages over more traditional methods. Although the first digital cameras could not match the picture quality of film cameras, cameras have since been developed that provide acceptable image quality at a comparable cost. In addition, digital cameras offer the convenience of needing no film and of storing many more pictures than the traditional 24- or 36-exposure roll of film.

The ability to preview the photo on site plus the ability to instantly download pictures into a desktop computer and send them out via email around the world makes digital photography very attractive to the average camera user. However, ever-changing camera formats also make it difficult for the average layperson to determine which digital cameras will provide adequate images for the given cost even when they are willing to pay more for the camera upfront for the trade-off of less expenditure later. Digital images have proven difficult to authenticate and remain difficult to project at high resolutions. Finally, digital cameras, because of their computerized parts, tend to be more delicate, having less tolerance to extreme heat, cold, and moisture than traditional film cameras.

History of Color Theory

Our conception of color is actually somewhat short of the truth. We see a red apple on the counter and assume it is red. However, if we watch the apple over the course of several hours, we might notice that the red of the apple changes in hue as the sun passes overhead from morning to night and the light coming into the house shifts. Sometimes it is an almost violet apple and other times it is almost yellow-orange, the only difference being in the quality of the light that is falling on it. As dusk falls, the apple becomes gray, eventually appearing black if no one remembers to switch on the electric light. This color change is due to the way in which the human eye perceives color, which is actually a quality of the light as it enters our eye after being reflected from the surface of the apple.

The process of seeing and perceiving color is, therefore, both internal and external. The light from the sun must first pass through the filter of our environment (the window, the curtains or blinds, the pollution in our environment, etc.), all of which begin to absorb differing wavelengths, which represent color bands to us. As the remaining light falls on the apple, the apple absorbs most of them but rejects some. These rejected wavelengths become reflected light. In the case of our apple, most of the wavelengths are absorbed with the exception of those that lie on the red end of the spectrum. These reflected wavelengths then bounce off of the apple and into the human eye that is watching it. The eye identifies these wavelengths and sends a message to the brain that indicates the apple is a particular shade of red at this moment. How the eye functions to identify these wavelengths and how the brain interprets the message being received is generally similar in all human eyes, but there is a wide scope for variation from one human to another so that even if two people are watching the same apple from the same viewpoint at the same time (an utter impossibility), they would probably still discern two different shades of red. “The most technically accurate definition of color is:

‘Color is the visual effect that is caused by the spectral composition of the light emitted, transmitted, or reflected by objects’” (Morton, 2006).

The Mind’s Eye

The human eye interprets color through the use of the photoreceptor cones that are located within the retina, a space on the back of the eye similar to a cinema screen that collects and interprets the incoming light waves. Although scientists still aren’t sure how these cones work, they do know that they “contain light-sensitive pigments called iodopsins, but their nature and function are still matters of conjecture” (Zelanski & Fisher, 2003, p. 23). The strongest theory to date is the Trichromatic Theory, proposed by Thomas Young in 1801 and developed further by Hermann von Helmholtz. This theory proposes that the cone has three general kinds of pigments – “one for sensing the long (red range) wavelengths, one for the middle (green range) wavelengths, and one for the short (blue-violet range) wavelengths” (Zelanski & Fisher, 2003, p. 23).

The response levels within the eye are then mixed by the brain to form a color impression. In an attempt to explain situations in which direct light is not the stimulant, such as in cases of afterimages, the Opponent Theory has been developed. This theory suggests that, as part of a process that has not yet been completely identified, “some response mechanisms … register either red or green signals, or either blue-violet or yellow. In each pair (red/green, blue-violet/yellow) only one kind of signal can be carried at a time, while the other is inhibited” (Zelanski & Fisher, 2003, p. 24). Oddly enough, these pairs correspond to the complementary colors on color wheels.

The idea of the Color Wheel

Johann Wolfgang von Goethe built upon the idea of the color wheel in the early 1800s, taking a more psychological approach and working to determine why artists chose particular colors in their representations. Because he was approaching color from a different perspective, he felt an entirely different color model was necessary. For this, he developed his color triangle, in which the three primary colors of blue, yellow, and red were placed at the three corners of an equilateral triangle. The secondary colors, those formed by mixing two of the primaries, formed secondary triangles between the corners, and the tertiary colors filled in the gaps. “Goethe’s original proposal was ‘to marvel at color’s occurrences and meanings, to admire and, if possible, to uncover color’s secrets.’ To Goethe, it was most important to understand human reaction to color, and his research marks the beginning of modern color psychology. He believed that his triangle was a diagram of the human mind and he linked each color with certain emotions” (Blumberg, 2005).

Philipp Otto Runge took this concept another giant step forward with the introduction of shades and highlights in his creation of the color sphere. Conducting his research during roughly the same period as Goethe, Runge took his explorations into the third dimension by placing the color wheel at the equator of a sphere, allowing each color to blend to white at one pole and black at the other (Vogel, 2006).

The Color Wheel

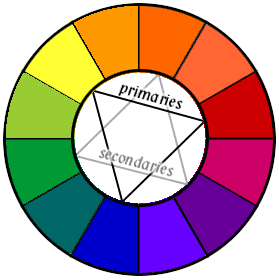

As a building block of how color is seen and represented, the color wheel is of prime importance to our understanding. Basically, it consists of a range of usually 12 colors placed around a circle and typically refers to the colors that can be achieved in paint rather than light.

The three primary colors (red, yellow, and blue) are placed equidistant apart on the wheel. These are called primary colors because they are not mixed with other colors but must come directly from their source. They are broken apart by the secondary colors, which are created when any two of the primary colors are mixed. For instance, when blue and red are mixed, you achieve violet, when blue and yellow are mixed, you achieve green and when red and yellow are mixed, orange appears. The rest of the circle is filled in with tertiary colors, which are those colors that are achieved by mixing a primary color and a secondary color. These include red-orange, yellow-orange, yellow-green, blue-green, blue-violet, and red-violet.

Warm and Cool

These colors are often further divided into warm and cool colors, based upon the mood they are perceived to portray. Warm colors are generally considered to be those lying between red and yellow-green as one progresses through the orange side of the wheel. Cool colors include the red violets through greens on the blue side of the wheel. Warm colors tend to jump forward and assert themselves in a design while cool colors tend to recede in our perception. This is partly because of the way in which we see the world, where atmospheric effects can make things very far away take on a bluish hue. Because we’re accustomed to blue indicating the very far away, we are pre-conditioned to see these colors as further back in the image presented. However, depending upon the shade used, greens and red violets can also be considered warm, so designers have come to treat them as relatively neutral colors that can be used to complement many different color schemes. “The combination of warm and cool colors generally intensifies the relative temperature of each” (“The Color Wheel”, 2006).

In addition to distance perspectives, the use of cool or warm colors can establish a mood. “Cool colors tend to have a calming effect. At one end of the spectrum, they are cold, impersonal, antiseptic colors. At the other end, the cool colors are comforting and nurturing. Warm colors convey emotions from simple optimism to strong violence. The warmth of red, yellow, pink or orange can create excitement or even anger” (Bear, 2006). Colors that fall between the two extremes, the red violets or the greens, can be used to both calm and excite.

Traditional Photography

Traditional cameras work to capture the same colors we see by controlling the amount of light allowed to strike a light-sensitive film hidden inside. The camera is a light-proof housing for this film with various means of controlling the light that enters it, exposing only a small segment of film at a time. A small shutter controls the length of time that the film is exposed, while a tiny hole, the aperture, determines the amount of light allowed to enter at once (Hedgecoe, 1991).

As in human vision, the entire process begins with reflected light as it bounces off objects within the camera’s field of view and enters the lens. Although a lens is little more than a piece of glass designed to focus light on a specific point, this initial contact with the camera offers the photographer many possible effects. The focal length of the lens is defined as “the distance from the lens to the point of focus when the lens is focused on infinity” (Wills, 2006). A normal lens will provide a normal-looking picture in that all the objects seen within it will be presented approximately as they were seen with the eye. According to Wills, ‘normal’ for 35 mm film is typically around 50 mm. Depth of field increases with a wide-angle lens while sacrificing some focus and distorting the perspective somewhat. “Objects close to the camera will look much larger and closer than they really are and objects far from the camera will look much smaller and farther away than normal” (Wills, 2006). A telephoto lens narrows the field of vision and throws off perspective through compression, giving it the least depth of field of all the options.

Digital Color Theory

Subtractive/Additive

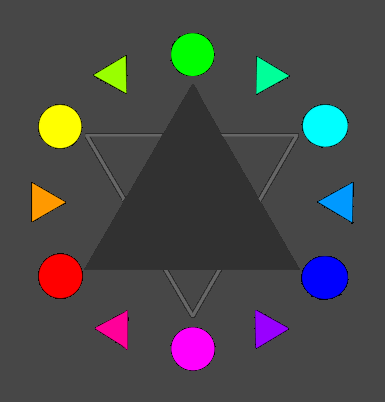

Because a great deal of the digital world displays its information through the medium of light, the color spectrum involved and color models used are different than those used for traditional photography or pigment. When referring to the pigment color schemes, one typically uses the term ‘subtractive’ because the colors are created by adding pigments to a surface that will function to absorb light rays hitting it, effectively removing wavelengths from the image. When referring to color produced by light directly, one uses the term ‘additive’ because light must be added in order to produce the white of a blank page (Kassa, 2006). Therefore, the three primary colors of light are different from those used for pigment. In light, the primaries are red, green, and blue. Therefore, this color model is referred to as the RGB color mode. When all three of these colored lights are allowed to overlap, they produce white, making white the lone tertiary color. Like the subtractive color wheel, though, the additive color wheel has secondary colors, which in this case are yellow, cyan, and magenta.
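
The additive mixing described above can be sketched in a few lines of Python. This is only an illustration, assuming each light is an 8-bit (red, green, blue) triple and that overlapping lights add channel by channel; the function name `mix_light` is hypothetical and not taken from any of the cited sources.

```python
# Minimal sketch of additive color mixing with 8-bit RGB triples.
# Assumption: overlapping lights sum per channel and saturate at 255.

def mix_light(*colors):
    """Return the light produced when the given RGB lights overlap."""
    return tuple(min(255, sum(color[i] for color in colors)) for i in range(3))

RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)

yellow = mix_light(RED, GREEN)        # red + green light
cyan = mix_light(GREEN, BLUE)         # green + blue light
magenta = mix_light(RED, BLUE)        # red + blue light
white = mix_light(RED, GREEN, BLUE)   # all three primaries together

print(yellow, cyan, magenta, white)
```

Adding light can only raise a channel's value, which is why the combination of all three primaries ends at white rather than black.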

Complementary/Analogous

The ideas regarding color usage, such as complementary and analogous color schemes, remain as valid when dealing with light as they are when dealing with pigment. “The complementary color for a primary color in this scheme is the secondary color that does not use the primary color as a part of its make-up. For example, the complementary color for red would be cyan, which is made up of overlapping blue and green lights and therefore does not contain red” (Gabriel-Petit, 2006). Likewise, the complementary color for a secondary color is the primary that does not include the primaries that make up the original secondary color (cyan’s complement is red). Intermediate or tertiary colors also exist by combining equal portions of adjacent primary and secondary colors, just like what was seen in the subtractive model. “The colors created are light chartreuse, orange, hot pink, bright violet, azure blue, and chrysoprase green” (Gabriel-Petit, 2006).
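
As a rough illustration of these relationships, the sketch below computes a complement by inverting each 8-bit channel and an intermediate color by averaging two neighbors. Both helper names (`complement`, `midpoint`) and the 0 to 255 channel range are assumptions made for the example, not part of the quoted source.

```python
# Sketch: complements and intermediate colors in the additive (RGB) model.
# Assumption: colors are 8-bit (R, G, B) triples.

def complement(rgb):
    """Invert every channel, leaving only the light the color lacks."""
    return tuple(255 - channel for channel in rgb)

def midpoint(a, b):
    """Combine equal portions of two colors (integer average per channel)."""
    return tuple((x + y) // 2 for x, y in zip(a, b))

RED, CYAN, YELLOW = (255, 0, 0), (0, 255, 255), (255, 255, 0)

print(complement(RED))        # the secondary that contains no red
print(complement(CYAN))       # back to the primary
print(midpoint(RED, YELLOW))  # an orange between red and yellow
```

Inverting twice returns the original color, which matches the pairing of each primary with exactly one secondary.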

Digital devices that display images with light operate in the RGB color mode. This is most apparent in video monitors such as the computer monitor or the television, but the mode is also employed in the display screen of a digital camera and is the format in which the captured image is stored. Images are displayed on these surfaces by thousands of tiny dots embedded in the screen. Like the cones in the retina of the human eye, these dots are keyed to red, green, or blue, although they emit light rather than receive it. When electronically activated, the dots light up, combining with surrounding dots to create the desired color effect by the time the human eye processes the light coming from the screen (Kassa, 2006).

Photosites

Although the outside of a digital camera may look quite similar to the outside of a film camera, and many are now capable of very detailed manual settings, the inner workings of the camera are vastly different. To begin with, rather than using film, the digital camera uses a specialized silicon chip, often referred to as the sensor, to detect the light entering through the lens aperture and shutter. Unlike film, whose entire surface is light-sensitive and therefore able to pick up even the smallest details and transfer them to paper, the sensor in a digital camera contains tiny light-sensitive spots called photosites, which do not necessarily cover the entire surface of the sensor and can therefore lose some information in the fine details (Hogan, 2004). These photosites are extremely important to the proper function of the camera as “a photosite essentially converts the energy from a light wave into photoelectrons. The longer a photosite is exposed to light, the more photoelectrons it accumulates” (Hogan, 2004).

Also unlike film, the digital sensor registers only how much light strikes each photosite, in effect seeing everything in black and white. To produce color images, the camera uses an array of specialized color filters over the photosites to limit the wavelengths of light each one sees. These color arrays are based on either the subtractive or the additive model, depending upon the maker of the camera. The analog data thus created moves to the edge of the sensor and is processed by a converter and then by another processor that works to average out the RGB values of each individual pixel captured. However, all of this work is done internally with little to no interference from the user. When it’s complete, the image is ready to be transferred to the computer or onboard display for review.
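
To make the filtering and averaging step more concrete, here is a deliberately simplified sketch. It assumes an additive filter array laid out in repeating 2x2 tiles of red, green, green, blue (a common arrangement, but an assumption here, since the text does not name a layout) and collapses each tile into one RGB pixel. Real cameras interpolate far more carefully; the function name `tiles_to_rgb` and the sample readings are hypothetical.

```python
# Sketch: turning filtered photosite readings into RGB pixels.
# Assumption: the filter array repeats this 2x2 tile:
#     R G
#     G B
# Each tile becomes one pixel; the two green readings are averaged.

def tiles_to_rgb(mosaic):
    """Collapse each 2x2 R/G/G/B tile of intensity readings into (R, G, B)."""
    rows, cols = len(mosaic), len(mosaic[0])
    image = []
    for y in range(0, rows - 1, 2):
        row = []
        for x in range(0, cols - 1, 2):
            red = mosaic[y][x]
            green = (mosaic[y][x + 1] + mosaic[y + 1][x]) // 2
            blue = mosaic[y + 1][x + 1]
            row.append((red, green, blue))
        image.append(row)
    return image

# Made-up intensity readings for a sensor two photosites tall and four wide.
mosaic = [
    [200, 100, 50, 80],
    [120, 30, 60, 240],
]
print(tiles_to_rgb(mosaic))
```

Each photosite contributes only one intensity value, so the color of a pixel always has to be assembled from several neighboring readings.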

Hardware

One way of improving the quality of prints made from digital cameras and processed through digital technology is by configuring all of the hardware to be used to its most advantageous setting. In most situations, this would include the digital camera as much as possible, the monitor to be used while editing the photos and the printer to be used in producing the final print, whether that is a home printer for personal use or an offsite printer for commercial use. Unfortunately, much of this customization is difficult or impossible for the end-user to manipulate.

Cameras

Digital cameras, for example, have pre-installed color filters, based on either an RGB or a CMYG (cyan, magenta, yellow and green) model, that determine how color is recorded by the sensor and cannot be changed. In addition, certain properties of how these cameras work mean a higher degree of image breakdown in warmer temperatures, as electrons excited by the heat begin to bleed over into other photoreceptors, adding ‘noise’ to the image. To help reduce this so-called dark current, many newer cameras actually take two photos on longer exposures, one with the sensor exposed to light and one without. Comparing the two lets the camera discern the noise pattern present in the photo taken without light and mask it out of the one taken with light. If this is not done automatically on the digital camera in question, it can be achieved manually by taking the picture with light, then snapping on the lens cover and taking a second picture to be used later during the photo editing process.
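
The two-exposure technique described above can be sketched as a per-photosite subtraction. This is a minimal illustration, assuming both frames are equally sized grids of intensity readings; the function name `subtract_dark_frame` and the sample values are made up for the example, and actual cameras and editing programs may process the frames differently.

```python
# Sketch: masking dark-current noise with a second, unexposed frame.
# Assumption: both frames are same-sized 2D lists of intensity readings.

def subtract_dark_frame(light, dark):
    """Subtract the dark-frame reading from each photosite, never below 0."""
    return [
        [max(0, lit - noise) for lit, noise in zip(light_row, dark_row)]
        for light_row, dark_row in zip(light, dark)
    ]

light_frame = [[120, 45], [200, 10]]   # exposure taken with light
dark_frame = [[5, 40], [0, 12]]        # same settings, lens cover on

print(subtract_dark_frame(light_frame, dark_frame))
```

Clamping at zero keeps a photosite from going negative when its dark reading happens to exceed its lit reading.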

Monitors

Monitors are slightly more amenable to adjustment than digital camera displays simply because their function is geared to display exclusively. “Most professional photographers and creative types understand the importance of monitor calibration. The process helps make sure you can trust what you see on your screen so your editing decisions are based on the right information” (Turner, 2006). Even when correctly calibrated to work with the rest of your system, the monitor can be misleading as the ambient light available in the room can offset the colors being seen. “Experts agree that the most effective workspace for critical color work using computers is a specially designed, darkened room, in which the overall illumination is lower than the light emitted by the computer monitor” (Wedding, 2001). At the least, it is recommended that the monitor be placed in an area of subdued light.

Printers

The printer is the final piece of hardware involved in transferring the photo from the camera through the computer to the printed page. “Calibrating a monitor is necessary for screen displays, which in turn replicate what is printed on paper. Calibrating a printer makes it certain that a print job is coherent with what is reflected onscreen” (Pinkerton, 2006). For most people, the visual calibration process is the most cost-effective and provides adequate results. “The basic visual calibration process entails the use of test images with an extensive variety of tonal values. Tonal values comprise a number of color bars, photographs, and blocks of colors. They can be visually corrected with onscreen and print colors. Preferably, one should print a test image, then contrast and modify the grayscale and color outputs in the controls a printer offers” (Pinkerton, 2006).

Software applications

Photoshop/Paint

In addition to the various hardware applications that can be utilized to increase the quality of digital prints, there are several software packages available that are specifically designed to increase the editing capability of the individual artist and to encourage the various parts to play nice with each other. These image editing software packages work to provide the necessary bridge between the camera and the printer, adding several benefits to the photographer in the form of image adjustment as well as in the ability to manipulate images in various and sometimes drastic ways. Two of these programs are Adobe Photoshop and JASC’s Paint Shop Pro.

Features shared by these two programs include the ability to adjust an image’s brightness, contrast, and color balance. This offers a significant benefit over the traditional camera in that the artist has complete control over the picture even after it has left the camera, as opposed to the limited control provided over film as it is being developed in an offsite photo processing center. Often, once the film has left the camera, the artist no longer has any control, even in their own darkroom, to alter the image once it has been printed and found lacking in some way. With these programs, the image can be adjusted many times over, offering different results every time if desired and providing plenty of room for experimentation with different effects and settings.
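
A brightness and contrast adjustment of this kind can be approximated with simple arithmetic on each channel. The sketch below is an assumption-laden illustration, not the algorithm used by Adobe Photoshop or Paint Shop Pro: it treats contrast as scaling around mid-gray (128) and brightness as a constant offset, and the names `clamp` and `adjust` are hypothetical.

```python
# Sketch: a simple brightness/contrast adjustment on one 8-bit RGB pixel.
# Assumption: contrast scales each channel around mid-gray (128) and
# brightness adds a constant; commercial editors may work differently.

def clamp(value):
    """Round to an integer and keep it inside the 0..255 range."""
    return max(0, min(255, int(round(value))))

def adjust(pixel, brightness=0, contrast=1.0):
    """Return the pixel after applying contrast, then brightness."""
    return tuple(clamp((c - 128) * contrast + 128 + brightness) for c in pixel)

original = (100, 150, 200)
print(adjust(original, brightness=20))   # uniformly lighter
print(adjust(original, contrast=1.5))    # values pushed away from mid-gray
```

Because the original pixel values are left untouched, the same image can be run through different settings as many times as desired.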

While the programs can occasionally be somewhat complicated, providing options that allow the user to adjust levels by manipulating the curves for the various primaries used within the selected image, the control is enormous once these features are learned. While the photo processor may not be aware that you intended to crop that train out of the image, the software programs available in the computer will do this with almost no effort on the part of the user – merely a few clicks here and there and the train disappears.

In addition, both programs allow the user to add unique features that weren’t originally a part of the picture taken with the camera, a feature not typically offered with film development. These features include alternate color treatments, the inclusion of words or an easier compilation of multiple pictures into one cohesive image or a collage of images. Other common tools include the ability to paint over sections of an image or to add additional shapes and features to the image. Several tools exist in these programs to help the artist achieve the exact effects desired, and it is the availability of these specific tools that marks the difference between the higher-priced Adobe Photoshop and the lesser-priced Paint Shop Pro.

File Formats

Any discussion of how software affects the quality of the finished image must also include a discussion of the available file formats associated with these images. Depending upon the end-use of the image, it should be saved in one of several different ways. As already mentioned for images intended to be printed, the .tiff file is preferred because of the reduction of compression involved in the saving process. This gives the printer as much information about the image as possible, allowing the clearest possible print with the most accurate colors. This file format is also the preferred format for archival images because it preserves the images without losing any of the data associated with them. The highly popular .jpg format is particularly well-suited for those images that are meant to be used in the digital environment. “JPG uses lossy compression. Lossy means that some image quality is lost when the JPG data is compressed and saved, and this quality can never be recovered” (Fulton, 2005). This is an intentional characteristic of the file format as it was designed to be used to make images as small as possible while still conveying the general scope and idea of the original image. “JPG modifies the image pixel data (color values) to be more convenient for its compression method. A tiny detail that doesn’t compress well (minor color changes) can be ignored (not retained). This allows amazing size reductions on the remainder” (Fulton, 2005).

For this reason, the .jpg format is most suited for use when sending images via email or for use on web pages or other online applications. The .gif format is another highly popular file format. Like .jpg, .gif is designed to be used on the internet and to keep files small. Unlike .jpg, its compression is itself lossless; information is lost instead when the image is reduced to indexed color, which is intended to reduce the amount of time spent cross-referencing color models and encourages accurate color representation across all platforms. The drawback to this is that it is limited to only 256 colors, which prevents the smooth gradations frequently necessary for the accurate portrayal of shape and form desired for many images. Instead, the .gif format is typically only used for digital graphics with large blocks of solid color and little detail.
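
The effect of indexed color on gradations can be seen in a small sketch. It assumes a tiny four-entry palette instead of 256 entries and maps each pixel to the nearest palette color by squared distance; the helper names and sample pixels are illustrative only and do not reflect how any particular program builds its palette.

```python
# Sketch: indexed color, where each pixel stores a palette position
# instead of its own RGB value.
# Assumption: a 4-entry palette stands in for the 256-entry limit.

def nearest_index(pixel, palette):
    """Return the position of the palette color closest to the pixel."""
    return min(
        range(len(palette)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(pixel, palette[i])),
    )

def to_indexed(pixels, palette):
    """Replace every pixel with the index of its nearest palette color."""
    return [nearest_index(pixel, palette) for pixel in pixels]

palette = [(0, 0, 0), (255, 255, 255), (255, 0, 0), (0, 0, 255)]
pixels = [(250, 10, 10), (240, 30, 20), (10, 10, 200), (230, 230, 230)]

indexed = to_indexed(pixels, palette)
restored = [palette[i] for i in indexed]
print(indexed)
print(restored)
```

The two slightly different reds in the input come back as the same palette red, which is the loss of smooth gradation described above.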

Conclusion

Although it cannot be conclusively proven that digital photography has unequivocally improved the art of photography, it has presented some unique opportunities that have never been available before. Ever-improving technology is working to address some of the concerns of professional photographers in the actual capturing of images. This can be seen in the way that manufacturers are adding photosites to the sensors that replace film and limiting the effect of stray electrons caused by extreme heat or prolonged exposures. Otherwise, many of the same principles attached to traditional film photography, such as shutter speed and aperture setting, carry over unchanged to digital cameras. Another of the larger concerns regarding quality in digital photography has to do with the quality of the end product.

Once the problem of lesser detail in the digital camera is solved, the problem of unpredictable and often unmatched branding of computer systems remains, offsetting the color models being used and having interesting effects on how colors perceived on the monitor end up being presented coming out of the printer. Recognizing this, companies heavily involved in the digital photography industry have been working diligently to develop color models that can be used across platforms and devices to provide the utmost in color matching from camera to final product, whether that final product is some sort of print object or an image to be used in the digital environment. Some of the more sophisticated image editing software programs, such as Adobe Photoshop, are even being designed embedded with their own color modeling systems as well as ICC standardized color systems in preparation for the day when all of the devices, brands, and platforms can converse. These programs sometimes also offer special wizard tools to carefully help end-users walk through the often confusing and frightening process of color management among the various devices being used. With proper use of the tools available and appropriate hardware and materials as well as the frequent improvements in technology, it is conceivable that digital photography will soon be able to match film photography in overall quality and adaptability.

References

Bear, Jacci Howard. (2006). “The Meaning of Color.” About Desktop Publishing. Web.

Blumberg, Roger. (2005). “Color Mixing and Goethe’s Triangle.” Computer Science. Providence, RI: Brown University.

“Color Wheel, The.” (2006). Design Center. Shaw Industries. Web.

Fulton, Wayne. (2005). “Image File Formats: Which to Use?” Scanning Tips. Web.

Gabriel-Petit, Pabini. (2006). “Color Theory for Digital Displays: Part I.” UX Matters. Web.

Hedgecoe, John. (1991). John Hedgecoe’s Complete Guide to Photography. New York: Sterling Publishing.

Hogan, Thom. (2004). How Digital Cameras Work. Web.

Kassa, Chris. (2006). “Understanding Color.” RGB World. Web.

Morton, Jill. (2006). “Color Matters: Color & Vision, How the Eye Sees Color.” Creative Tools for Digital Camera. AKVIS. Web.

Pinkerton, Kent. (2006). “Printer Calibration.” Ezine Articles. Web.

Poynton, Charles. (2000). “Brightness and Contrast Controls.” Web.

Sanford. (2005). “The Color Wheel.” A Lifetime of Color. Web.

Turner, Kelly. (2006). “PMA Notebook: Color for the Rest of Us.” Macworld. Web.

Vogel, Jakob. (2005). “Runge’s Color Sphere.” Web.

Wedding, George. (2001). “The Darkroom Makes a Comeback.” Creative Pro. Web.

Wills, Keith. (2002). “Lens.” Photography Lab. Web.

Zelanski, Paul & Fisher, Mary Pat. (2003). Color. 4th Ed. New Jersey: Prentice Hall.